(。・∀・)ノ゙ Hey there! Yeah! 🫡 Welcome aboard! 🎉

1️⃣If you're not yet familiar with blockchain, bitcoin, and IC, no worries. I'm here to give you the lowdown on the history of crypto!

2️⃣If you've heard of IC but haven't gotten the full scoop yet, you've come to the right place to learn more.

3️⃣Wanna hear Dominic's story? He's right here!

—〦———〦———〦———〦—————〦———→ ∞ 💥 Blockchain Singularity

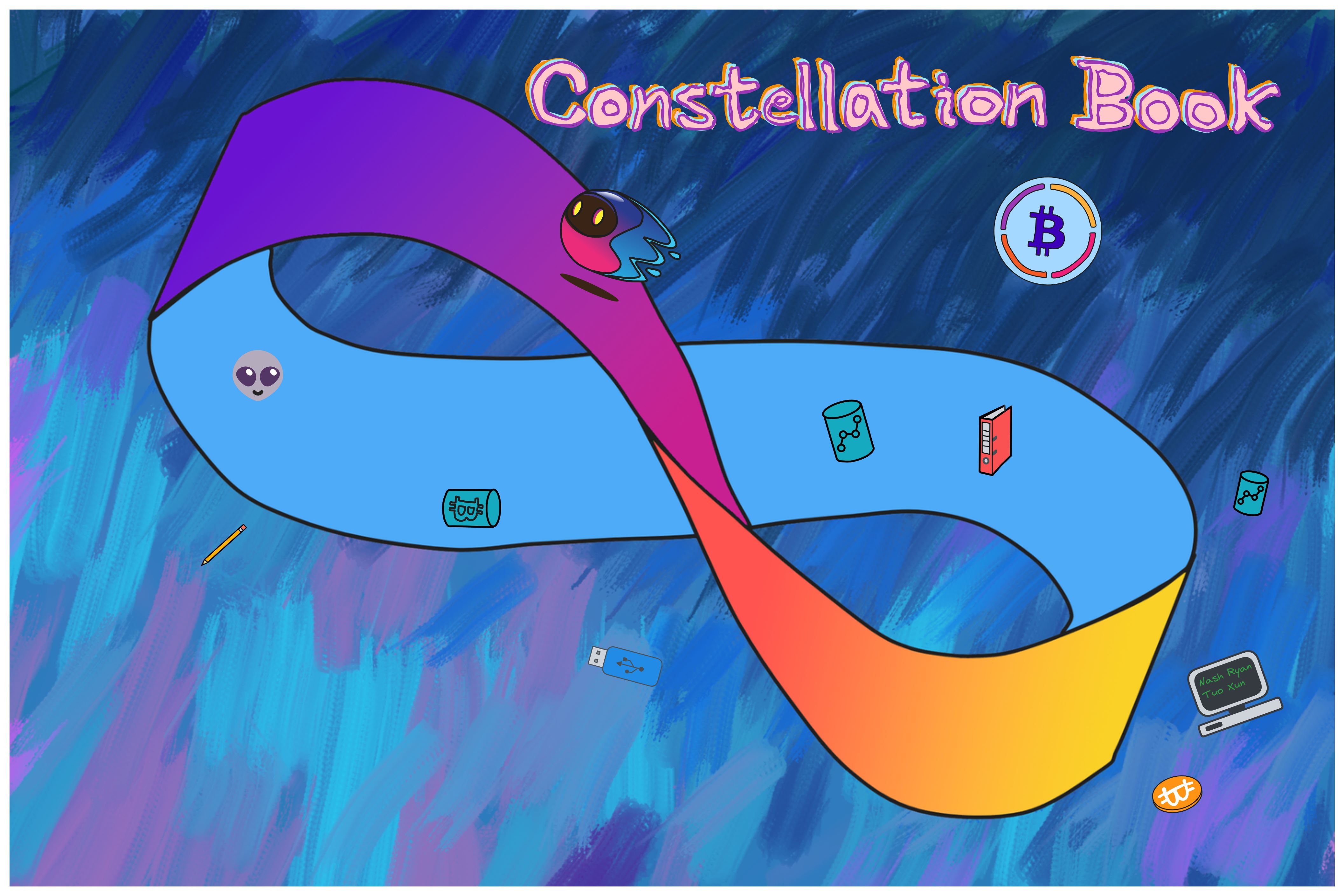

Sometimes, I'm really awestruck to be living in such a miraculous era. Just a few years ago, we were mocking Bitcoin, and now decentralized finance, Ethereum and crypto have become deeply embedded in our world. And in this rapidly evolving new world, a bunch of new technologies are adding color to our lives in their own unique ways. One of them is the Internet Computer, a next-gen general-purpose blockchain compute platform.

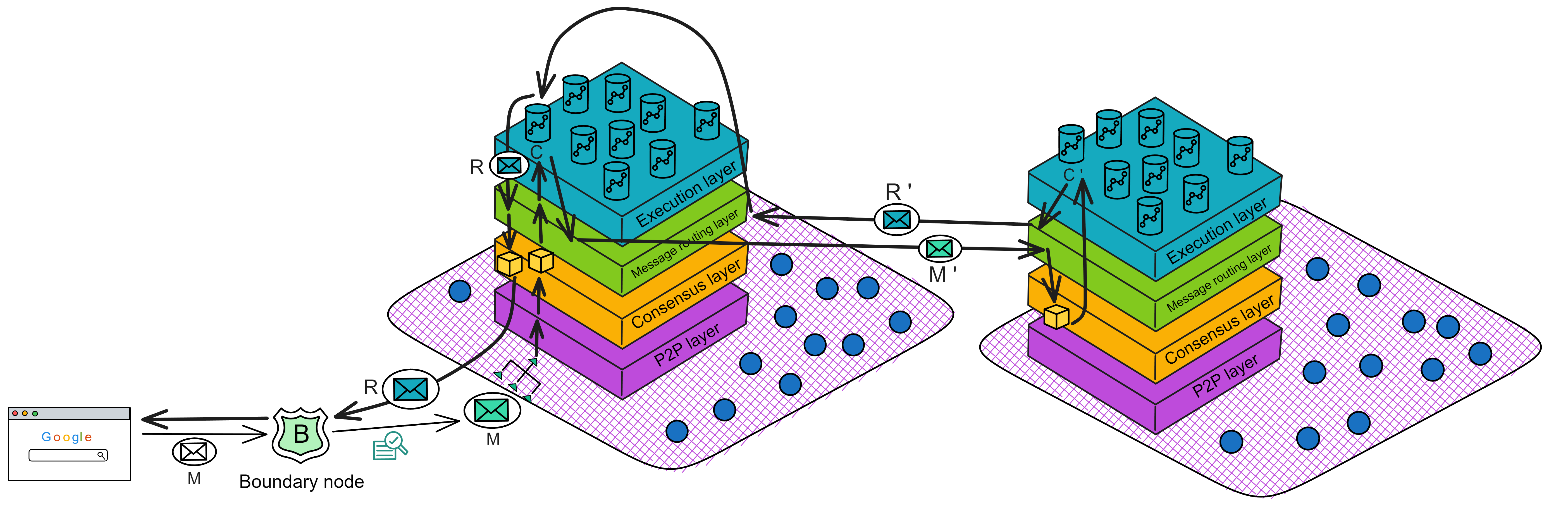

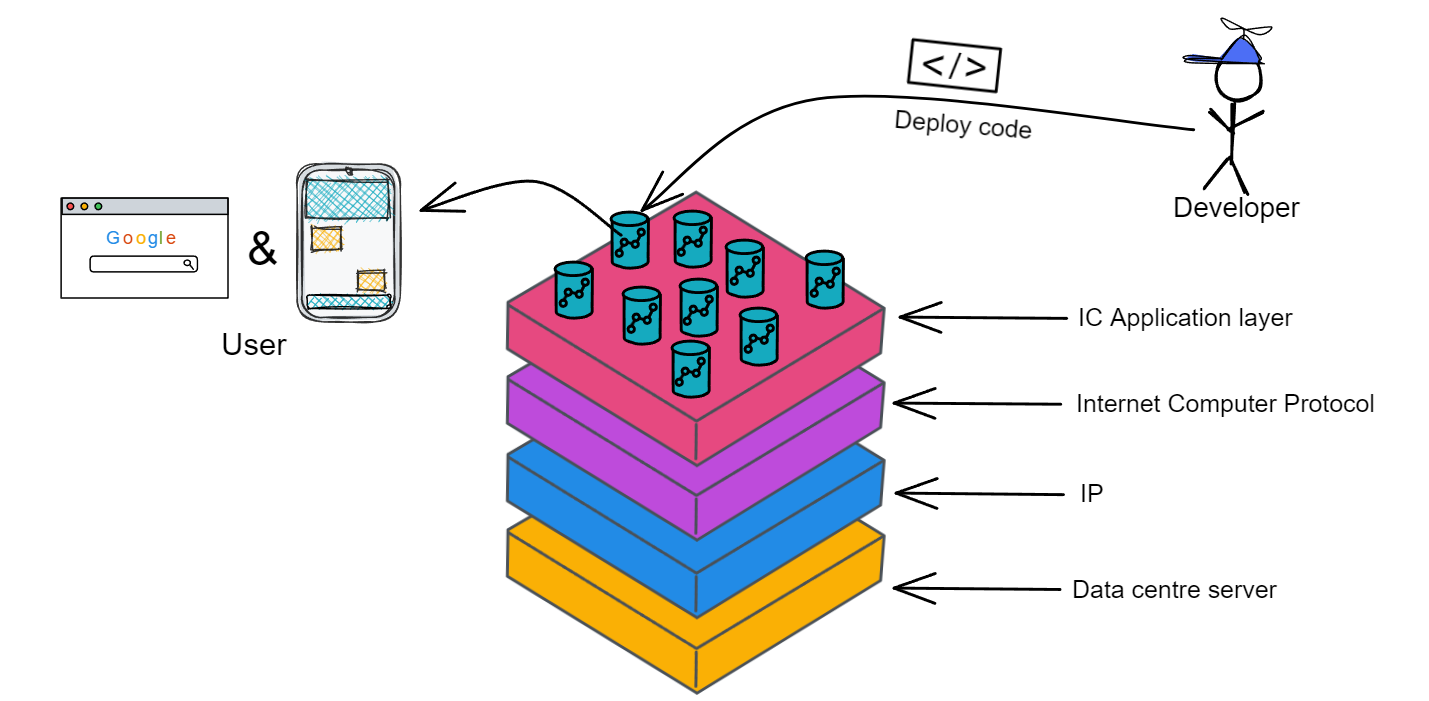

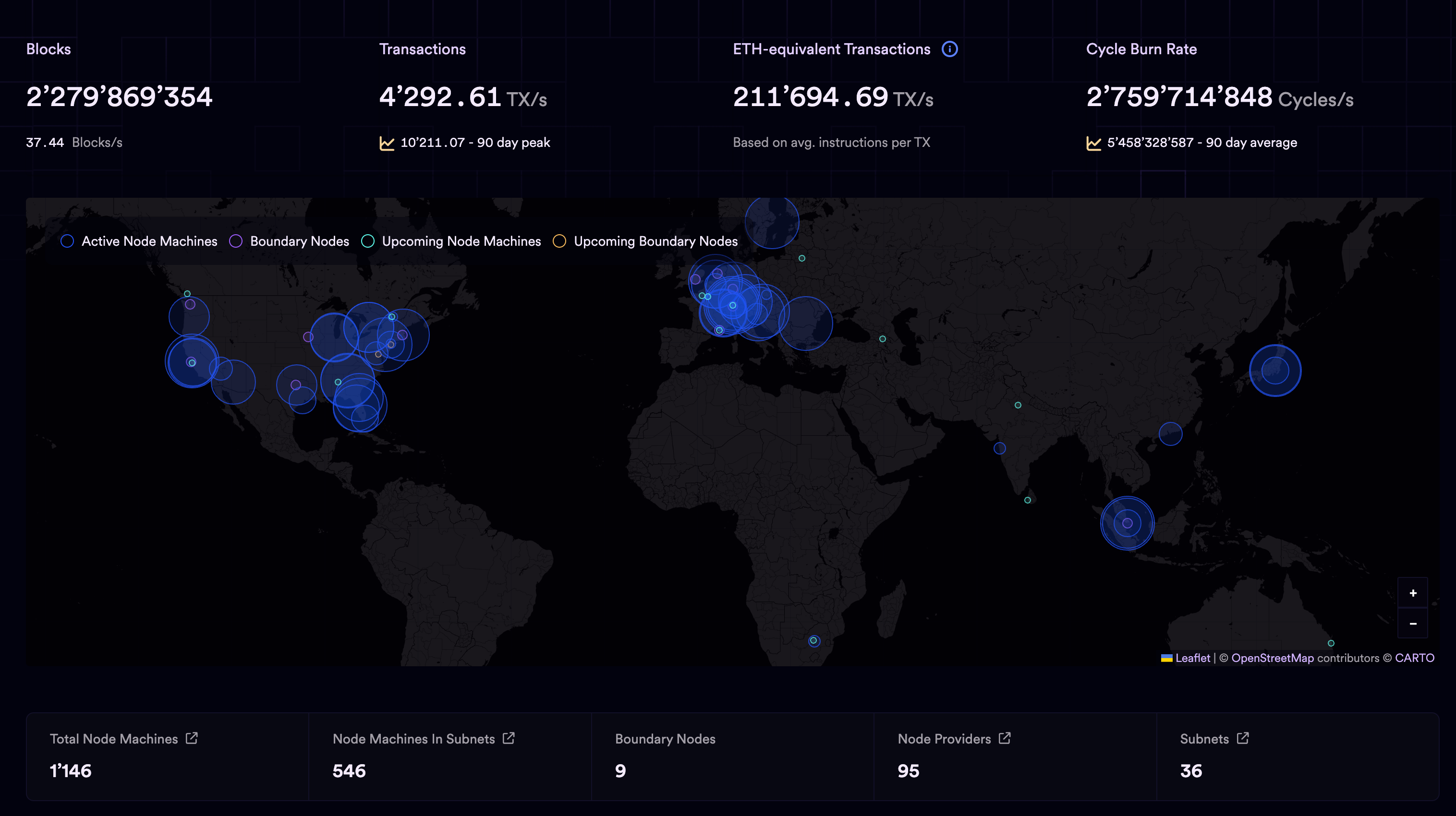

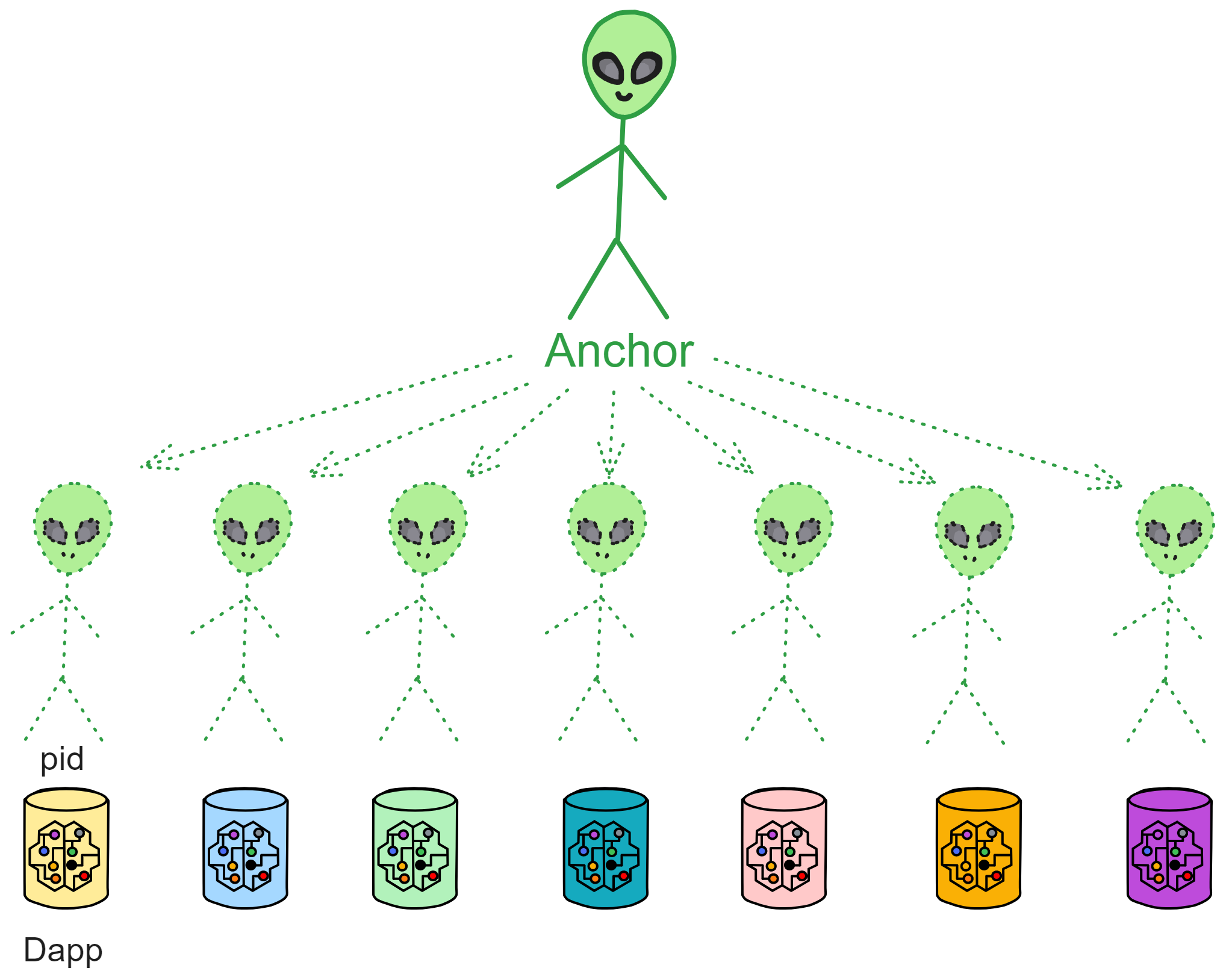

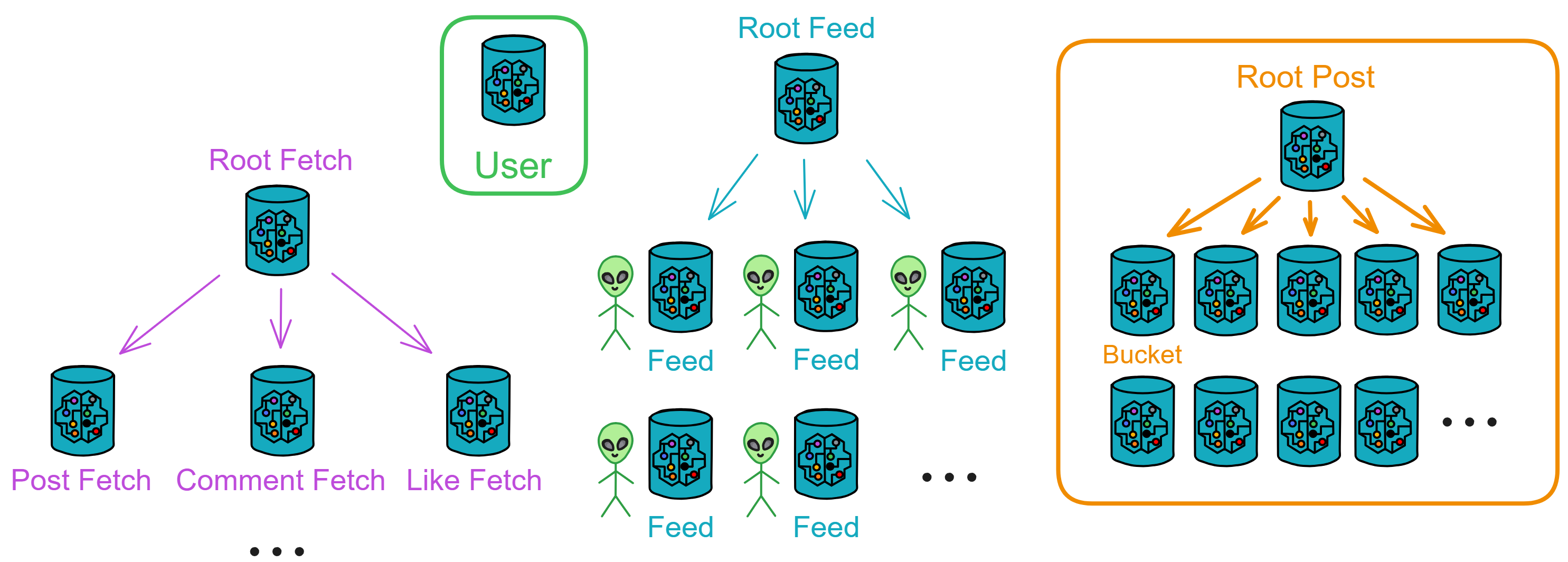

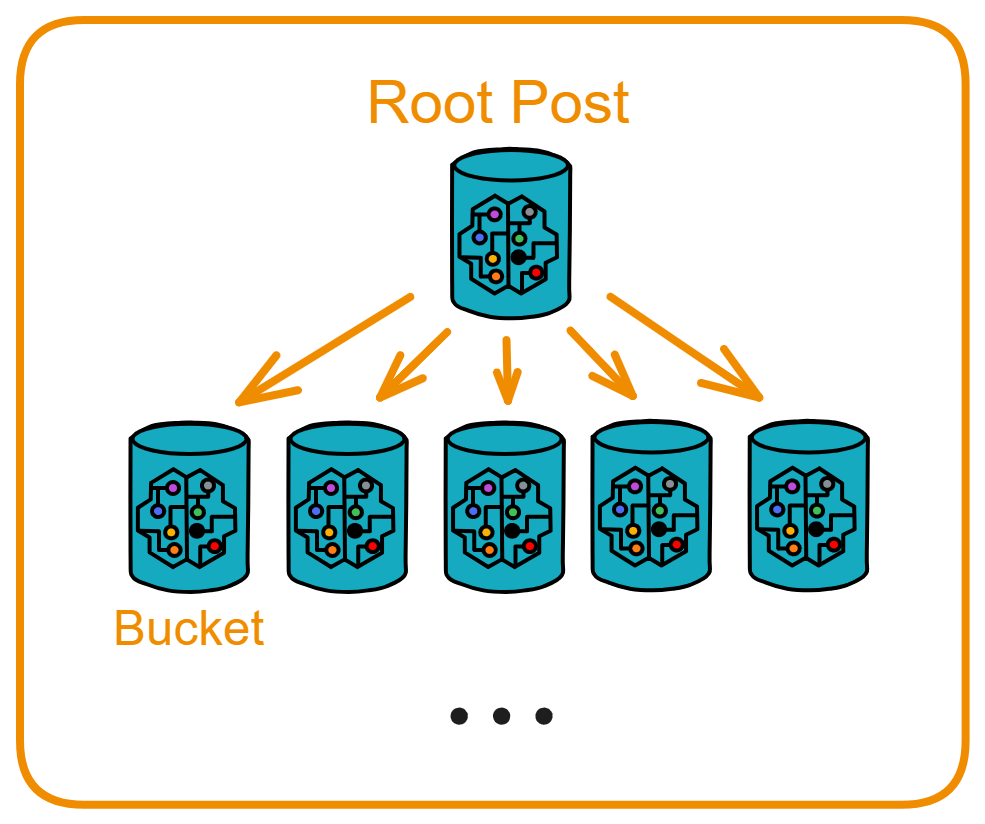

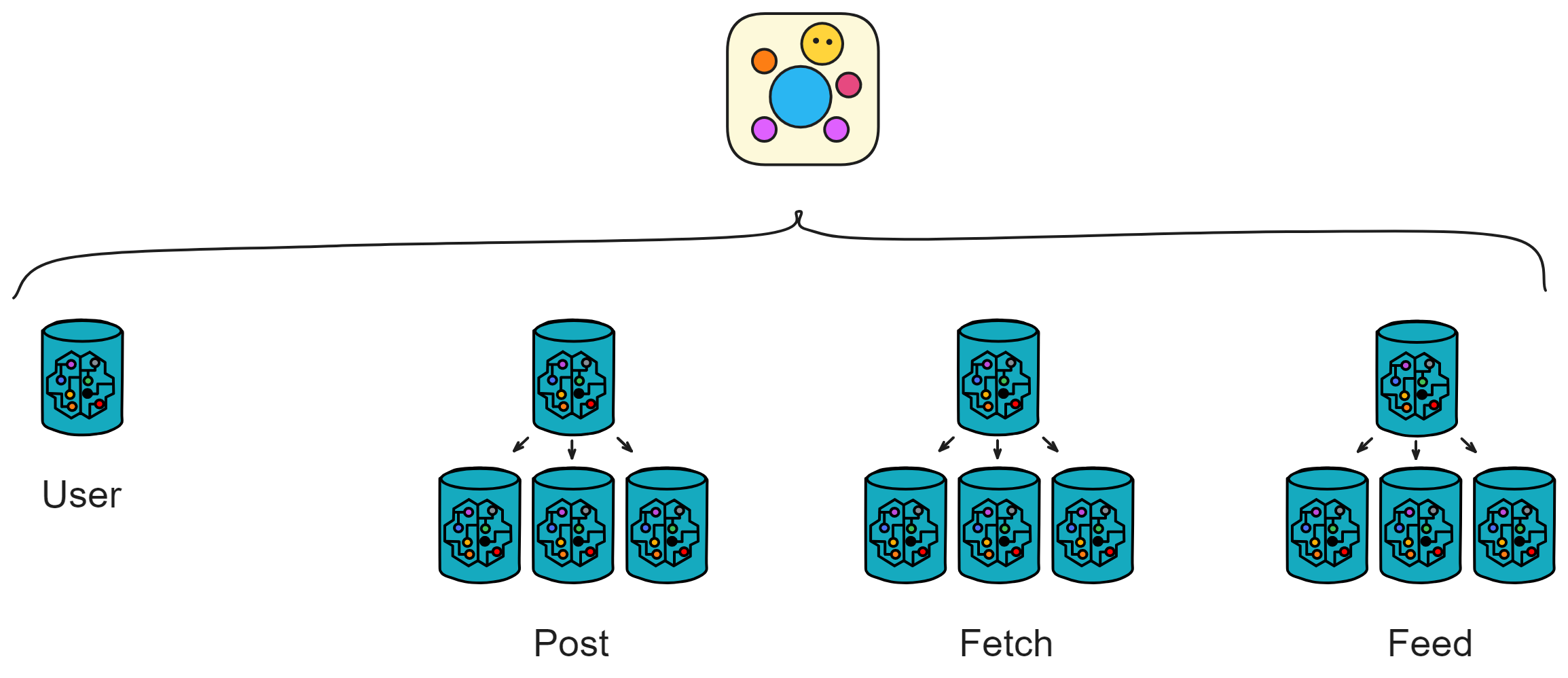

It originated from Dominic's idea in 2015: a horizontally scalable, decentralized World Computer. The prototype was completed in 2018, and after years of deep optimization of the underlying protocols, the network launched in 2021. It aims to become a decentralized cloud services platform: the underlying infrastructure forms a decentralized cloud, while upper-layer applications achieve decentralization through DAO-controlled permissions, so that dapps can be deployed 100% on-chain without relying on any centralized services.

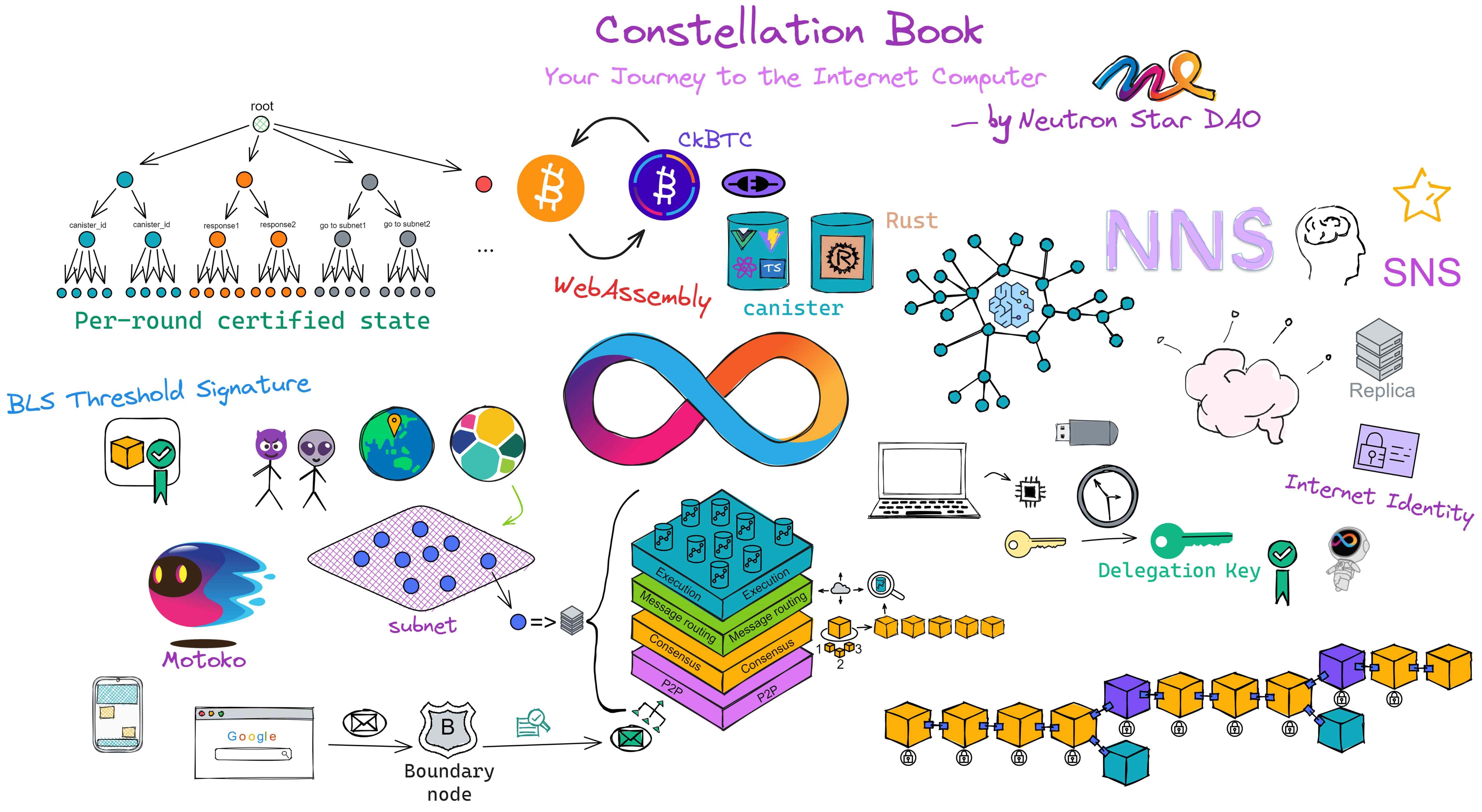

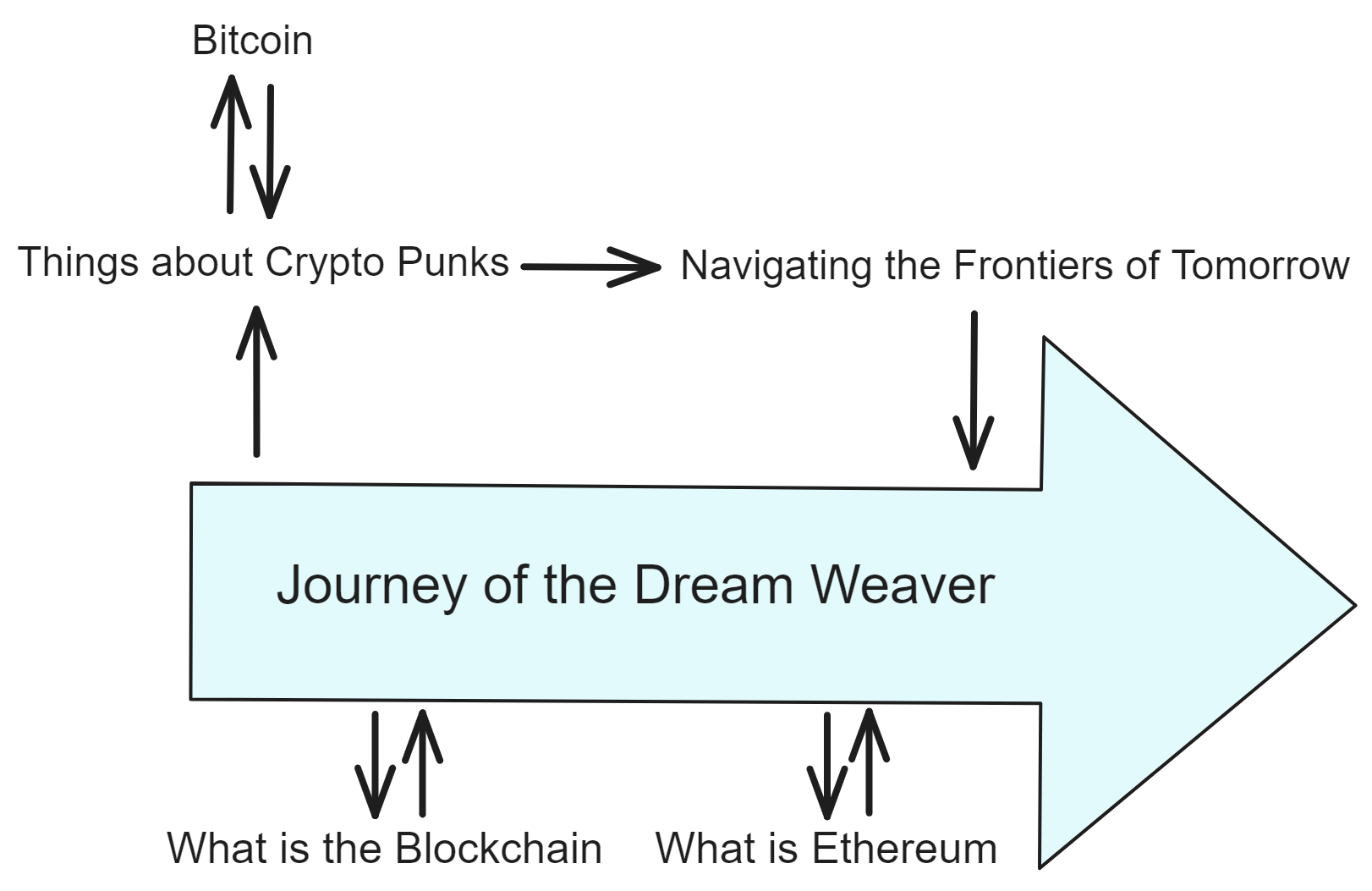

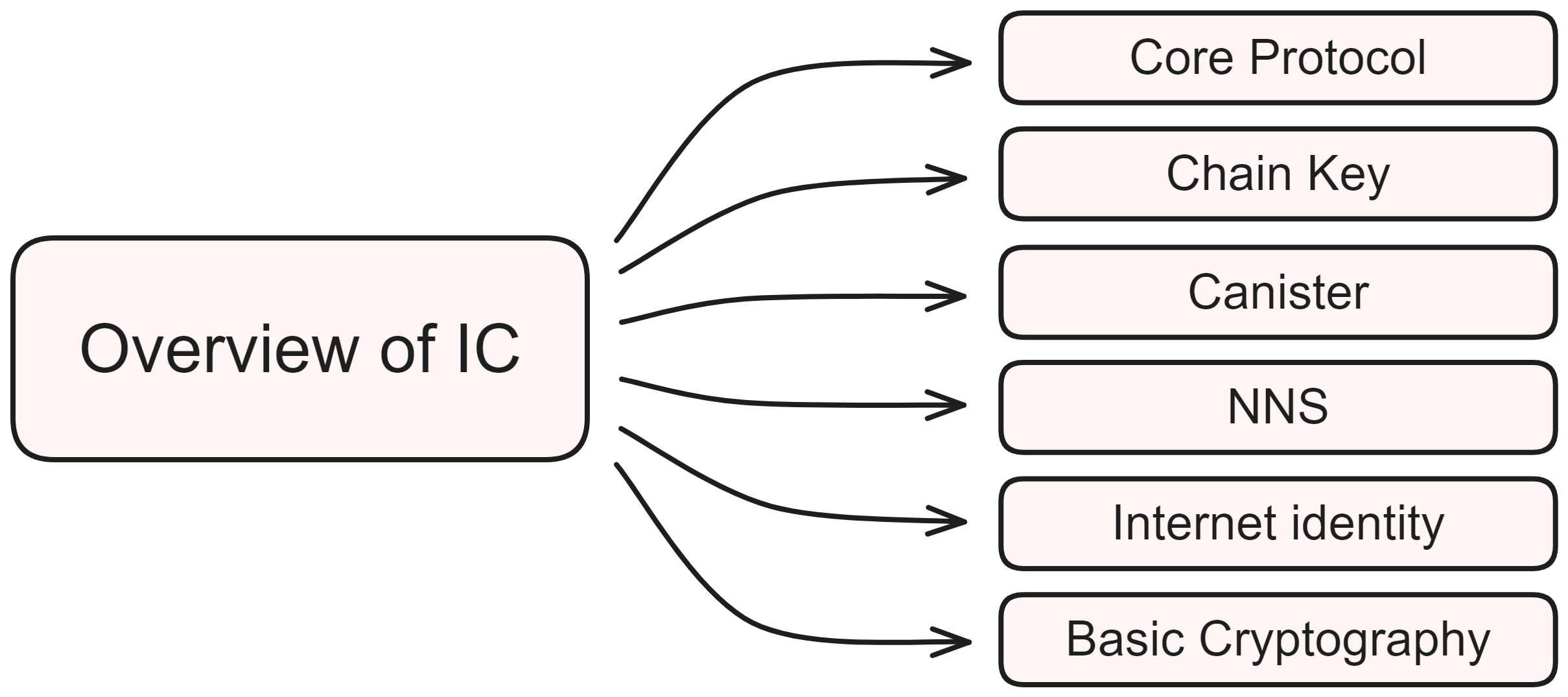

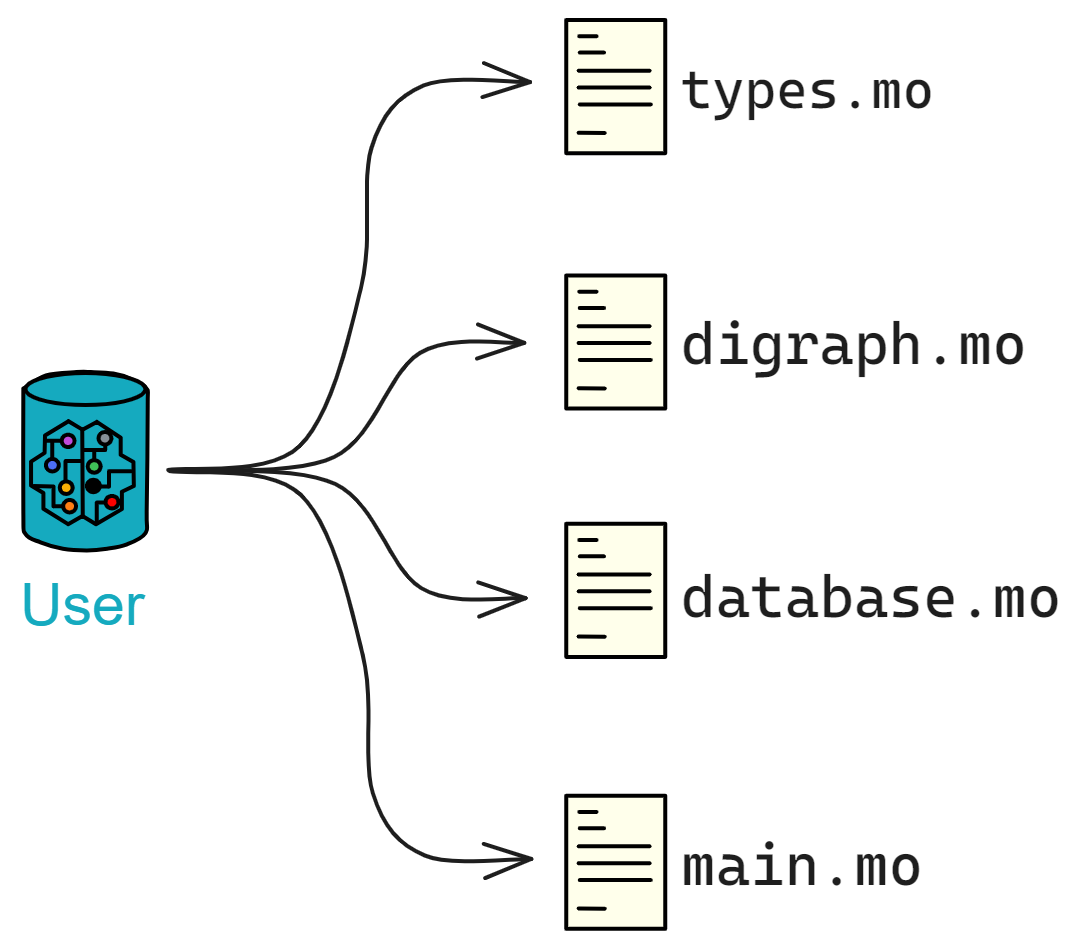

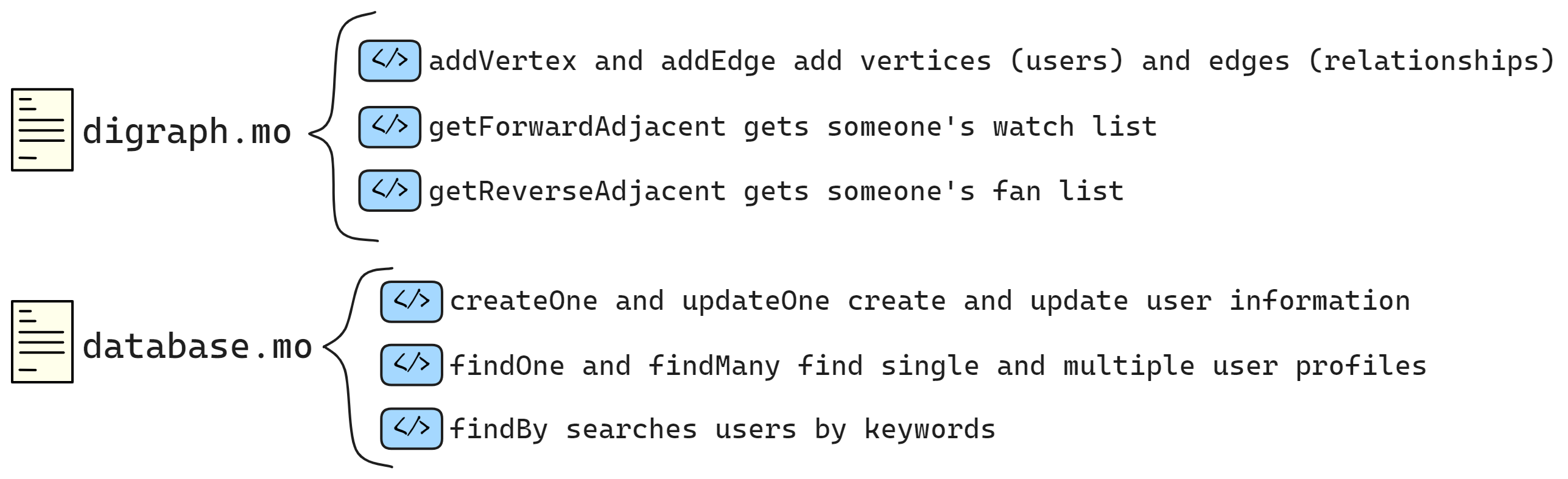

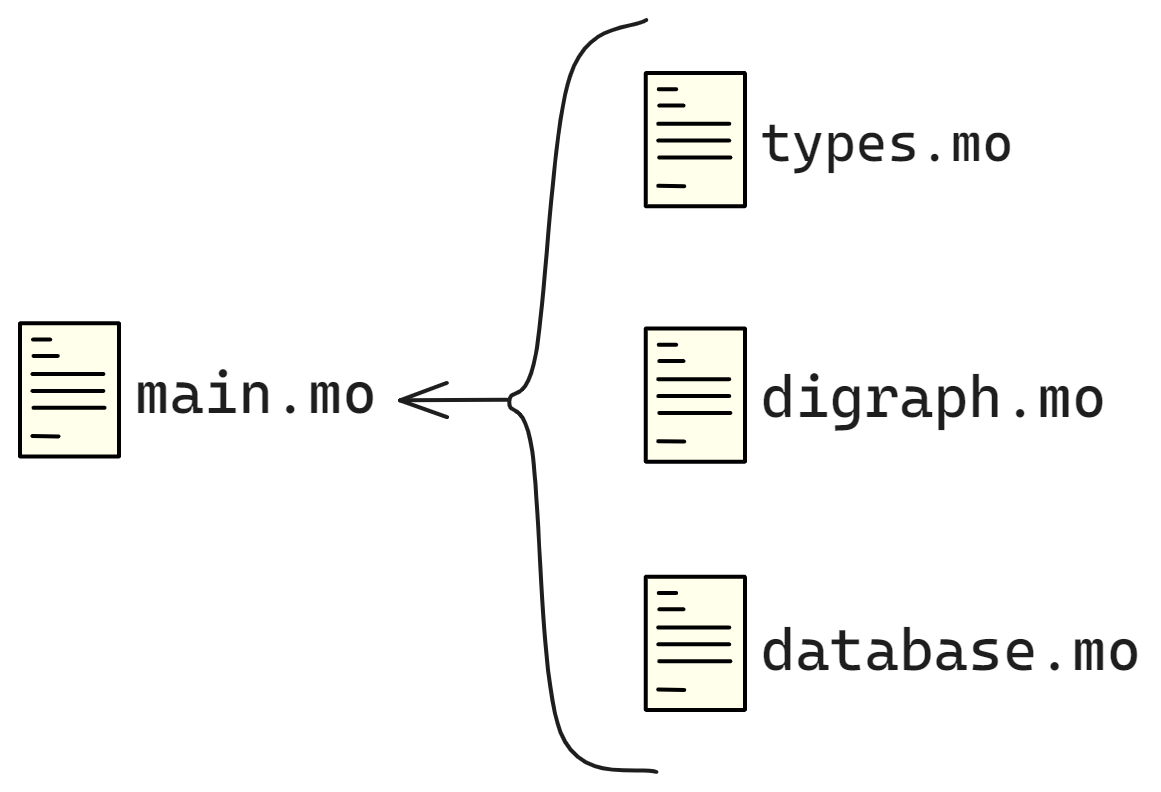

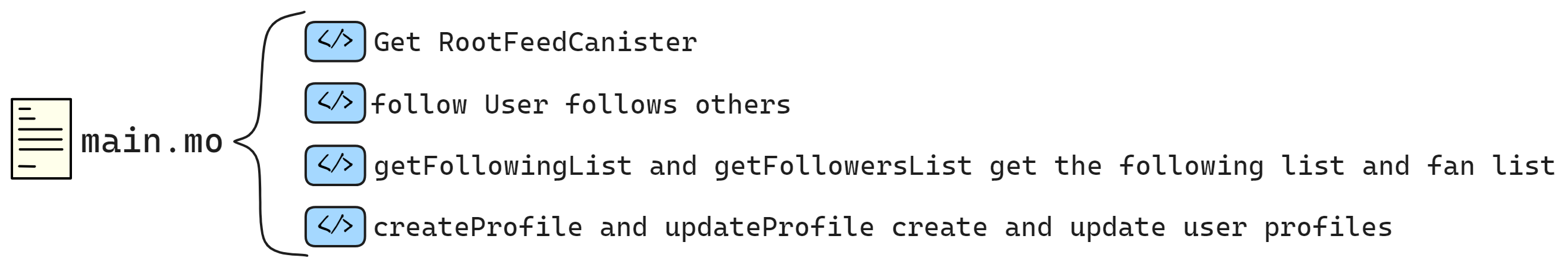

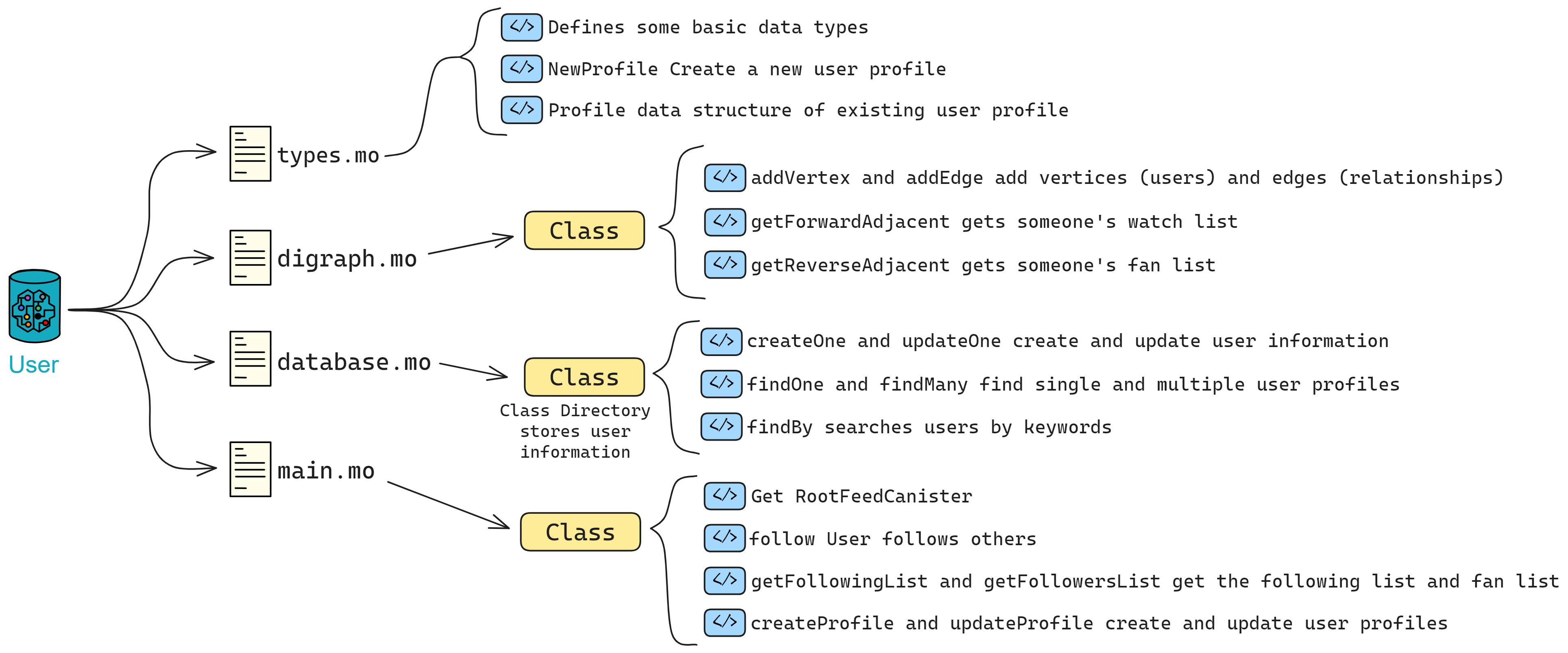

The structure of the Constellation Book:

- The first half is vivid and interesting, and the second half is concise
- The first half talks about IC principles, and the second half discusses development in practice

Quick IC Overview in Videos

Why I wrote this book

Early on, after learning about IC, I collected and organized materials from the IC Whitepaper, Medium, ICPL forums, and IC Developer Forums. After talking to a friend about the IC architecture, I told her I was making notes on the IC resources and would share them when they were ready. I didn't expect it to take a year. After a long bout of procrastination, and with what I learned along the way, I finally put together the first version of the notes. After a period of further study, I decided it would be better to share them. Making them an open-source book helps everyone learn about IC and contributes to the IC developer community.

Learn blockchain with zero barriers to entry and level the learning field for IC.👨💻👩💻

Why open source?

I really like the Rust open-source community. Its many open-source books and high-quality projects have helped me a lot and taught me a great deal. Bitcoin, Ethereum, and the projects around them also have a strong open-source culture, and I hope that more and more open-source projects will emerge in the IC community so that everyone can learn from each other.

In addition, the IC content is updated and iterated quickly. With open-source references, everyone can contribute, keeping the content fresh and up-to-date.

Join the developer discussion group for this book, correct errors, make modifications, suggest improvements, and contribute to the open-source book together!

Journey of the Dream Weaver

In every geek's heart lies a dream of decentralization.

This is a story about Dominic Williams.

JAHEBIL😎

He liked to call himself "JAHEBIL", which stands for "Just Another Hacker Entrepreneur Based in London".

He wrote code, started businesses, and worked as a "dream maker" in London.

He was brave and optimistic. He lived a secluded, repetitive, and focused life, uninterested in socializing and concerned only with his company's brand. Even working 18 hours a day in the UK's unfriendly entrepreneurial environment, he could still hum the happiest tunes.

Compared to Silicon Valley, the UK's entrepreneurial environment was like a living hell. The success of numerous companies in Silicon Valley led to even greater investments, which attracted top entrepreneurs from around the world to the area, either to reach the pinnacle of success or to fail. Unlike in Silicon Valley with its deep talent pool, in the UK every company that Dominic founded could produce only limited returns, so he would start another company, getting caught in a vicious cycle of working hard, making dreams, maintaining dreams, and making new dreams... He had to sharpen his technical skills while ensuring the company remained profitable.

Tired of a cyclical life that was only draining his enthusiasm, Dominic felt a seed of hope take root in his heart. In 2010, as a dream maker, he decided to take a chance!

Fight My Monster was a massively multiplayer online game and a children's social network. He planned to connect children from all over the world to play this game online. Players have their own monsters and use different skills to attack each other in a turn-based battle. At that time, on the other side of the earth, China was also going crazy for "Roco Kingdom" developed by Tencent.

After comparing HBase, Cassandra, and other databases, Dominic chose the early Cassandra beta, the first horizontally scalable distributed database. Dominic built various tools for Cassandra, including the first system that ran atomic transactions on scalable eventually consistent storage. They were the first team in the world to attempt to use a complex Cassandra system in a production environment.

Dominic wanted to connect millions of users worldwide through a distributed system, which was a significant innovation at the time. After several test runs, the game was officially launched on New Year's Day in 2011, and in just two weeks, it gained 30,000 users, skyrocketing to 300,000 users in just a few months.

The team achieved remarkable success in scaling up their business despite having a meager budget. However, they had overlooked one crucial factor - the development of a large-scale online game required an army of specialists, including Flash developers, database administrators, network engineers, payment system experts, operations and maintenance personnel, complexity analysts, cartoon artists, sound engineers, music composers, animation experts, advertising gurus, and more.

This massive expenditure surpassed any budget they had previously allocated to their entrepreneurial projects. In due course, Dominic and his friends exhausted their investment and were forced to seek additional funding. Working day and night had produced an almost perfect growth chart, "so raising funds shouldn't be too difficult".

Dominic introduced the project to investors, saying, "Fight My Monster is growing rapidly and will soon exceed one million users. We believe that engineers live in an exciting era, and the Internet infrastructure is already mature. Many things can suddenly be achieved in new ways. This company was initially self-sufficient on a very limited budget. You may have heard that Fight My Monster is expanding, and now many excellent engineers have the opportunity to join us".

"Let me explain our architecture plan and why we did it this way. As you can see, it is not a traditional architecture. We chose a simple but scalable three-tier architecture, which we host in the cloud. I hope this system works..." Dominic continued passionately.

"Since you already have so many users, maybe you should try to get more of them to pay. This will not only prove your ability to make money but also get our investment." The other party frowned, clearly reluctant to invest. Faced with such crazy user growth, London investors even suspected Dominic of fabricating data.

Now, Dominic's heart was shattered like a biscuit. He had underestimated the difficulty of financing.

Soon, the pieces turned to crumbs. At this point, competitors had already secured funding from other investment firms and prevented other investment firms from investing in Fight My Monster.

Was it because they weren't working hard enough?

Since Cassandra was also in early development, at the end of 2011, due to bugs in Cassandra beta code, Fight My Monster's user data was almost lost. It took several days and nights of work from Cassandra senior engineers and Dominic's team to save the data, ultimately resolving this horrific incident.

Dominic was in a constant flurry of activity.

He raced between his home and the company like a wound-up toy car: just after fixing a bug, he was off to meet with investors without even testing it first; he barely had time to eat, his head buried in meetings with engineers to discuss system adjustments; as soon as he left the company, he was off to the supermarket to buy Christmas gifts for his wife...

The team leaned heavily on him in every respect. His workload would have been insanely heavy even in a place like Silicon Valley, where securing investment is a piece of cake. Back then, Dominic was putting in 12-18 hours a day, a work rhythm that's rarer than a unicorn in today's startup scene. Balancing business management, system oversight, and coding, he still had to carve out time for his personal life. Before you knew it, Dominic's wife had also acclimated to this lifestyle: during the day, she directed and planned game scenarios, optimized gameplay, and devised project workflows; come evening, she seamlessly transitioned into cooking and tidying up the house, perfectly in sync with Dominic. It's a juggling act that makes Cirque du Soleil look like child's play.

Dominic's photos.

Dominic became even more hardworking after that. Fortunately, he happened to meet a company willing to invest in him in Silicon Valley. Finally, an investor was moved by this dreamer in front of them. Within a few weeks of raising funds, Fight My Monster's user count quickly reached 1 million. A few months later, Dominic relocated the company to San Mateo (a small town near San Francisco).

He went downstairs to grab a coffee, and when he got back, his notepad was chock-full of common ConcurrentHashMap issues and fixes. He listened to geeks dish on startup team-building tips and networked with Silicon Valley venture capitalists.

After a year of development, Dominic was very excited in 2012:

"Fight My Monster appeared on TechCrunch today, worth a big cheer, thank you!!! We are working hard and hope we can achieve our dreams."

"If you haven't played Fight My Monster yet, I suggest you give it a try - there really is nothing better online. We are incubating in the UK, and the best time to experience the site is weekdays (after school) from 4 pm to 8 pm or weekends during the day."

However, setbacks soon followed. After raising funds, the company's newly hired financial executive disagreed with the original team on strategy, and the disagreement escalated into a fatal decision-making mistake. Although the user base continued to grow, Fight My Monster was facing insurmountable obstacles.

From a financial return perspective, Fight My Monster was a failure, with the user base only expanding to over 3 million in 2013.

However, this experience was very valuable, and the most precious part was finding a group of capable colleagues who were also obsessed with the distributed system that Dominic loved. Dominic admired Fight My Monster's designer Jon Ball, who was always able to create a bunch of beautiful models using the team's design system, and later set the record for the highest ad viewing rate. There was also Aaron Morton, the Cassandra engineer who said "We work together, believe each other", who worked with Dominic to build the "engine" behind the game - a distributed database.

In hindsight, Dominic's Flash game was losing its audience as people gradually shifted towards mobile games and tablets. In 2010, Steve Jobs announced that Apple would not support Flash on its devices due to its impact on device performance. Additionally, due to frequent security vulnerabilities, the BBC published an article titled "How much longer can Flash survive?" Adobe later announced that it was abandoning the Flash project and switching to Animate for professional animation.

Reflecting on his experience, Dominic said, "We could have succeeded but needed to move faster: if I had my time again, I would have relocated to The Valley very soon after the company started growing to raise money faster and gain access to a bigger pool of experienced gaming executives".

Engineers turned entrepreneurs, entrepreneurs turned engineers

Although the gaming industry was withering away, in Silicon Valley, a strange yet formidable force captured Dominic's attention, causing ripples to emerge in his mind's stagnant pool of inspiration. These ripples quickly transformed into rolling waves, propelling him towards a new frontier.

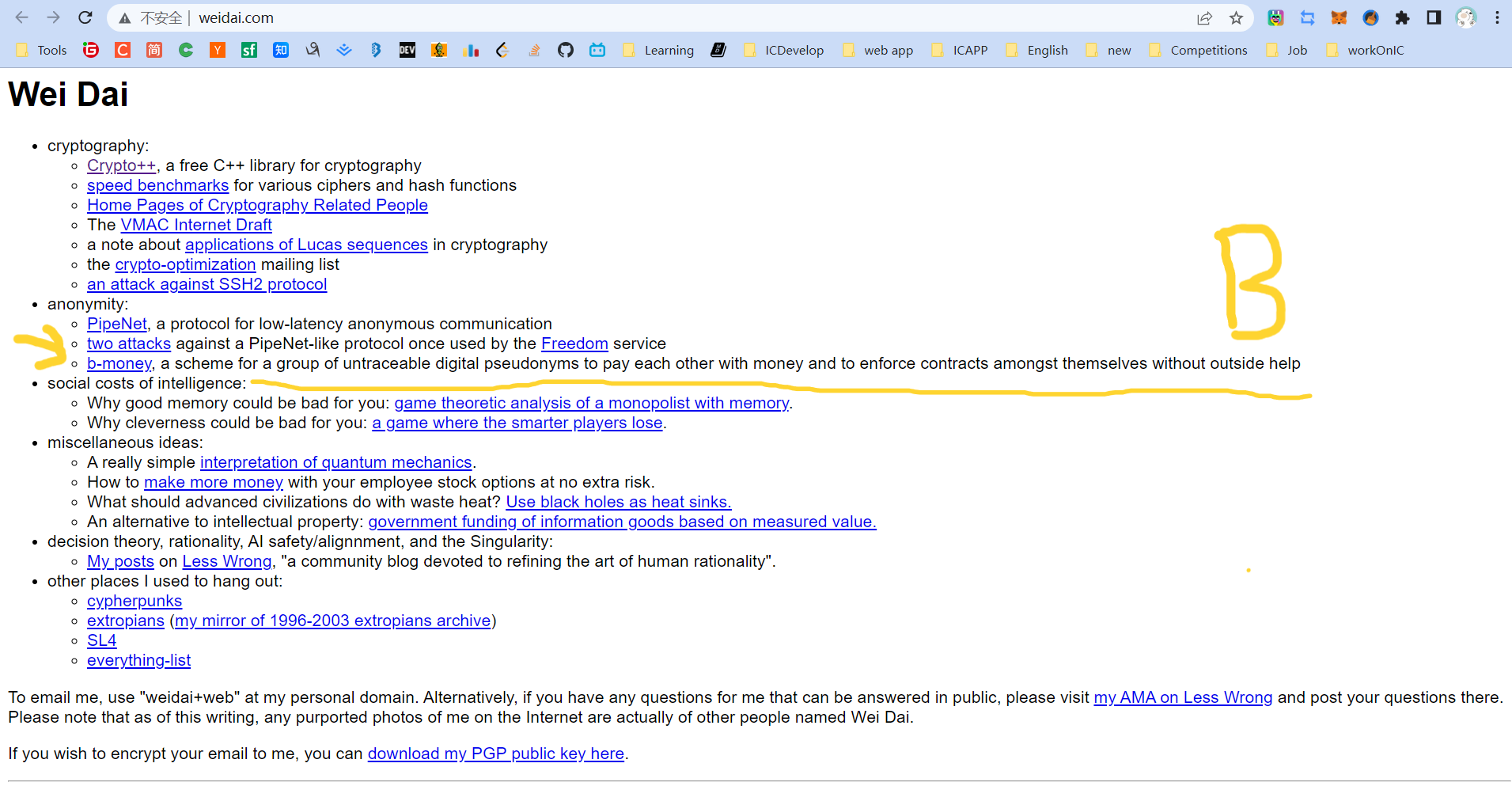

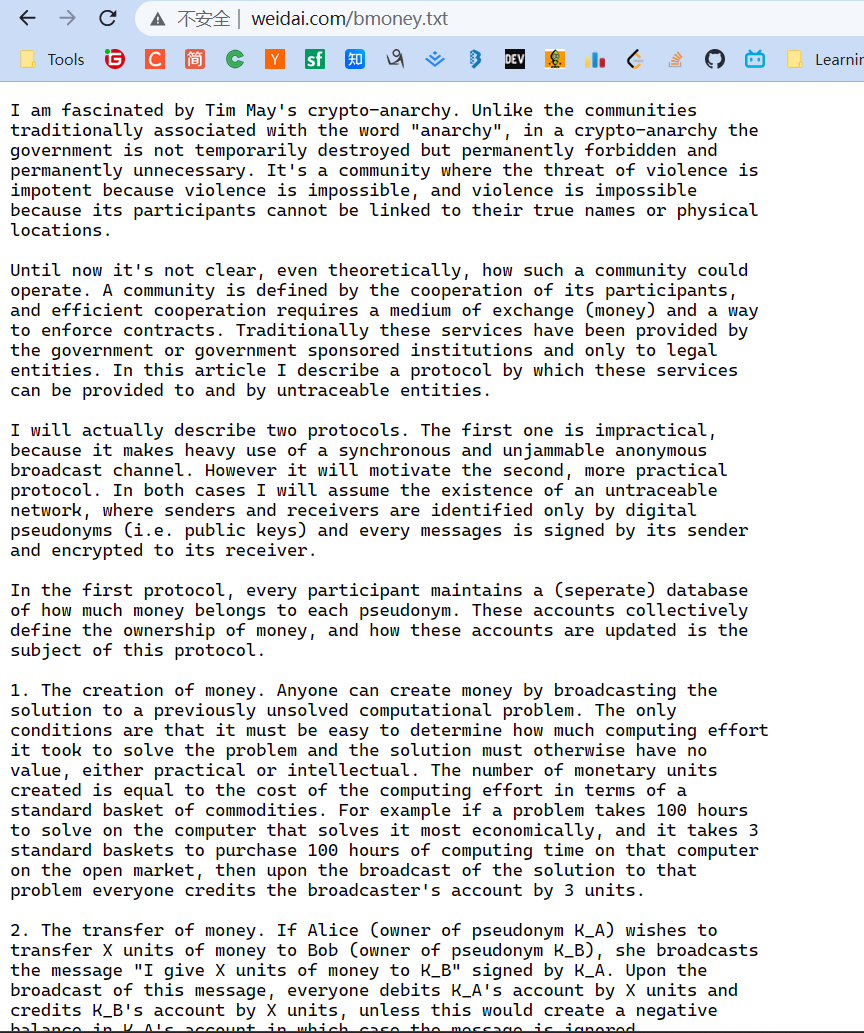

Rewinding back to 1998, Dominic was developing an online storage system for a London-based startup and utilizing Wei Dai's Crypto++ library extensively. While browsing Wei Dai's website, Dominic stumbled upon an article about "b-money", one of the early precursors to Bitcoin.

Little did Dominic know that this article from 1998 would ignite the spark for Bitcoin and connect the timeline of his cryptography career for the next decade.

After leaving Fight My Monster in 2013, Dominic became obsessed with Bitcoin, a long-dormant interest that had been sparked by the "b-money" article he had stumbled upon back in 1998.

Wei Dai wrote in b-money:

"I am fascinated by Tim May's crypto-anarchy. Unlike the typical understanding of 'anarchy', in a crypto-anarchy the government is not temporarily destroyed but permanently forbidden and unnecessary. It's a community where the threat of violence is impotent because violence is impossible, and violence is impossible because its participants cannot be linked to their true names or physical locations through cryptography".

Click here to see more about Crypto Punks.

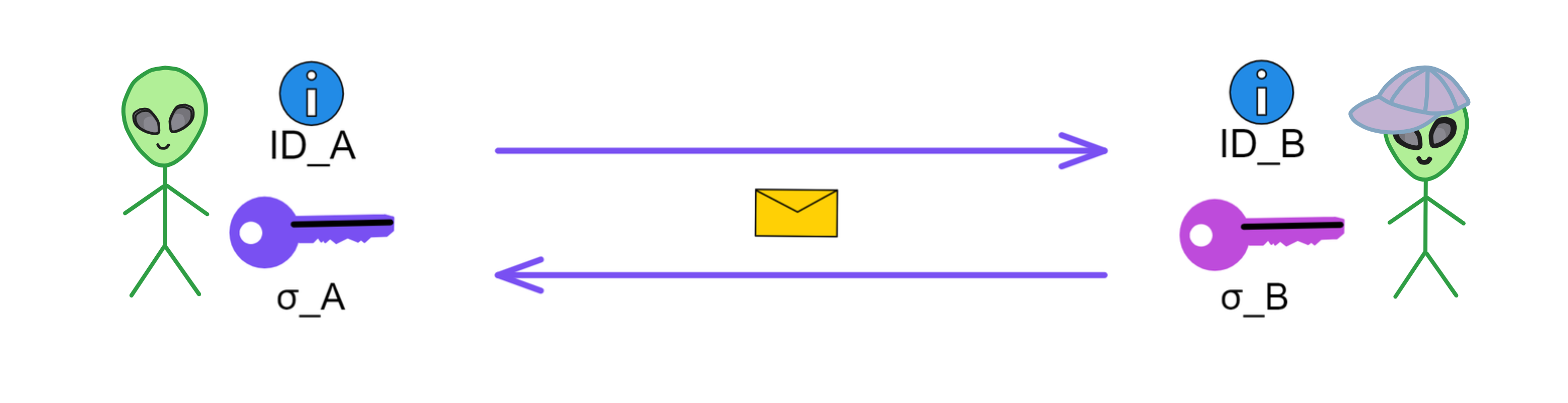

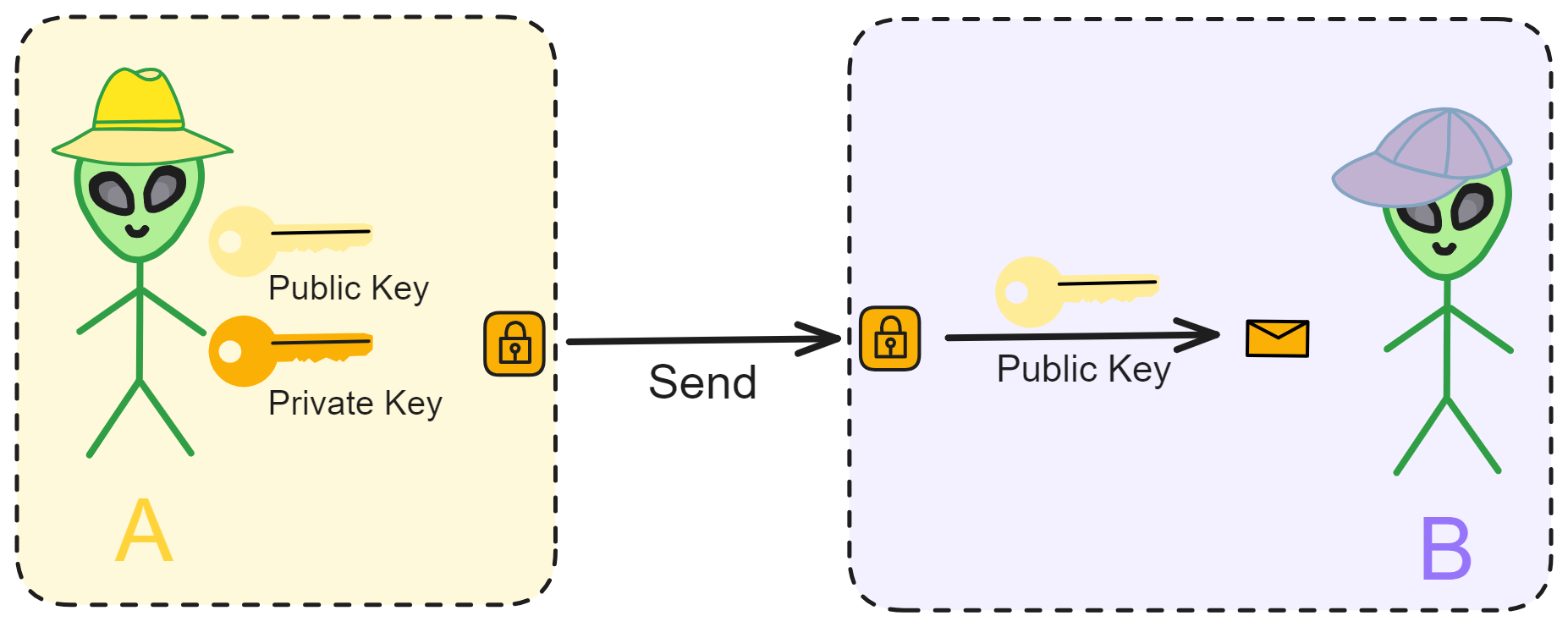

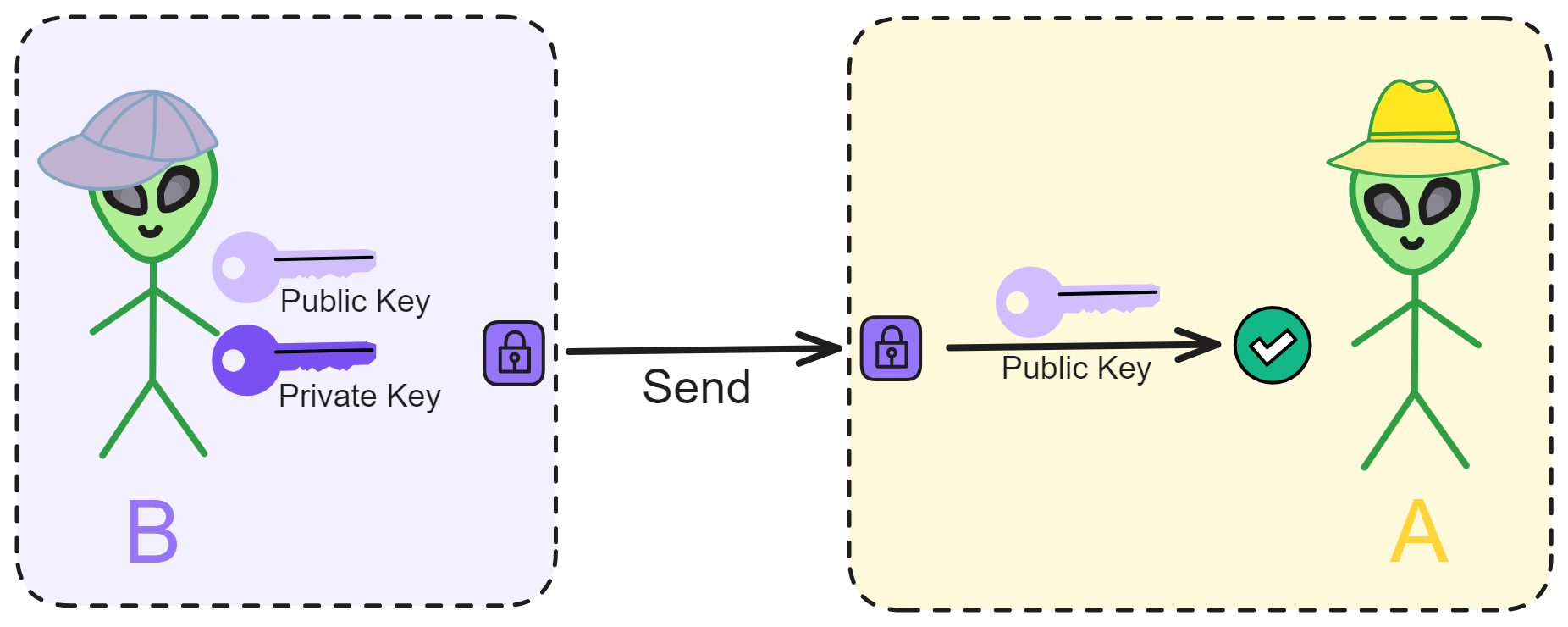

B-money outlined a protocol for providing currency exchange and contract execution services in an anonymous community. Wei Dai first introduced a less practical protocol as a prelude because it required a synchronous, interference-free anonymous broadcast channel. He then proposed a practical protocol. In all schemes, Wei Dai assumed the existence of an untraceable network where senders and receivers could only be identified by digital pseudonyms (i.e., public keys), and each message was signed by the sender and encrypted for the receiver.

Wei Dai described in detail how to create currency, how to send it, how to prevent double-spending, how to broadcast transaction information, and how to achieve consensus among servers.

We cannot determine the relationship between Wei Dai's b-money and Bitcoin or whether he was inspired by "The Sovereign Individual" to design a scheme. However, Wei Dai's website shows that it was last updated on January 10, 2021.

Dominic said, "I love algorithms and distributed computing, and I won many awards in this field during university. More importantly, I had never encountered a technical field that combined the thinking of finance, law, politics, economics, and philosophy, while also having the potential to drive significant change in the world. For me, this emerging field was a dream come true. I made a bigger life decision to re-enter this field with my career".

In 2013, Dominic started trading cryptocurrencies full-time, while picking up some basics about consensus algorithms on the side 🤣😉. He was interested in designing faster consensus mechanisms to work in conjunction with proof-of-stake (PoS) architectures.

Here is Dominic's "Bitcoin ATM kiss" in 2014.

On February 7th, Mt. Gox, the world's largest Bitcoin exchange, halted withdrawals, and by the end of the month it had filed for bankruptcy. Dominic took to Twitter to express his distress at the beloved Bitcoin's plummeting value.

Bitcoin crashed to 666 USD. (It's currently priced at 23,000 USD, down from its peak of 69,000 USD.)

He buckled down, diving deep into traditional Byzantine fault tolerance and drawing on his previous experience building online games. Dominic conceived a decentralized network that could scale horizontally like Cassandra, allowing more and more servers to join while maintaining high performance. In just a few days, Dominic published a paper describing a scalable cryptocurrency called Pebble. The paper, which circulated quietly in small crypto circles, was the first to describe a decentralized sharded system. In this system, each shard uses an asynchronous Byzantine consensus algorithm to reach agreement.

While learning, Dominic didn't forget to trade Bitcoin. Investing in Bitcoin brought him some peace of mind, and now he could focus on designing consensus algorithms without having to work day and night or in a frenzy.

Later, Dominic wove the early Ethereum ethos into his own thinking. Once he heard Ethereum's concept of the "World Computer", it became his ultimate goal, because he thought this was perhaps what the future internet would look like.

He realized smart contracts were actually a brand new, extremely advanced form of software. He saw that if the limitations of performance and scalability could be broken through, then almost everything would eventually be rebuilt on blockchains. Because smart contracts run on open public networks rather than private infrastructure, they are inherently tamper-proof and unstoppable. They can interconnect on one network, so each contract can simultaneously be part of multiple systems, which provides extraordinary network effects. And they can operate autonomously, inheriting the properties of the blockchain, and so on.

Most of the details have faded over time into the mists of history - although not much time has passed, in the rapidly changing evolution of blockchain, this period seems to have already spanned the peaks and valleys of an entire lifetime.

Dominic's research focuses on protocols and cryptography, which are like dry kindling that reignites the dreamer's inner spark. Dominic believes that these protocols and cryptographic algorithms can change the world. He took the "D" for decentralized and the "finity" for infinity and combined them to create "DFINITY". DFINITY aims to create a decentralized application platform with infinite scalability.

After returning to Mountain View, California from China, Dominic tweeted, "China Loves Blockchain :)".

Like Ethereum, Dominic also received investment in China. The reason is simple. Silicon Valley invested in Bitcoin very early and gained huge returns, so investors there didn't care much about "altcoins" (the common view is that coins other than Bitcoin are "altcoins", which are basically slightly improved versions of Bitcoin).

Next, I need to introduce what the DFINITY team is doing in detail.

Point. Line. Surface. Solid!

We know that Bitcoin is the pioneer of blockchain. Bitcoin itself emerged slowly in the long-term pursuit of decentralized currency projects by cypherpunks. Click here to see more about Blockchain.

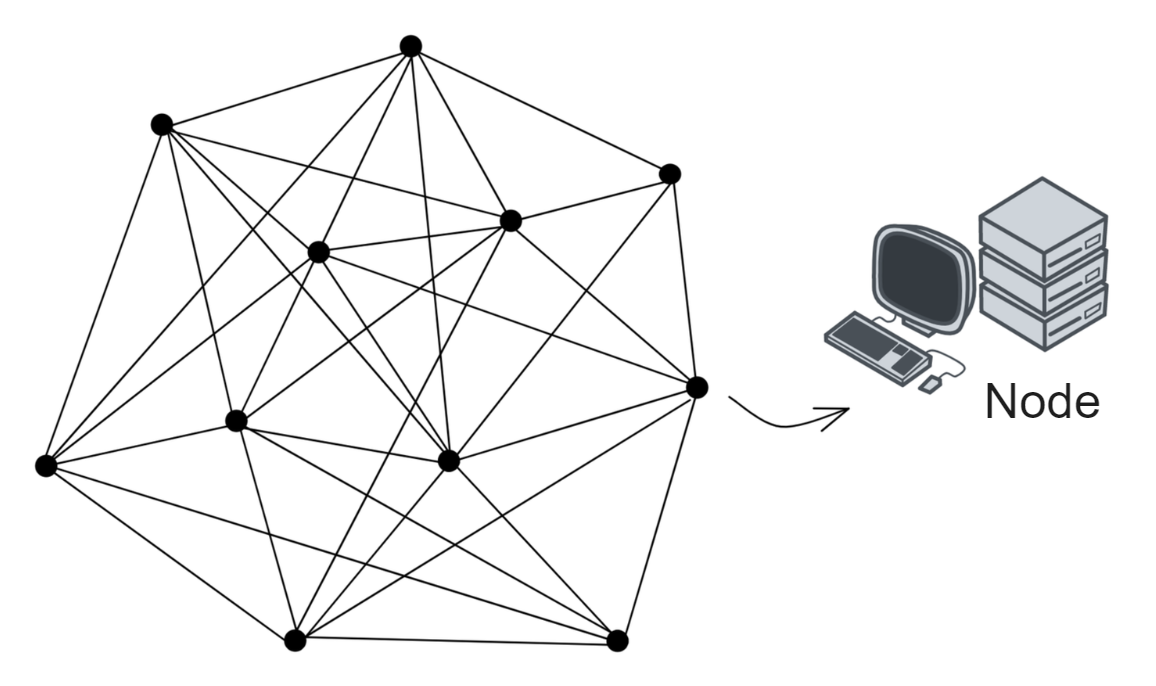

It created an open ledger system: people from all over the world can join or leave at any time, rely on consensus algorithms to keep everyone's data consistent, and together form a decentralized network that is jointly created, jointly built, and shared. People just need to download the Bitcoin software (downloading the source code and compiling it is also possible), and then start running it on their own machines to join the Bitcoin network. Bitcoin enables computers around the world to reach a consensus and jointly record every transaction. Because everyone keeps a record, the legendary "immutability" of blockchain is achieved, which is really a matter of majority rule: you cannot cheat everyone at once.
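To make "everyone keeps a record" a little more concrete, here is a minimal Python sketch of a hash-linked ledger replicated across a few nodes. It is only an illustration under loud assumptions: the function names (`block_hash`, `build_chain`, `is_valid`, `majority_tip`) and the transaction strings are made up for this example, and the simple head count used to pick the winning copy is a simplification. Real Bitcoin measures the "majority" by accumulated proof-of-work, not by counting nodes.

```python
import hashlib
import json
from collections import Counter


def block_hash(prev_hash: str, transactions: list[str]) -> str:
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"prev": prev_hash, "txs": transactions}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(blocks: list[list[str]]) -> list[dict]:
    """Link blocks so each one commits to everything that came before it."""
    chain, prev = [], "0" * 64  # all-zero hash stands in for "no previous block"
    for txs in blocks:
        h = block_hash(prev, txs)
        chain.append({"prev": prev, "txs": txs, "hash": h})
        prev = h
    return chain


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash; any edit to an earlier block breaks the links."""
    prev = "0" * 64
    for block in chain:
        if block["prev"] != prev or block["hash"] != block_hash(prev, block["txs"]):
            return False
        prev = block["hash"]
    return True


def majority_tip(copies: list[list[dict]]) -> str:
    """Return the latest block hash held by the most valid copies (toy rule)."""
    tips = Counter(c[-1]["hash"] for c in copies if is_valid(c))
    return tips.most_common(1)[0][0]


honest = build_chain([["alice->bob:1"], ["bob->carol:1"]])

# One cheater edits an old transaction but leaves the stored hashes alone.
tampered = [dict(b) for b in honest]
tampered[0] = {**tampered[0], "txs": ["alice->mallory:1"]}

# Another cheater rebuilds a fully consistent alternative history.
forged = build_chain([["alice->mallory:1"], ["bob->carol:1"]])

nodes = [honest, honest, honest, tampered, forged]
print(is_valid(tampered))                         # the edited copy fails verification
print(majority_tip(nodes) == honest[-1]["hash"])  # the honest majority's history wins
```

The edited copy is caught because its hashes no longer match its contents, and the internally consistent forgery still loses because most copies disagree with it.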

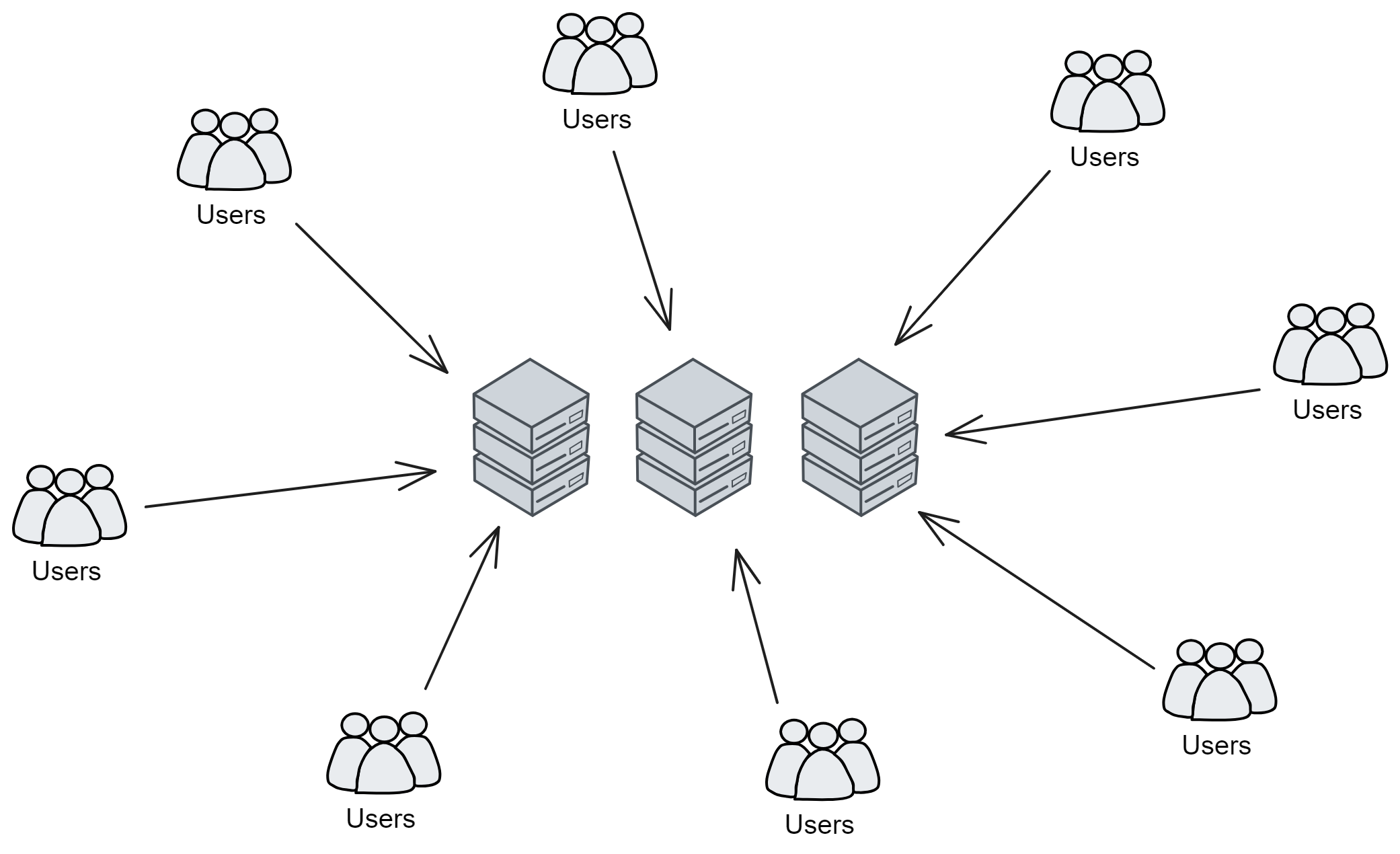

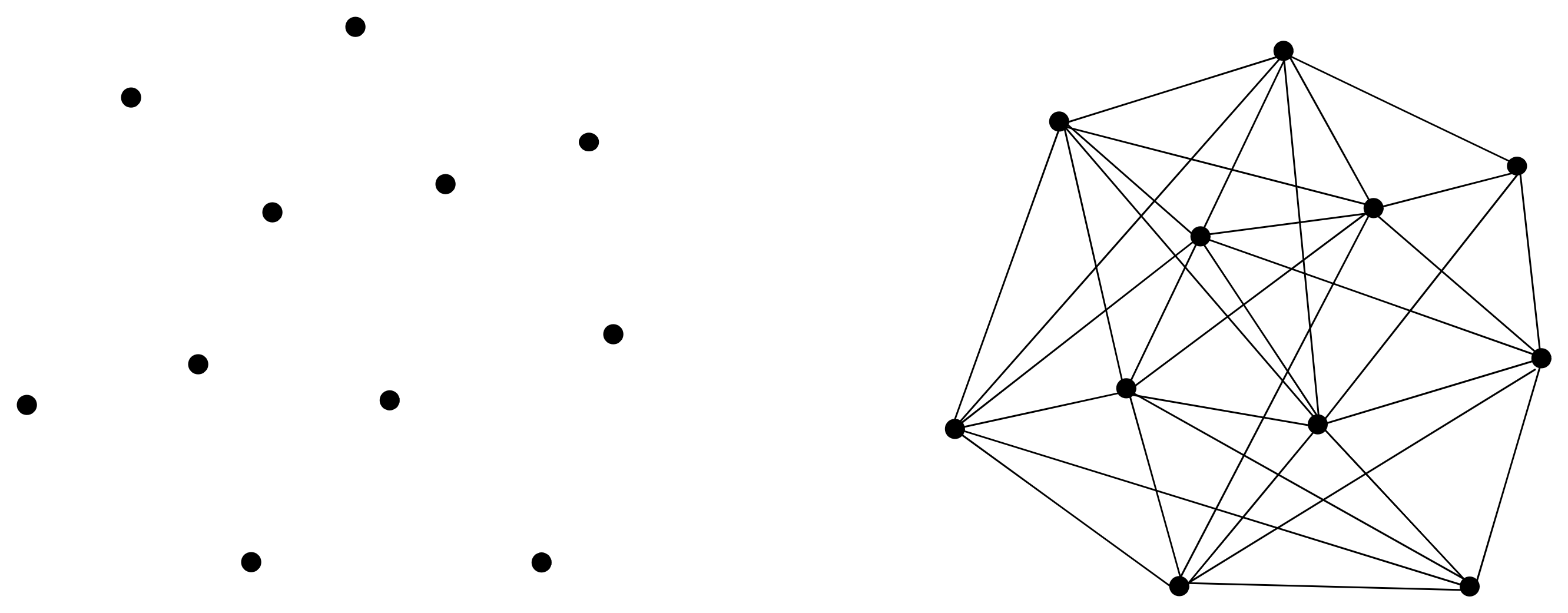

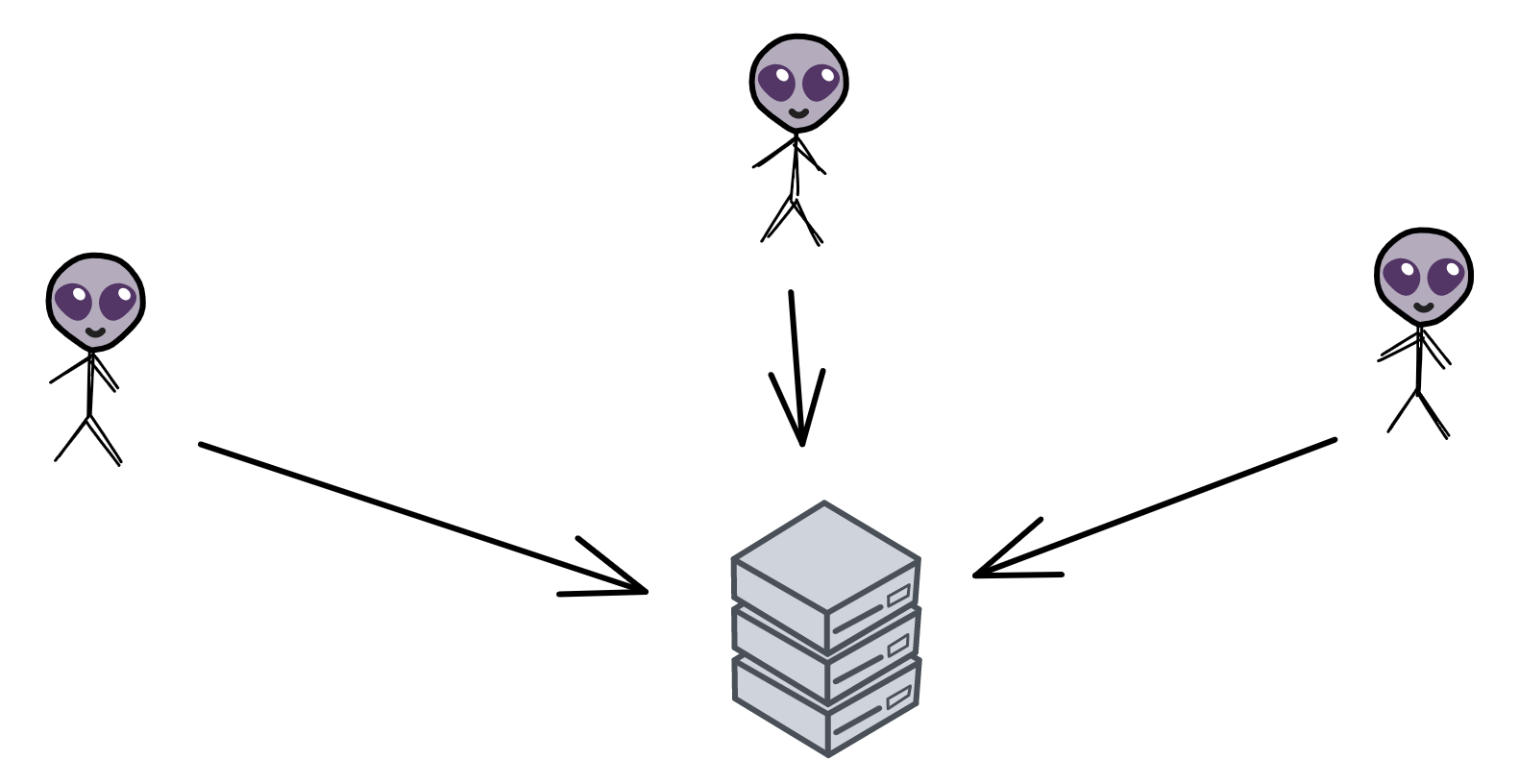

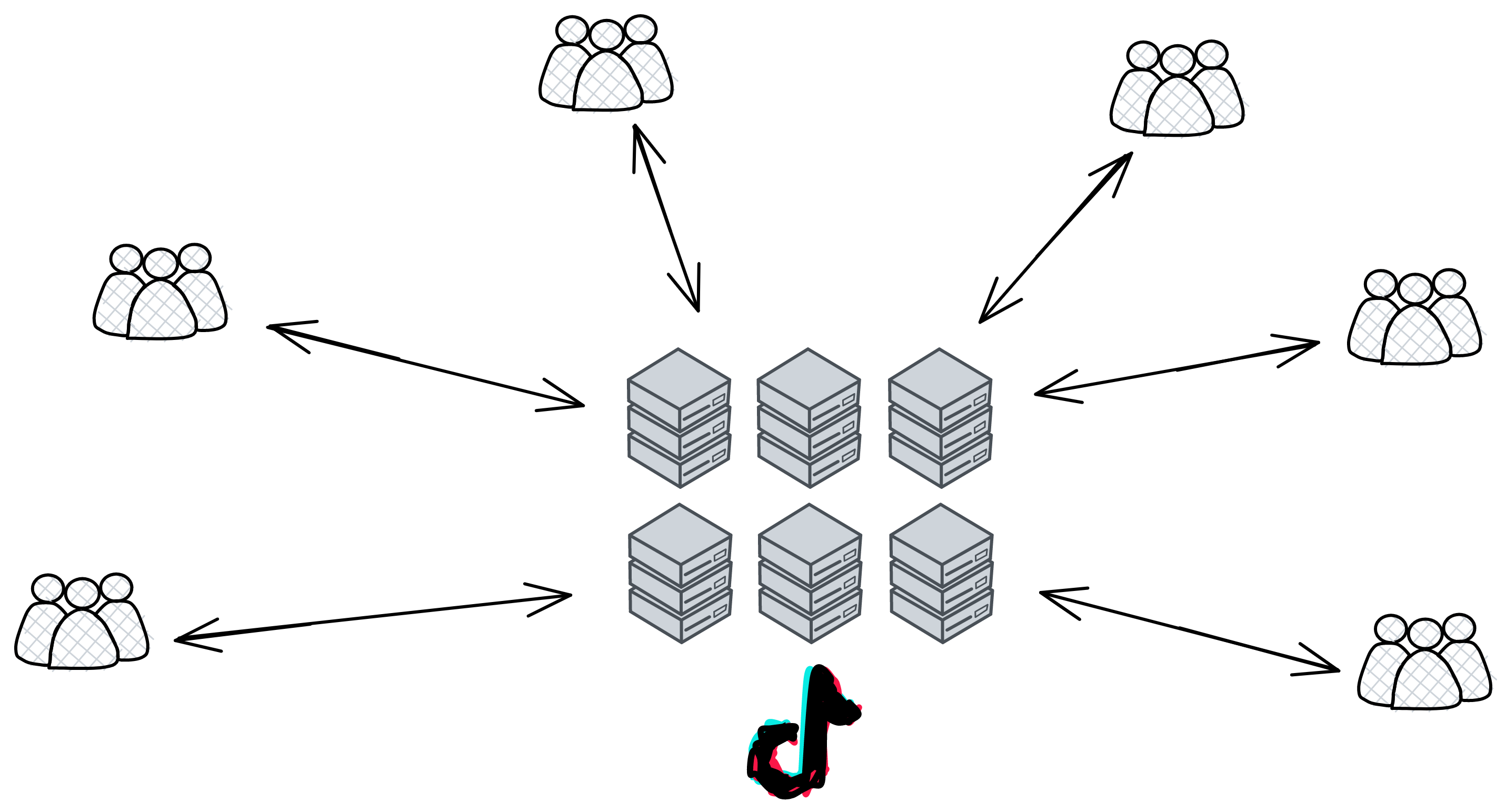

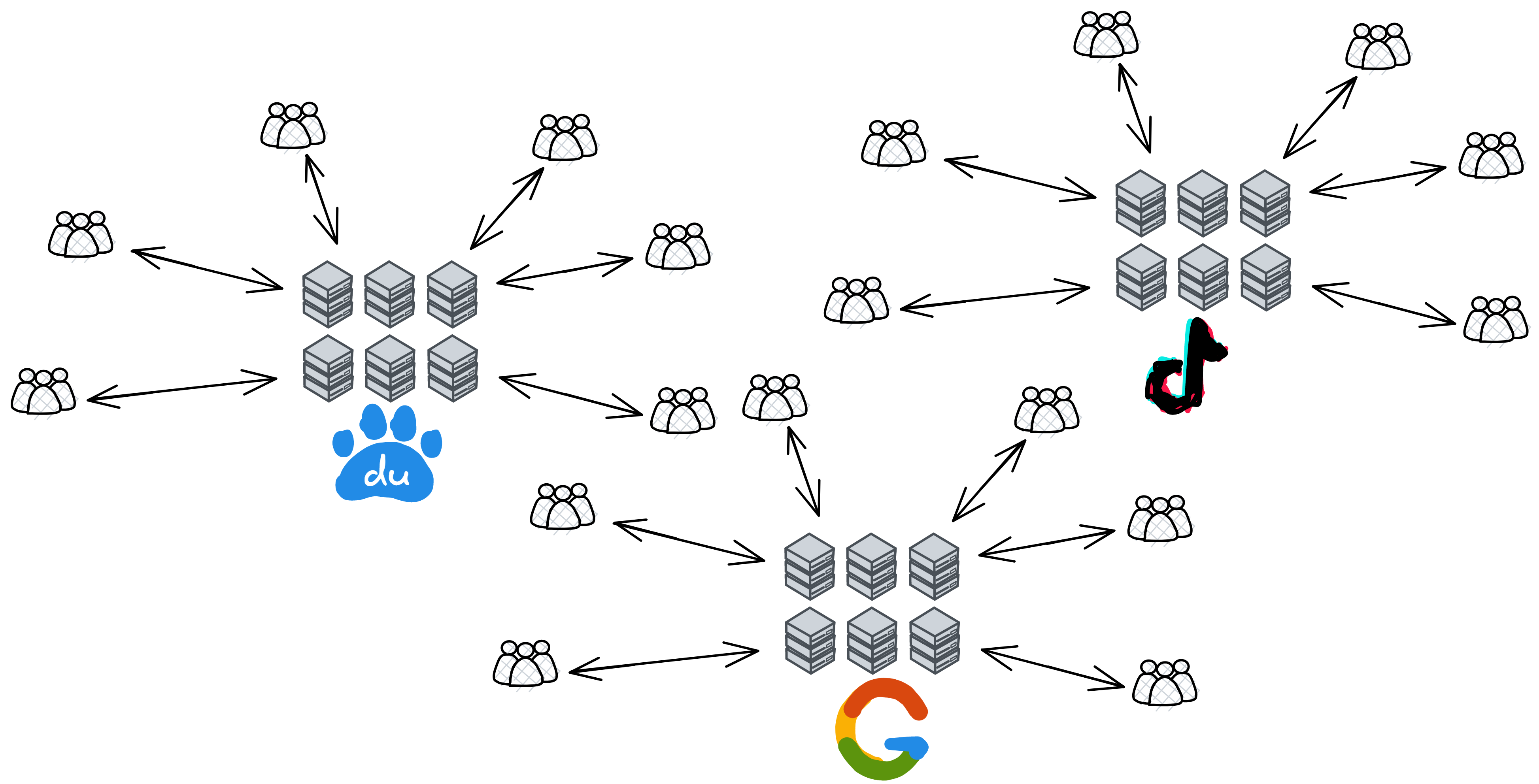

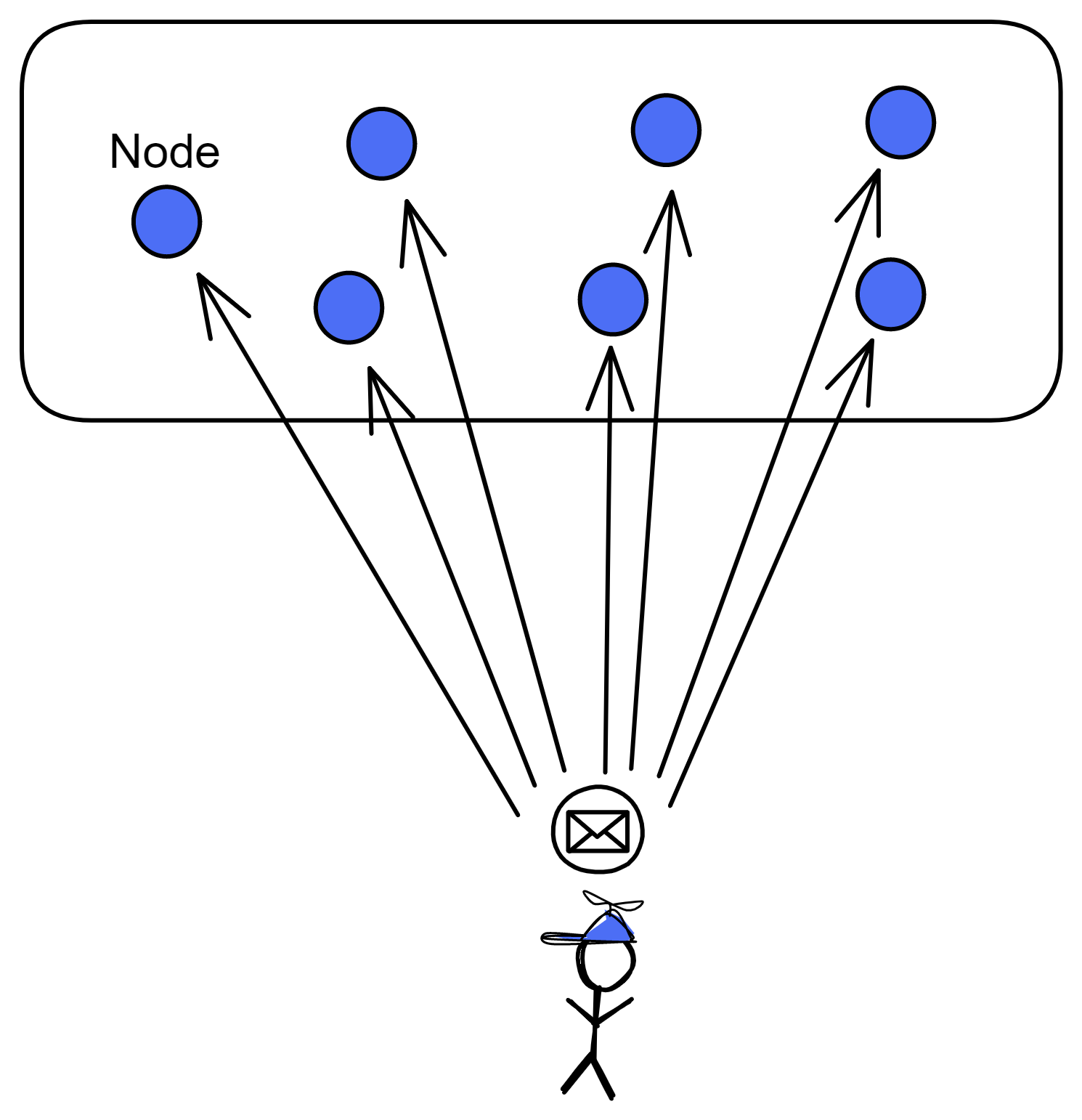

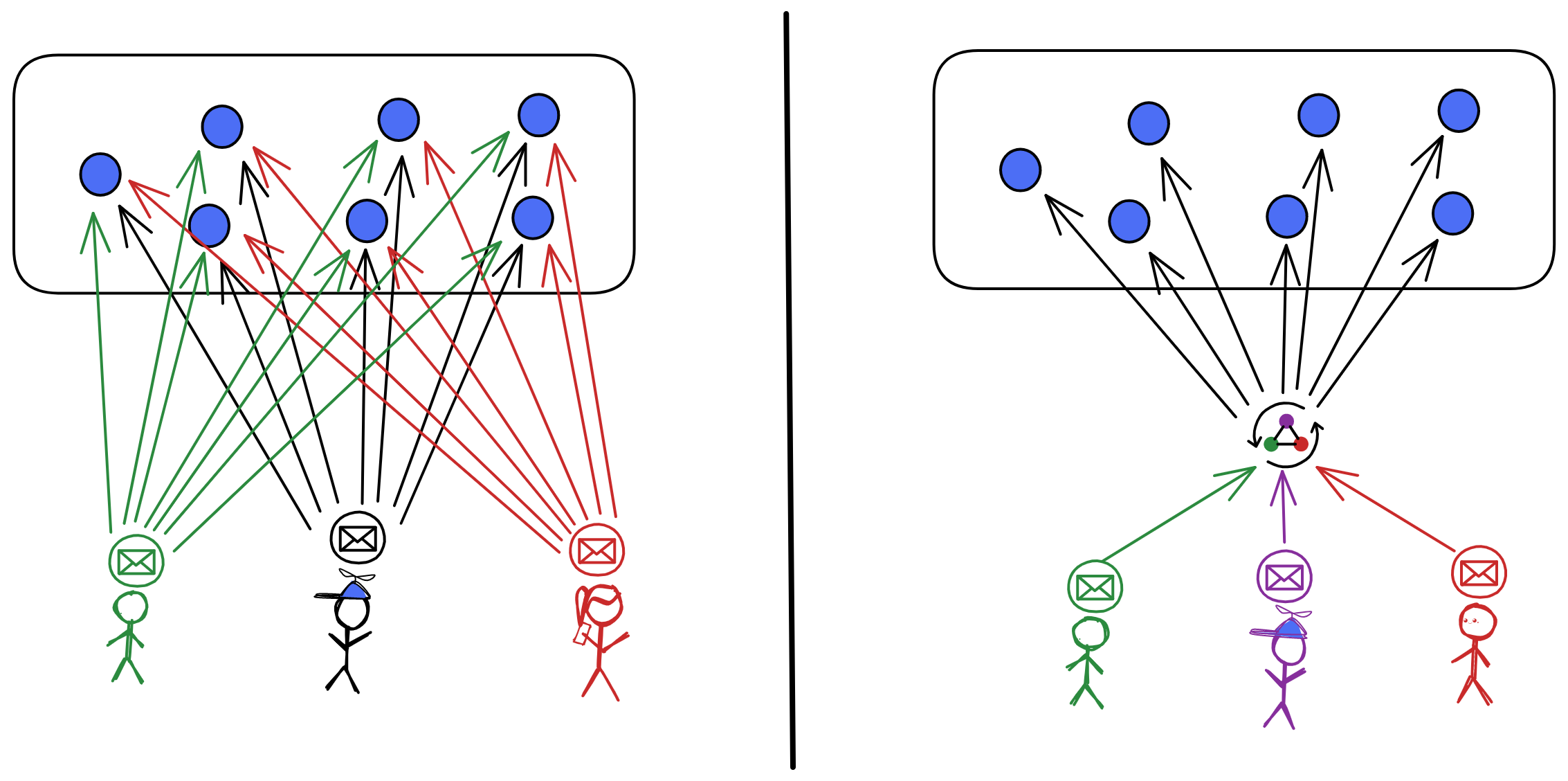

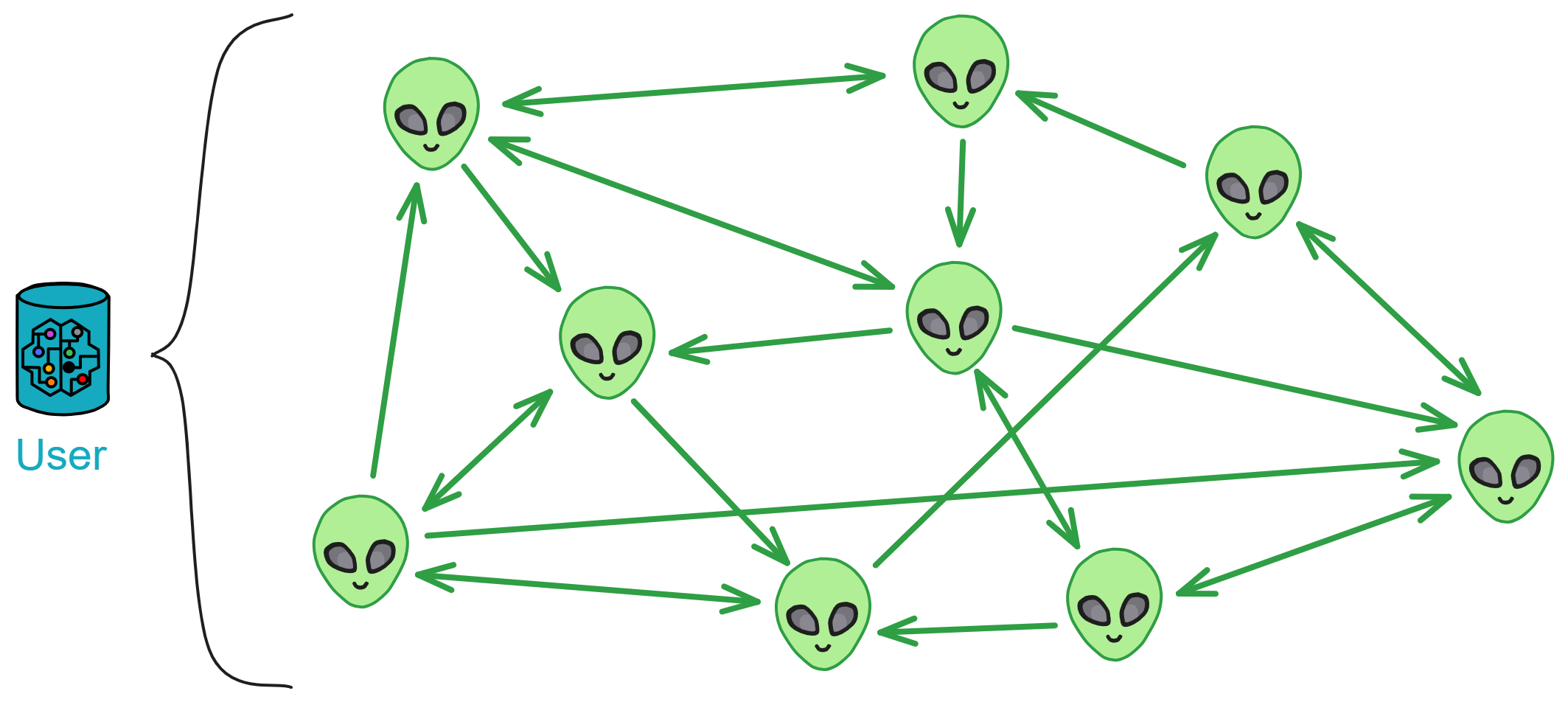

In the traditional network architecture, user data is indiscriminately stuffed into servers. Users cannot truly control their own data, and whoever controls the server has the final say. If we can view this one-to-many relationship as "points" scattered around the world, the user's data flows into each point tirelessly.

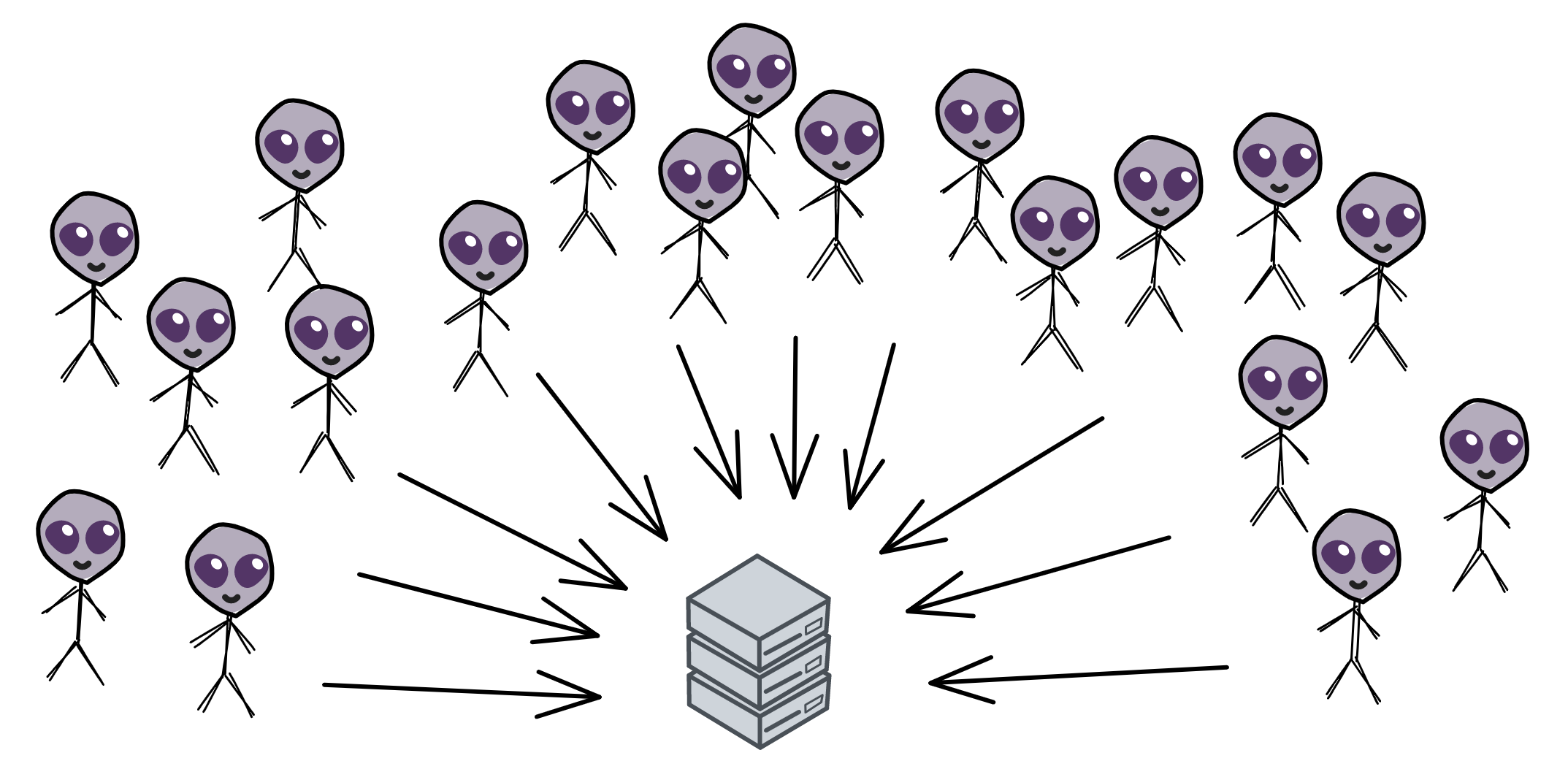

So the Bitcoin network can be seen as a "line", it connects isolated points into a line, making the internet more fair and open. What Bitcoin does is to combine computers from all over the world to form a huge "world ledger". So what if you want to record something else? Just imitate Bitcoin and create a new one!

Six years after the birth of Bitcoin, a "surface" that can deploy software on a decentralized network gradually emerged, called Ethereum. Ethereum is not a replica of Bitcoin's world ledger. Ethereum has created a shared and universal virtual "world computer", with Ethereum's virtual machine running on everyone's computer. Like the Bitcoin ledger, it is tamper-proof and cannot be modified. Everyone can write software and deploy it on the virtual machine, as long as they pay a bit of ether to the miners. (There are no miners anymore, but that's another article 😋)

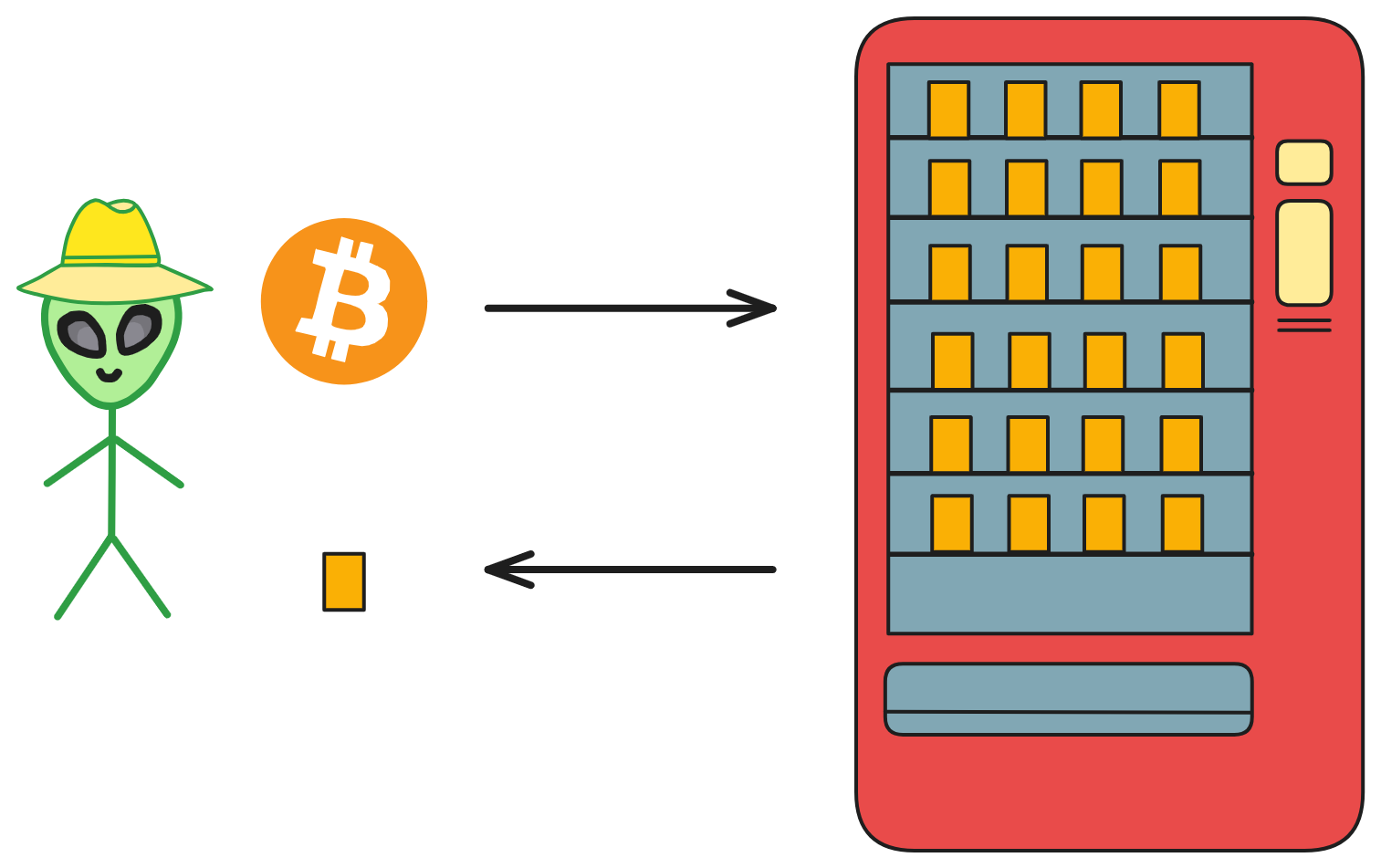

Software deployed on the blockchain becomes an automated vending machine, permanently stored within a distributed and decentralized network that fairly and impartially evaluates each transaction to determine whether its conditions are met. The blockchain's immutable storage gave rise to the phrase "code is law". Here, software goes by another name: "smart contracts".
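The vending machine analogy can be sketched in a few lines of Python. This is a toy model, not how Ethereum or IC contracts are actually written: the `VendingContract` class, its fields, and the buyer names are all hypothetical, and a real contract would run on the chain's virtual machine with every node replaying the same transactions.

```python
from dataclasses import dataclass, field


@dataclass
class VendingContract:
    """Toy 'smart contract': fixed rules plus state, no operator in the loop."""
    price: int
    stock: int
    balances: dict[str, int] = field(default_factory=dict)  # buyer -> items owned
    revenue: int = 0

    def buy(self, buyer: str, payment: int) -> int:
        """Apply one transaction and return the change owed to the buyer.

        The transaction either meets every condition and is applied in full,
        or it is rejected and the state is left untouched.
        """
        if self.stock == 0:
            raise ValueError("sold out")
        if payment < self.price:
            raise ValueError("insufficient payment")
        self.stock -= 1
        self.revenue += self.price
        self.balances[buyer] = self.balances.get(buyer, 0) + 1
        return payment - self.price


contract = VendingContract(price=3, stock=1)
change = contract.buy("alice", payment=5)  # conditions met: alice gets an item

try:
    contract.buy("bob", payment=5)         # rejected: nothing left to sell
    rejected = False
except ValueError:
    rejected = True
```

The point of the sketch is that the rules live in the code itself: nobody decides case by case whether Bob's purchase goes through, the conditions do.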

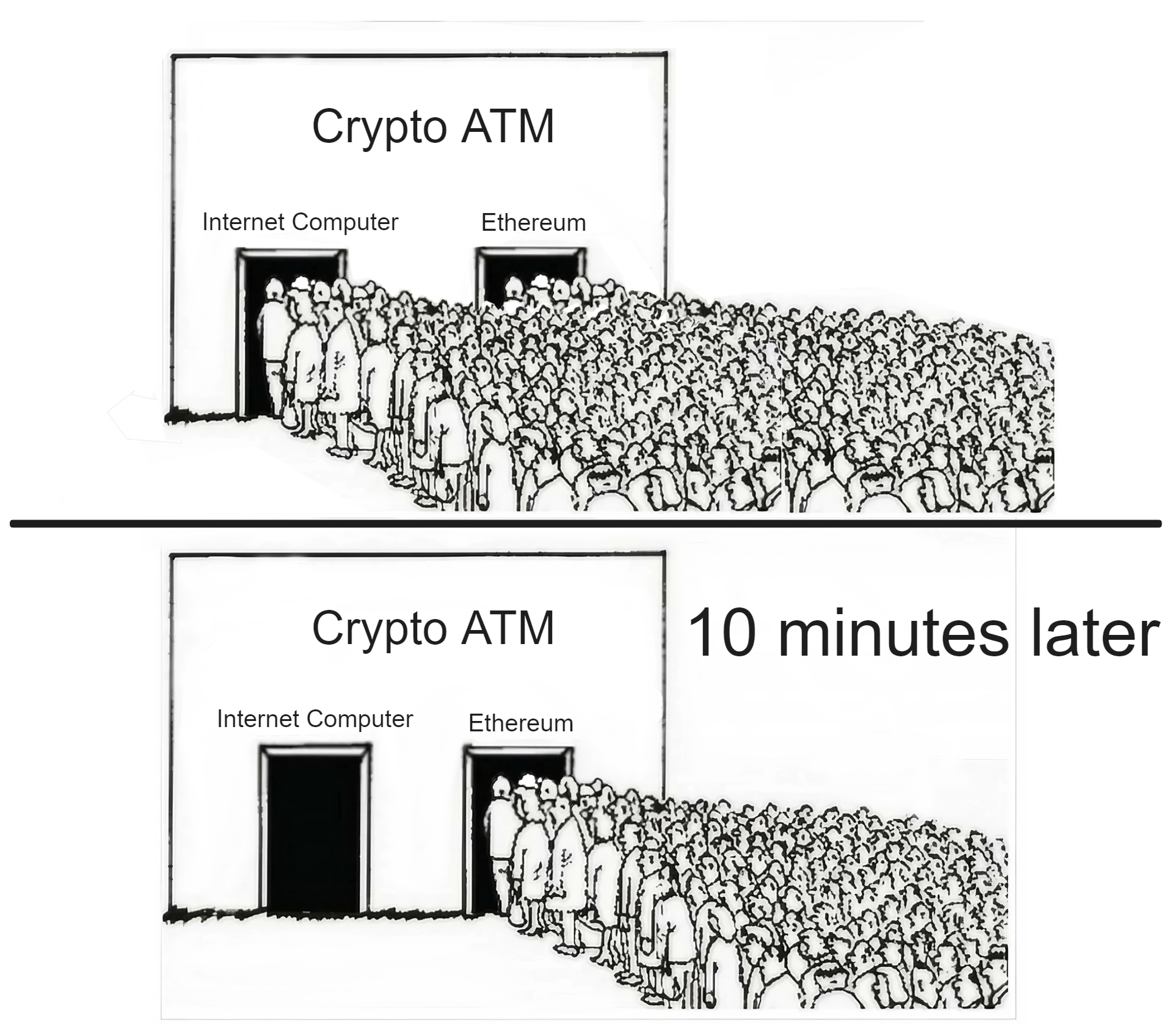

However, ahem, interrupting for a moment. The idea is beautiful, but reality can be cruel. In the early Ethereum community, there was indeed a desire to create a "world computer," establishing a decentralized infrastructure distributed worldwide. But Ethereum's architecture has certain limitations, including lower transaction execution performance, high gas fees due to rising ETH prices, poor scalability, and the inability to store large amounts of data, among other issues.

Dominic eagerly hoped his research could be put to use by the Ethereum project. His motivation was not for money, but a long-held passion for distributed computing, now sublimated into boundless aspirations for blockchain, making it hard for him to imagine anything that could eclipse the excitement and determination before him. He soon became a familiar face in Ethereum circles, often discussing at various conferences the possibilities of applying new cryptography and distributed computing protocols in next-generation blockchains.

The solution to this problem can take two approaches. The first is to improve the existing architecture, such as transitioning Ethereum's consensus from PoW to PoS (Casper), building shard chains, or creating sidechains. The other approach is to start over and design a new architecture that can handle information processing and large-scale data storage at high speeds.

Should they keep improving, scaling and retrofitting Ethereum's old architecture, or start from scratch to design a real "World Computer"?

At the time, people were interested in his ideas, but the prevailing view was that his concepts were too complex and distant, requiring too much time to realize and fraught with difficulties. Even though Ethereum ultimately did not adopt Dominic's ideas, he was still grateful to early Ethereum members like Vitalik and Joe Lubin for patiently listening to his ideas in many early discussions.

Finally, Dominic made the difficult decision to start from scratch and design a real "World Computer".

When we try to solve a specific problem, we often find that the key is to create powerful "tools". When a more advanced and practical tool is continuously maintained and improved, it gradually becomes powerful enough to solve many valuable problems. A common business phenomenon is that a tool is developed in order to deliver a product or service, the tool turns out to have wider applicability, and the tool itself then evolves into a larger, more successful, and more valuable product.

Amazon's cloud services were originally designed to solve the problem of waste of computing resources after Black Friday. Later, it became the world's earliest and largest cloud service provider. Similarly, SpaceX solved the problem of high rocket launch costs. In order to thoroughly solve the scalability problem, Dominic decided to redesign the consensus algorithm and architecture.

The opportunity finally arrived in November 2015, in London.

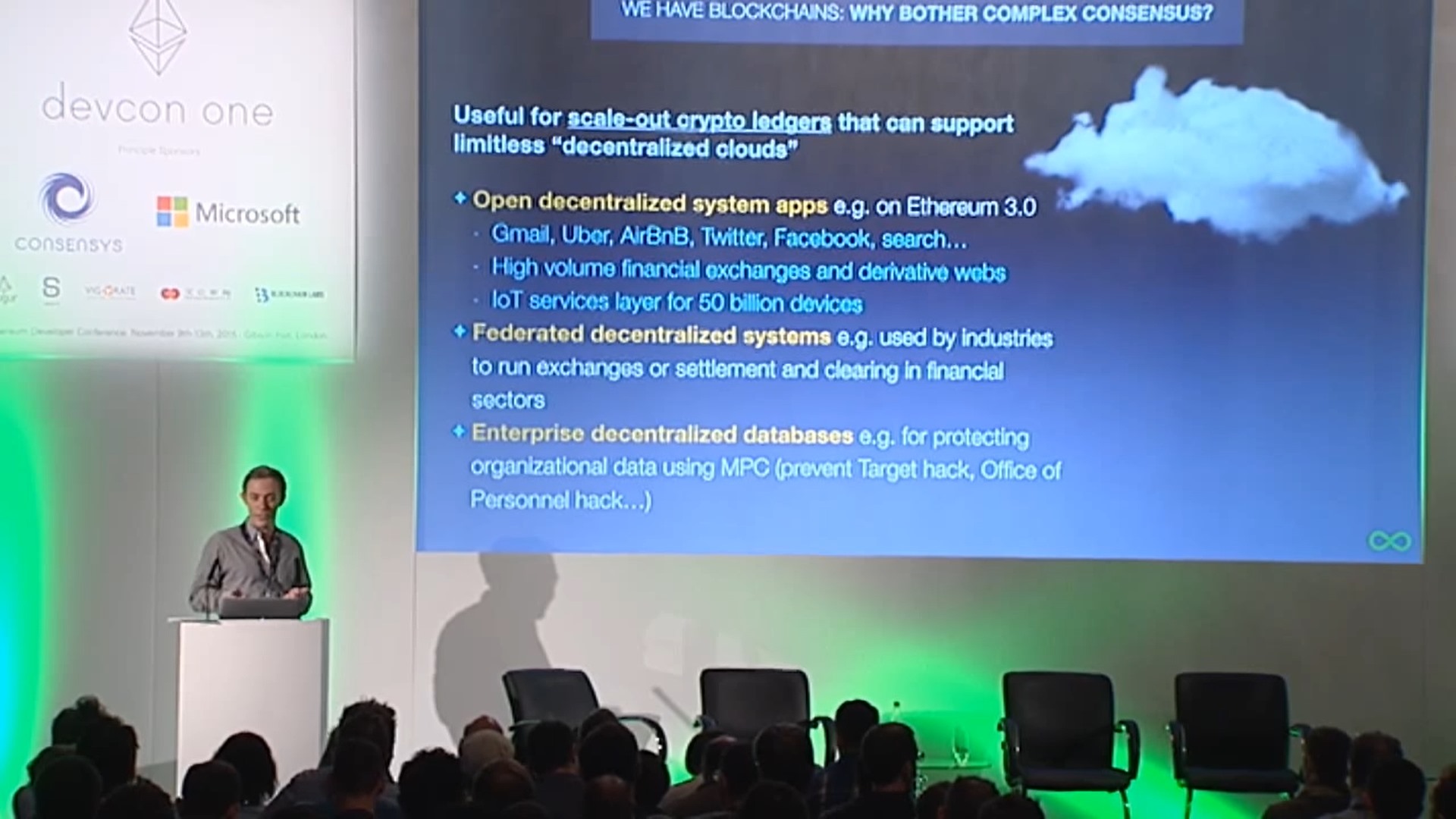

Dominic presented the consensus algorithm he had been studying at devcon one.

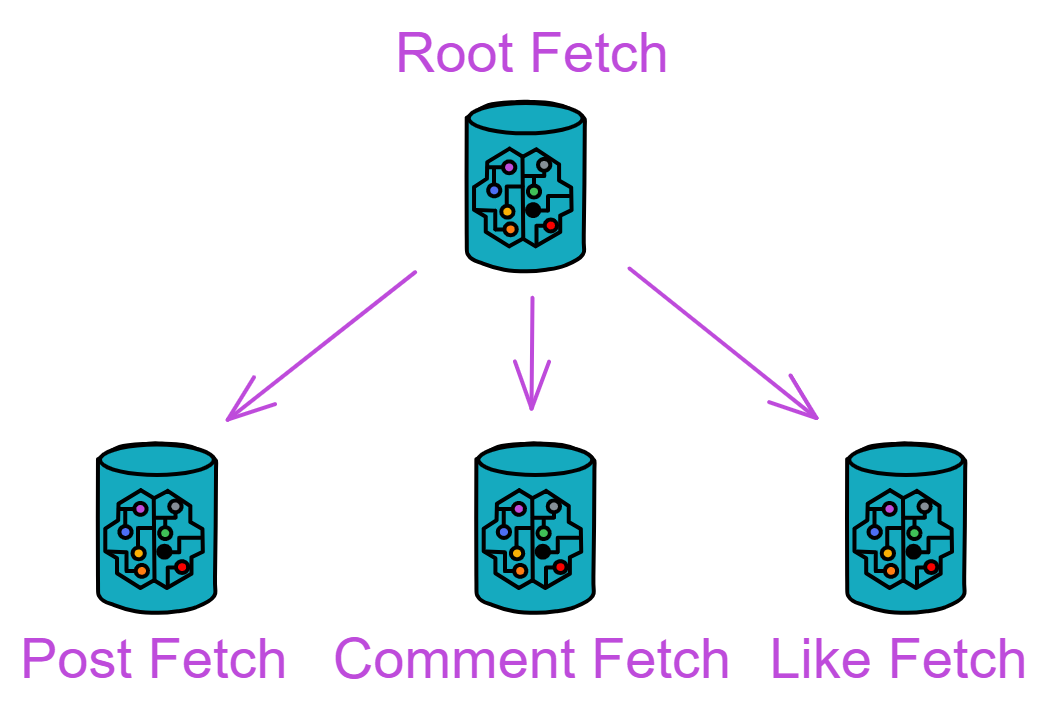

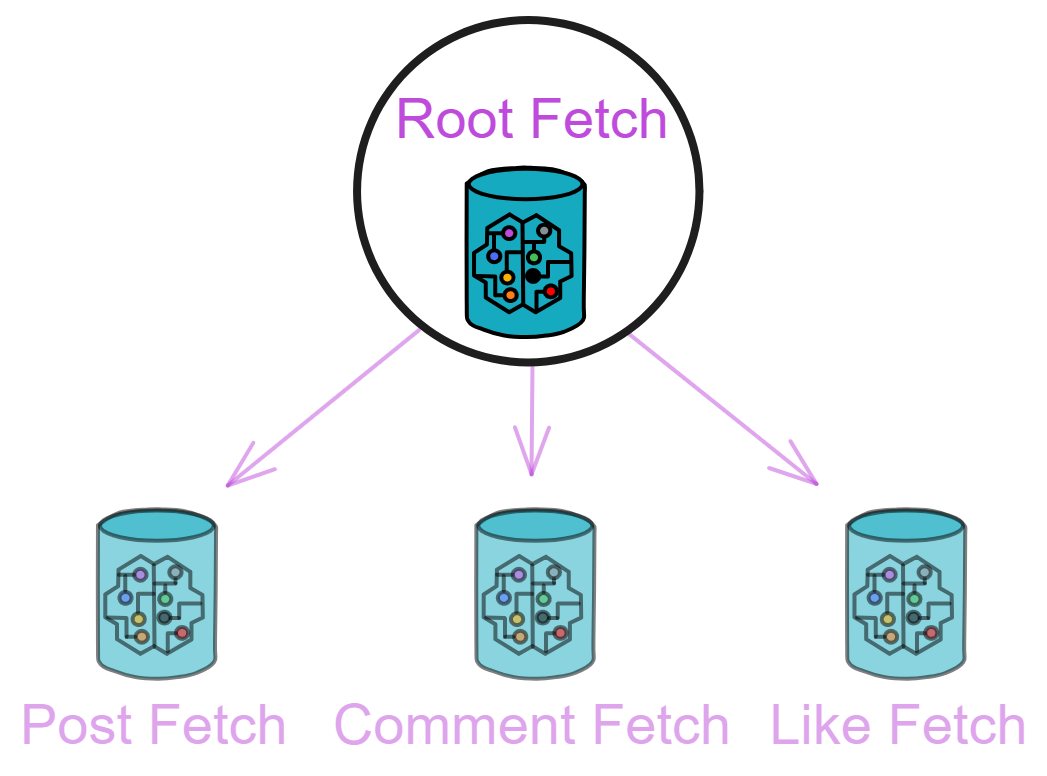

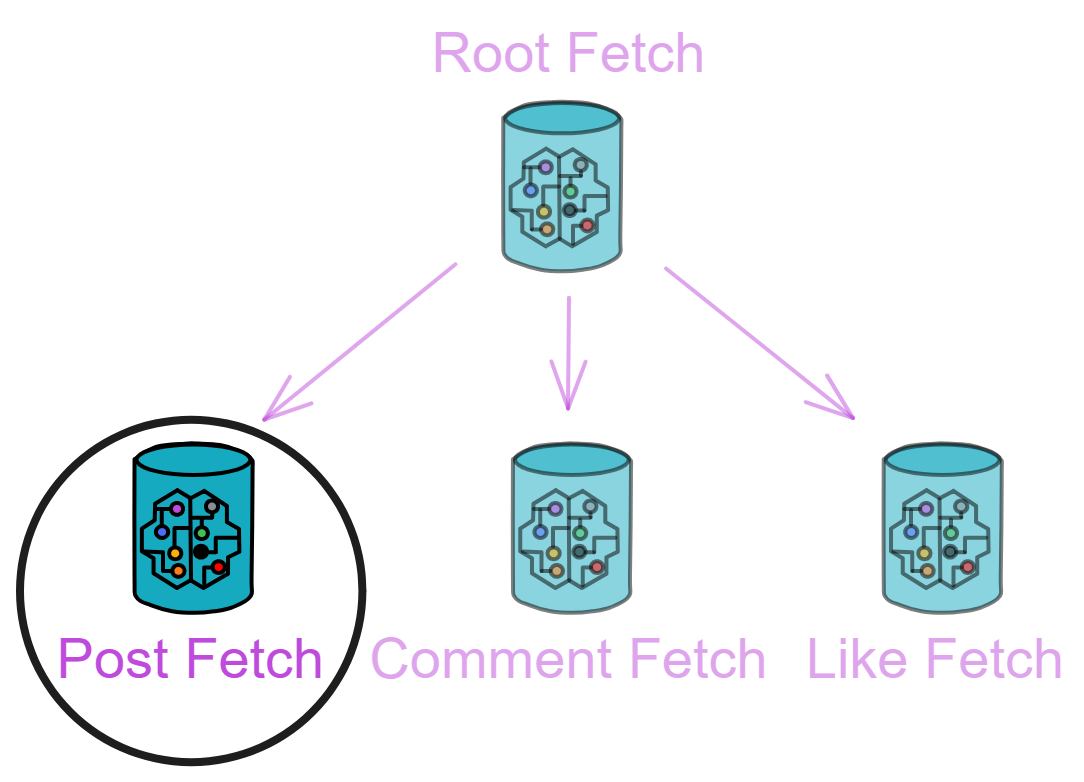

Looking back at Dominic's presentation at devcon one in 2015, we can see that he described the IC as Ethereum 3.0. In fact, it wouldn't be too much to call it Blockchain 3.0. If Bitcoin and Ethereum are "Blockchain 1.0" and "Blockchain 2.0", then what he wanted to create was a "solid": a true world computer, which he even named the Internet Computer (IC for short). Building on the "surface" to support large-scale applications, it can scale horizontally without limit as a "world computer".

Oops, sorry, it should be this one:

During the conversation, Dominic discovered that even the staunchest Bitcoin supporters were very interested in the concept of Ethereum. This reinforced his belief in the potential of Trusted Computing.

Dominic had an even grander vision than Ethereum. He wanted to create a public network of servers that would provide a "decentralized cloud" - a trusted computing platform. Software would be deployed and run on this decentralized cloud.

Dominic is working on reshaping a completely decentralized infrastructure, which can also be understood as the next generation of internet infrastructure or as a decentralized trusted computing platform combined with blockchain😉.

Simply put:

Traditional defense systems: mainly composed of firewalls, intrusion detection, virus prevention, etc. The principle of traditional network security is passive defense, often "treatment after the event". For example, when an application has a virus, it is necessary to use anti-virus software to detect and kill it. At this time, the enterprise has already incurred losses to some extent.

Trusted computing: based on cryptographic computation and protection, combined with secure technology to ensure full traceability and monitoring. The principle of trusted computing is active defense. Since the entire chain from application, operating system to hardware must be verified, the probability of virus and network attacks is greatly reduced.

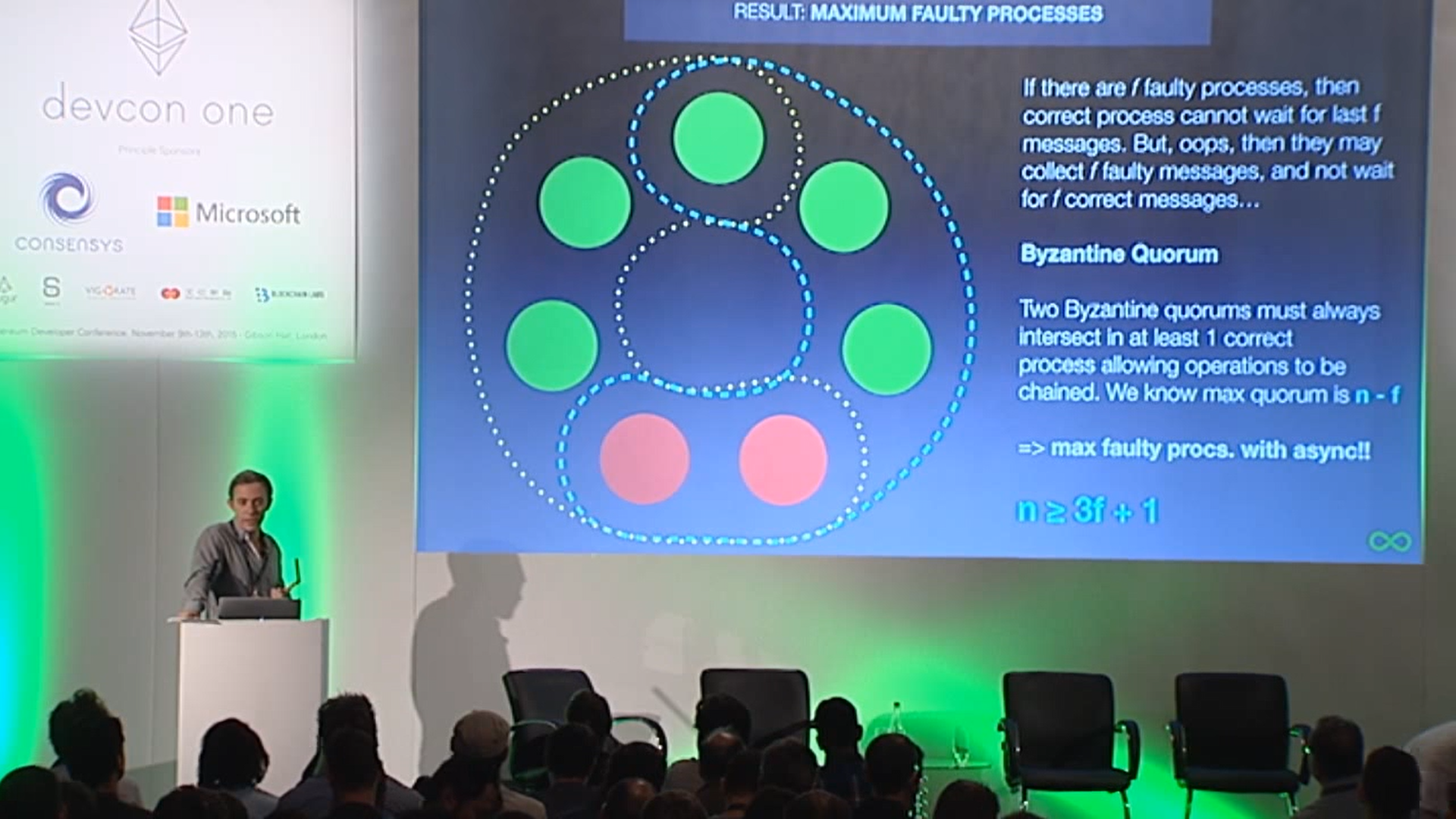

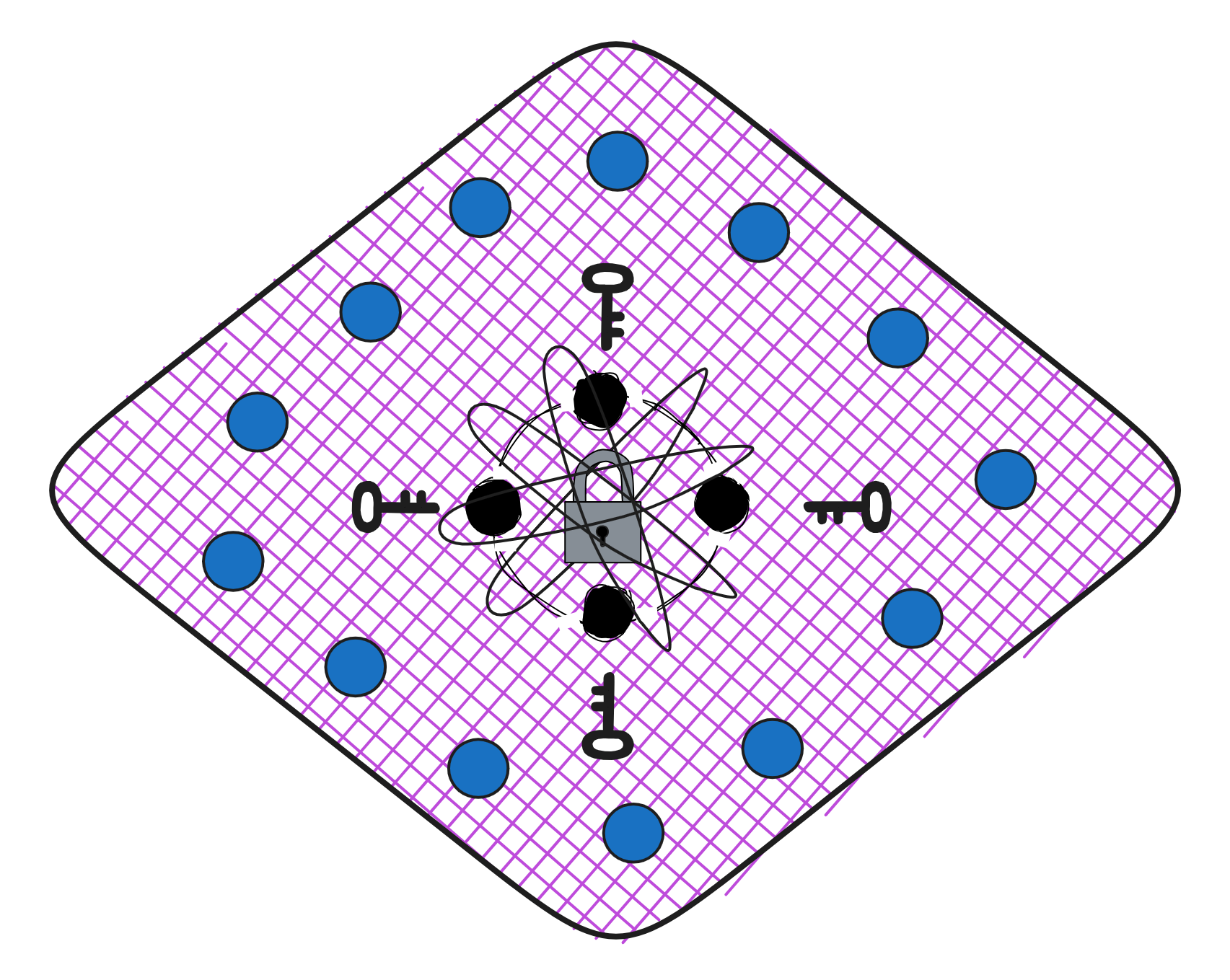

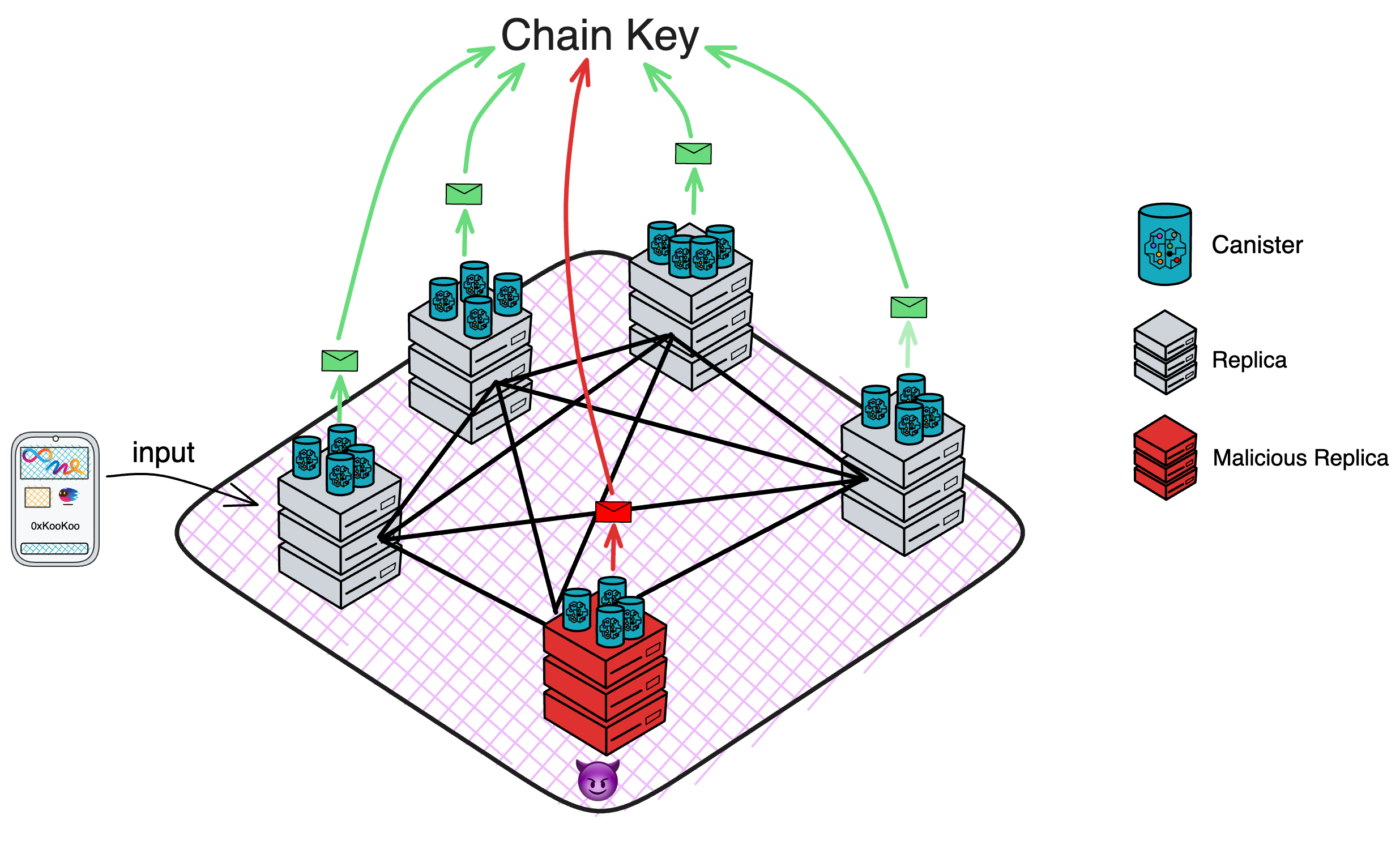

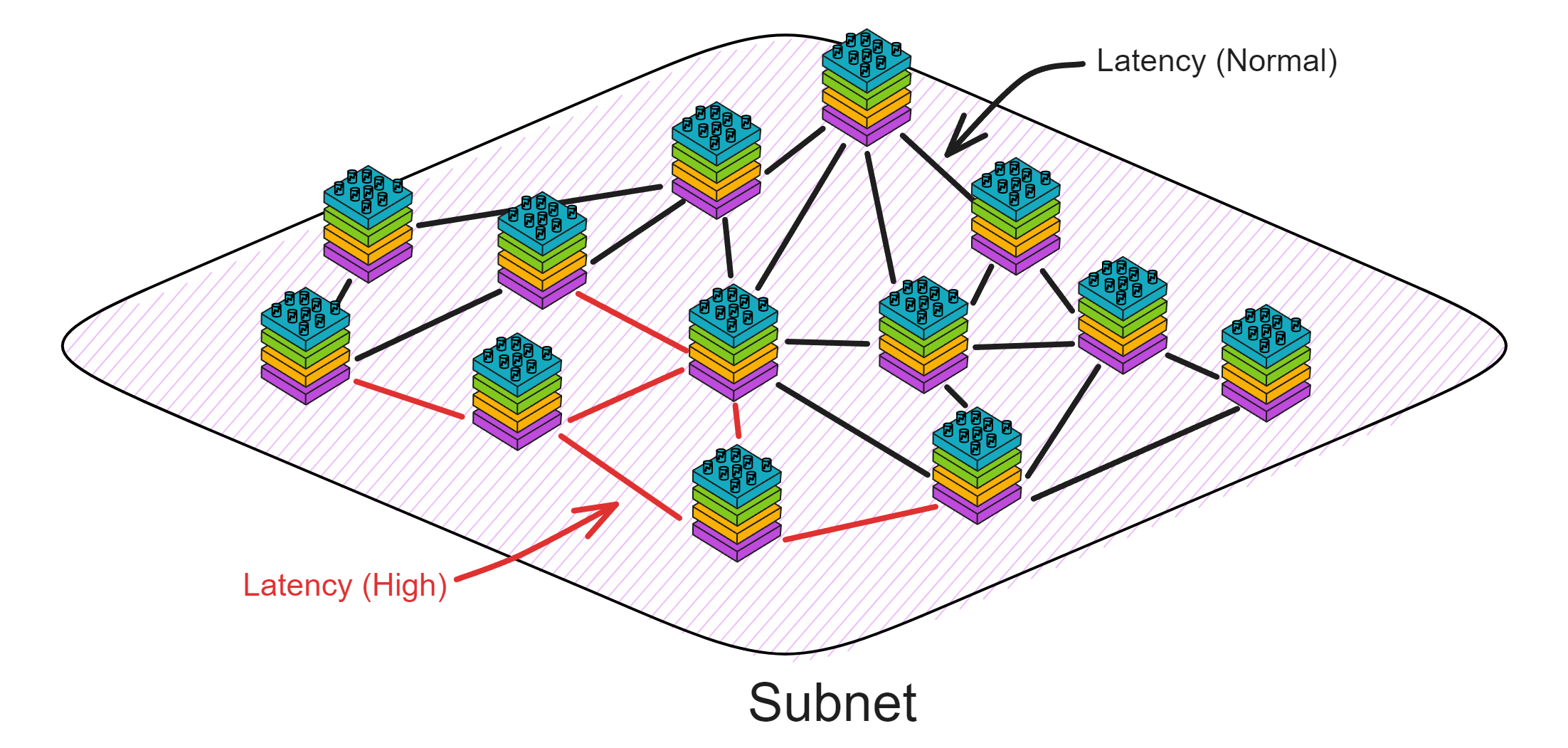

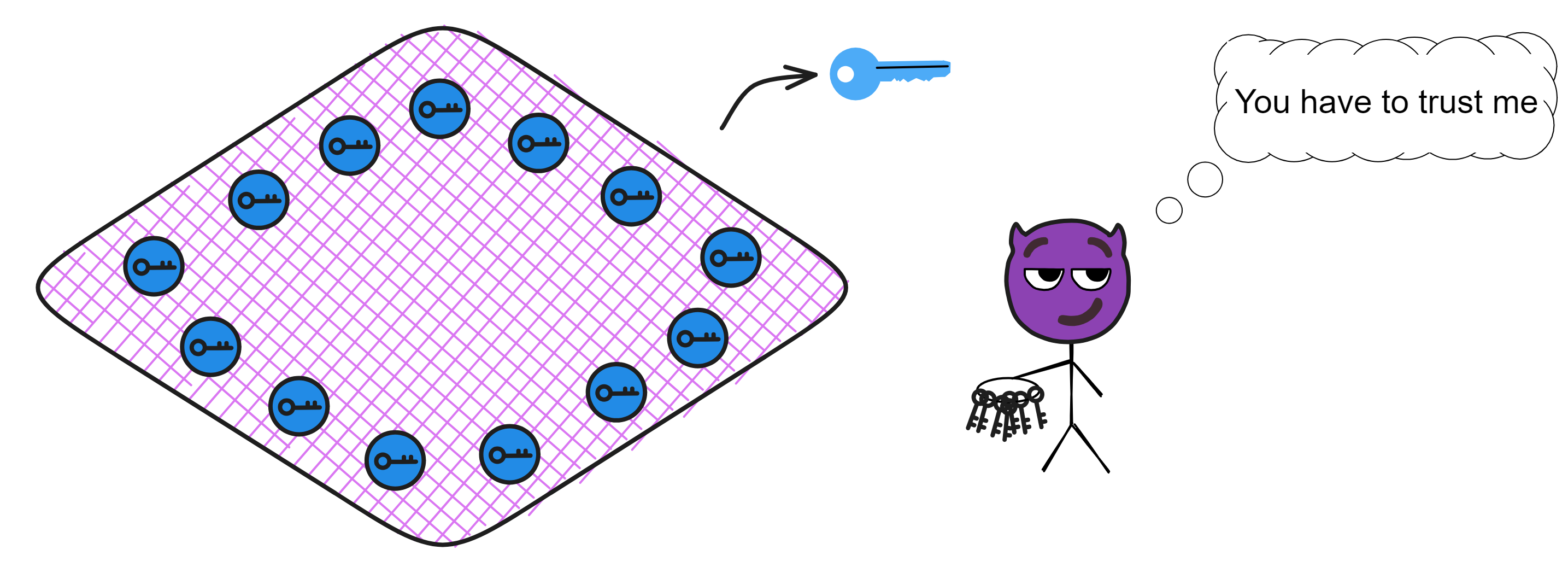

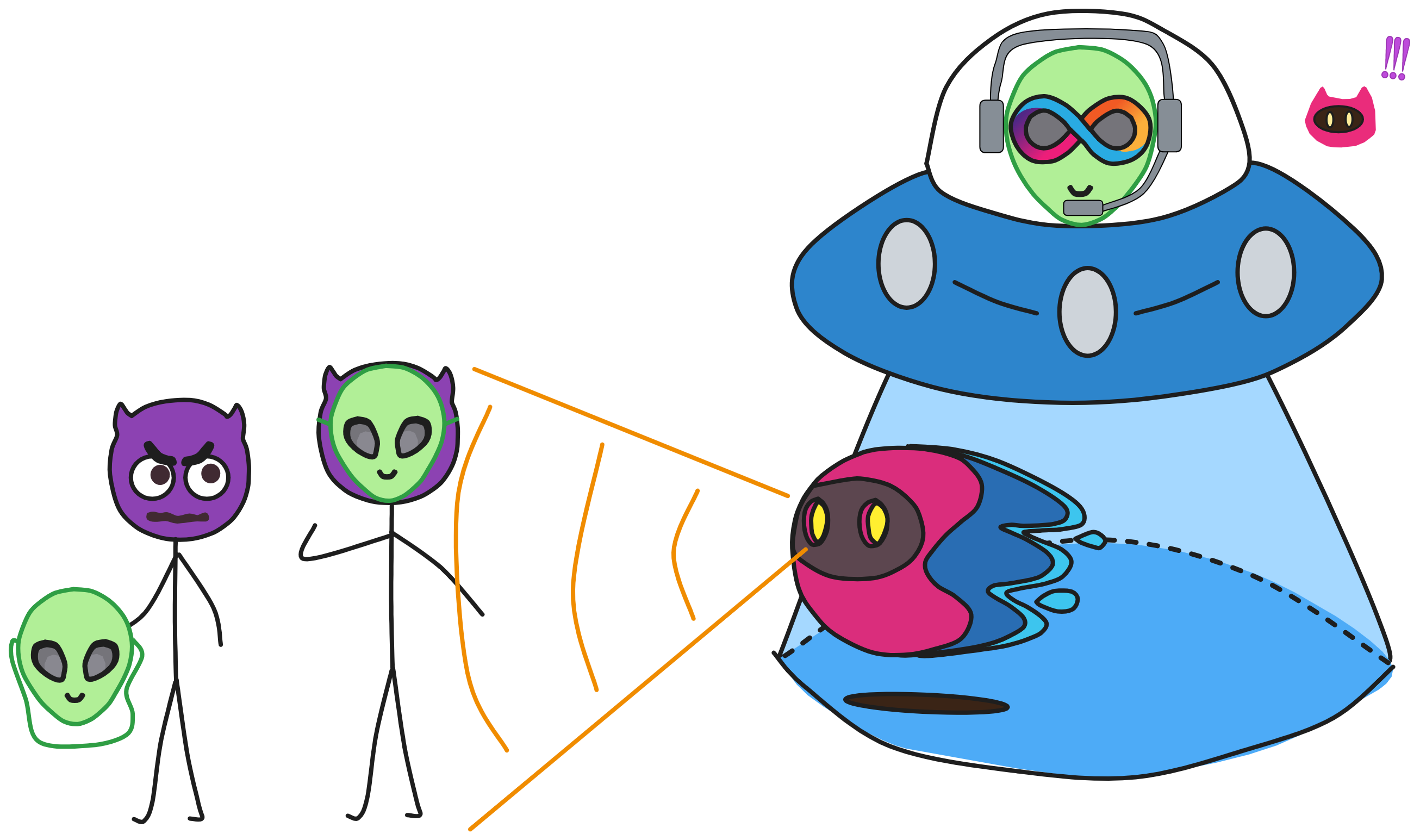

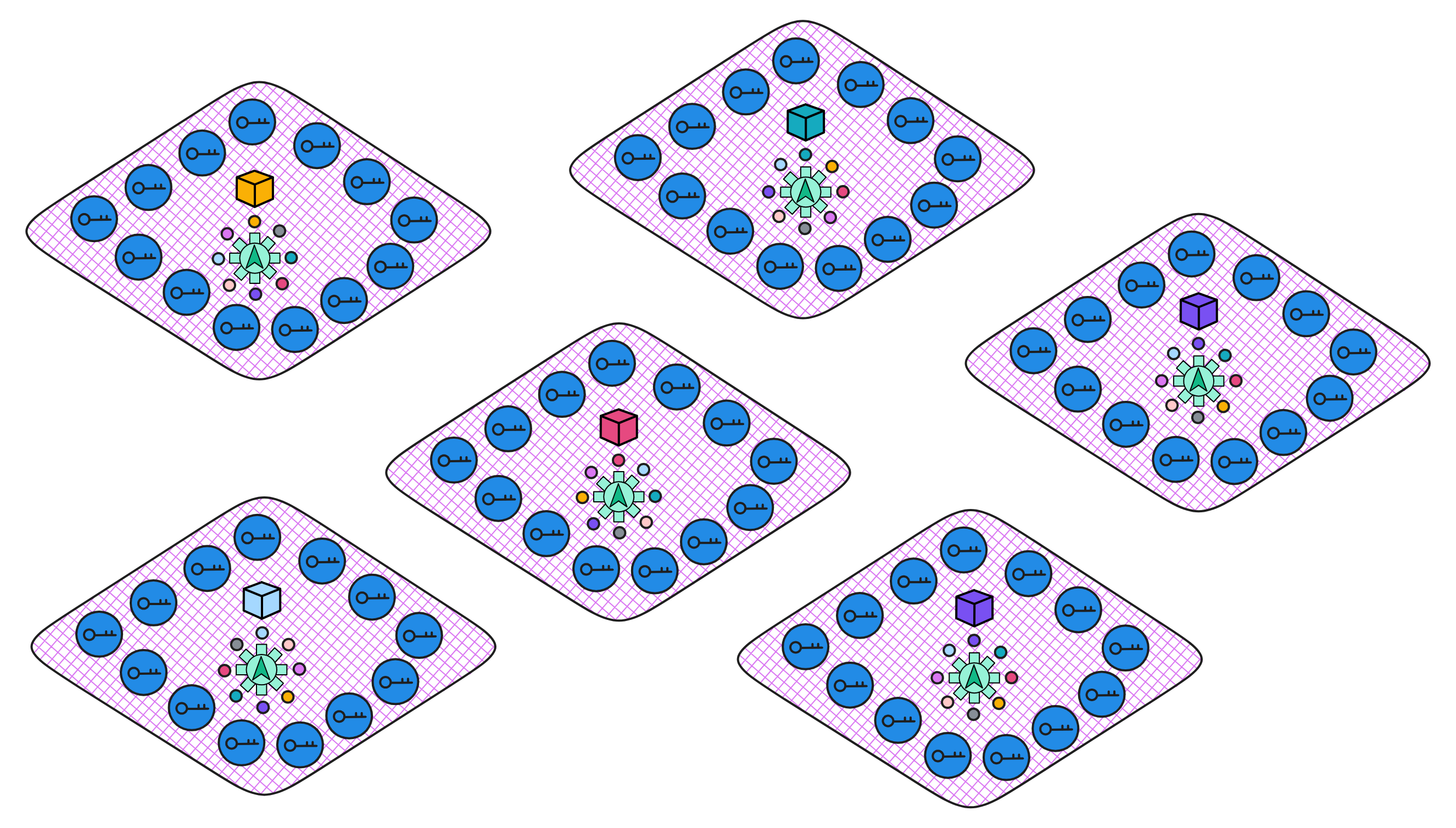

Blockchains rely on a consensus algorithm, which is responsible for coordinating the nodes in the network (a node is a group of servers, which can be understood as a high-performance computer). The consensus algorithm ensures that everyone's information in the network stays in agreement. This matters because anyone can join or leave the network at any time, and it is not known which node might intentionally disrupt it (you can refer to my future blog post about the Byzantine Generals problem). With the consensus algorithm, even if nearly one-third of the nodes in the network are malicious, the other nodes can still reach a consensus normally (the resistance of different consensus algorithms varies).
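For classical Byzantine fault-tolerant protocols, the "one-third" figure is usually stated as n ≥ 3f + 1: a network of n nodes can tolerate at most f malicious ones. The Python sketch below just works through that arithmetic. The function names are hypothetical, and the bound is the textbook one; as noted above, other consensus designs have different tolerances.

```python
def max_faulty(n: int) -> int:
    """Largest number of Byzantine nodes f that n nodes can tolerate (n >= 3f + 1)."""
    if n < 1:
        raise ValueError("need at least one node")
    return (n - 1) // 3


def quorum(n: int) -> int:
    """Smallest vote count such that any two quorums share at least one honest node.

    Two quorums of size q overlap in at least 2q - n nodes, and that overlap
    must exceed f, which gives q >= (n + f + 1) / 2.
    """
    return (n + max_faulty(n)) // 2 + 1


def can_reach_agreement(n: int, malicious: int) -> bool:
    """True if the honest nodes can still agree under the classical bound."""
    return malicious <= max_faulty(n)


for n in (4, 7, 99, 100):
    print(n, max_faulty(n), quorum(n))
```

Note that exactly one-third is already too many under this bound: 99 nodes tolerate 32 malicious ones, not 33, while 100 nodes tolerate 33.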

Decentralized platforms not only involve token transfers between parties, but also rely on consensus algorithms to establish a barrier and keep malicious actors at bay. However, efficiency and decentralization are difficult to achieve simultaneously, and it's challenging to create a fully decentralized system that can both protect nodes and allow for coordination and data synchronization among them. Dominic's goal is to merge trusted computing and blockchain to create a limitless, open, high-performance, strongly consistent, scalable, and data-intensive blockchain network composed of servers from all over the world, without the need for firewalls.

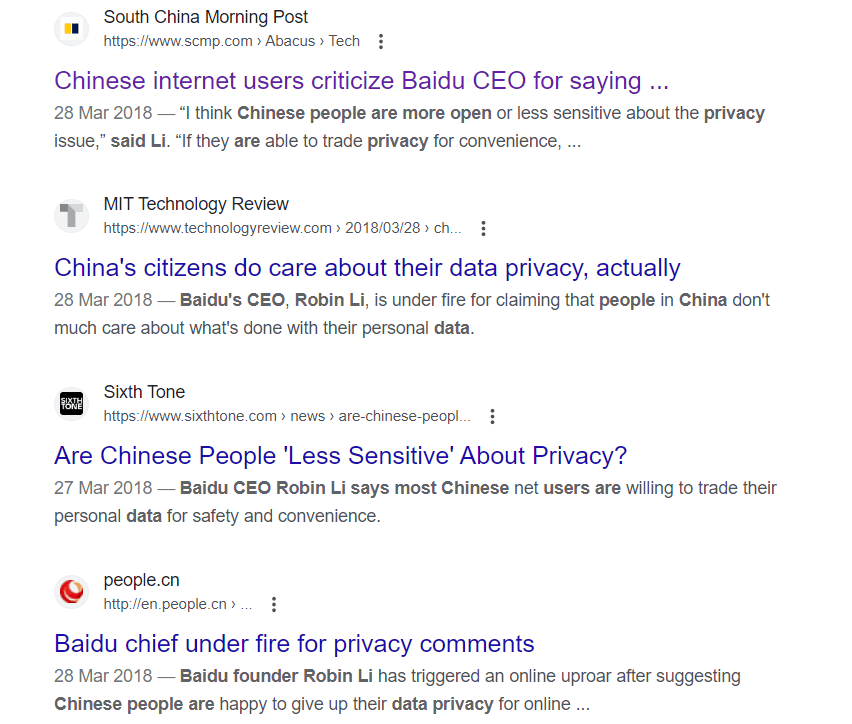

For Dominic, the future of blockchain is the future of the internet, and vice versa. The internet is no longer just about connecting servers in data centers to users, but first forming a trusted and secure blockchain network composed of servers from around the world, and then deploying apps and serving users on top of it. Dominic hopes that banking and finance, the sharing economy (such as Uber), social networks, email, and even web searches can all be transferred to such a network.

In retrospect, Ethereum made the right call in not adopting Dominic's ideas back then. While focused on proof-of-work (PoW), Ethereum was also exploring upgrade paths to proof-of-stake (PoS), and the blueprint he outlined was too ambitious to realize in a limited timeframe. Achieving his vision would have required an enormous, stellar team relentlessly researching, inventing new cryptographic techniques, and more.

In the fall of 2016, Dominic announced his return as a "decentralized adventurer". With the theoretical framework in place, the dreamer's adventure had officially begun!

DFINITY !

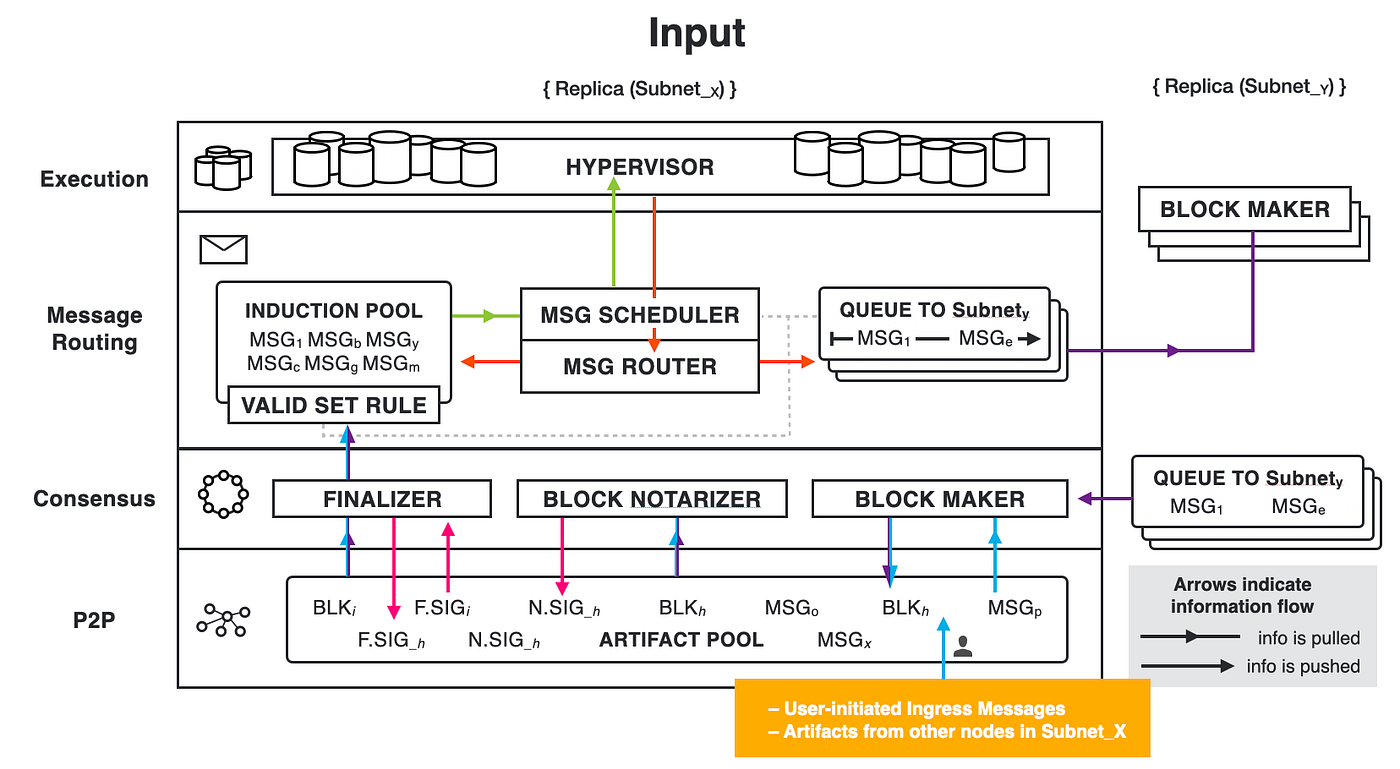

IC re-designed the blockchain architecture and developed more efficient consensus, along with innovative cryptographic combinations, in order to achieve the idea of a "world computer". The goal is to solve the limitations of speed, efficiency, and scalability in traditional blockchain architectures.

Dominic is busy researching with the technical team on his left hand, writing strategic plans for the team on his right hand, and going to various blockchain forums to introduce the project with his mouth.

Over the years, Dominic has shared many critical cryptographic technologies with other blockchain teams, such as the application of VRF, which has been adopted by many well-known projects (such as Chainlink, etc.).

In February 2017, Dominic participated in a roundtable forum with Vitalik Buterin and many other experts. From left to right: Vitalik Buterin (first from left), Dominic (second from left), Timo Hanke (first from right).

Ben Lynn (second from left in the red T-shirt) is demonstrating a mind-blowing technology called Threshold Relay, which can significantly improve the performance of blockchain and quickly generate blocks.

By the way, engineer Timo Hanke (third from left in the middle) was a mathematics and cryptography professor at RWTH Aachen University in Germany before he created AsicBoost in 2013, which increased the efficiency of Bitcoin mining by 20-30% and is now a standard for large-scale mining operations.

Ben Lynn is one of the creators of the BLS signature algorithm, and the "L" in BLS comes from his surname. After graduating from Stanford with a Ph.D., he worked at Google for 10 years before joining DFINITY in May 2017. Even if you haven't heard of the BLS algorithm, you may well have read Ben Lynn's "Git Magic," which was all the rage on the internet a few years ago.

2021 was not an ordinary year.

May 10th. The IC mainnet was launched.

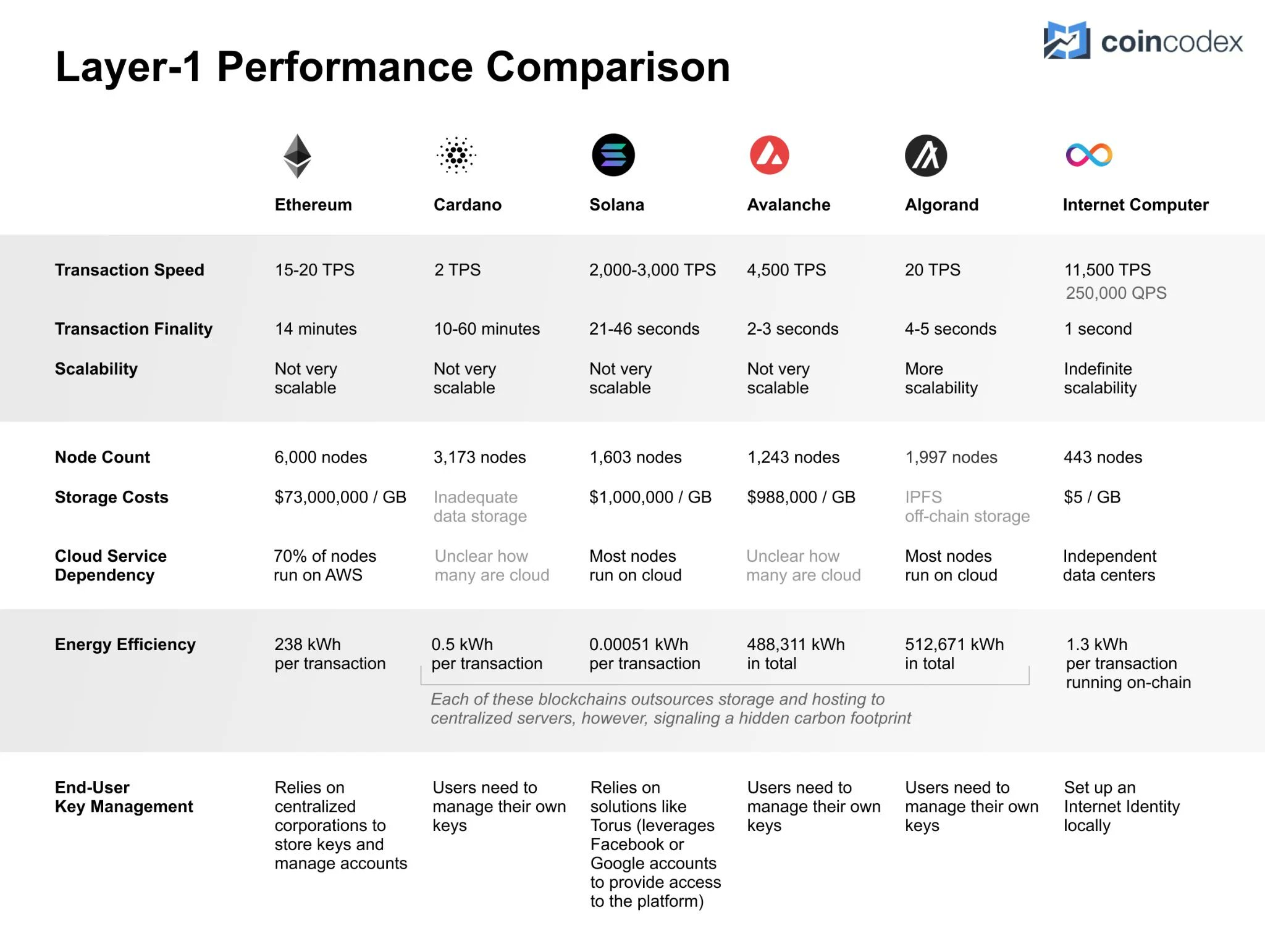

The chart above shows a comparison of performance, storage data costs, and energy consumption against other blockchains.

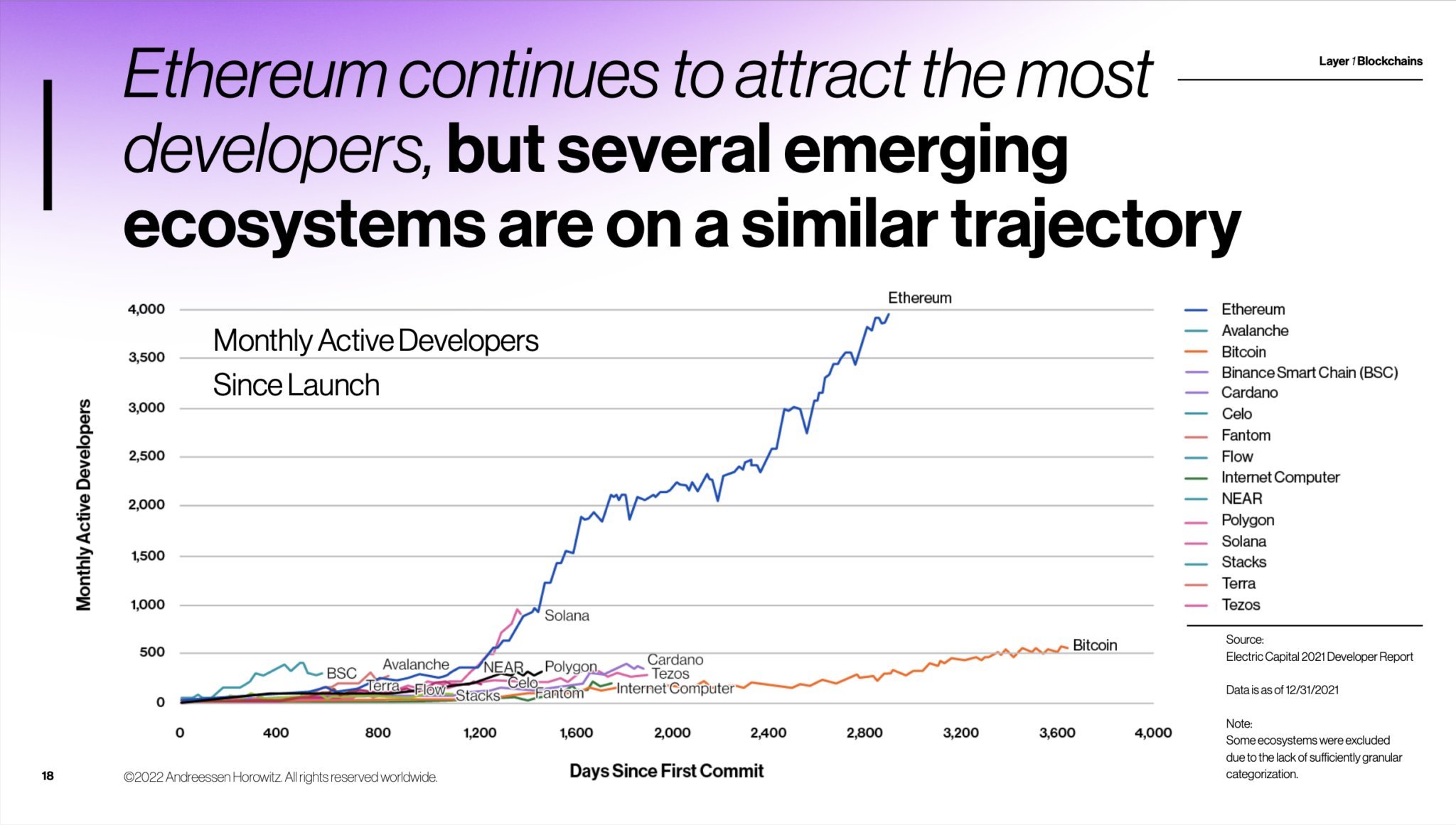

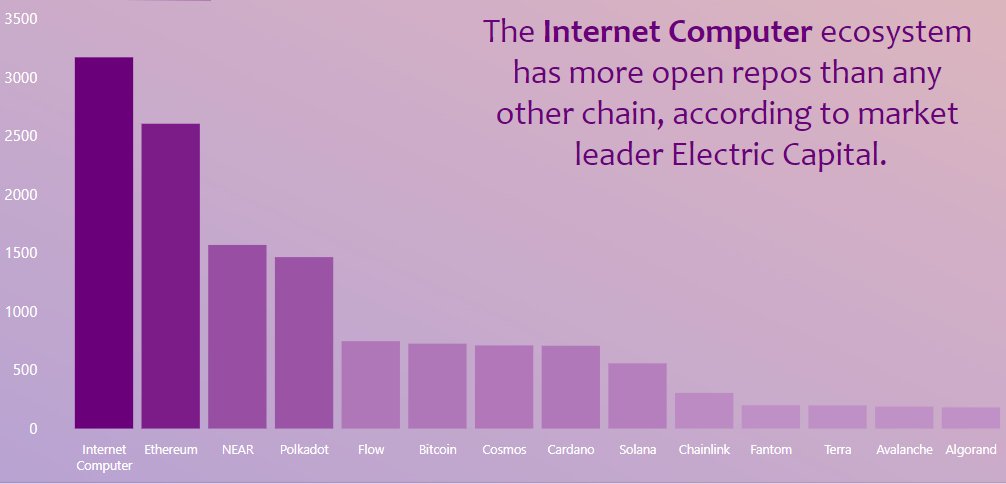

When the IC mainnet went live, there were already over 4,000 active developers. The chart below shows the growth of developers compared to other blockchains.

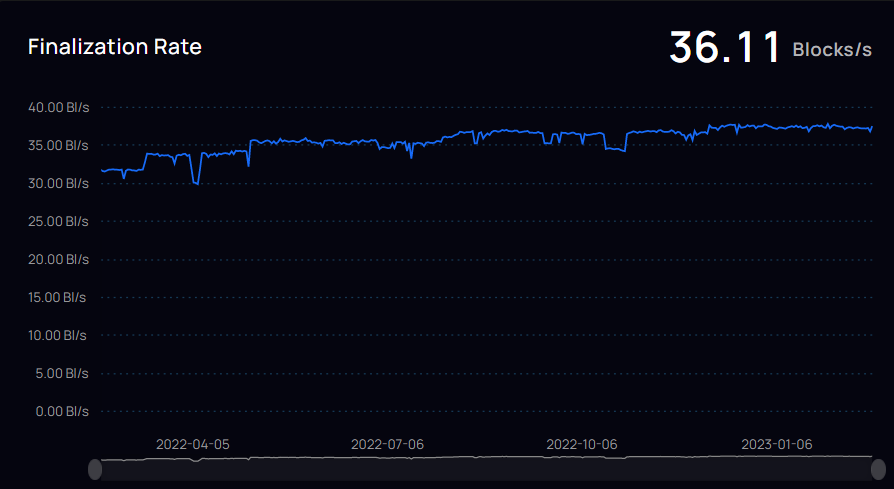

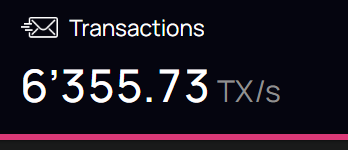

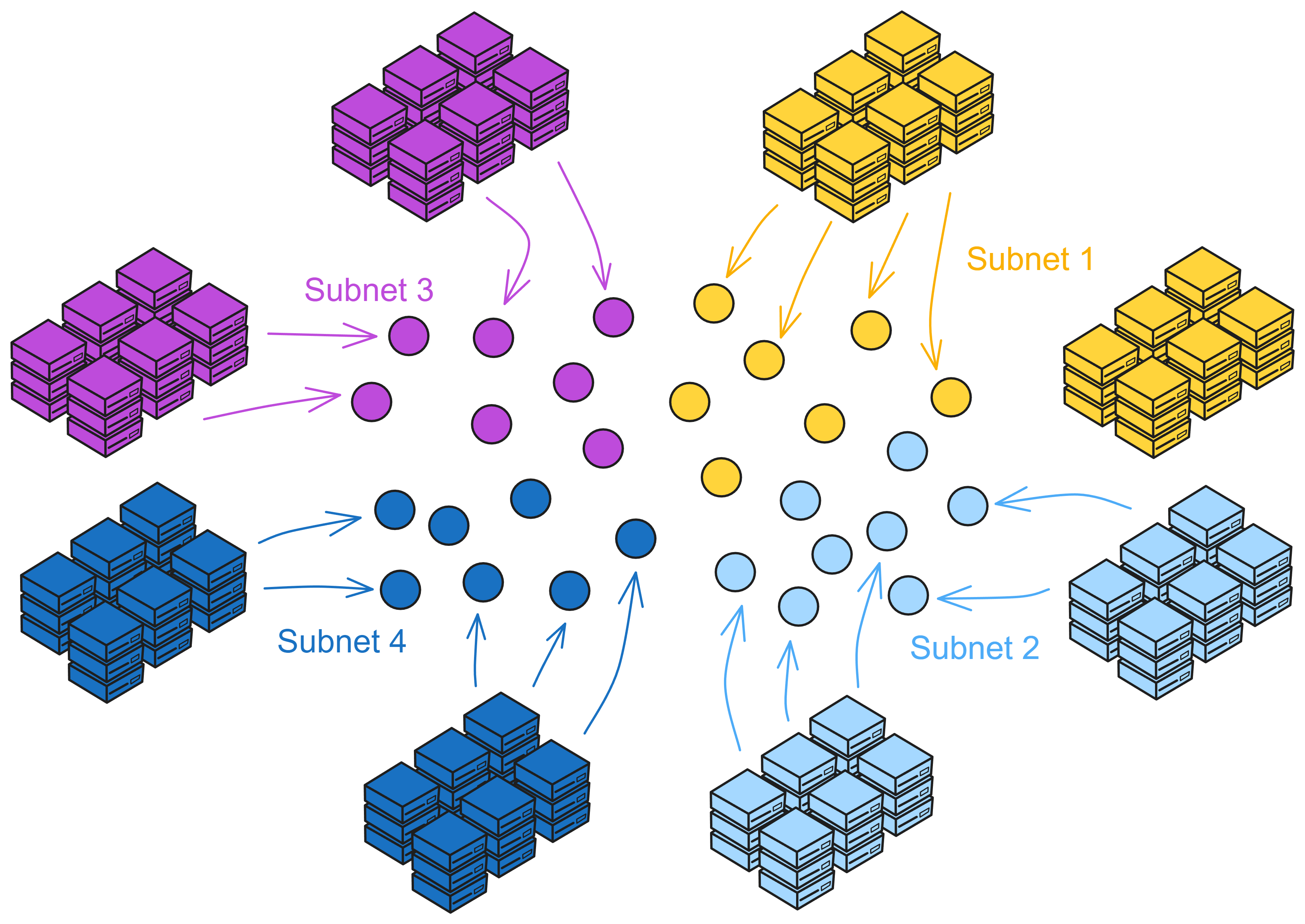

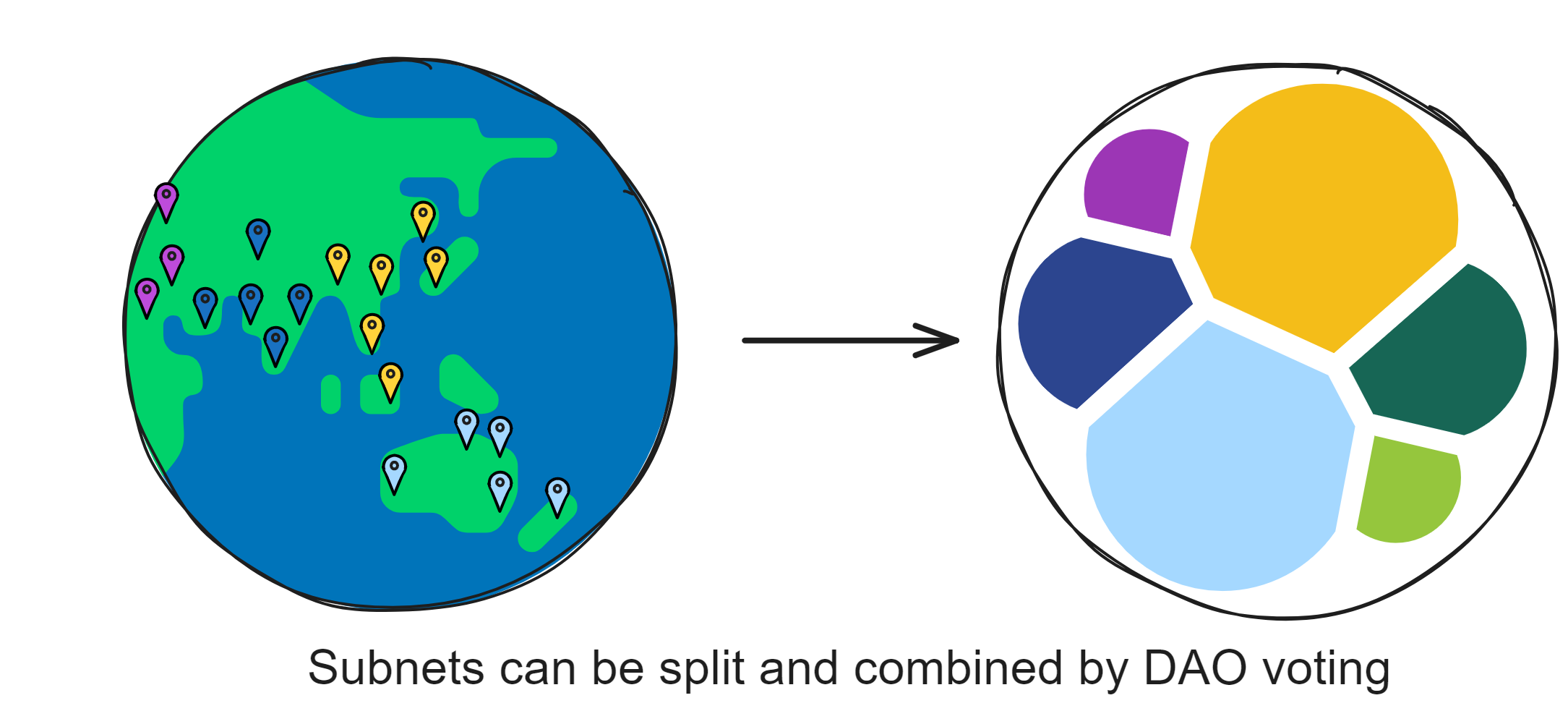

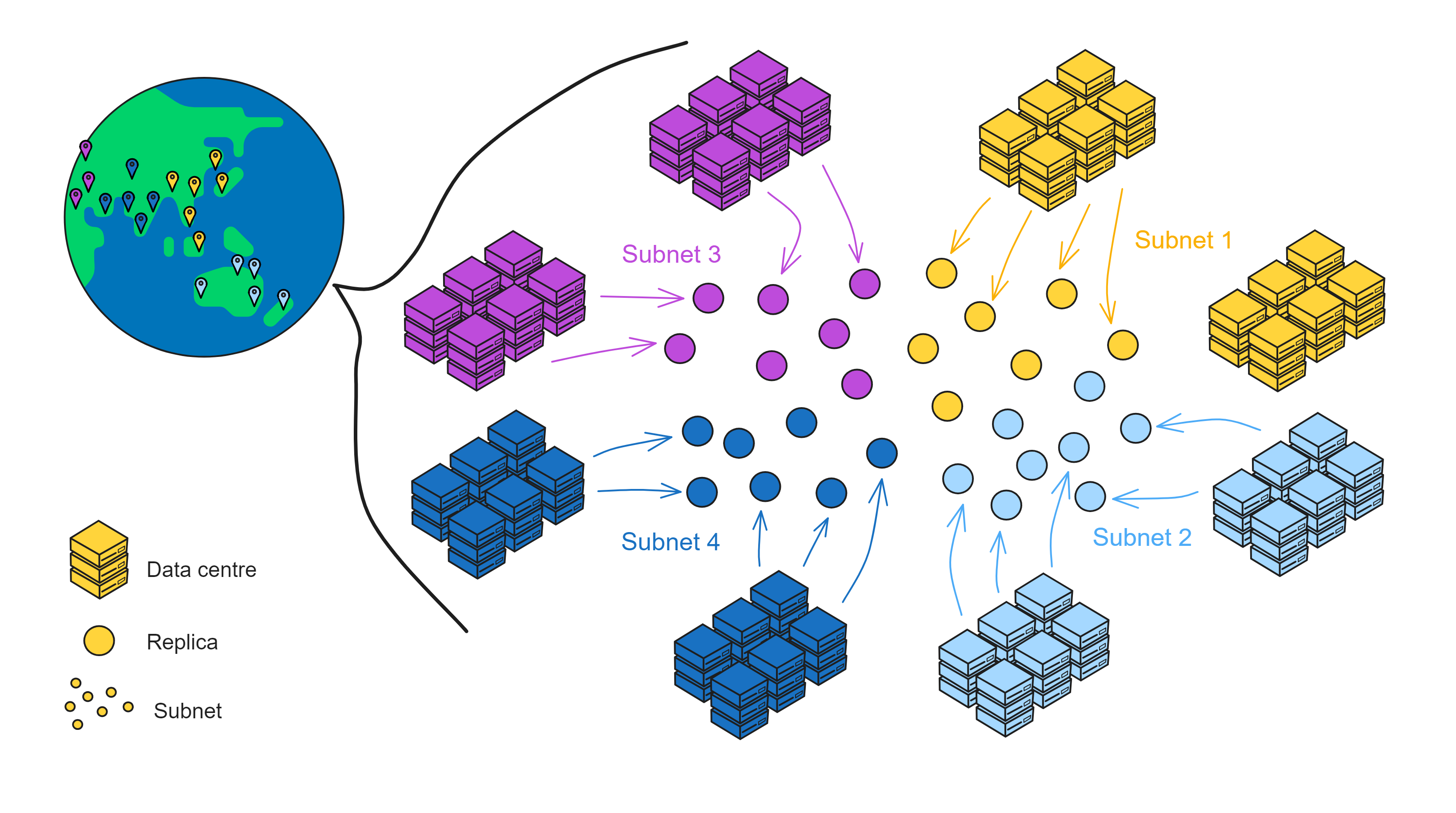

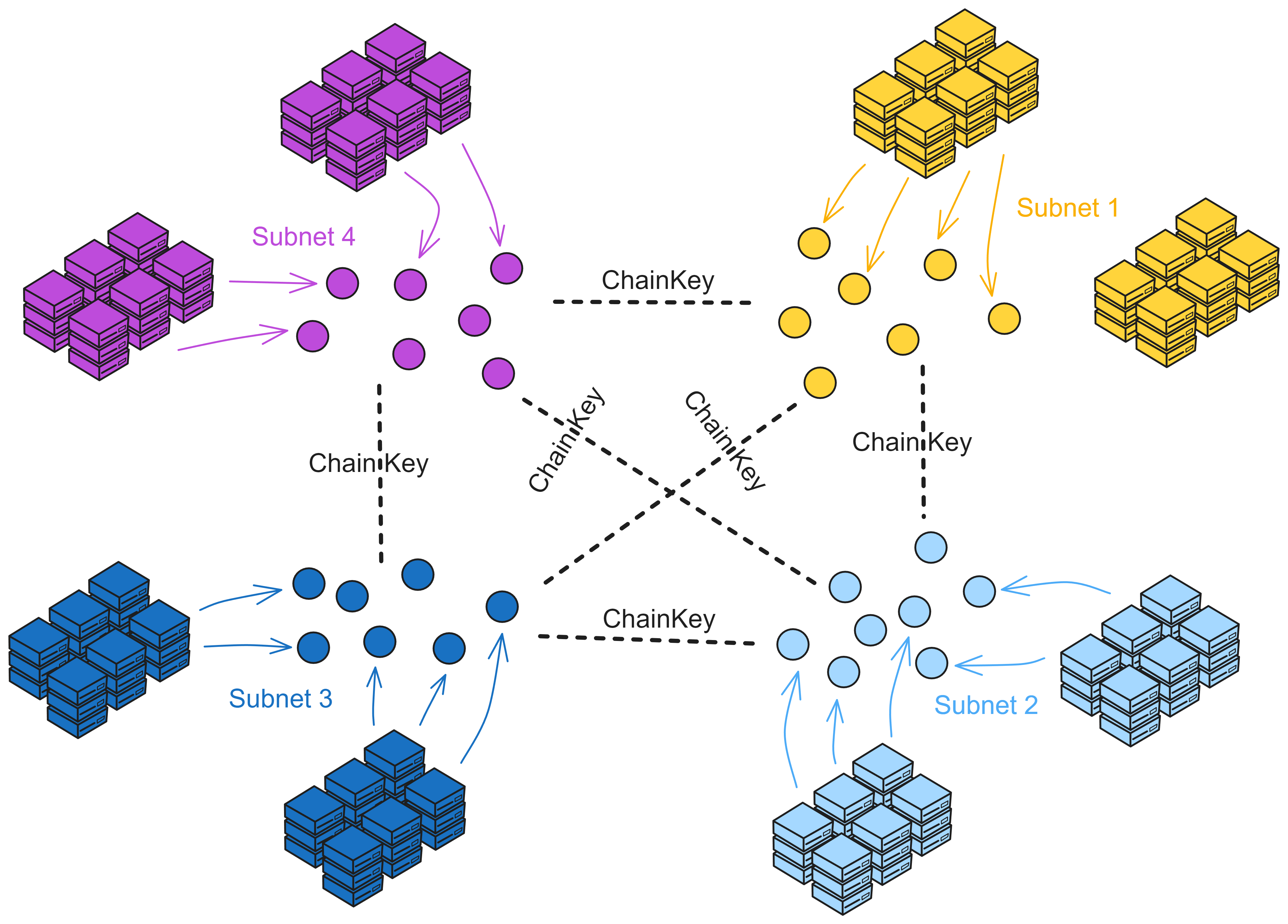

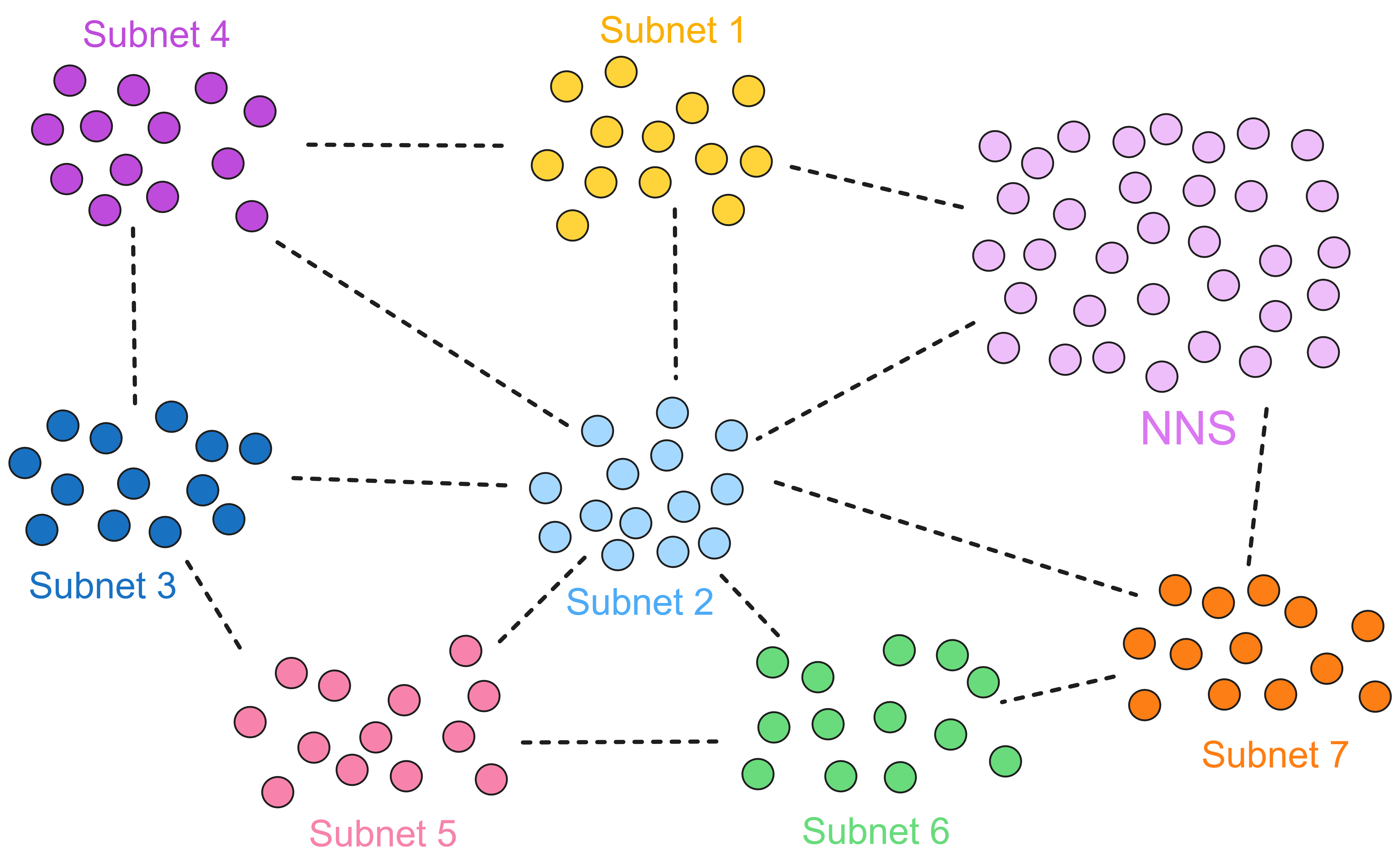

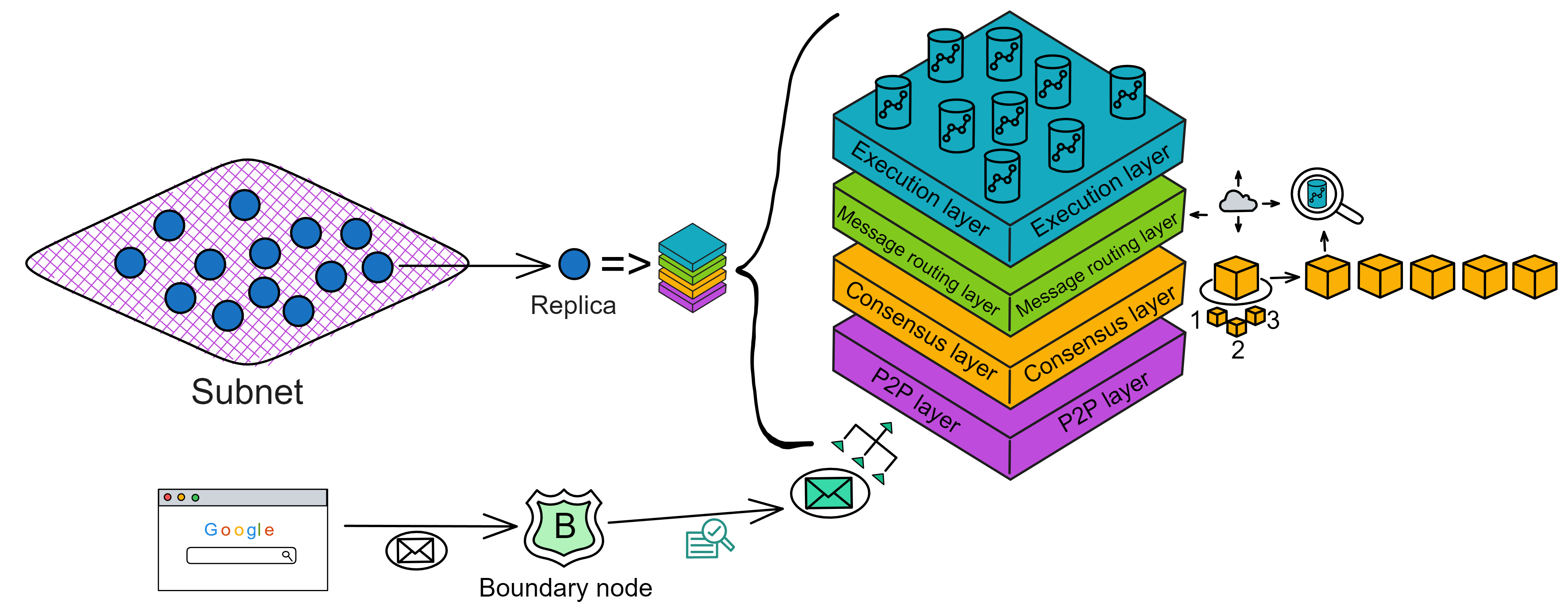

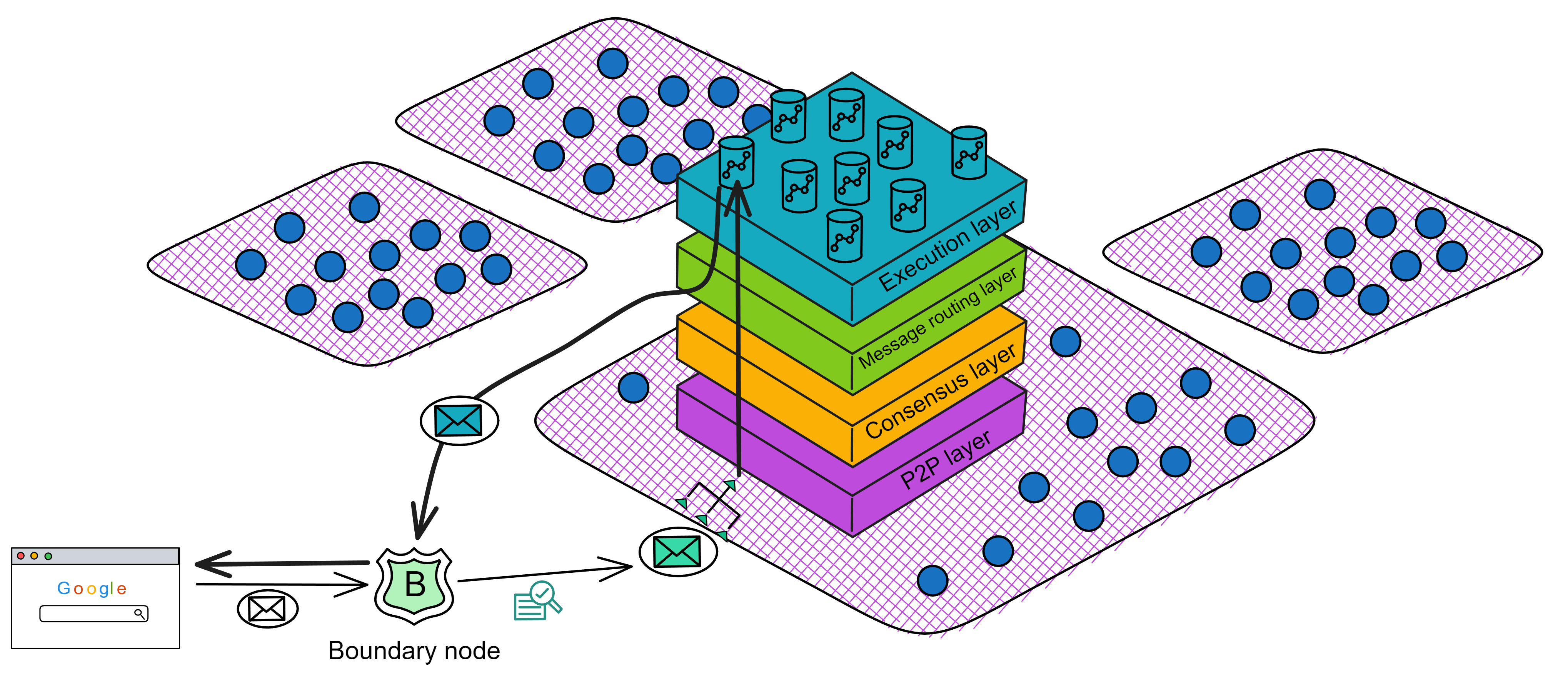

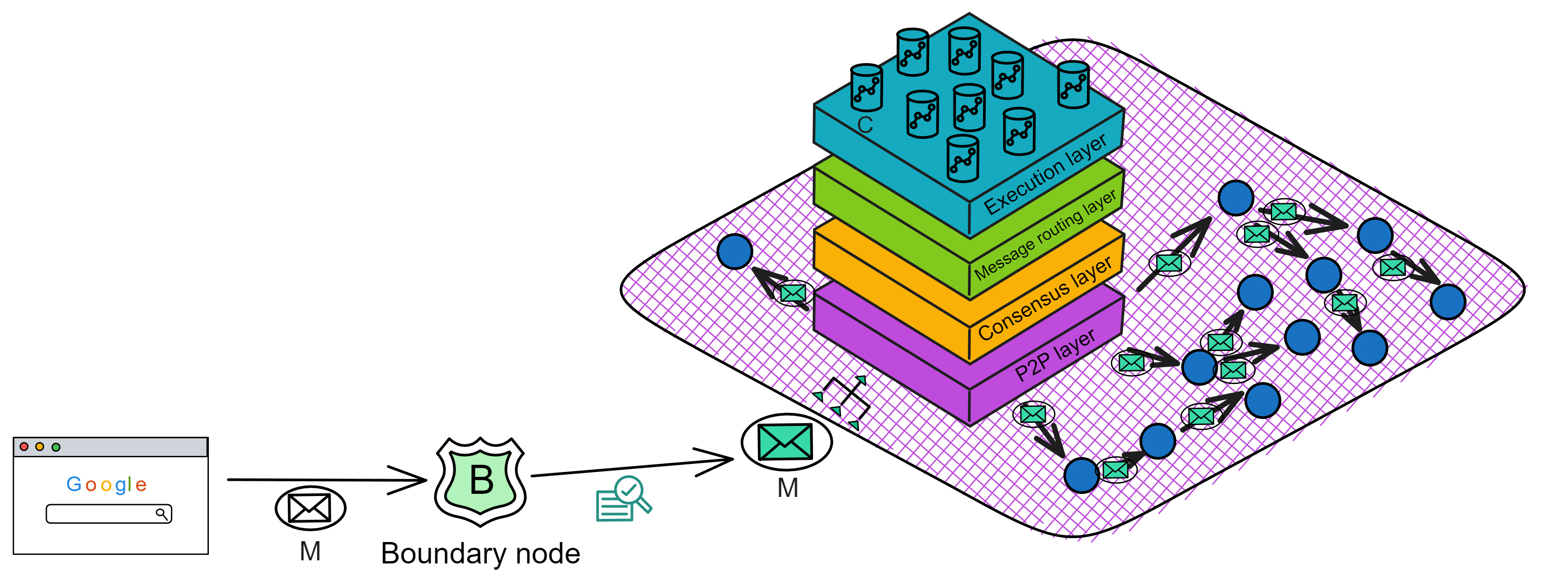

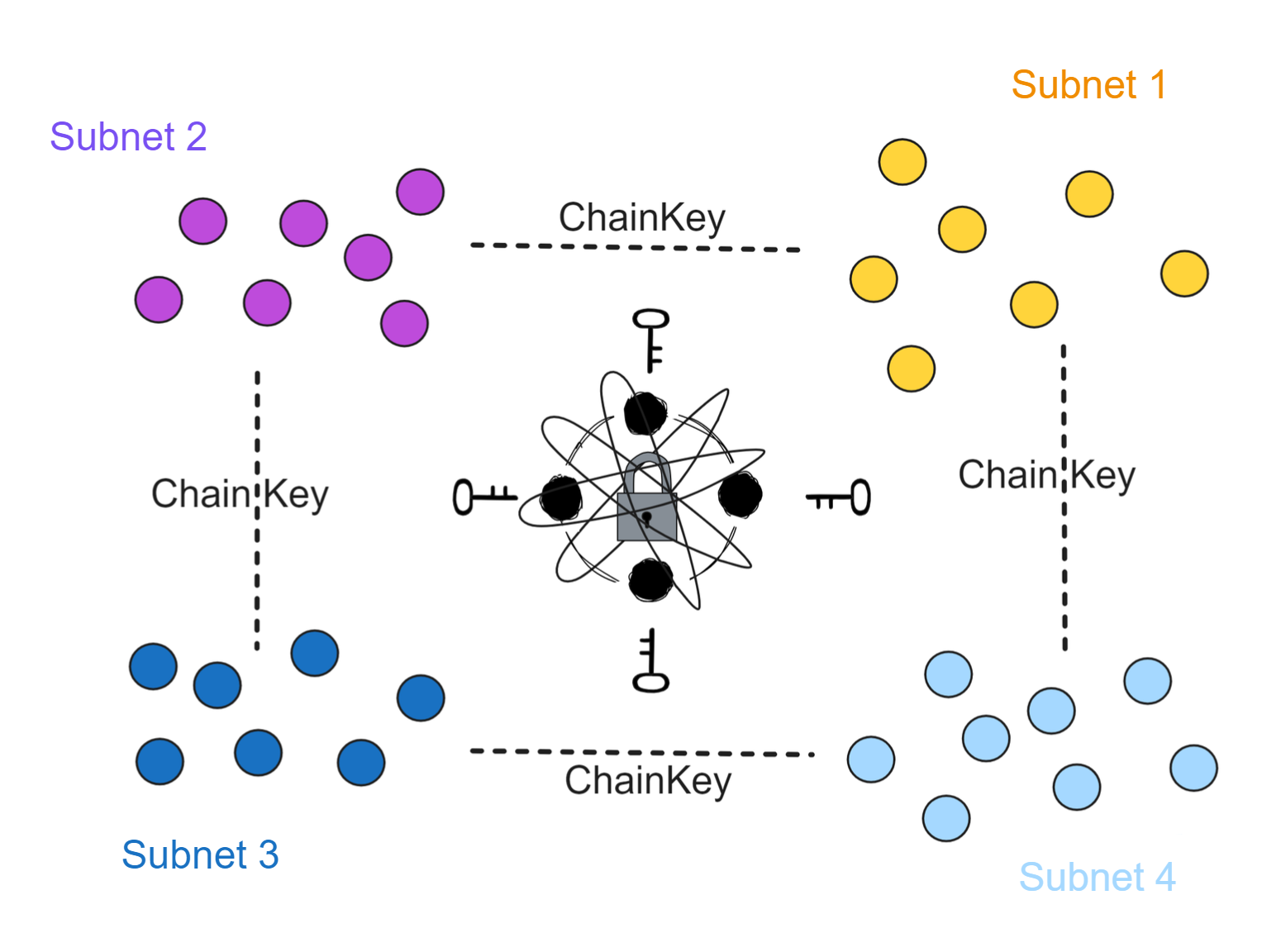

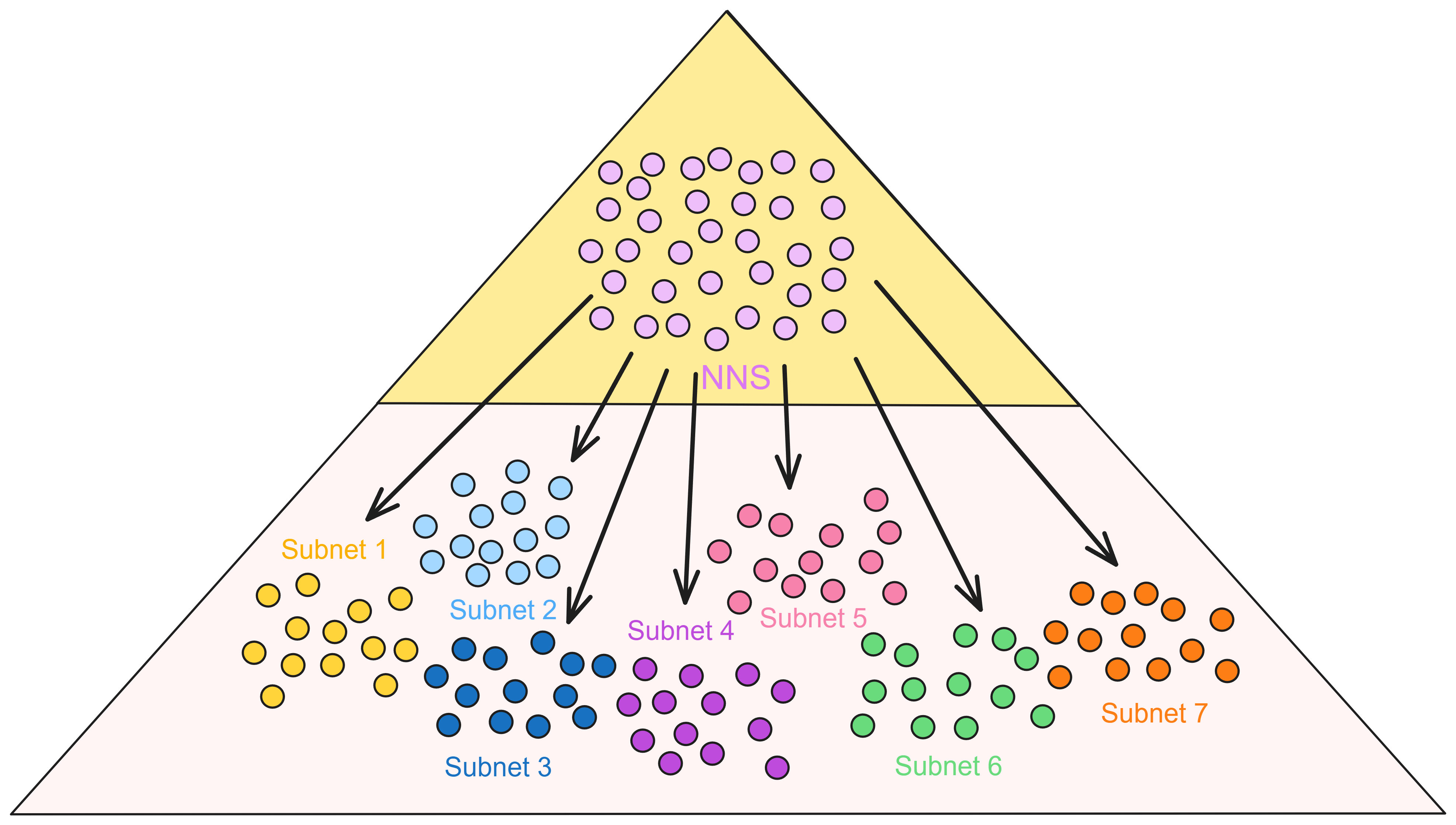

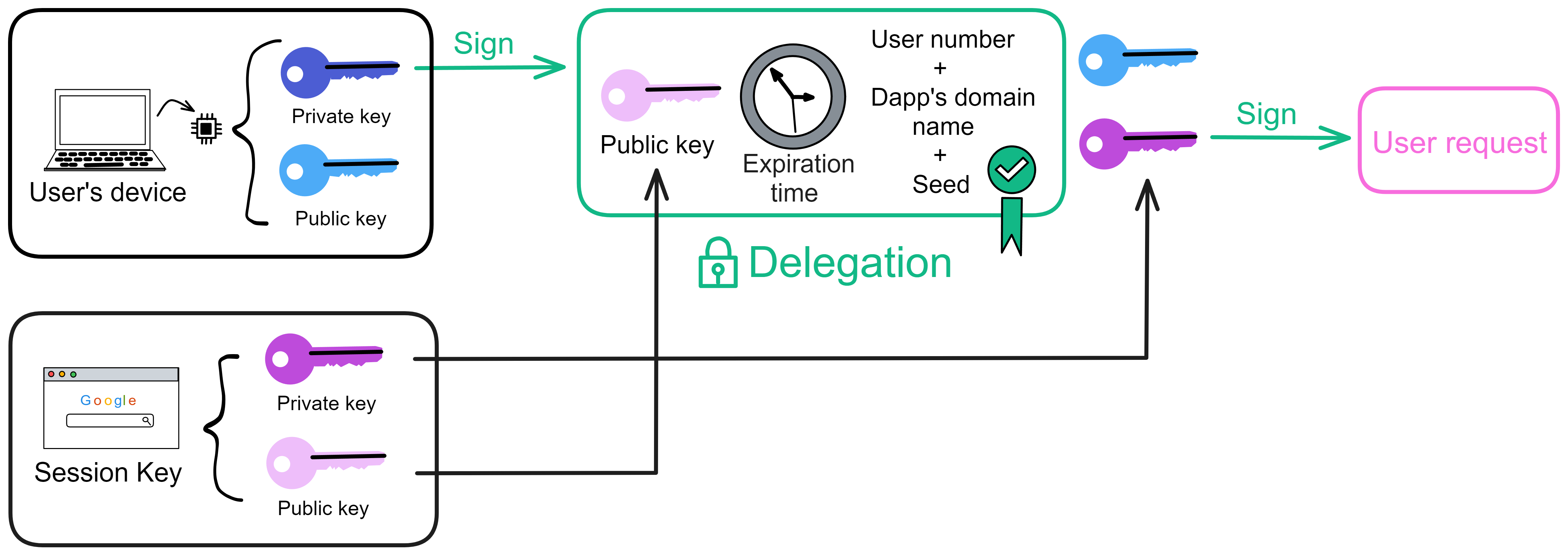

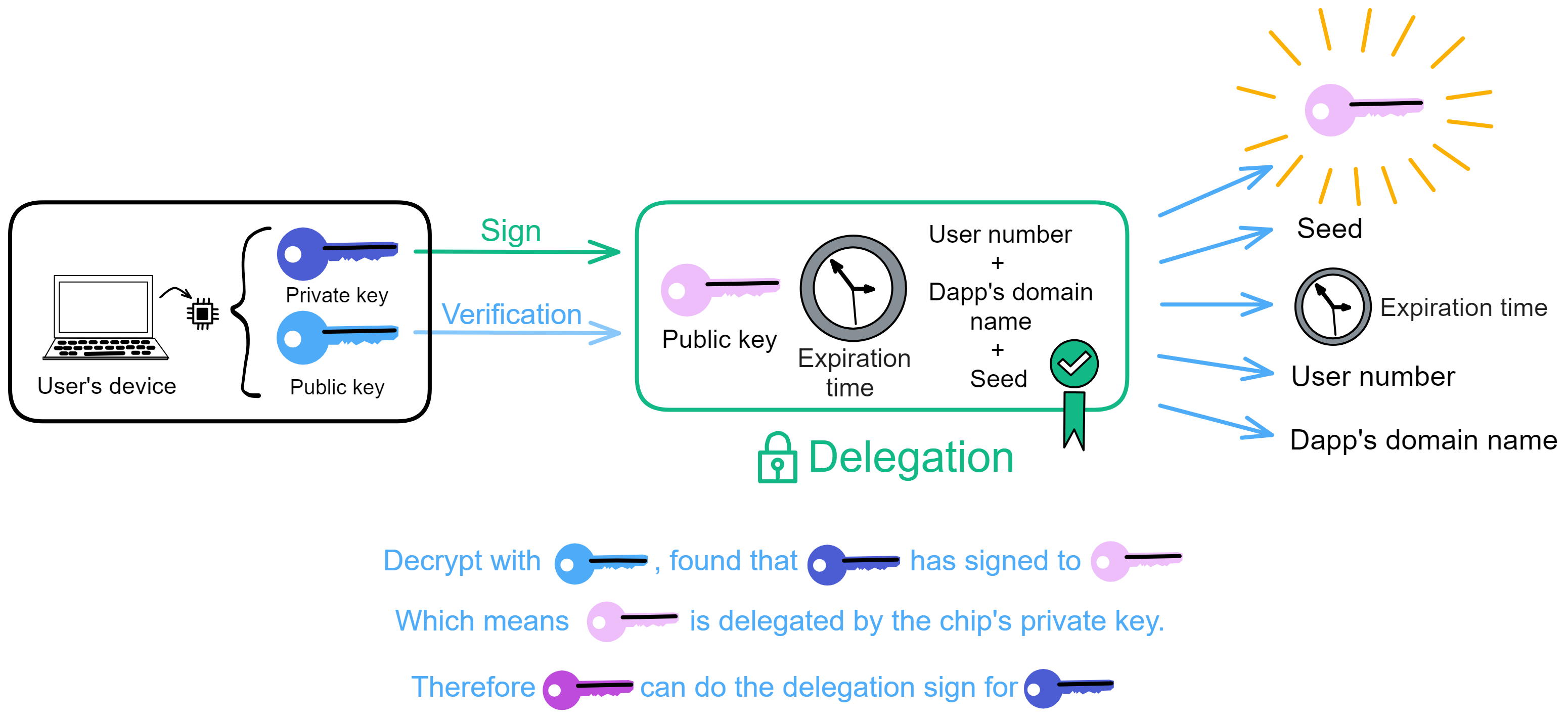

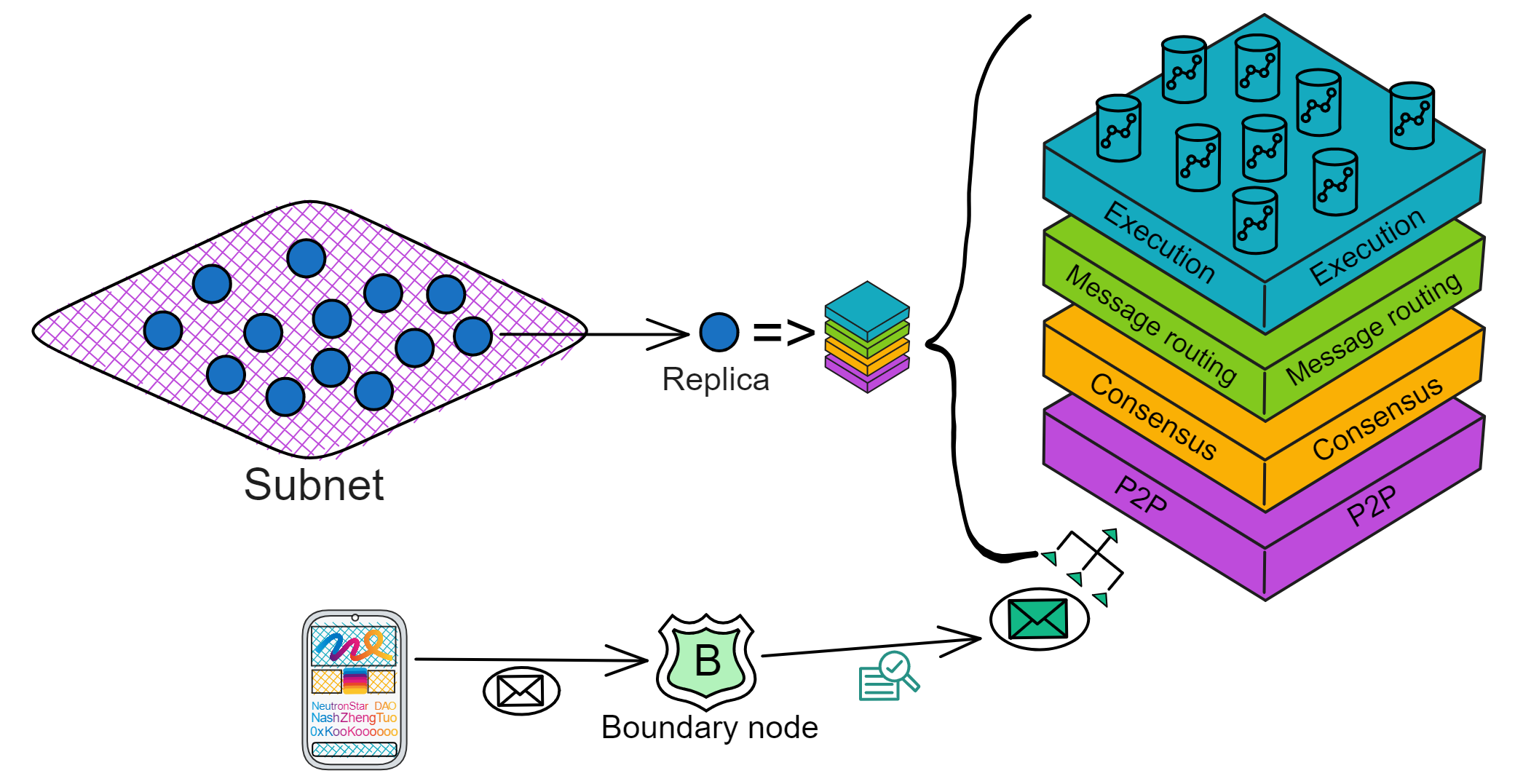

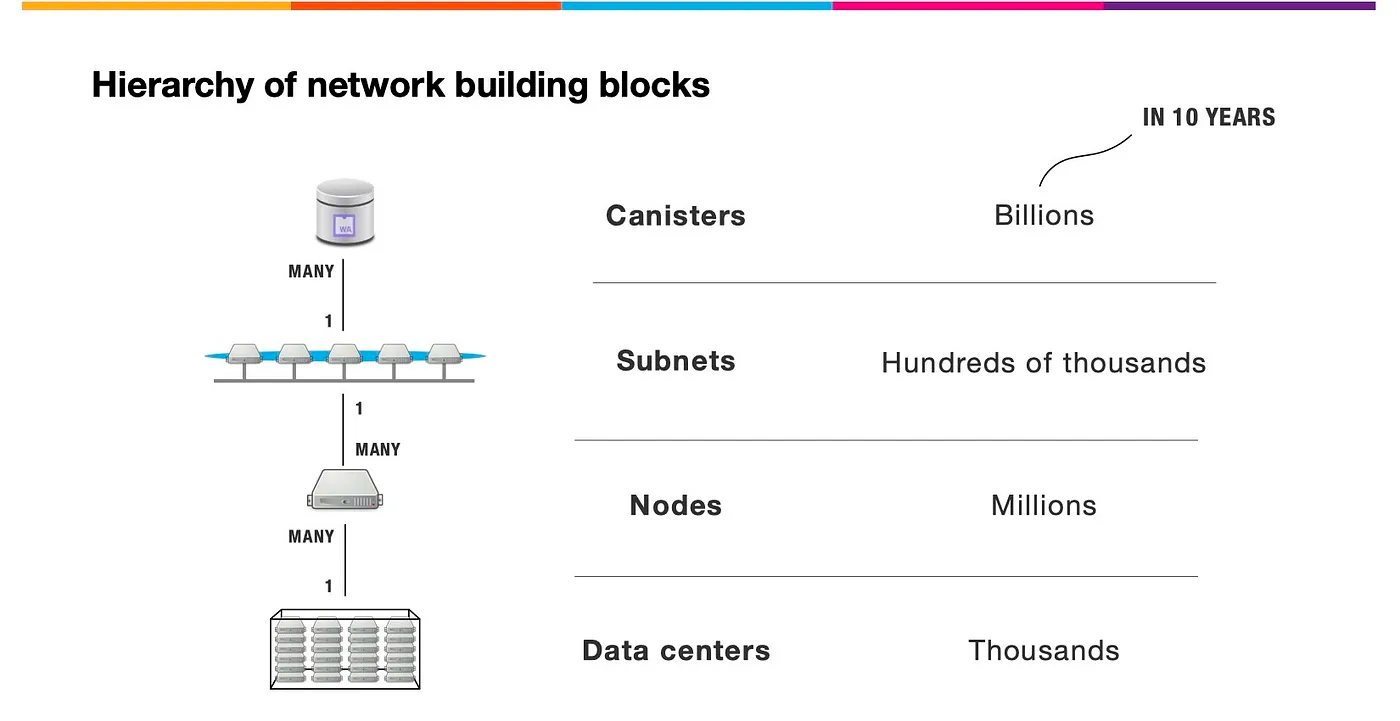

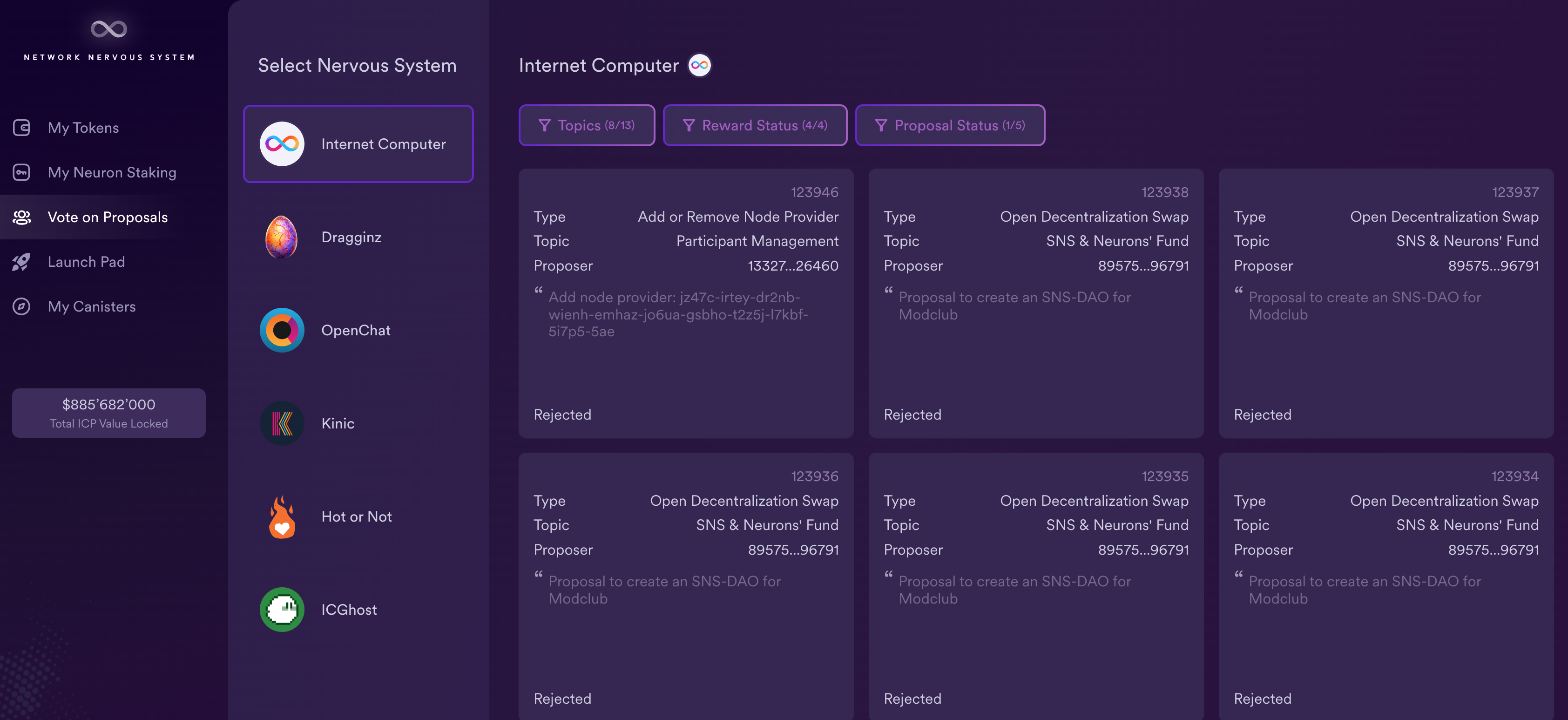

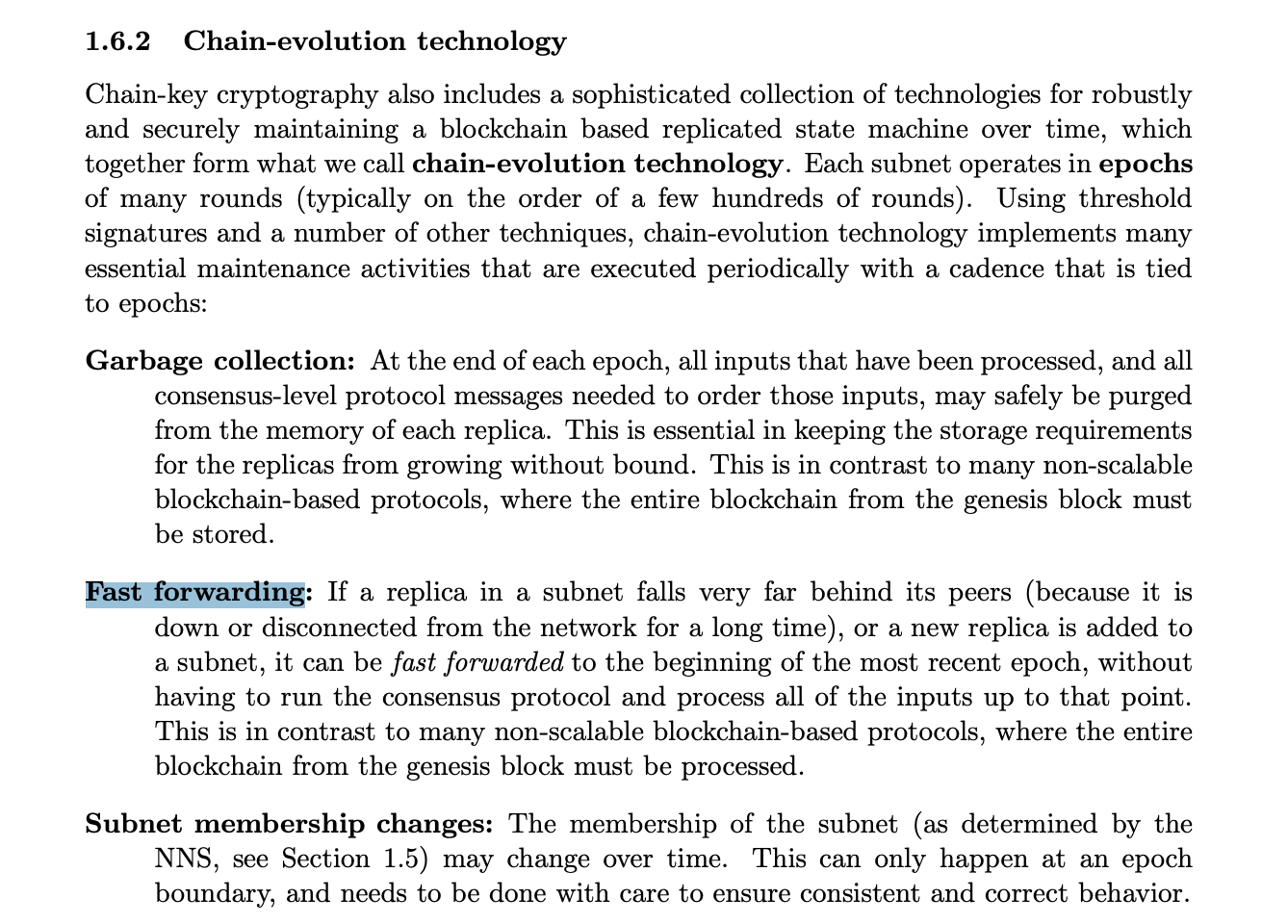

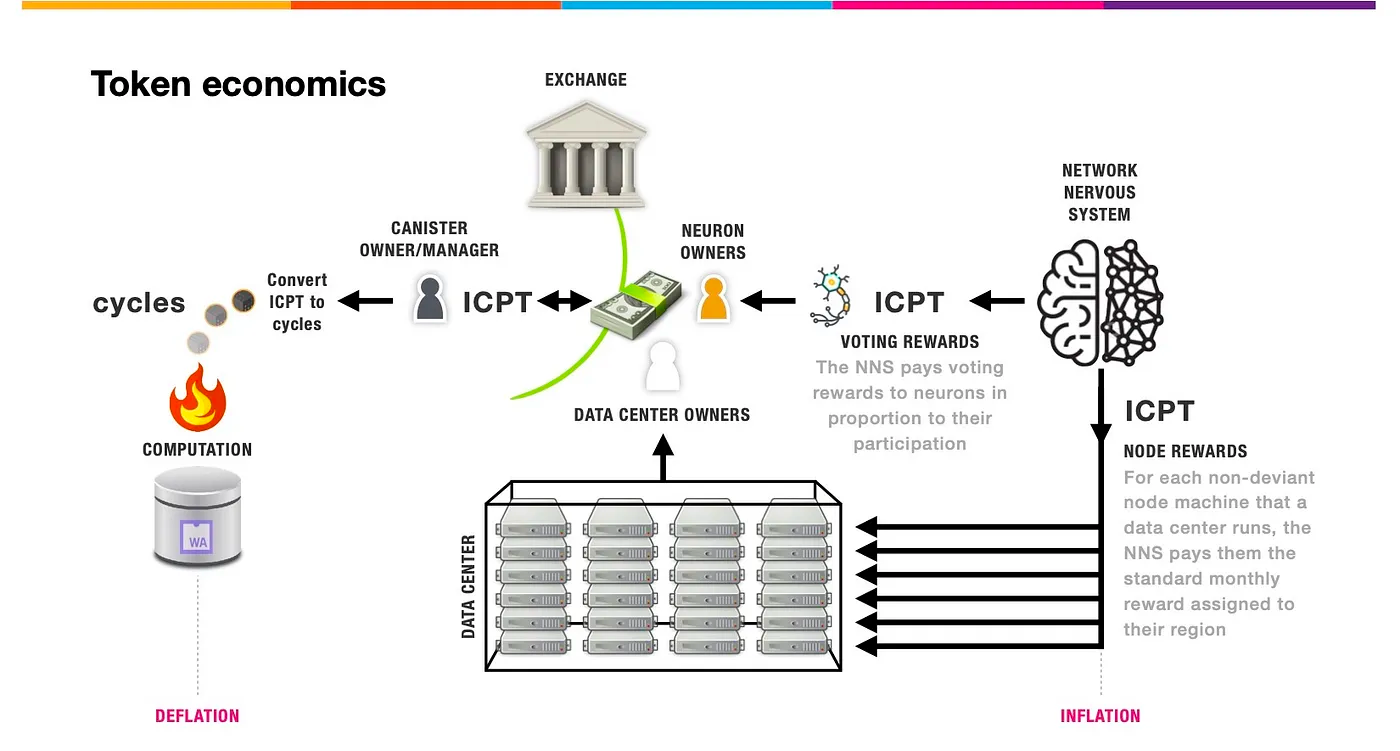

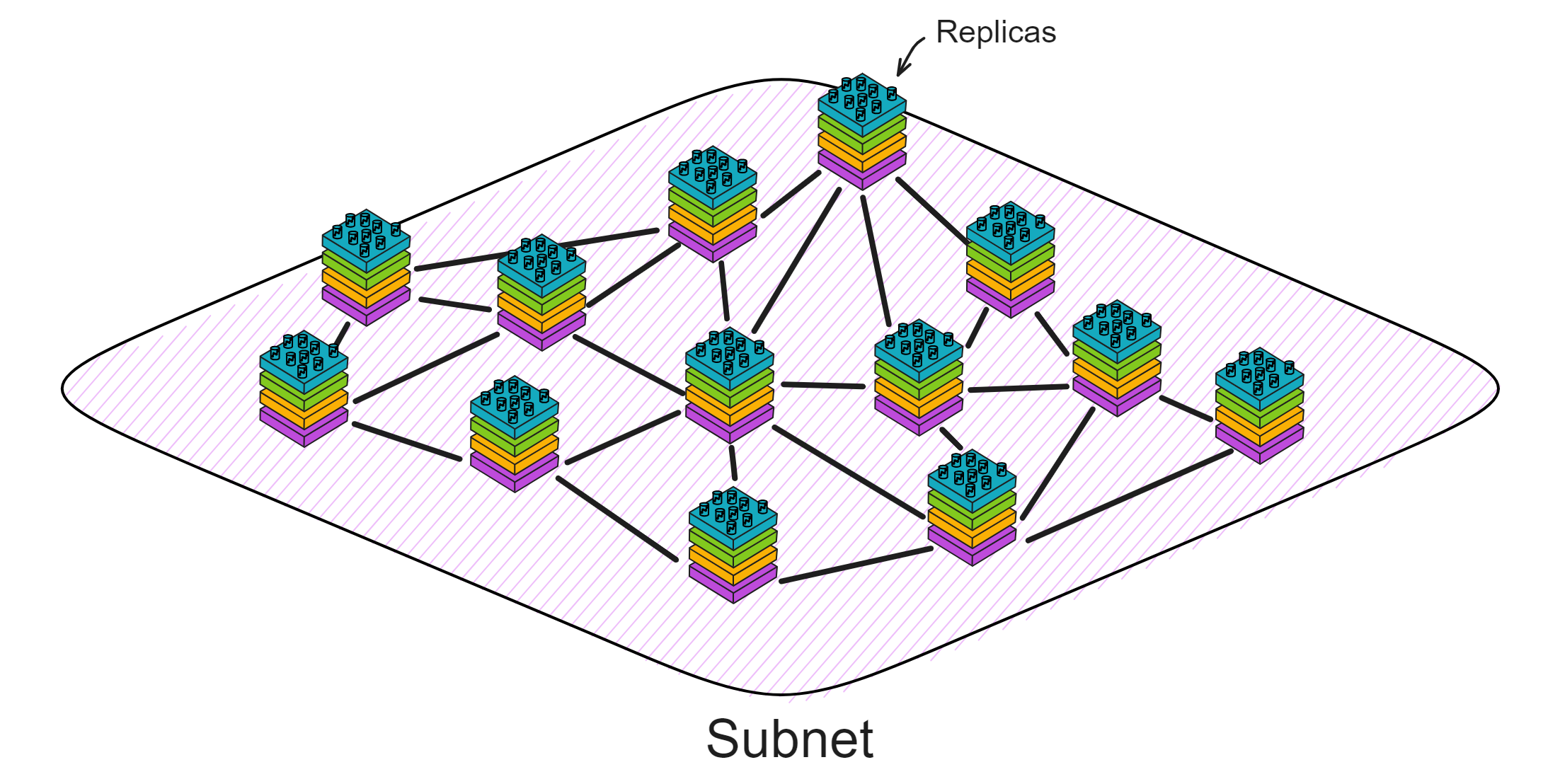

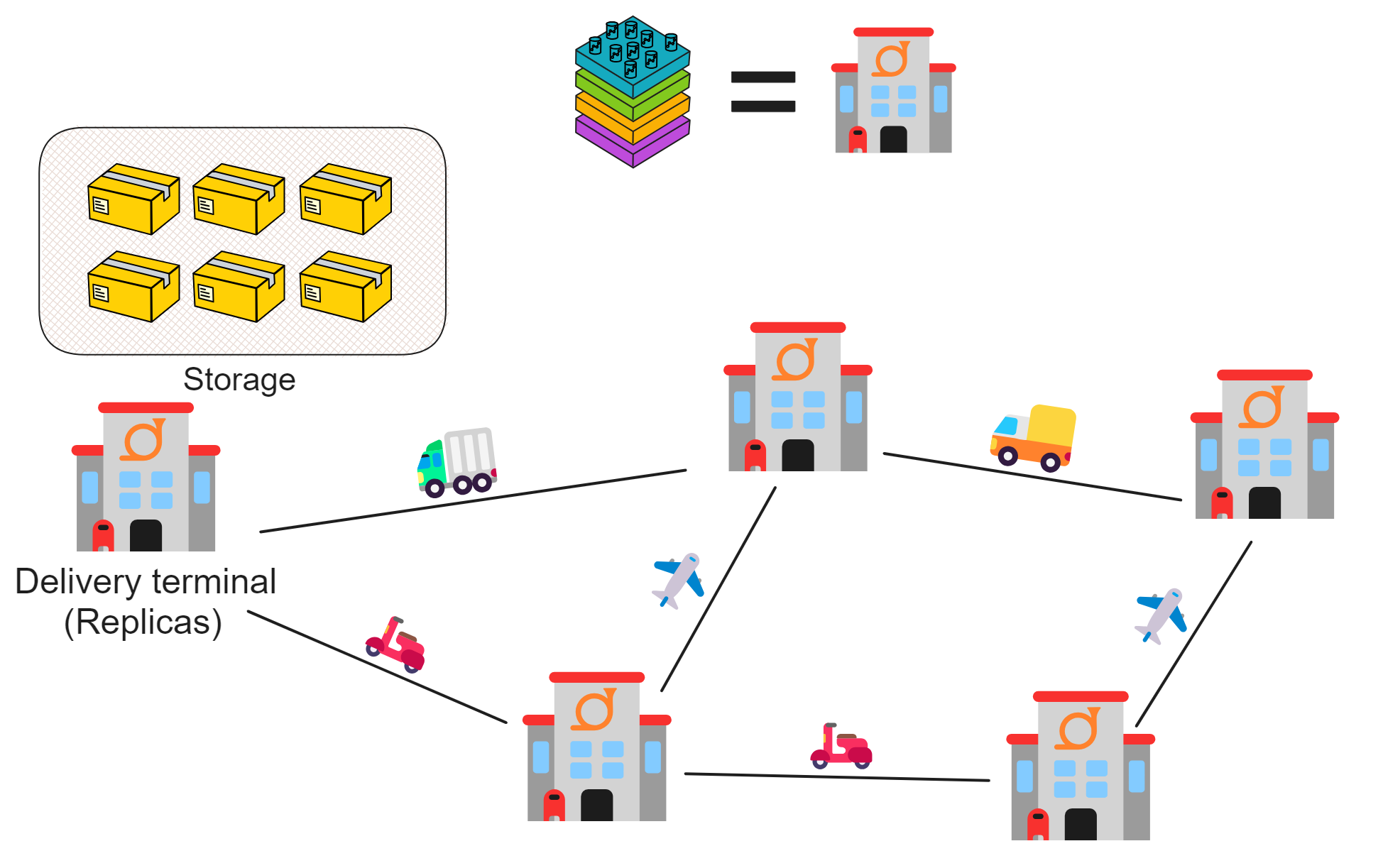

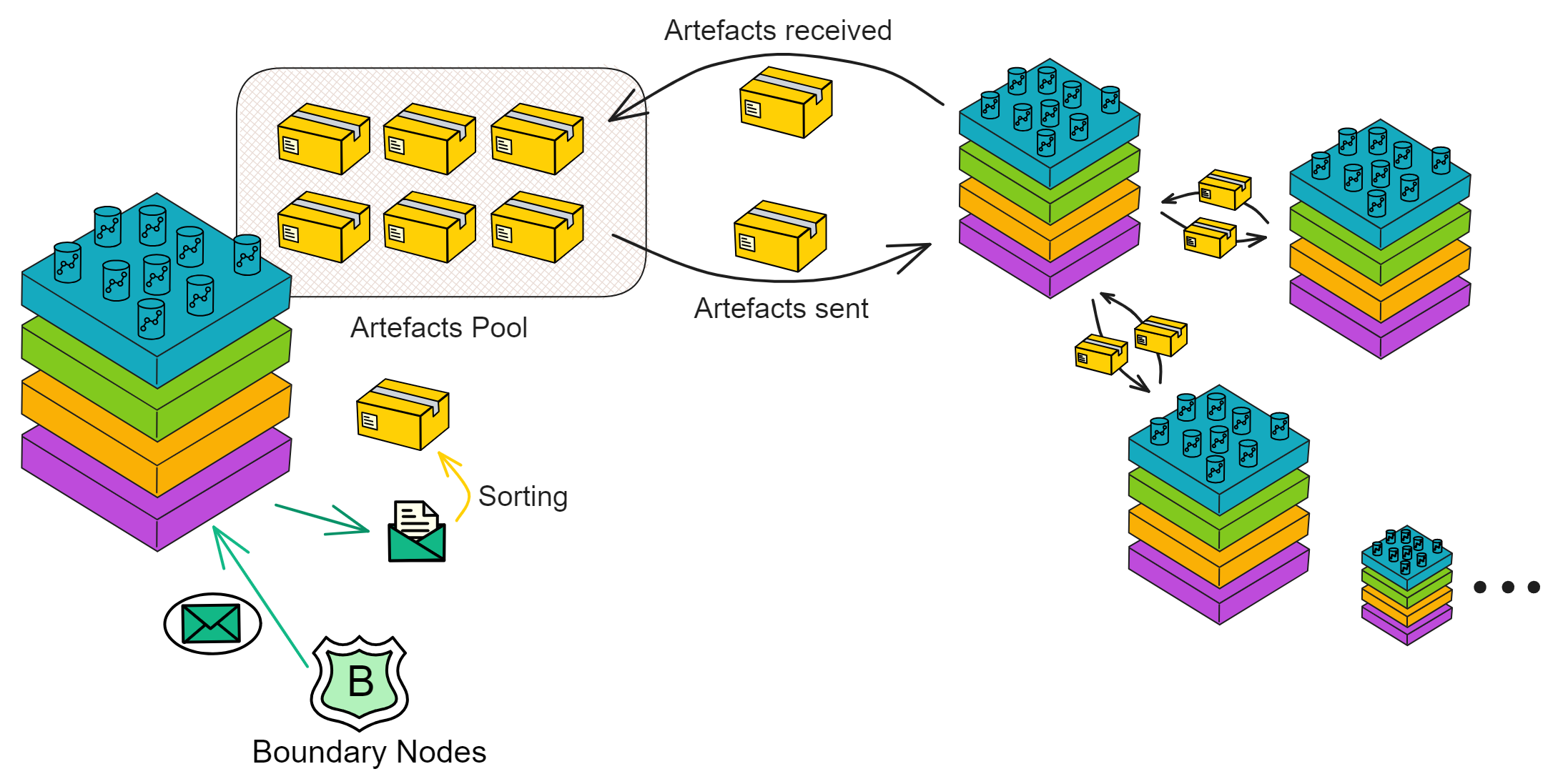

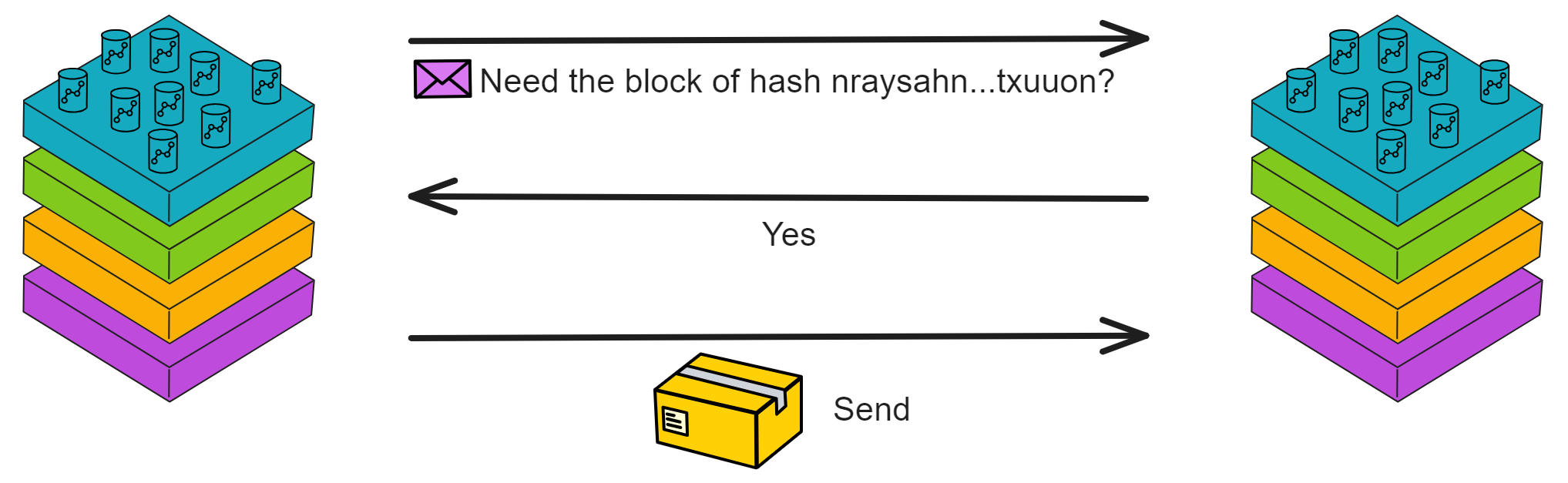

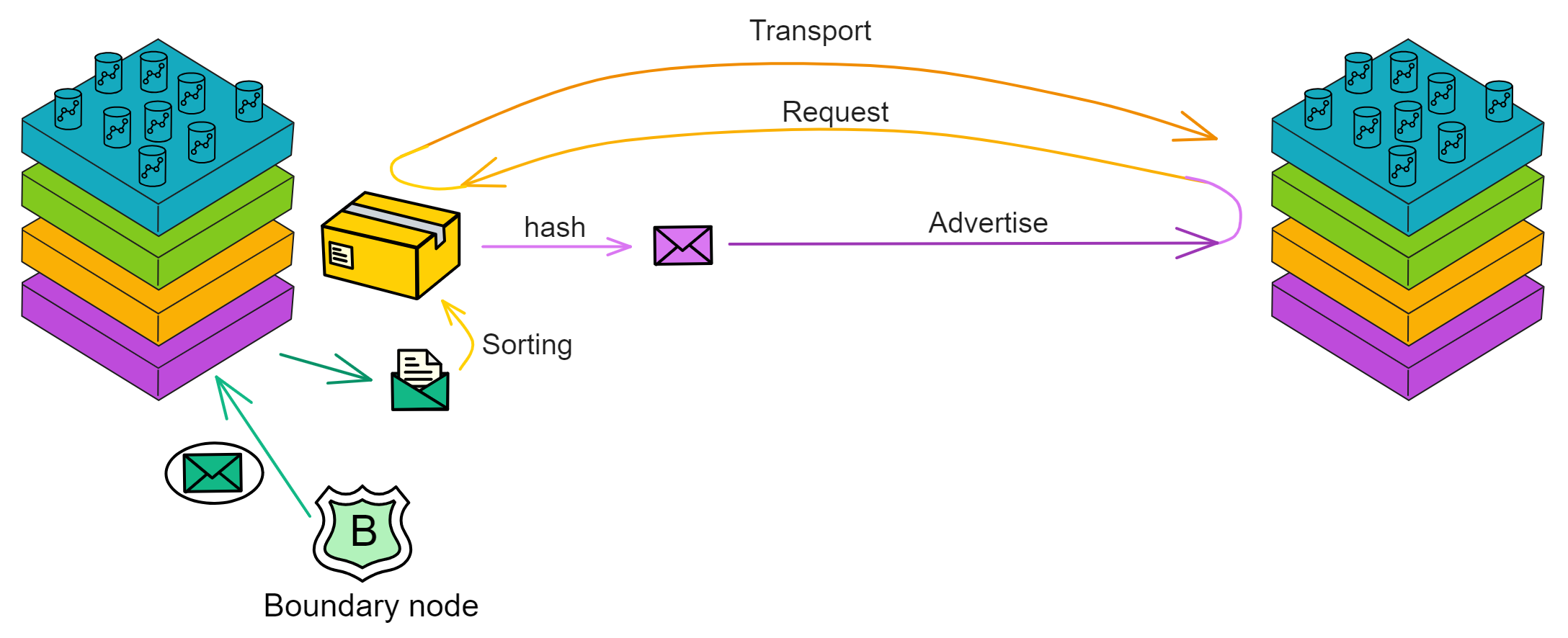

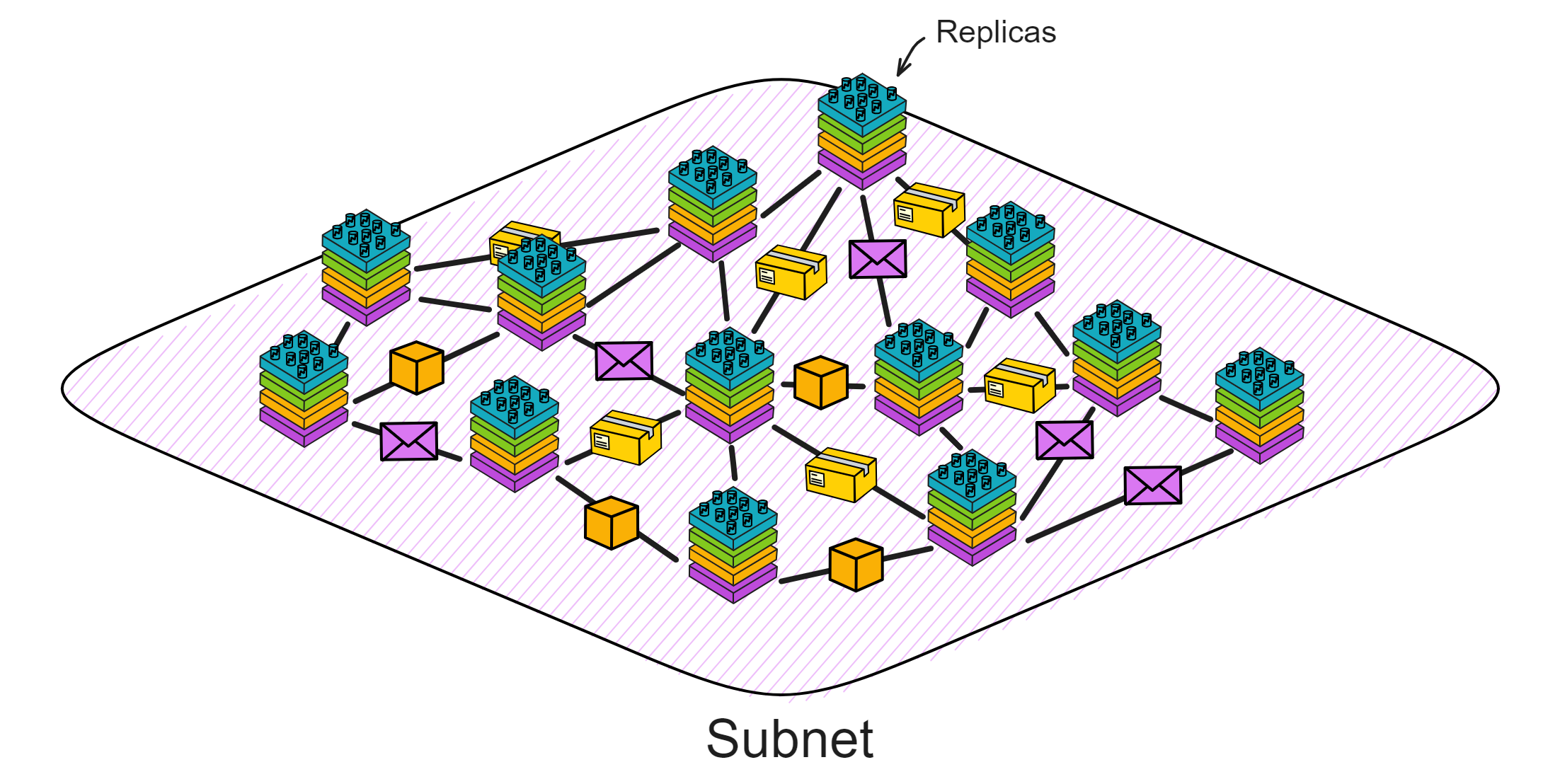

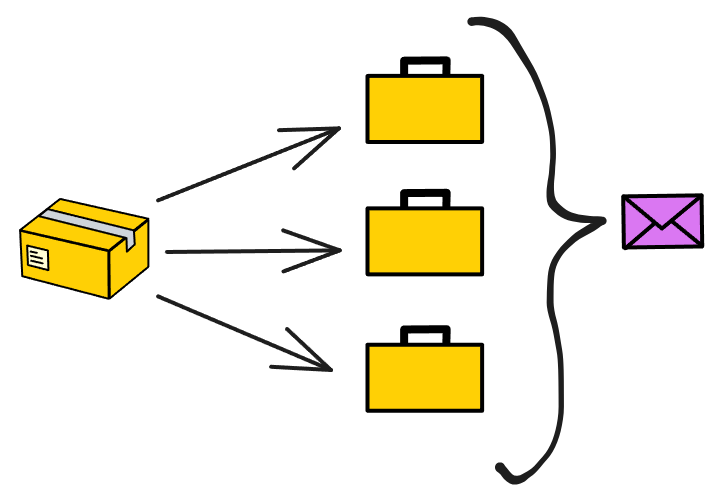

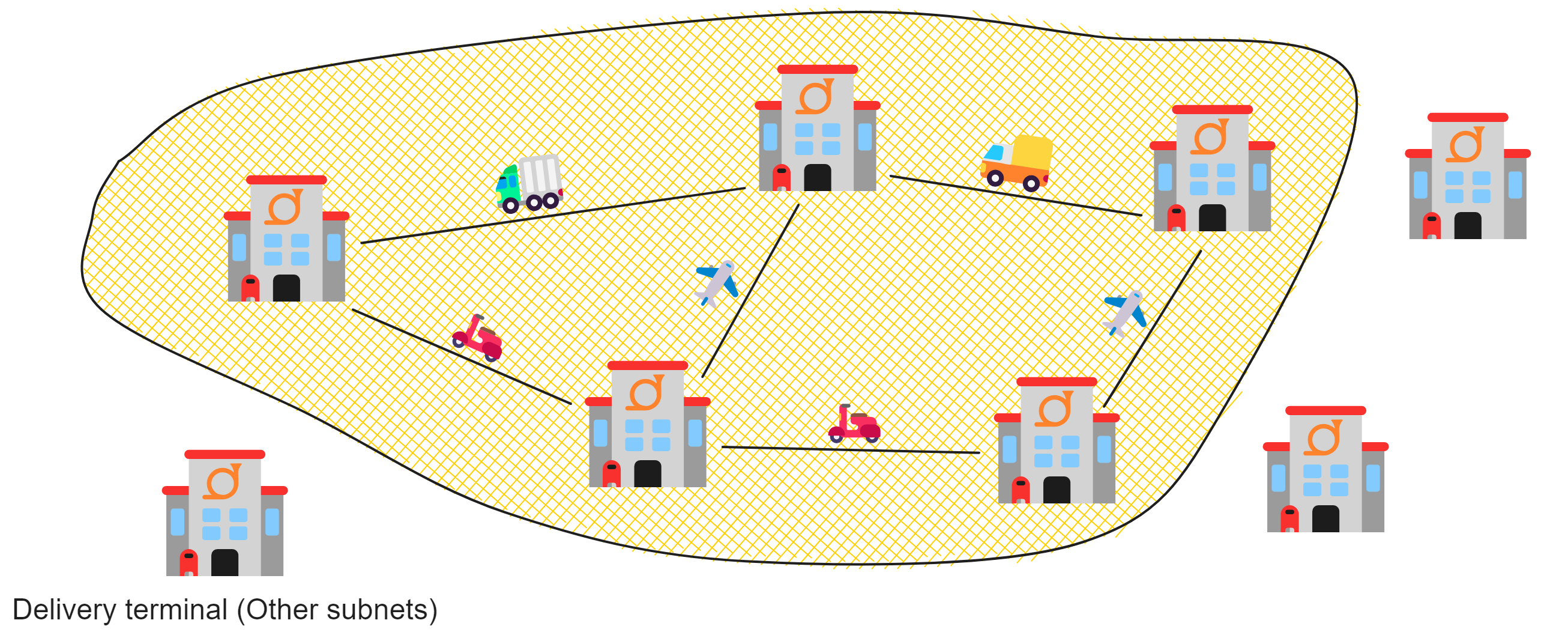

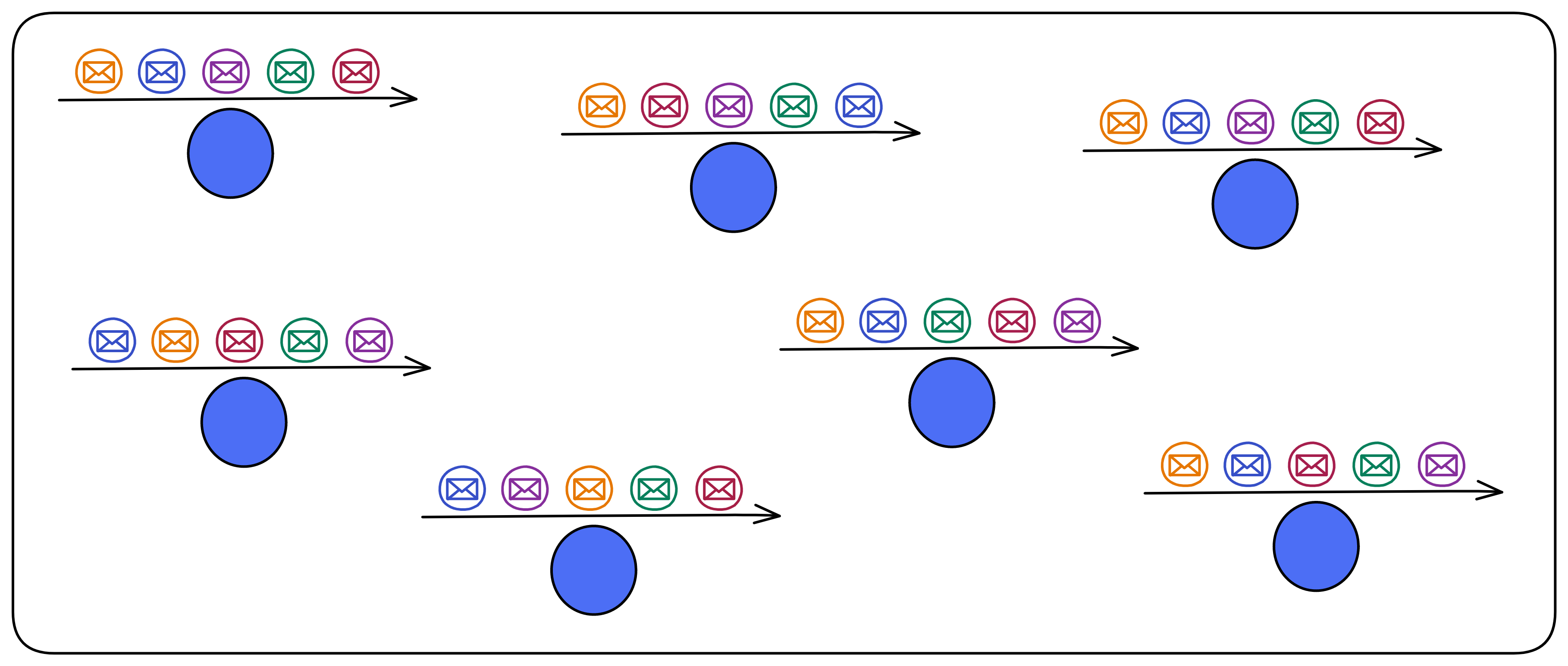

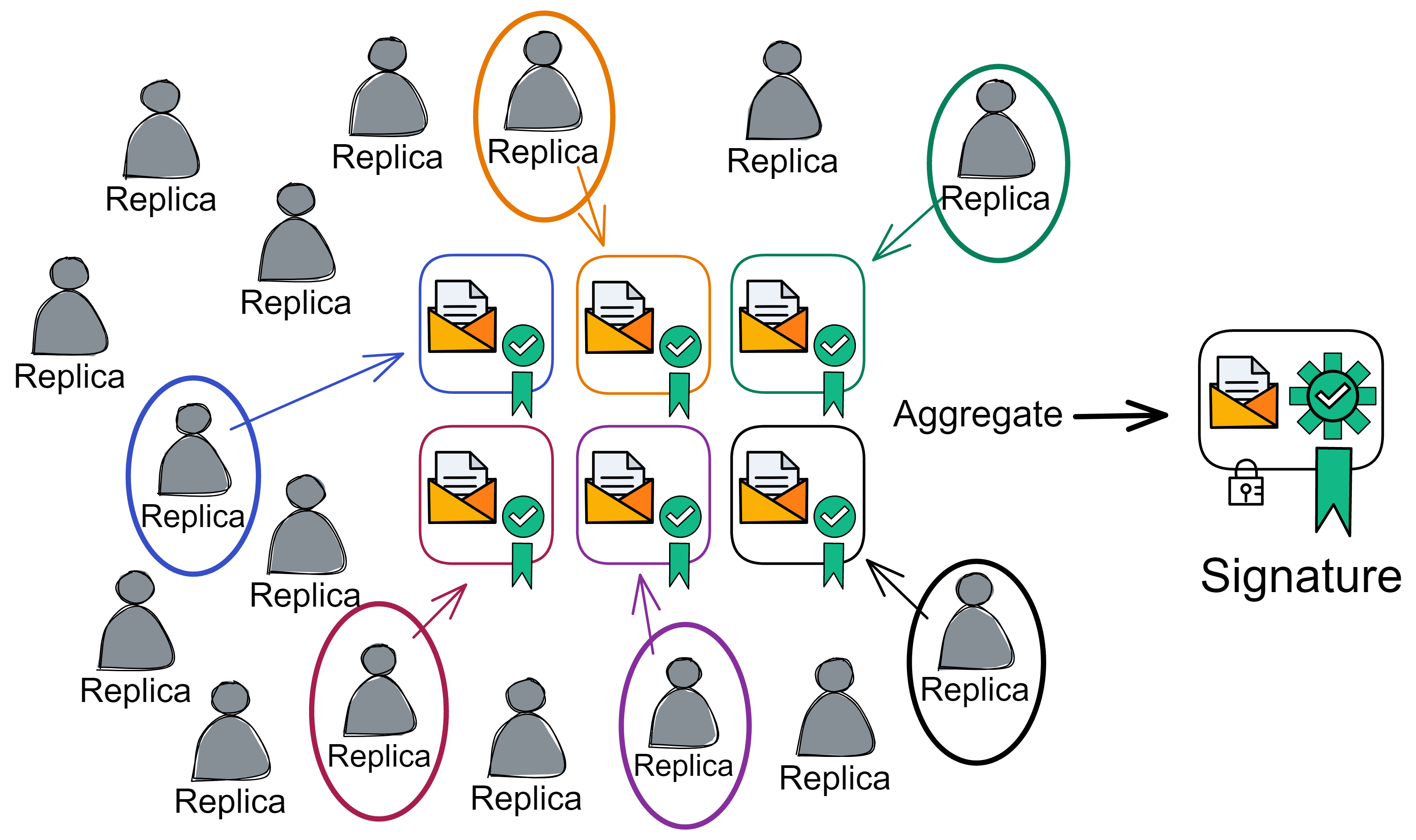

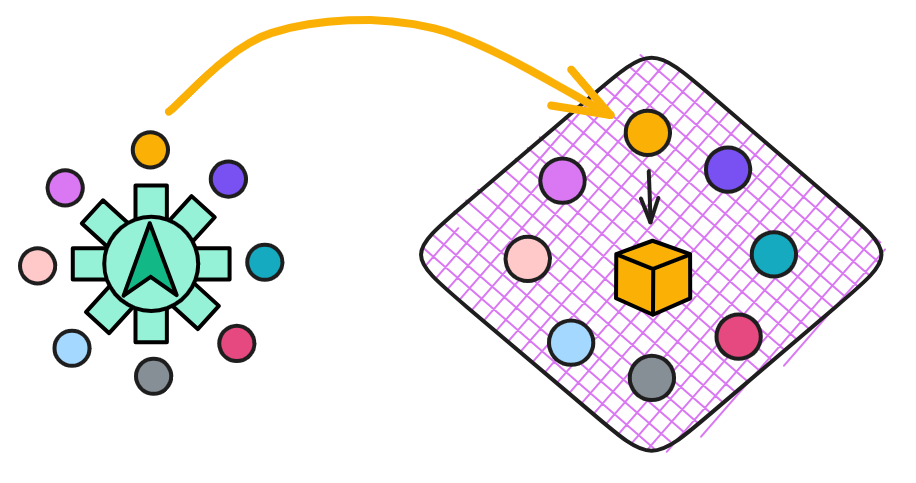

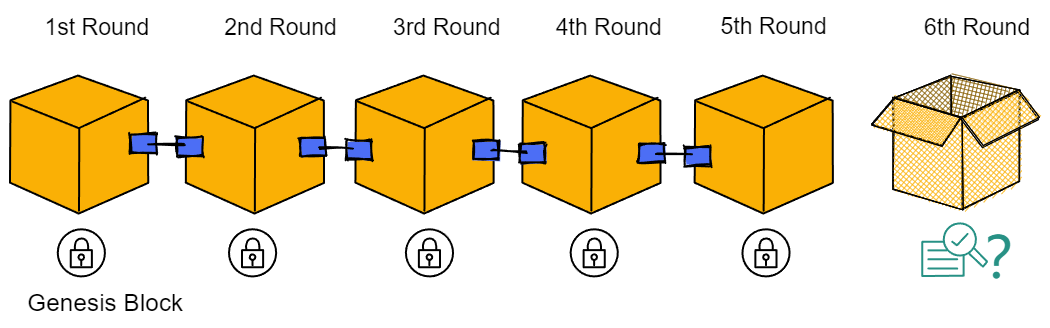

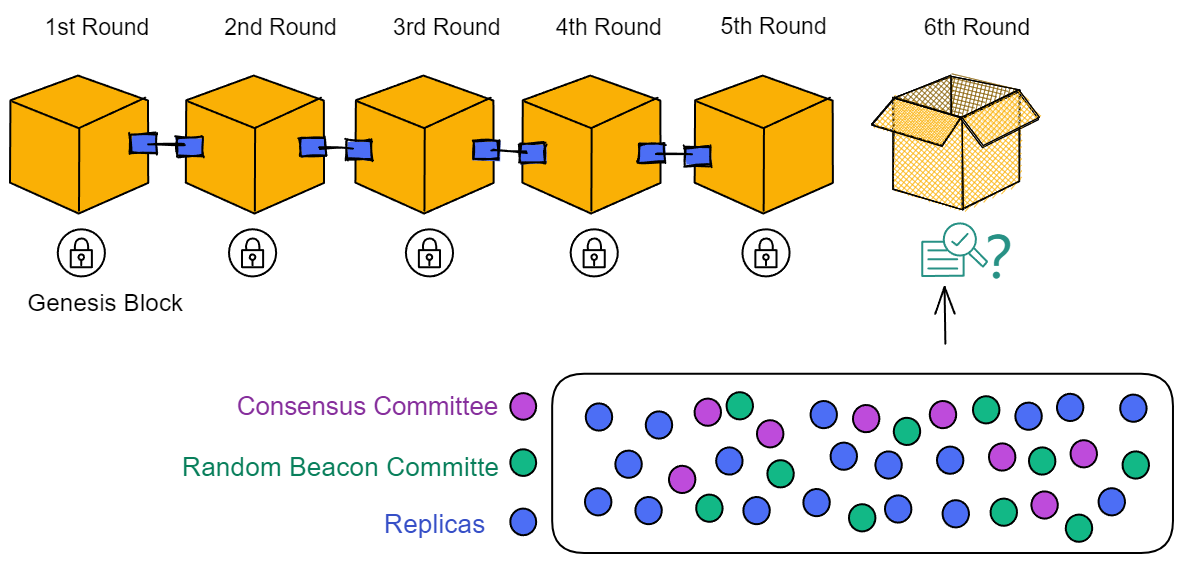

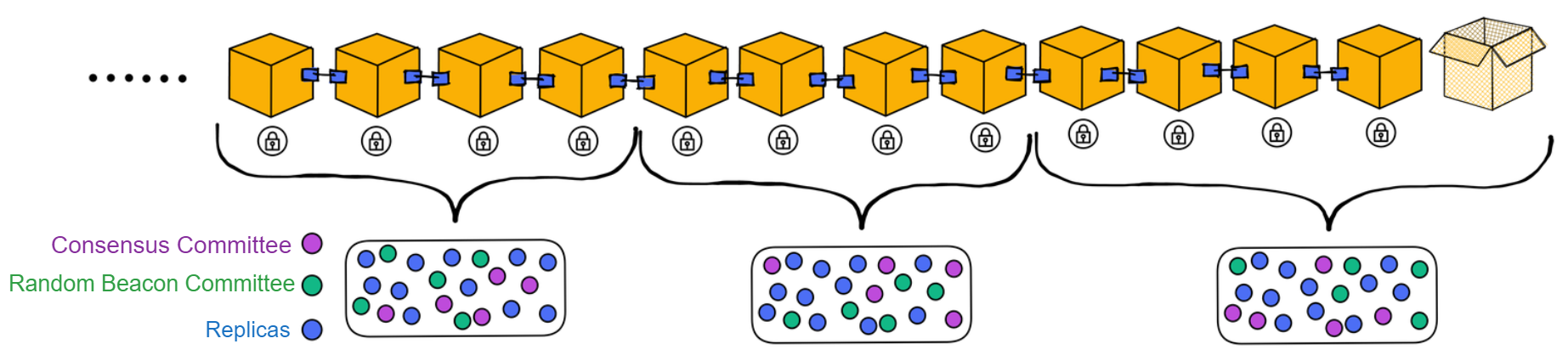

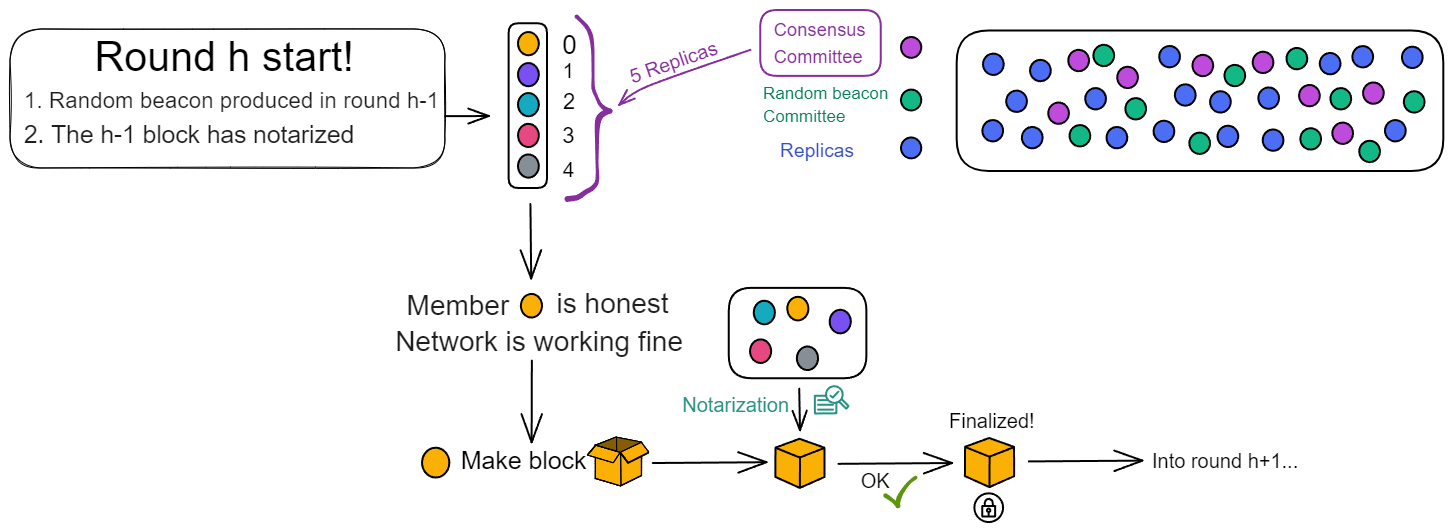

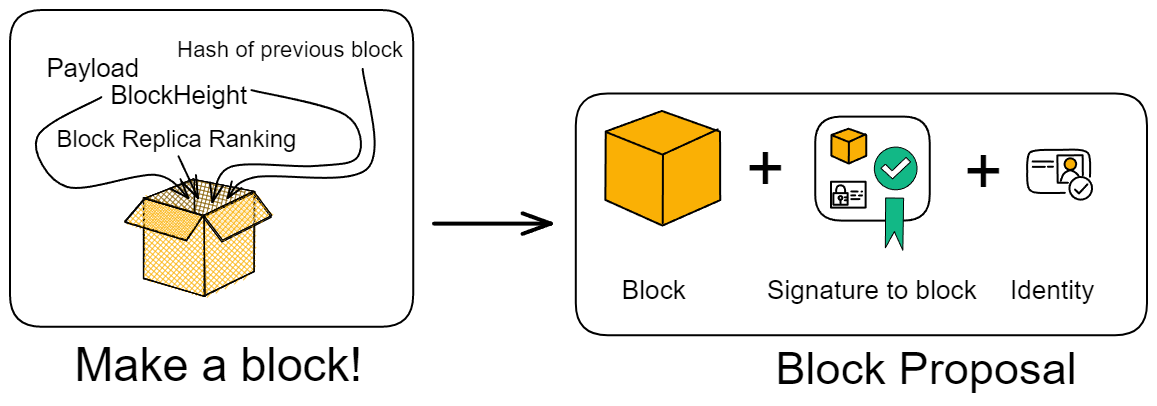

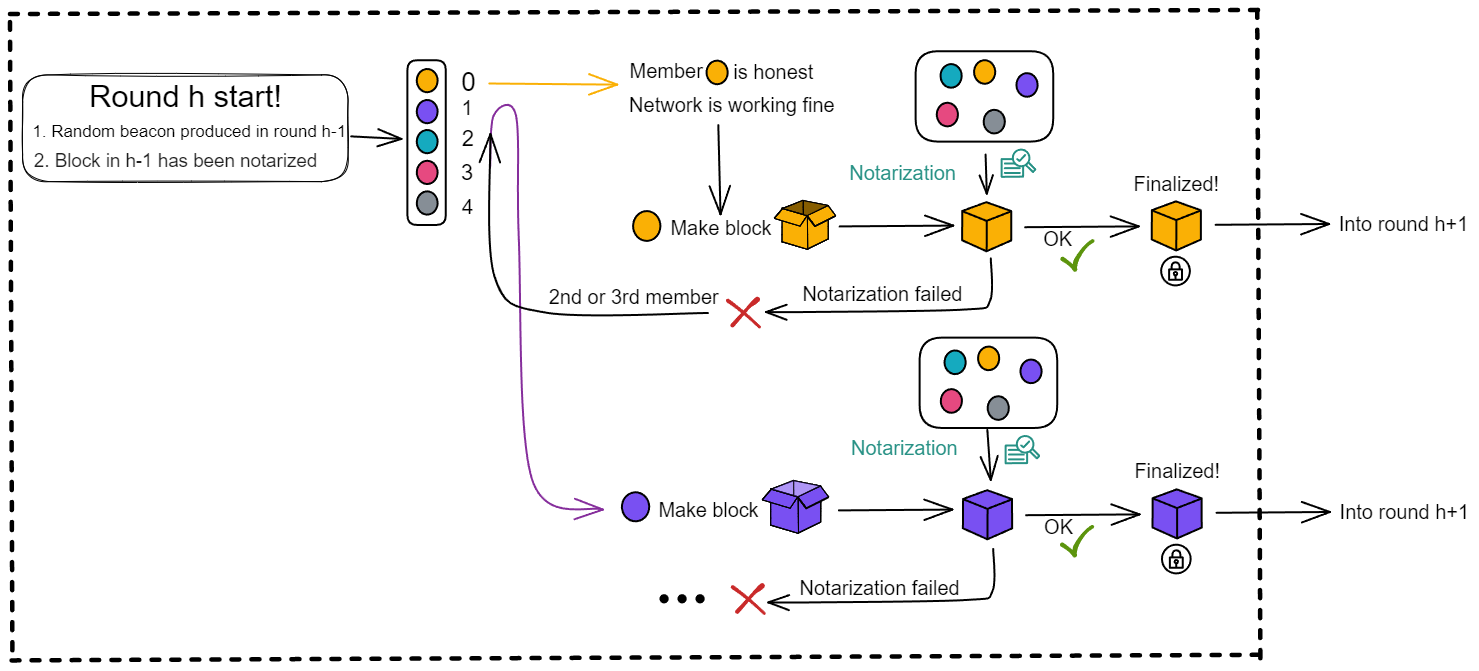

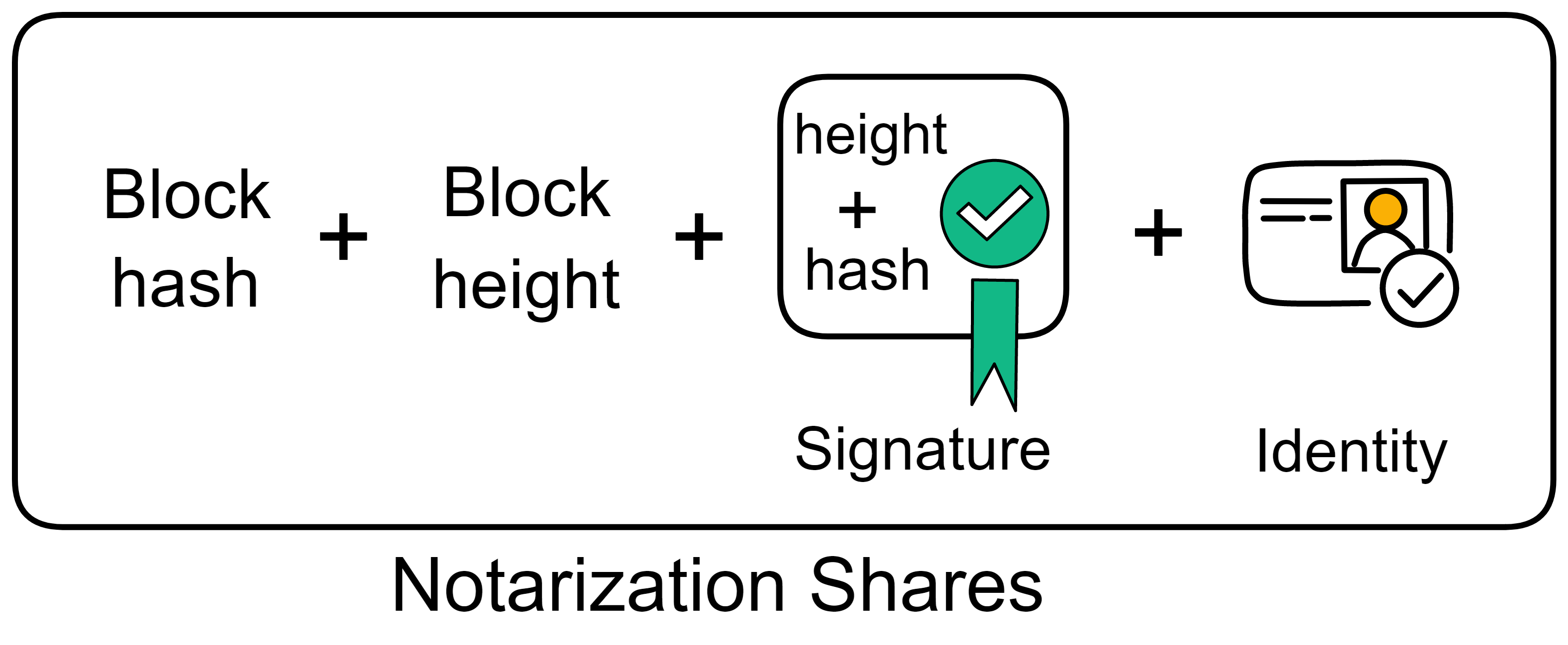

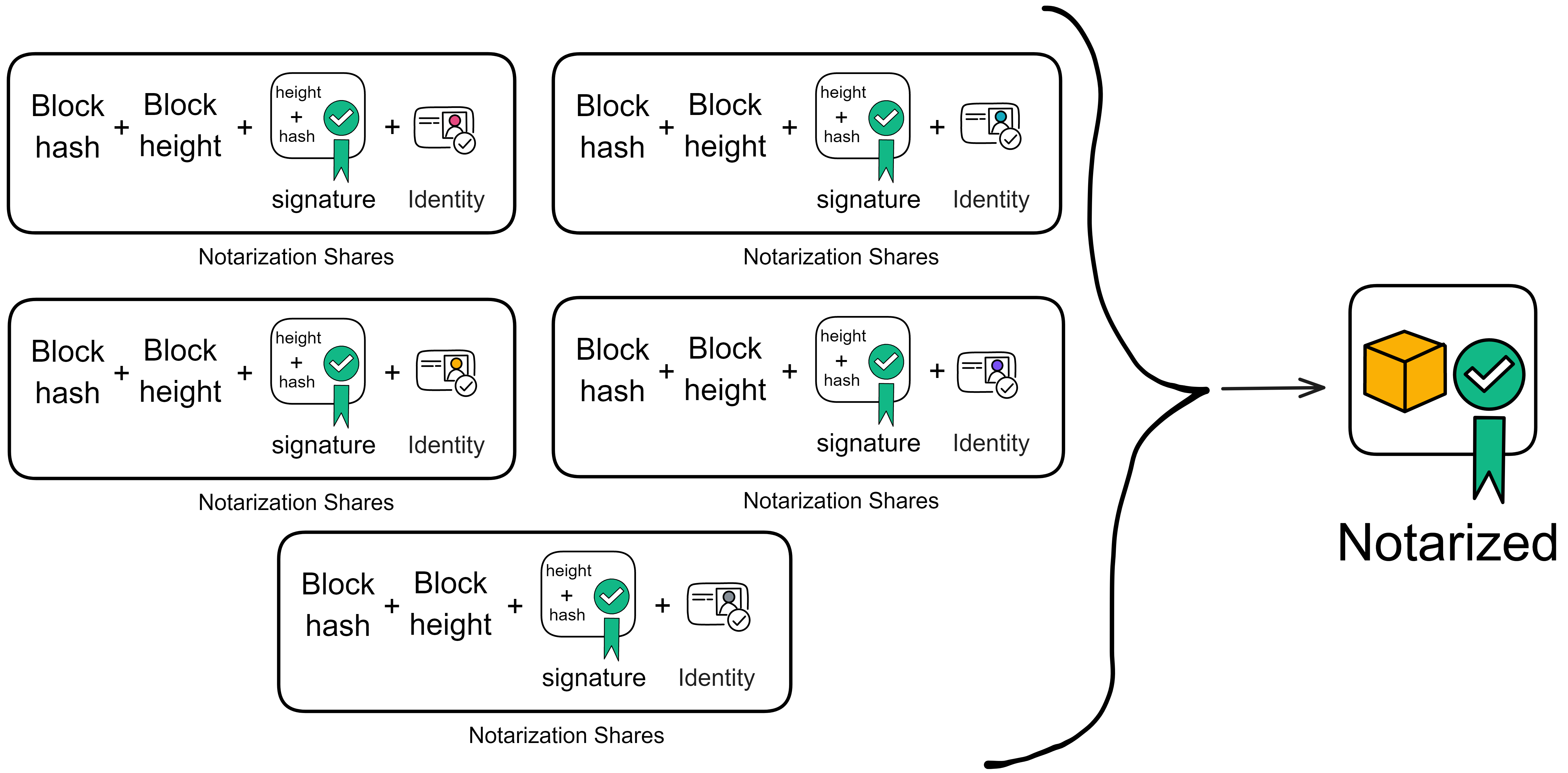

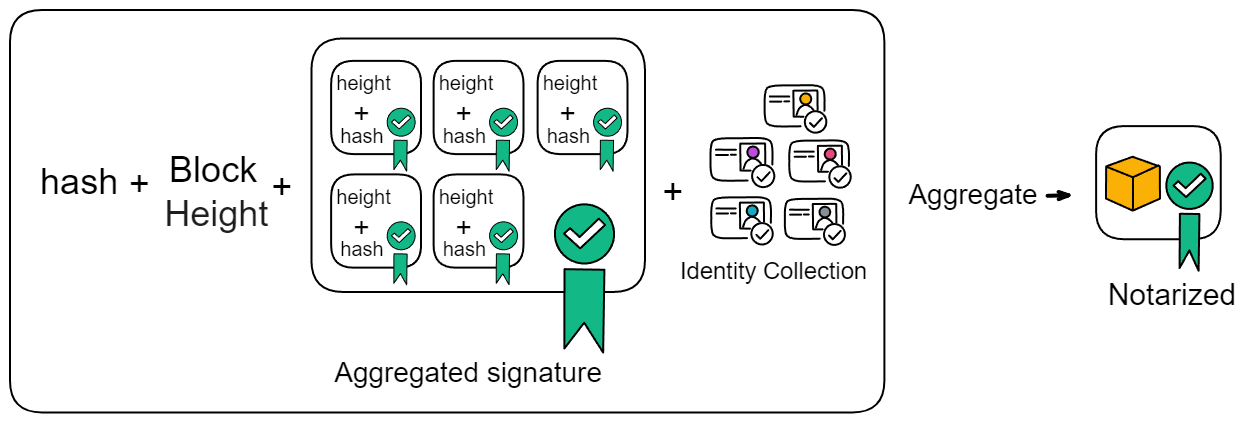

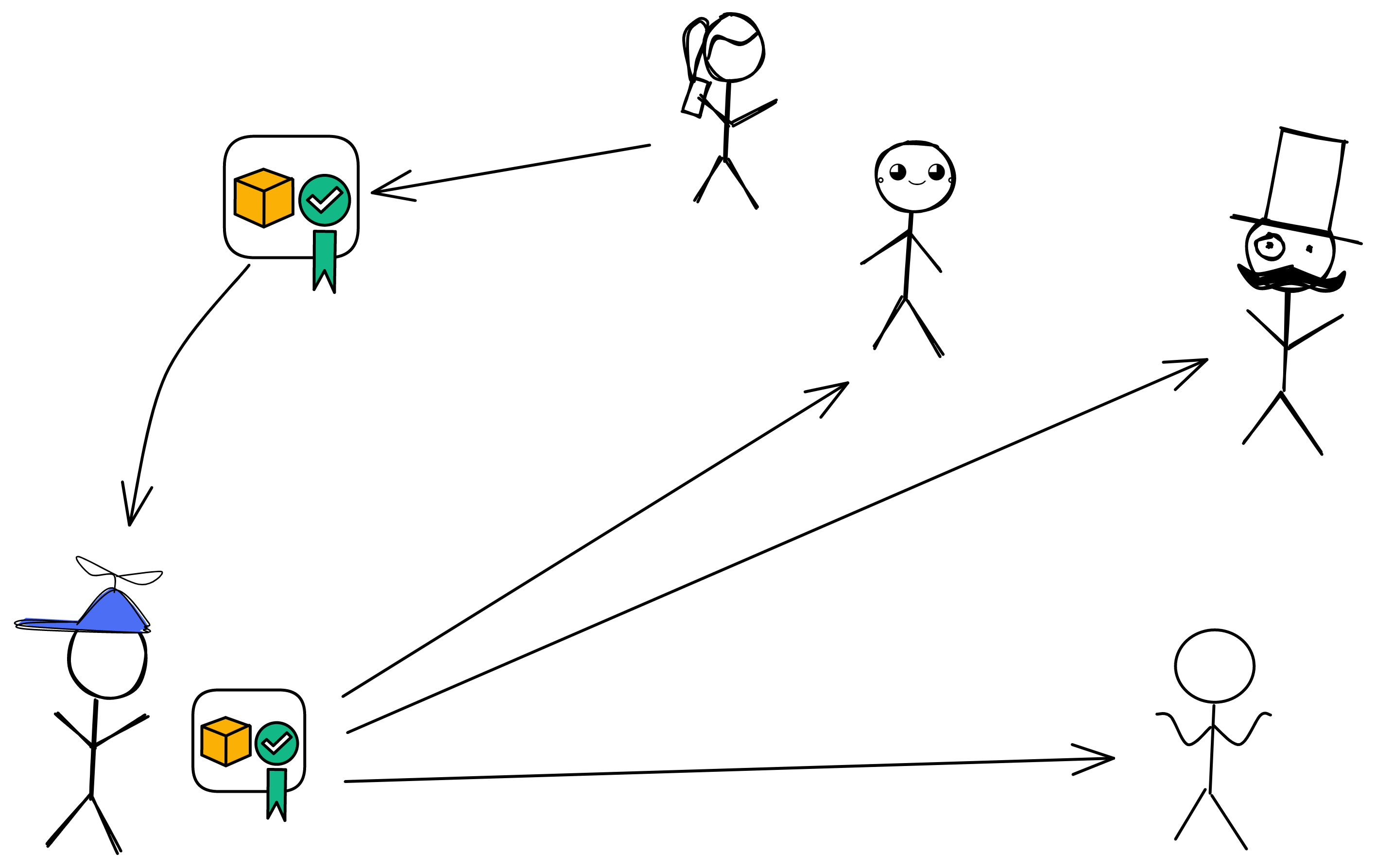

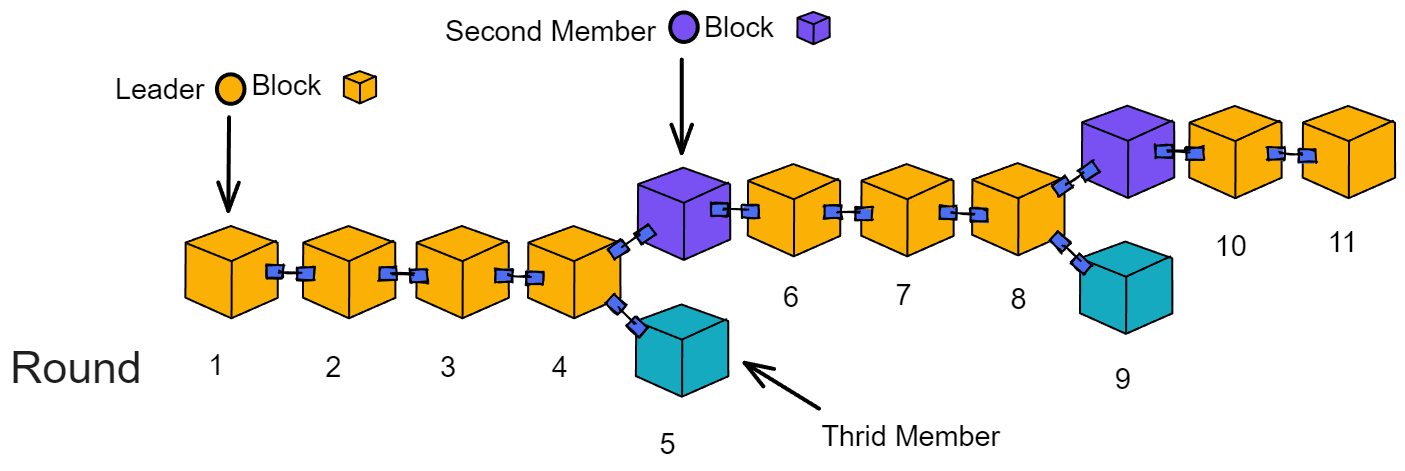

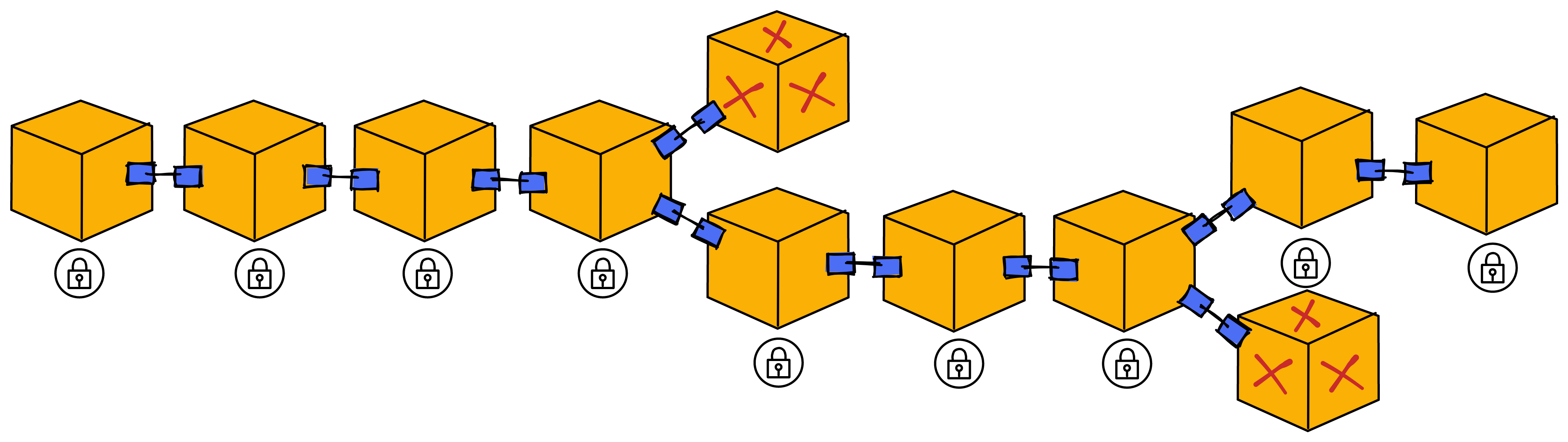

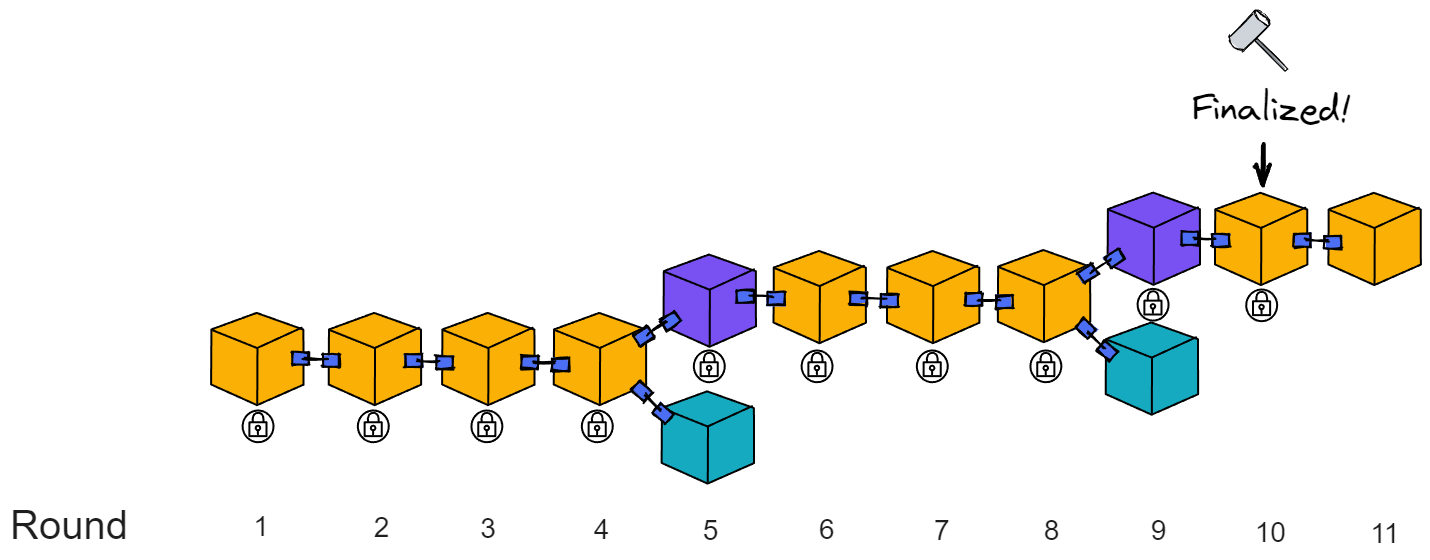

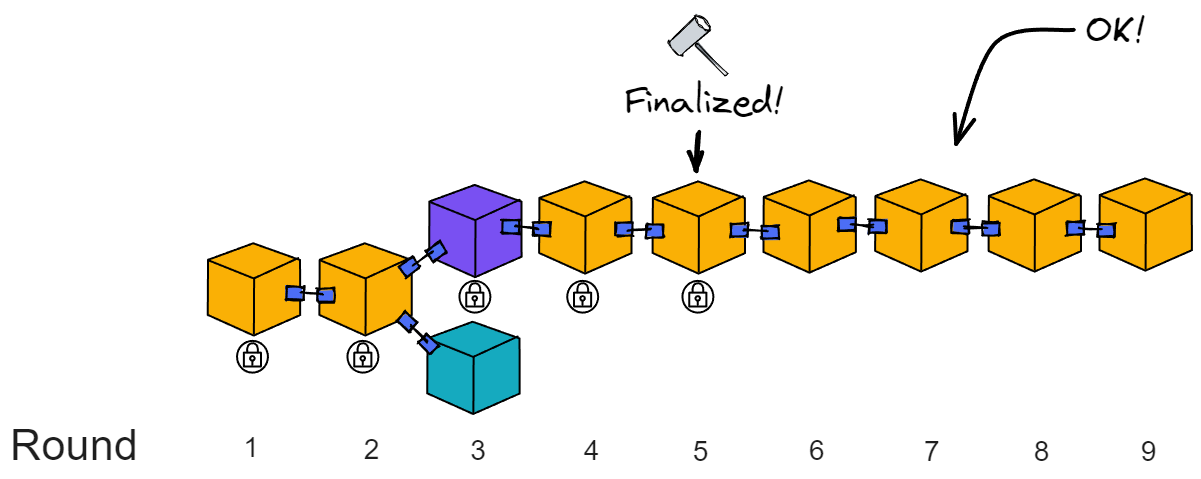

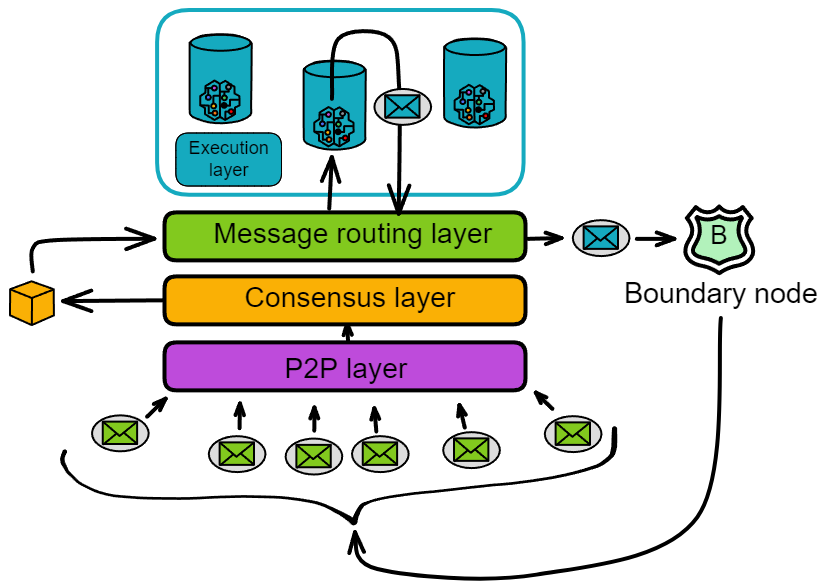

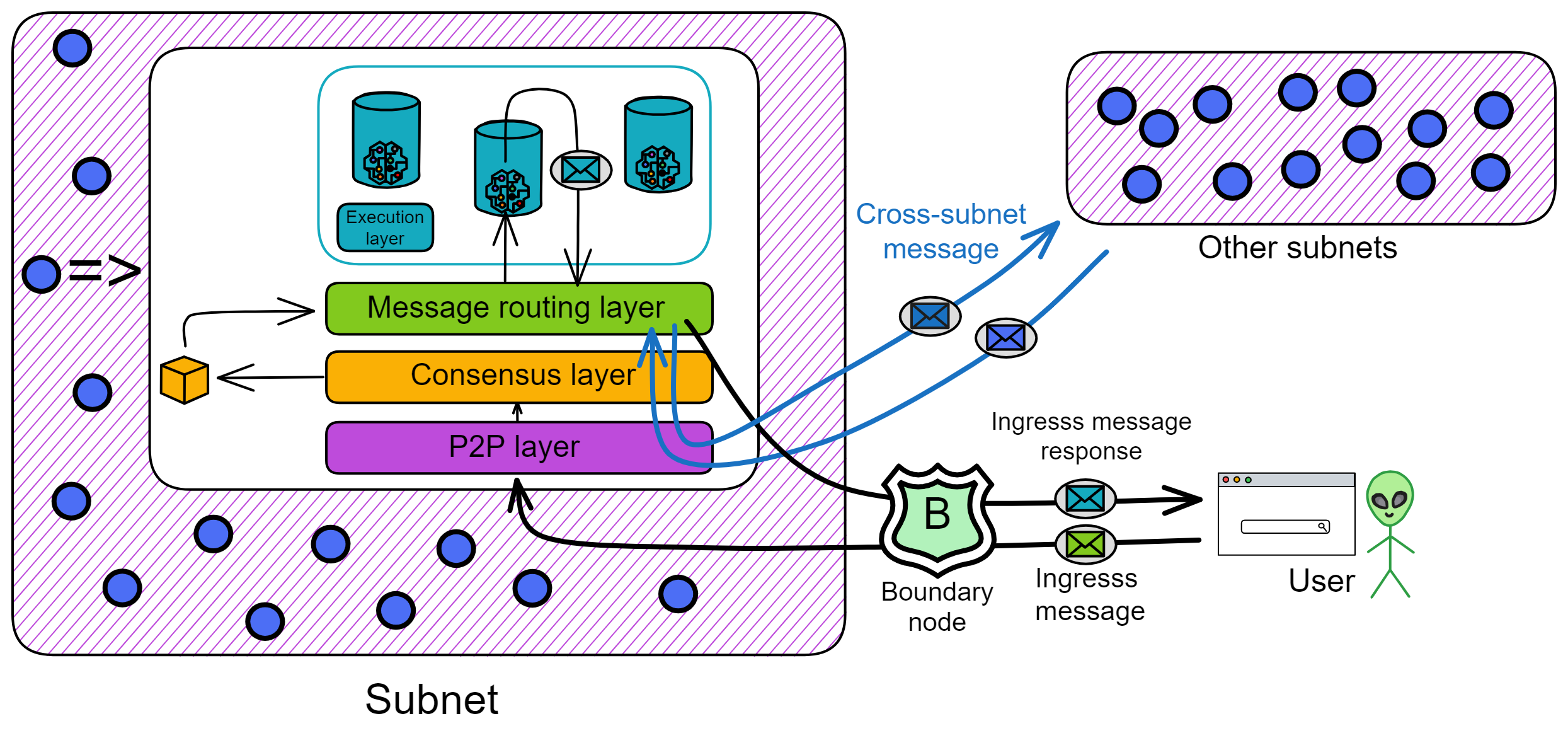

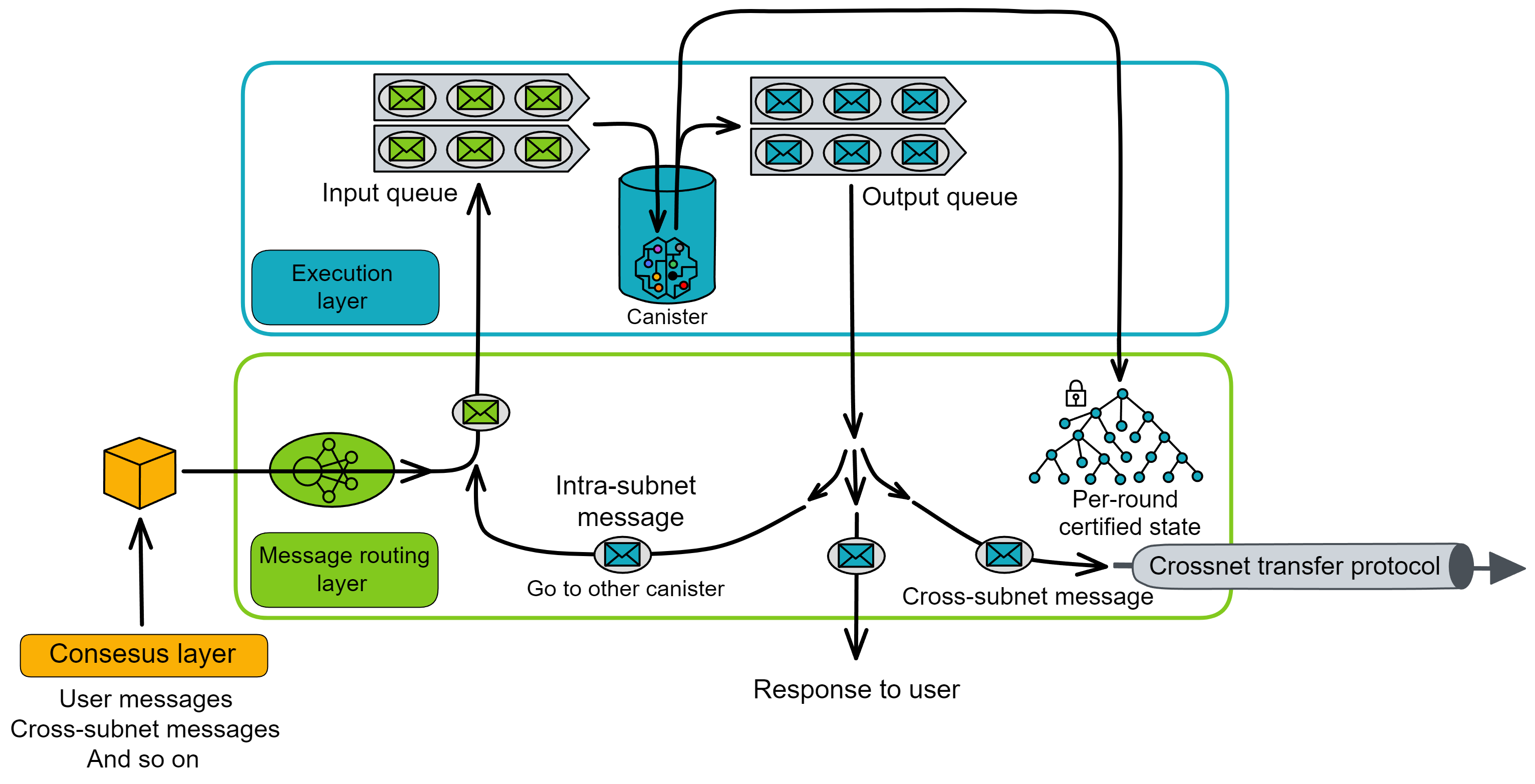

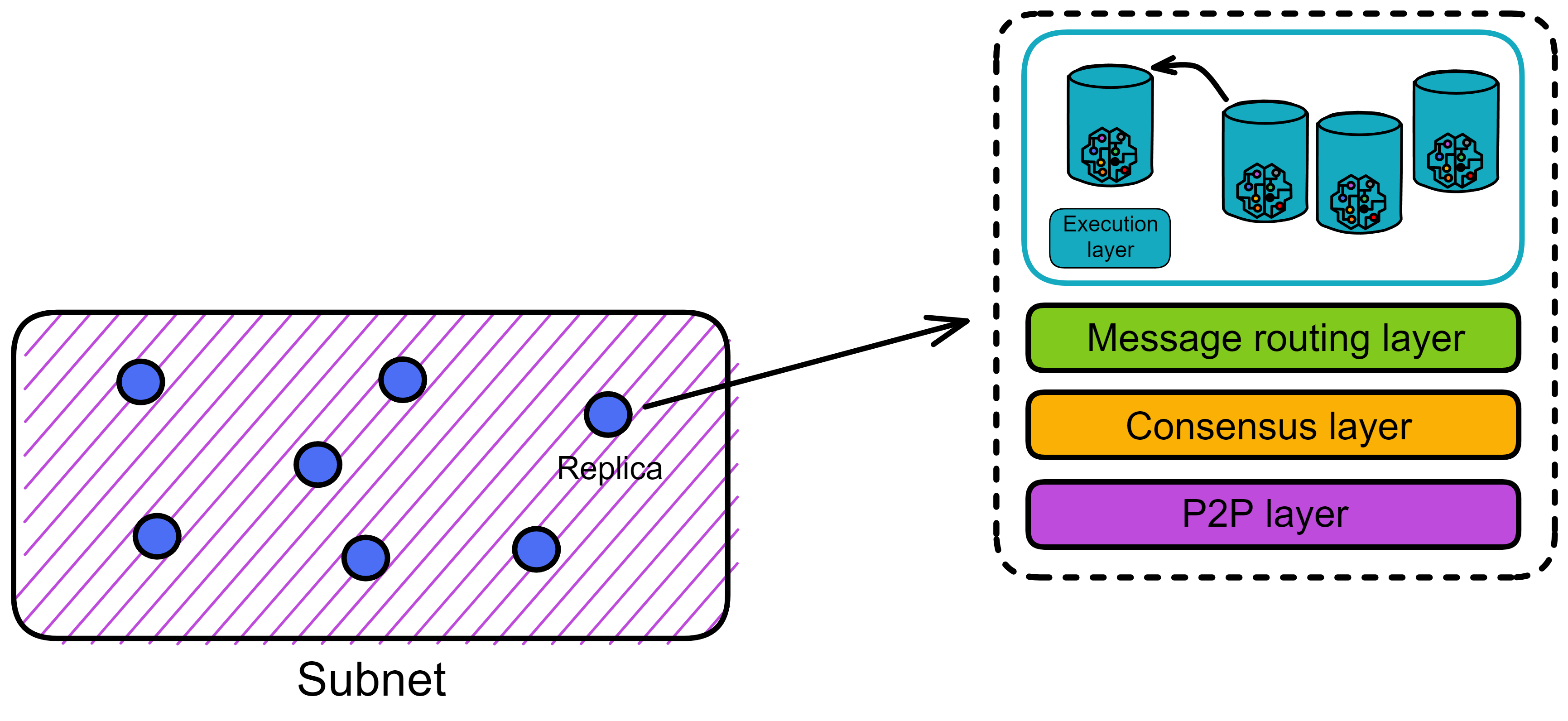

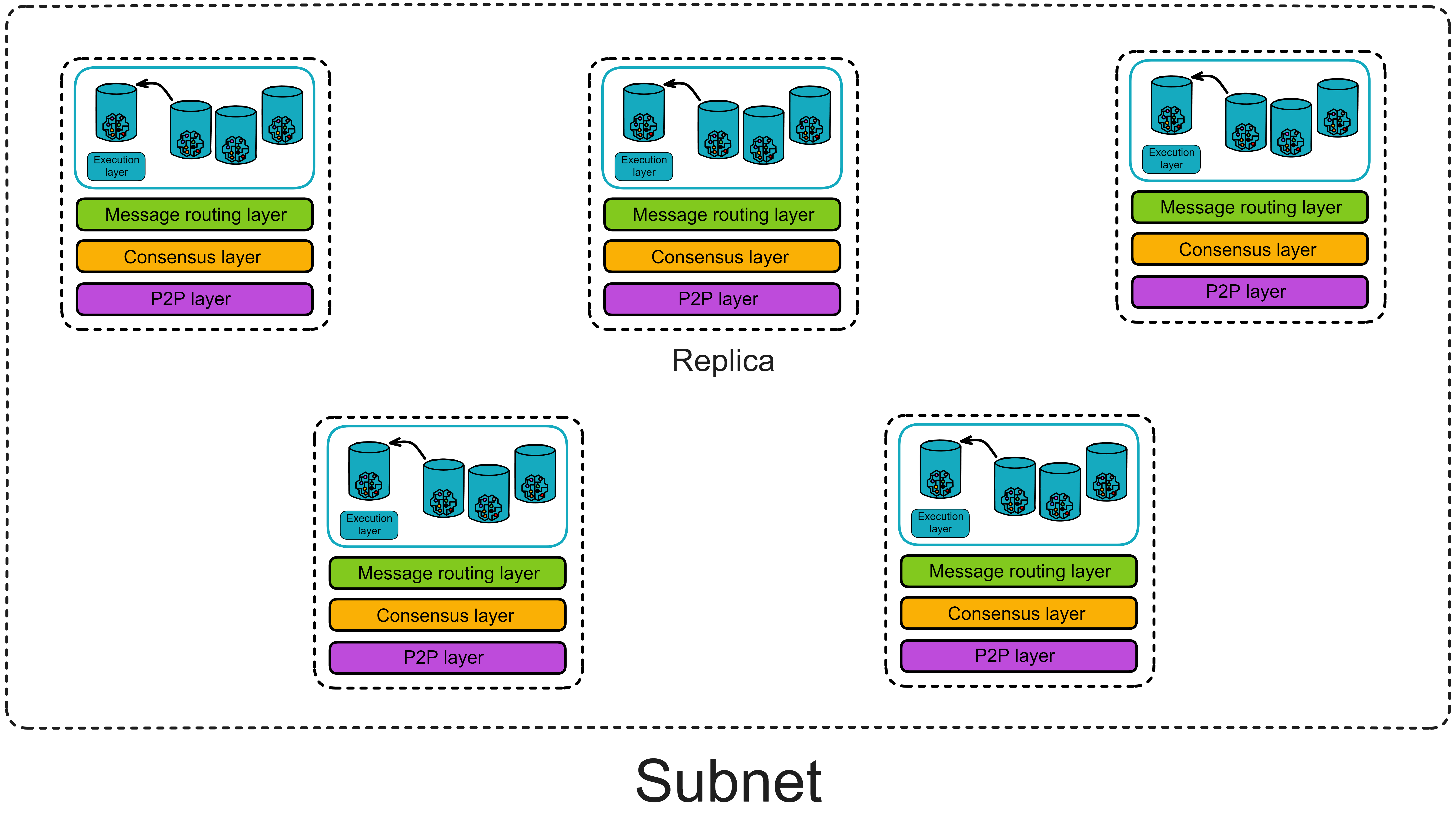

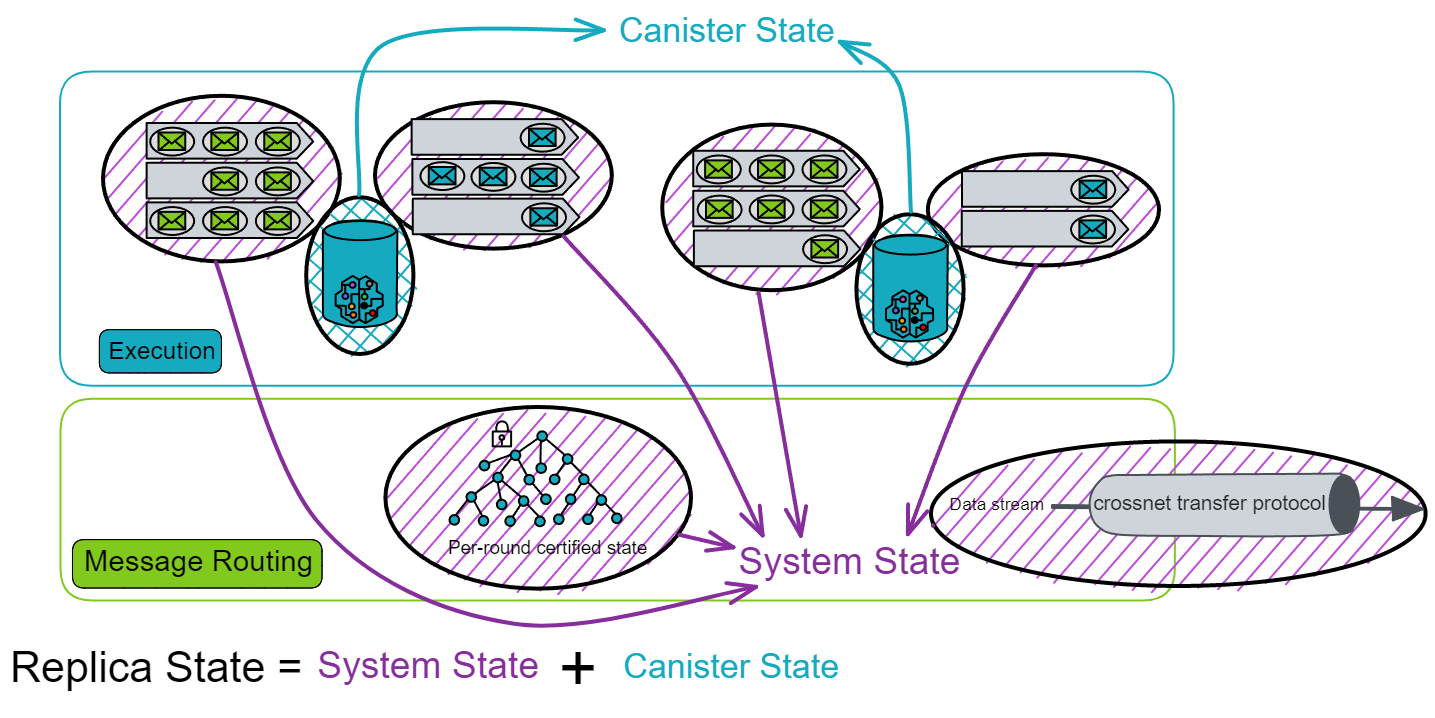

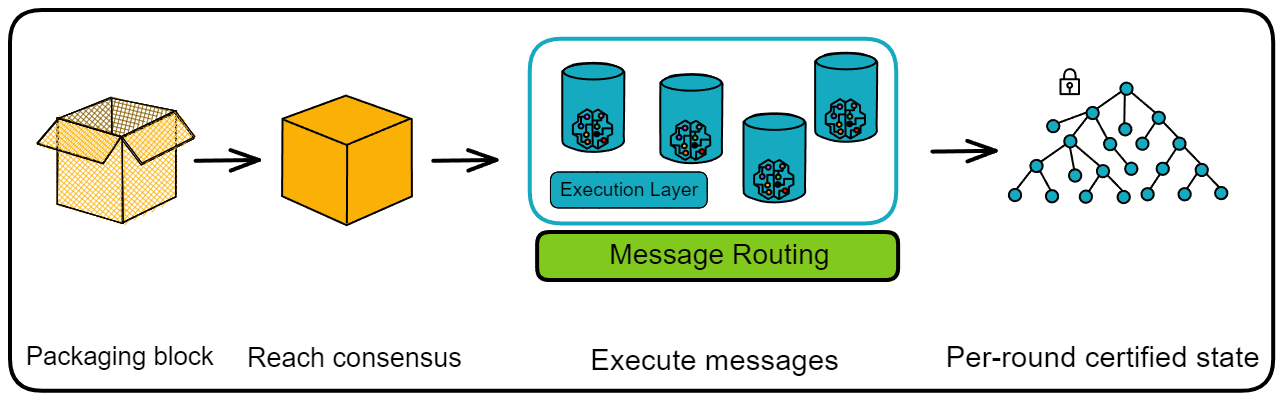

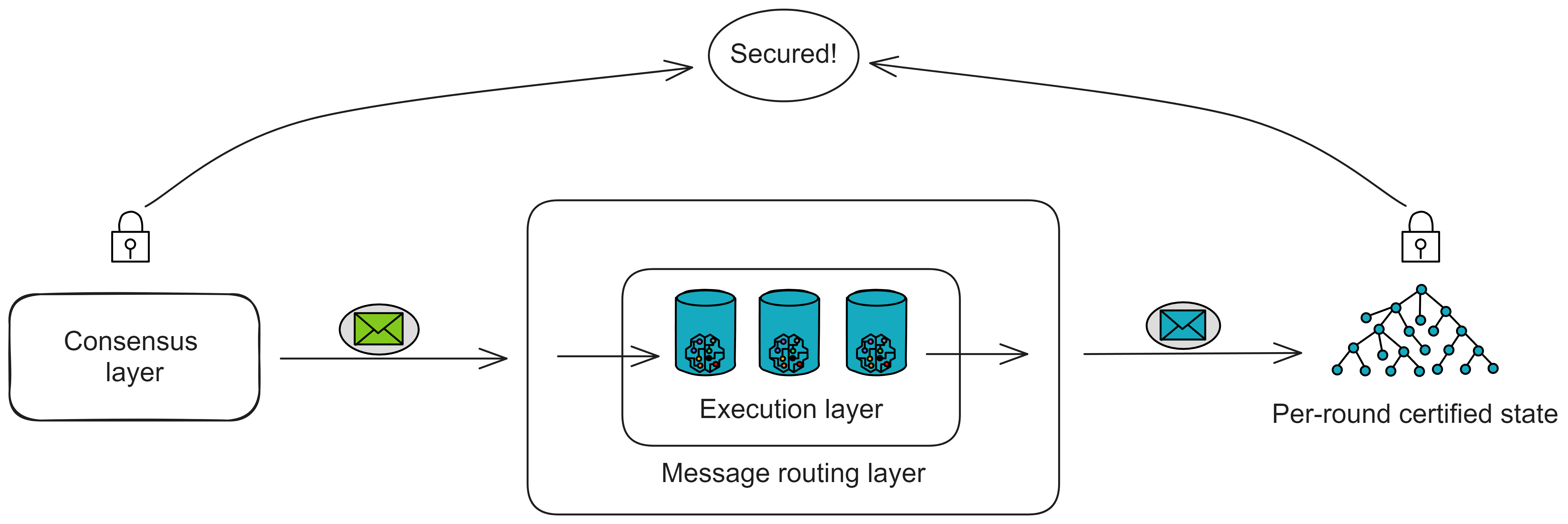

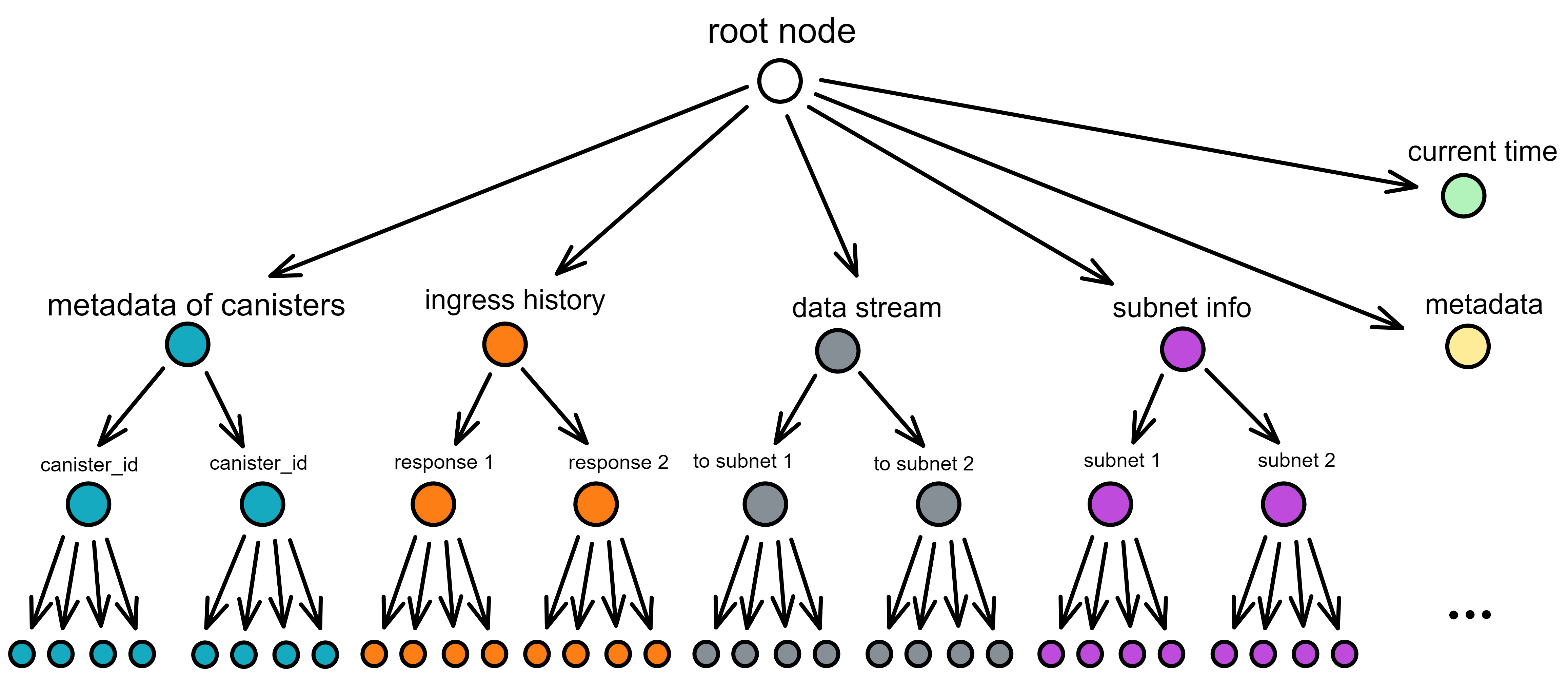

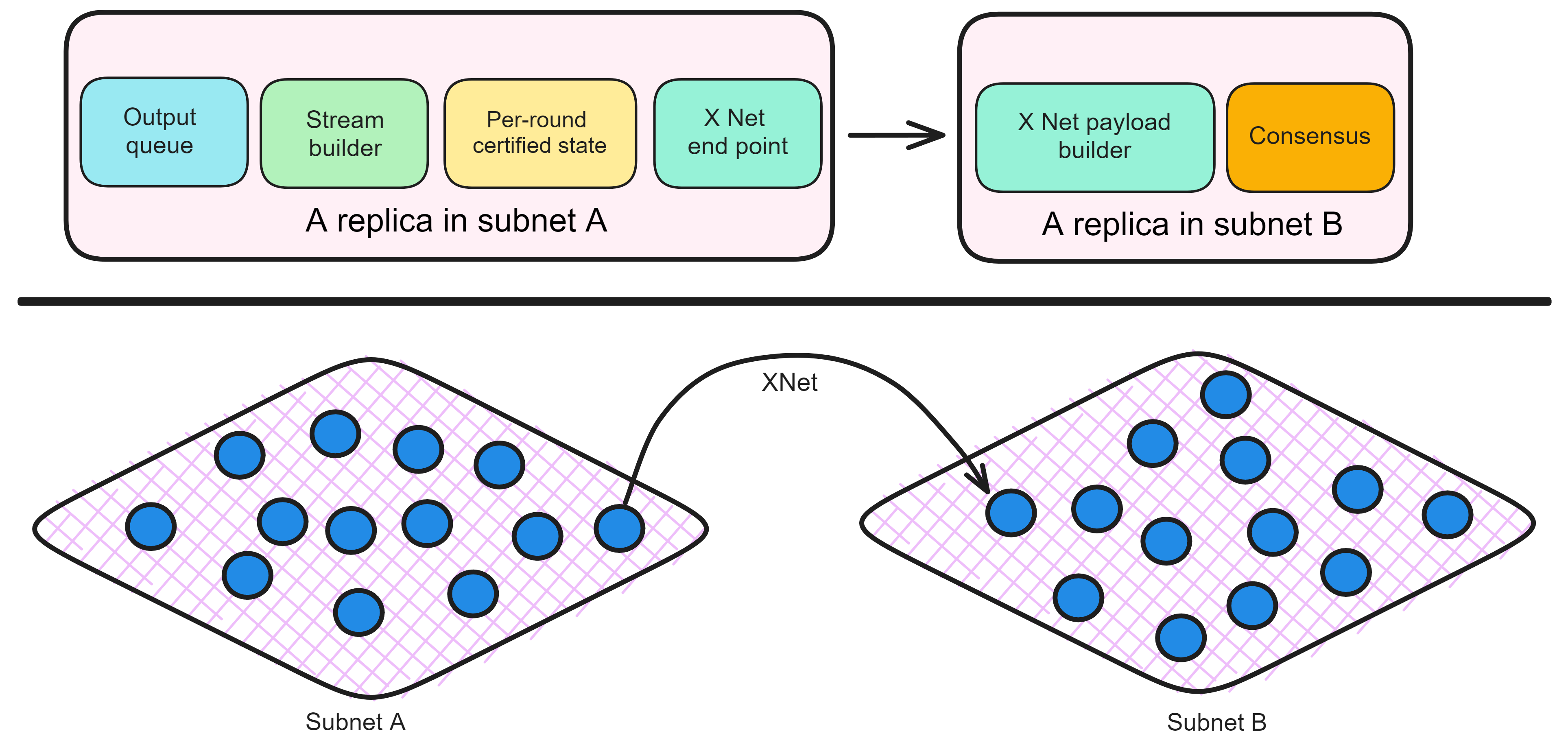

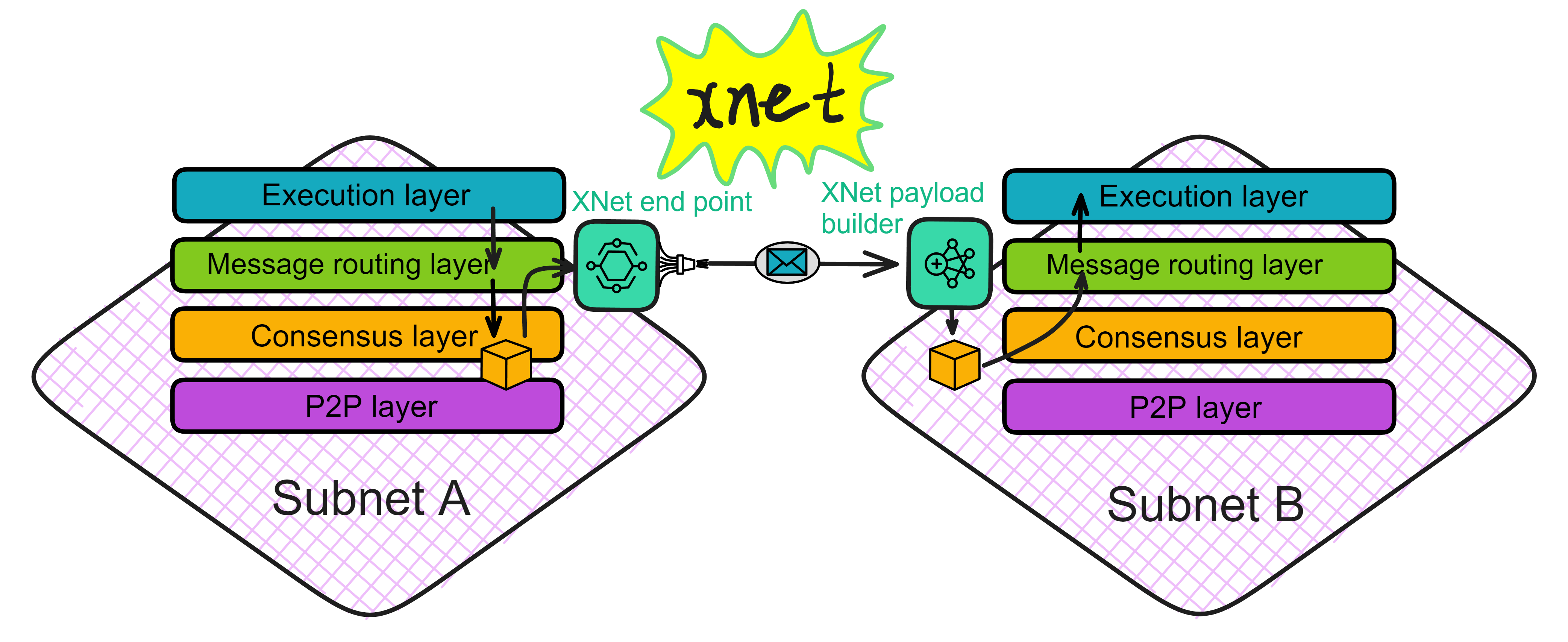

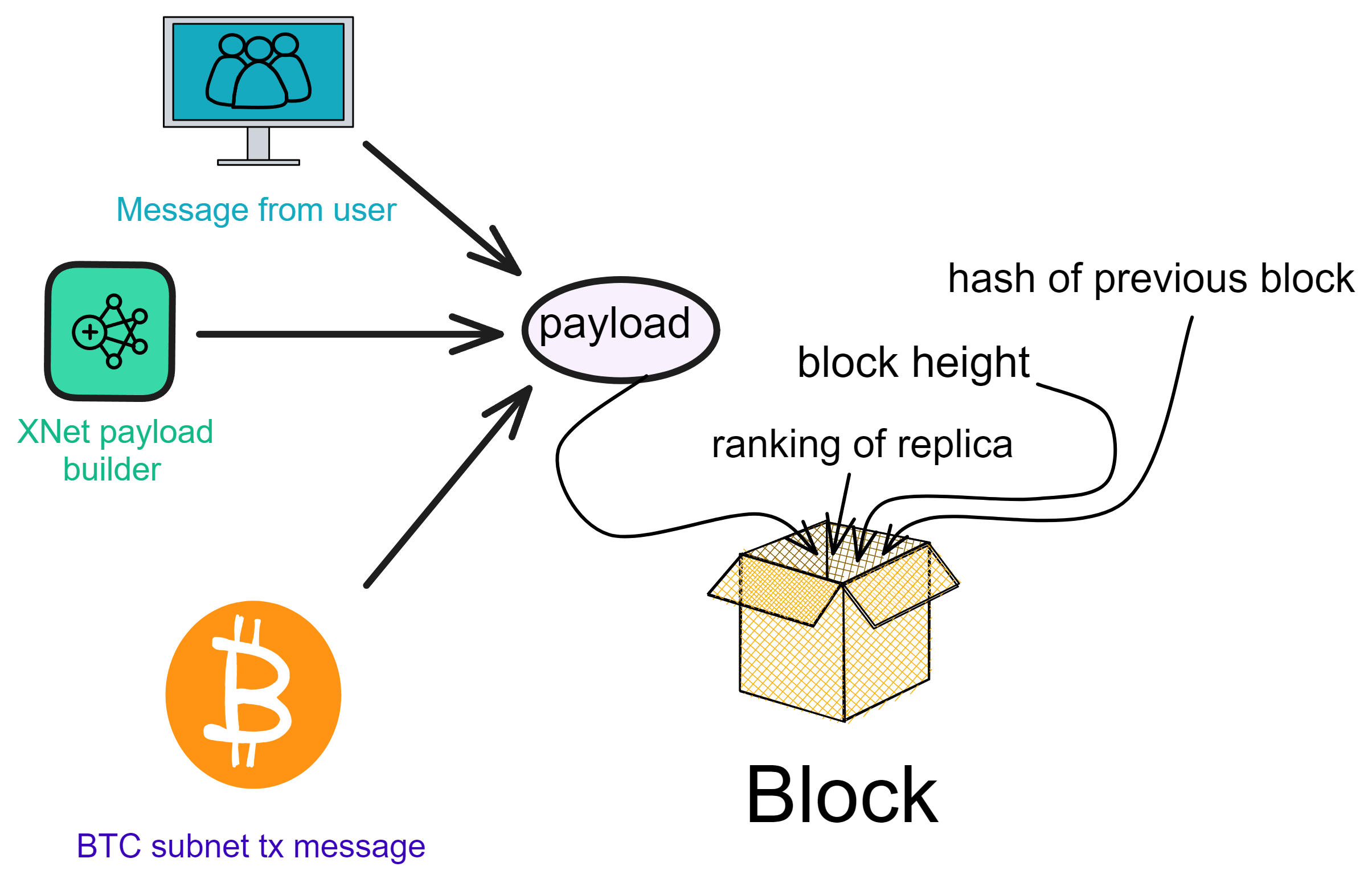

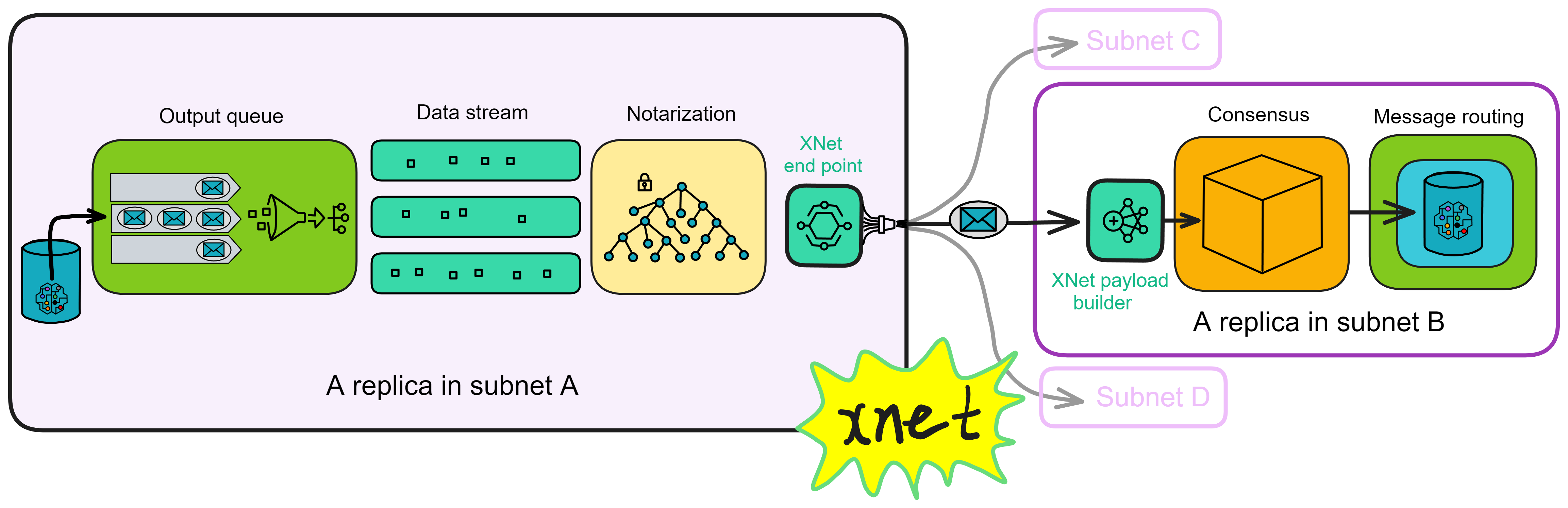

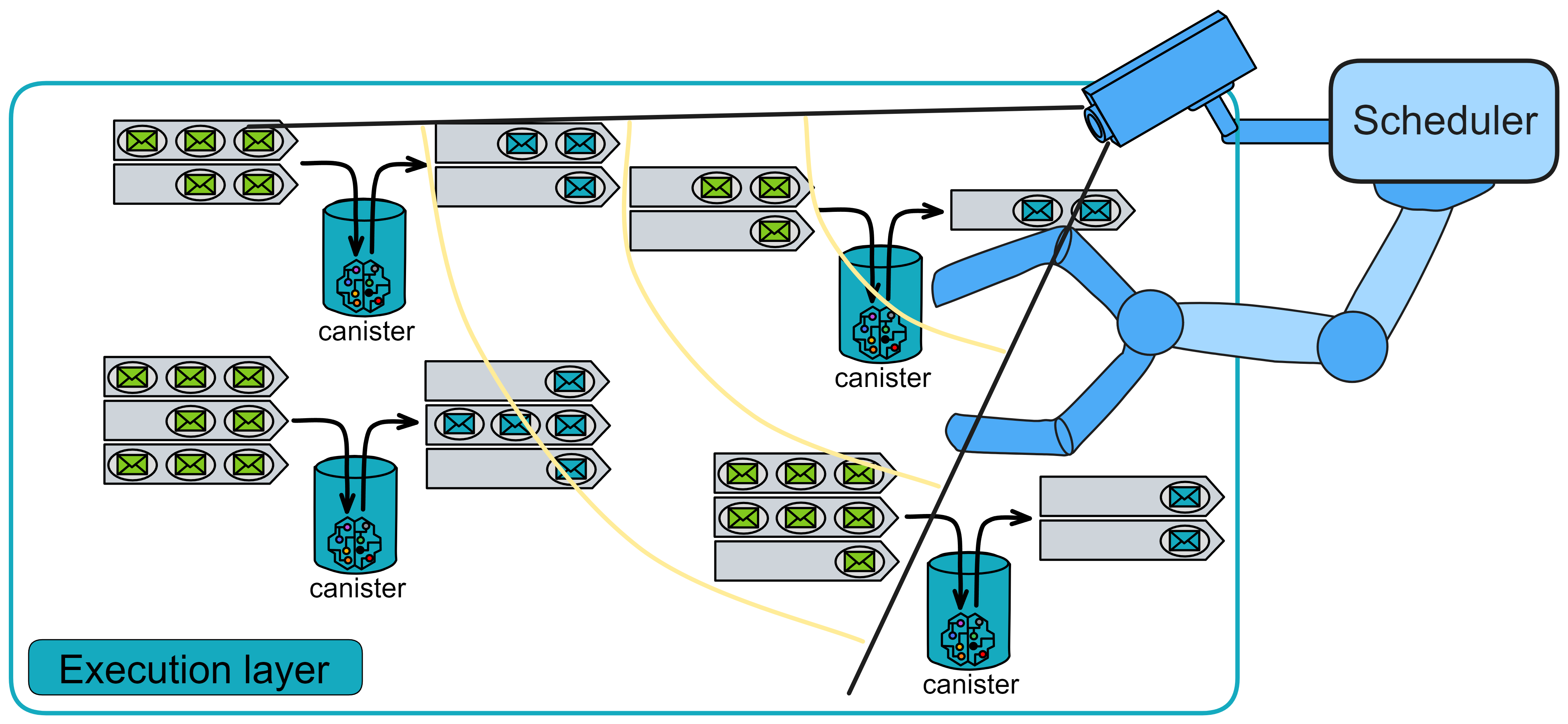

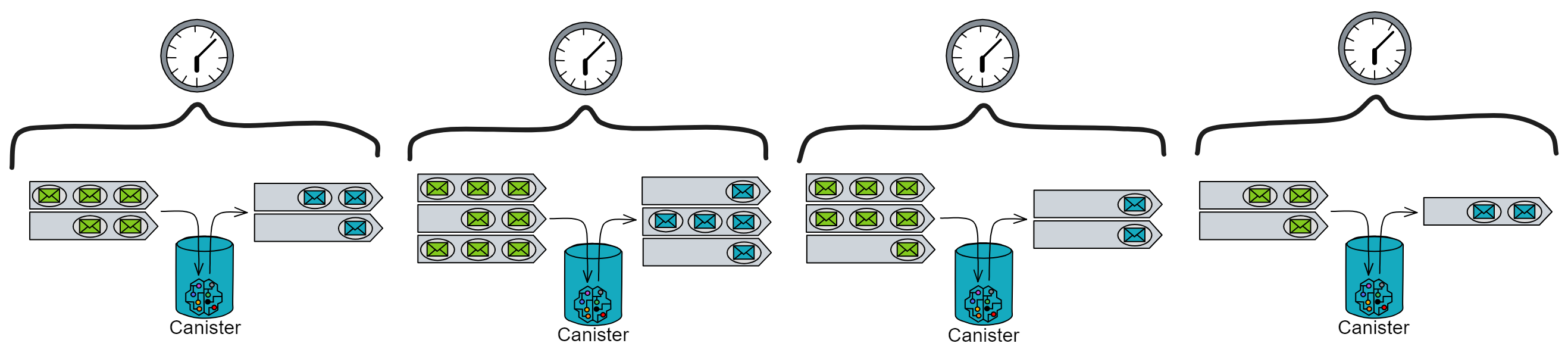

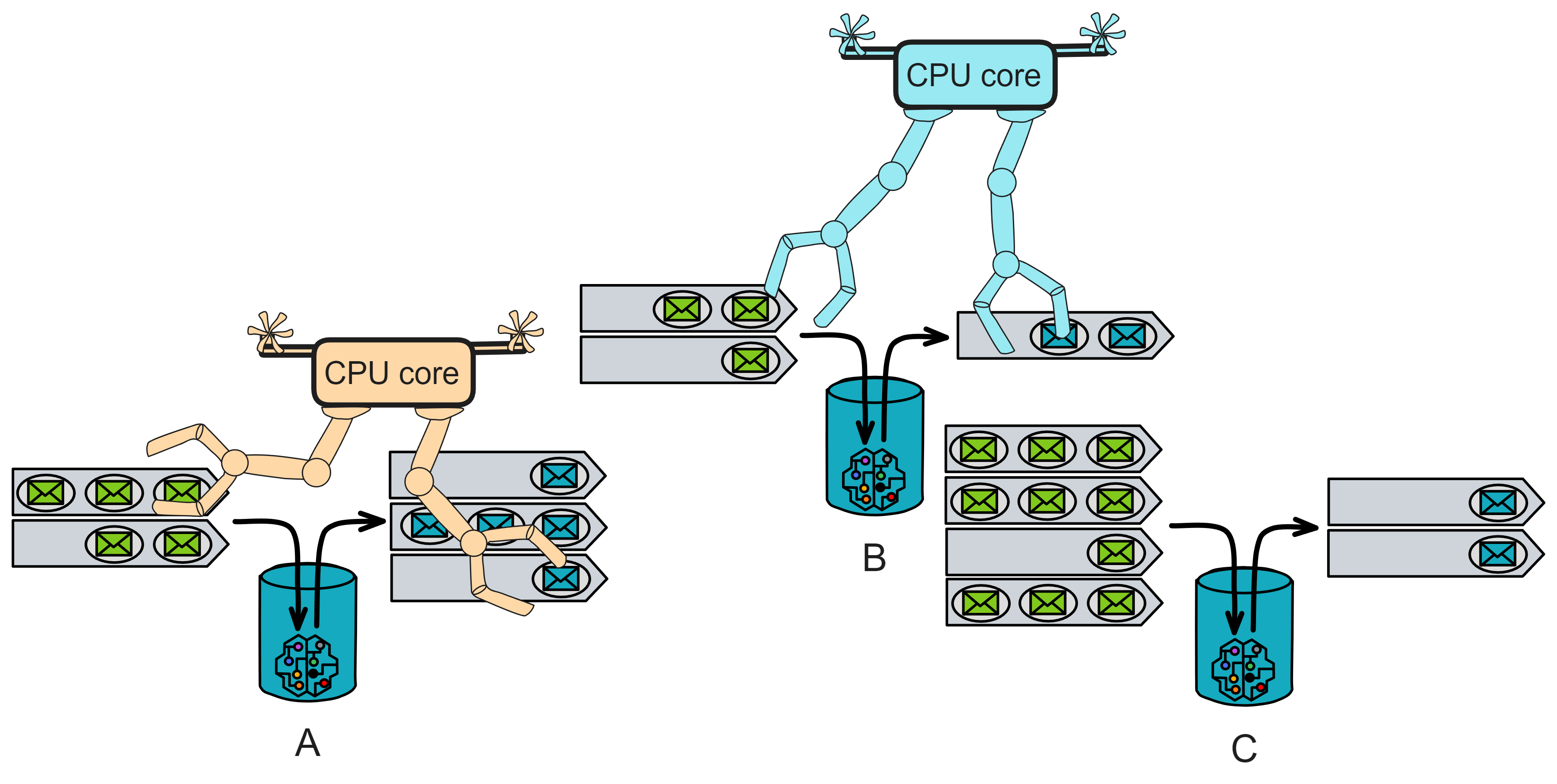

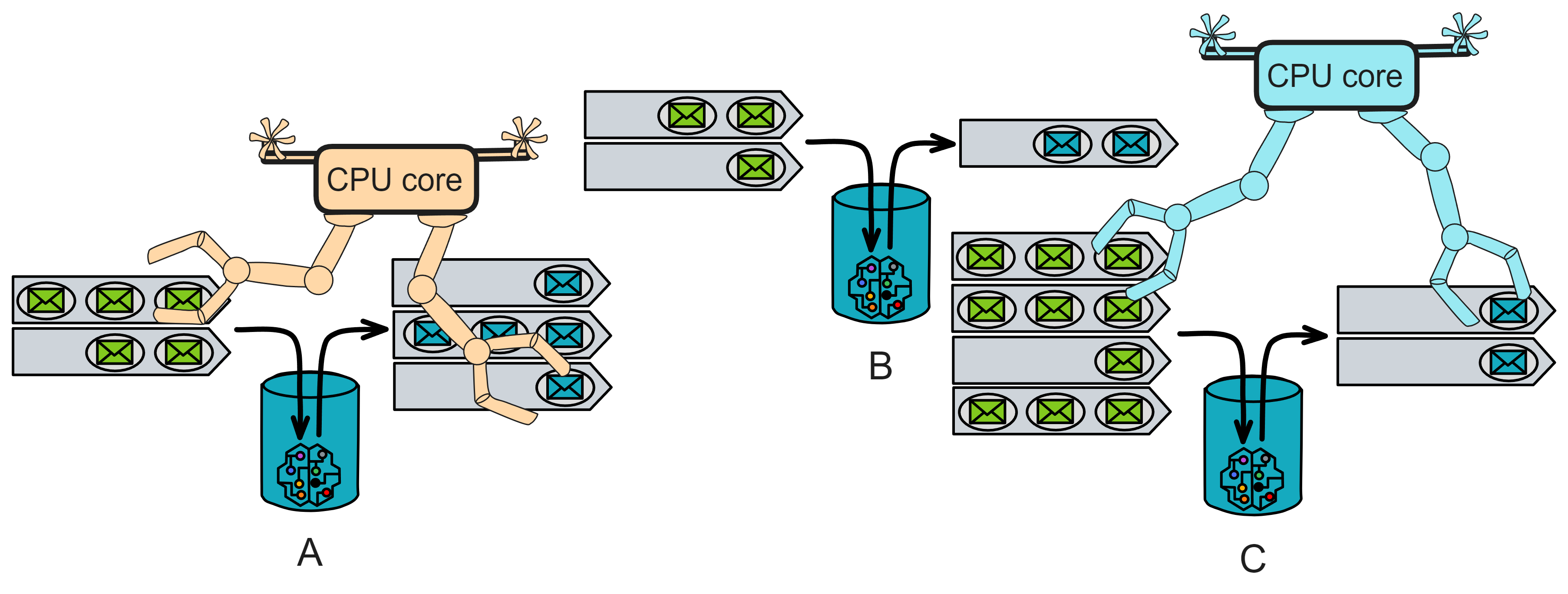

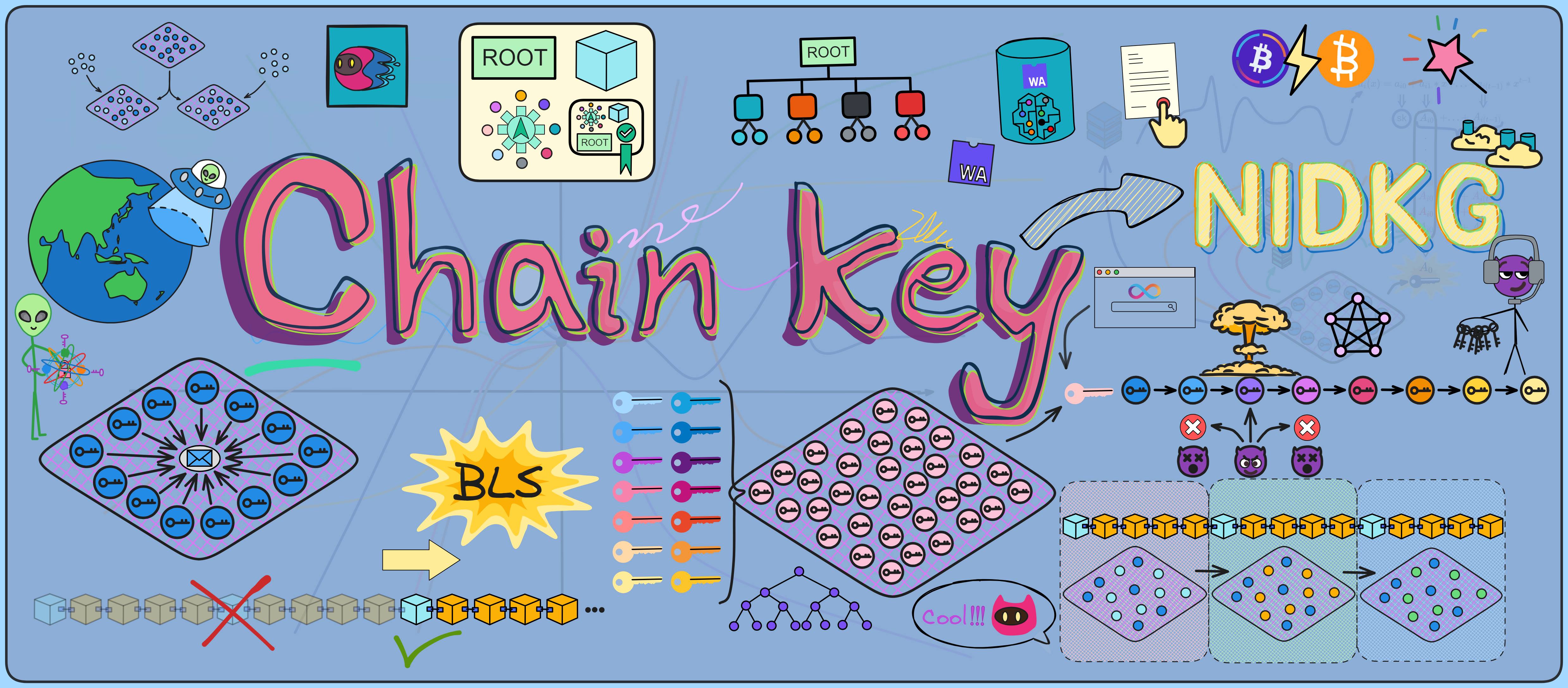

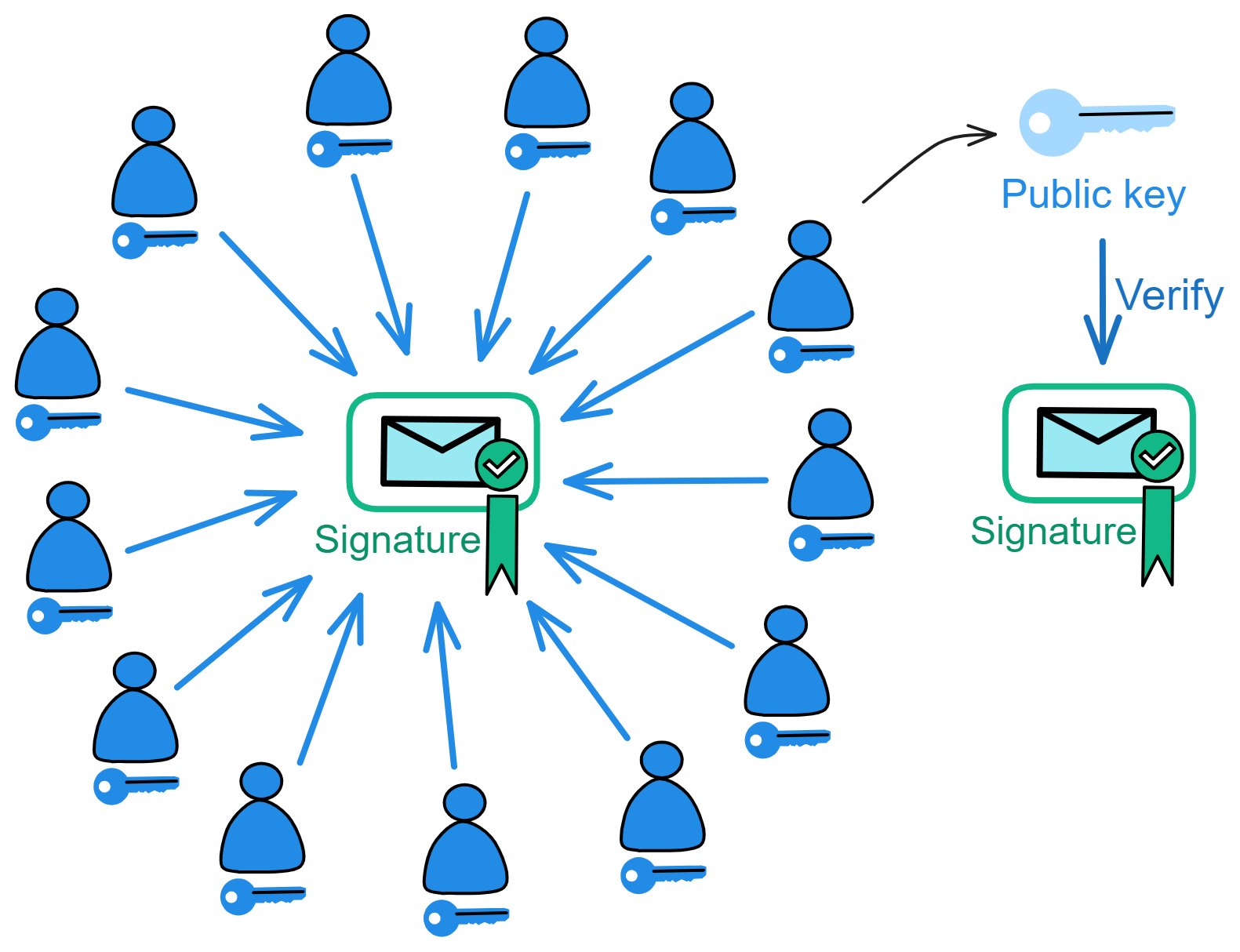

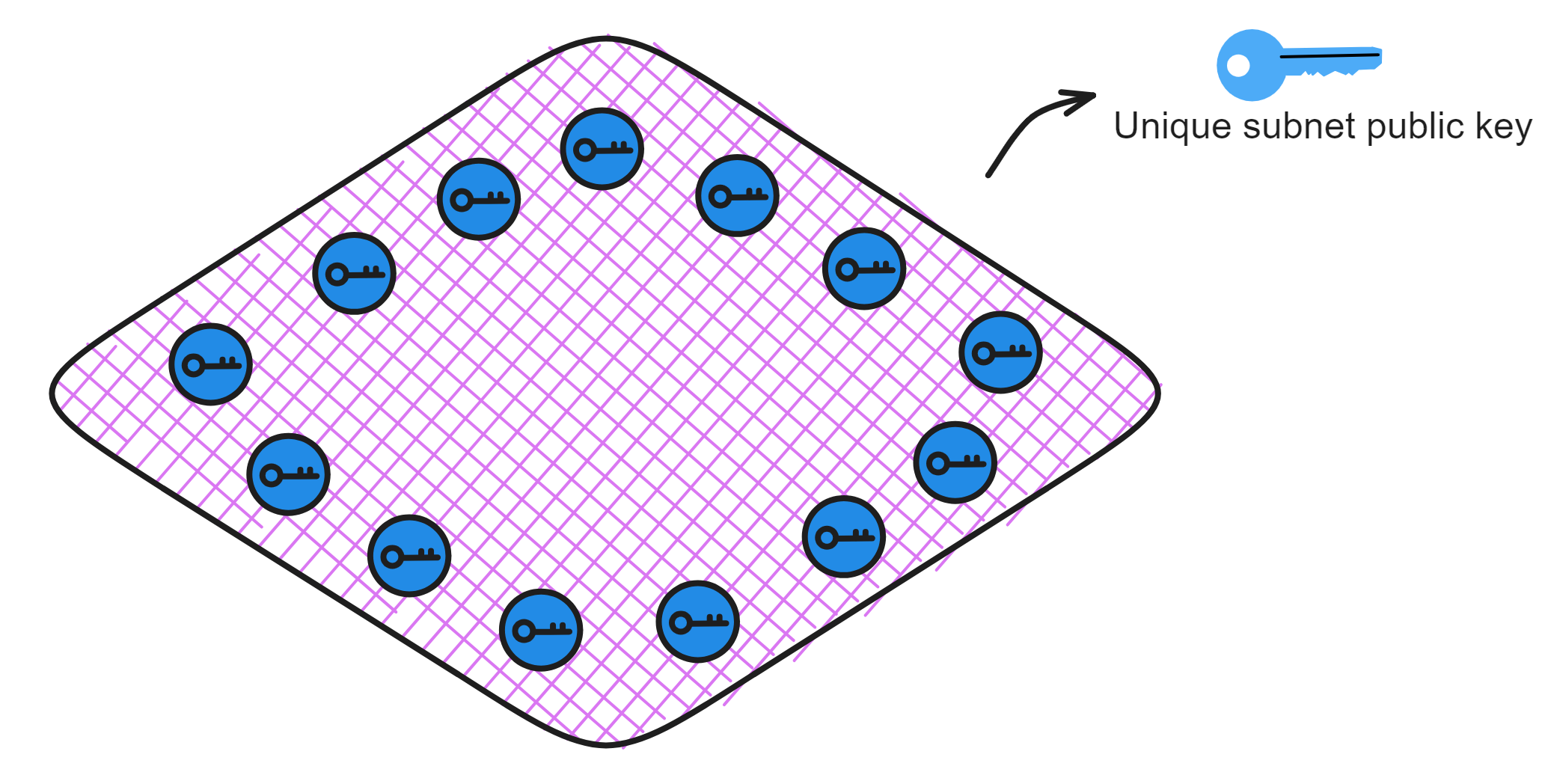

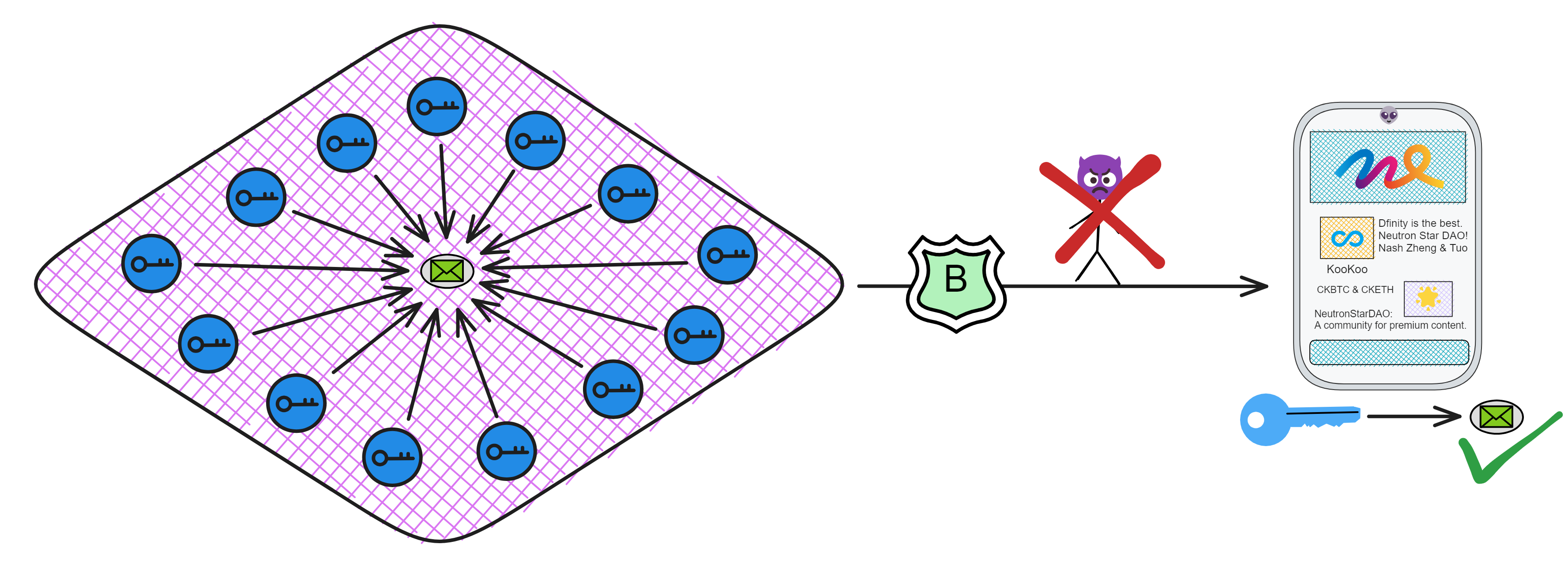

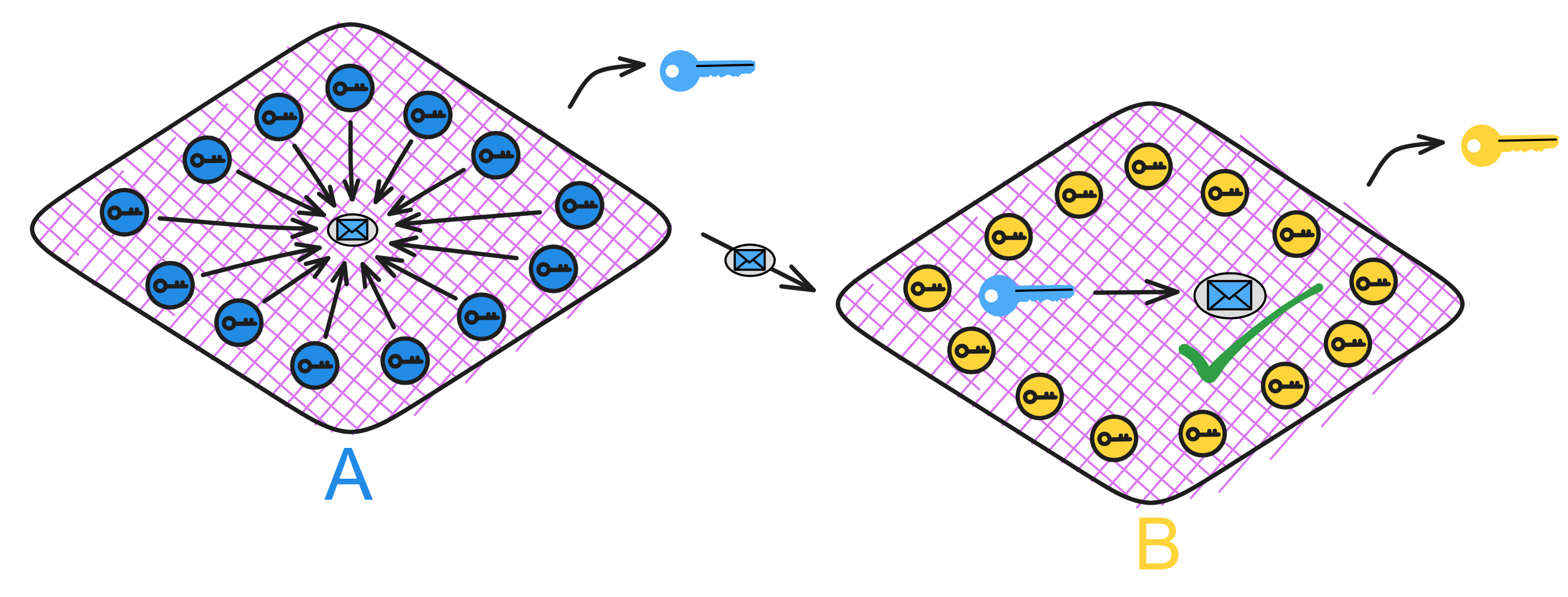

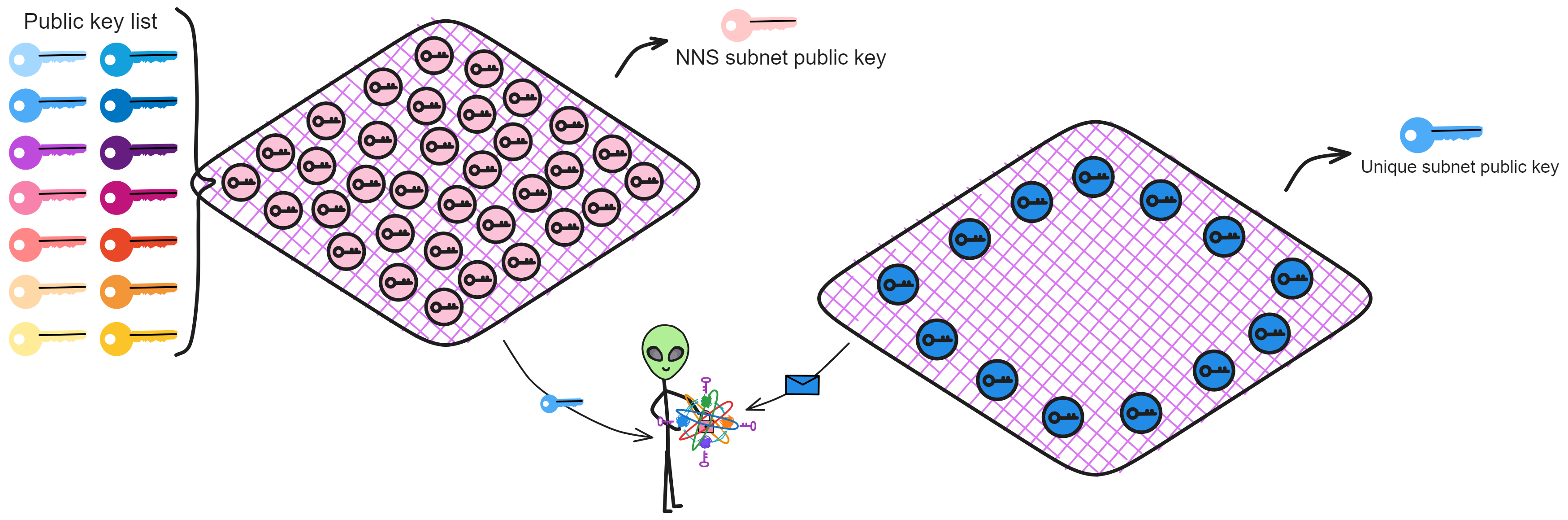

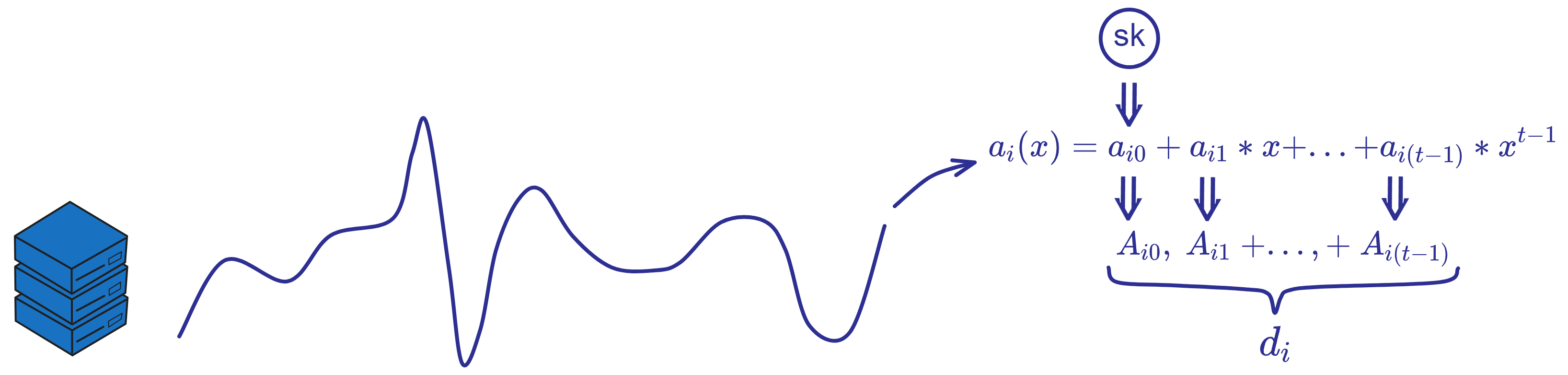

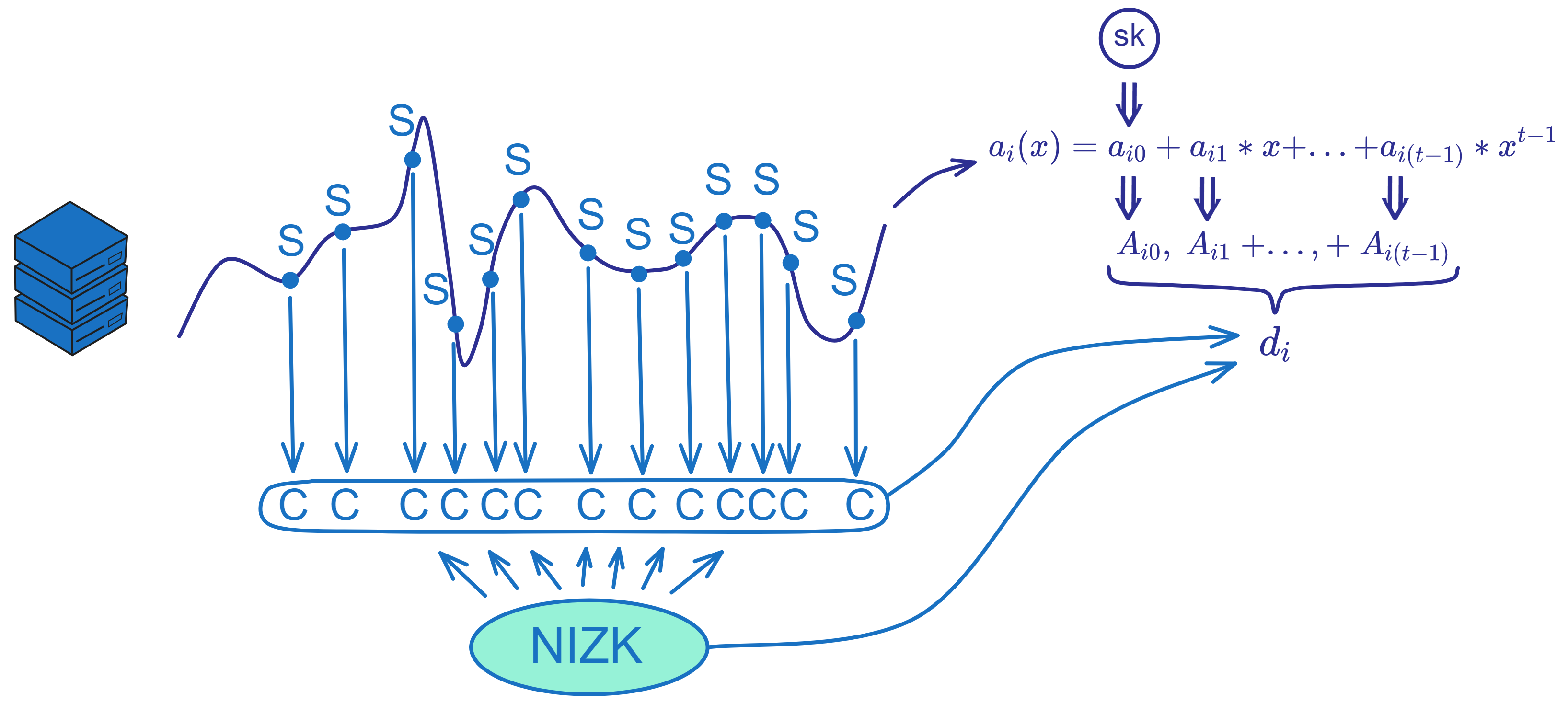

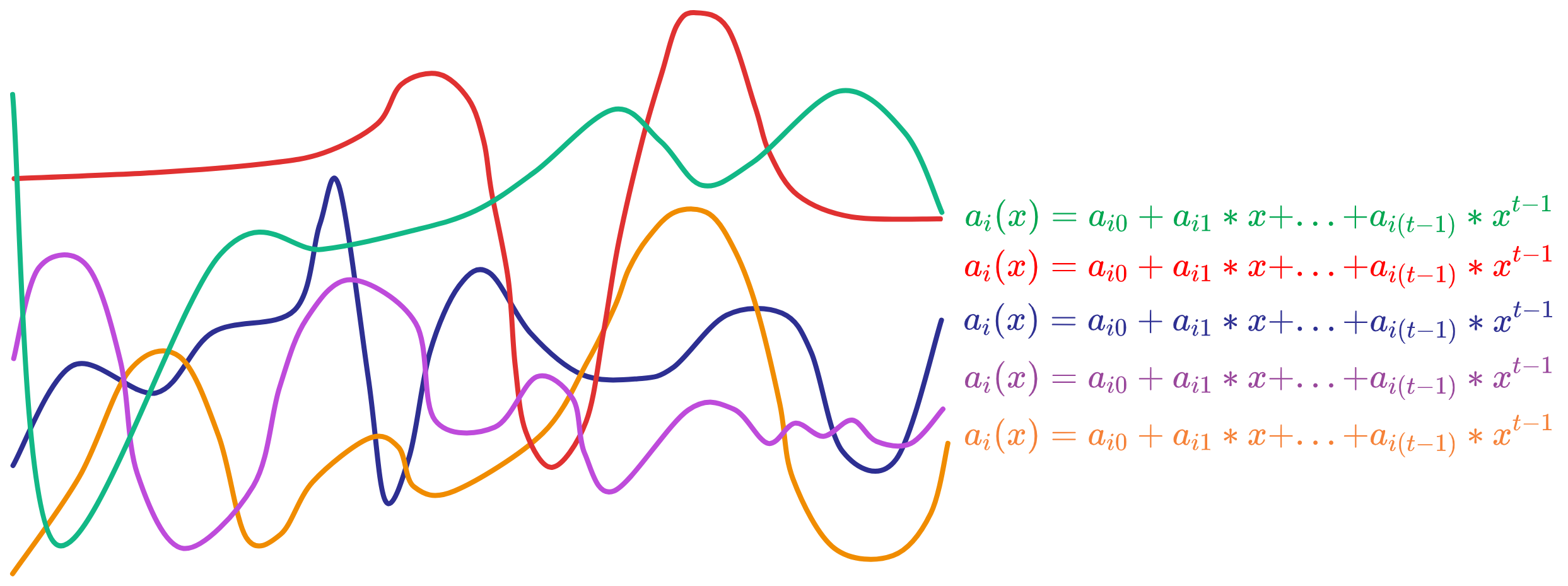

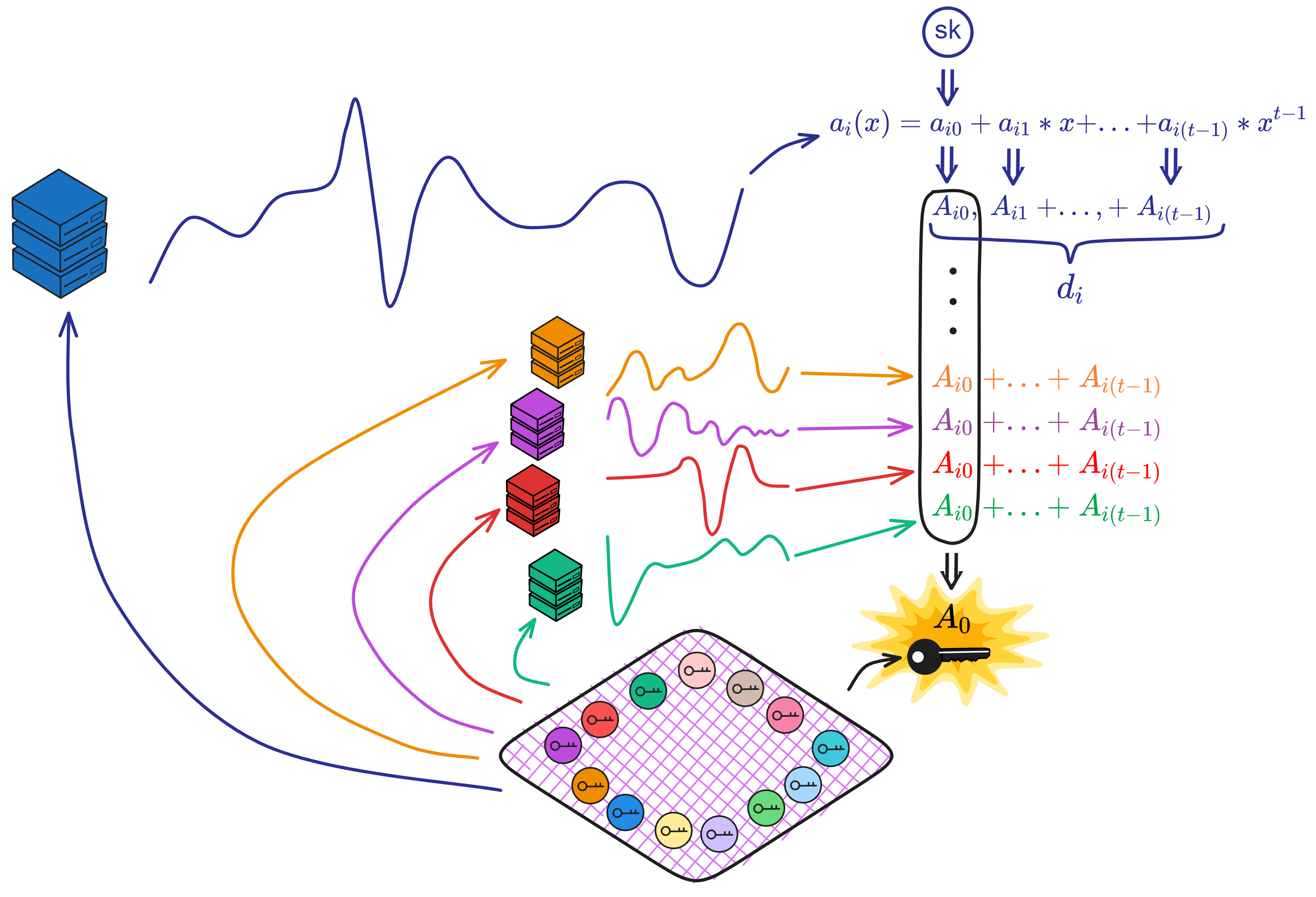

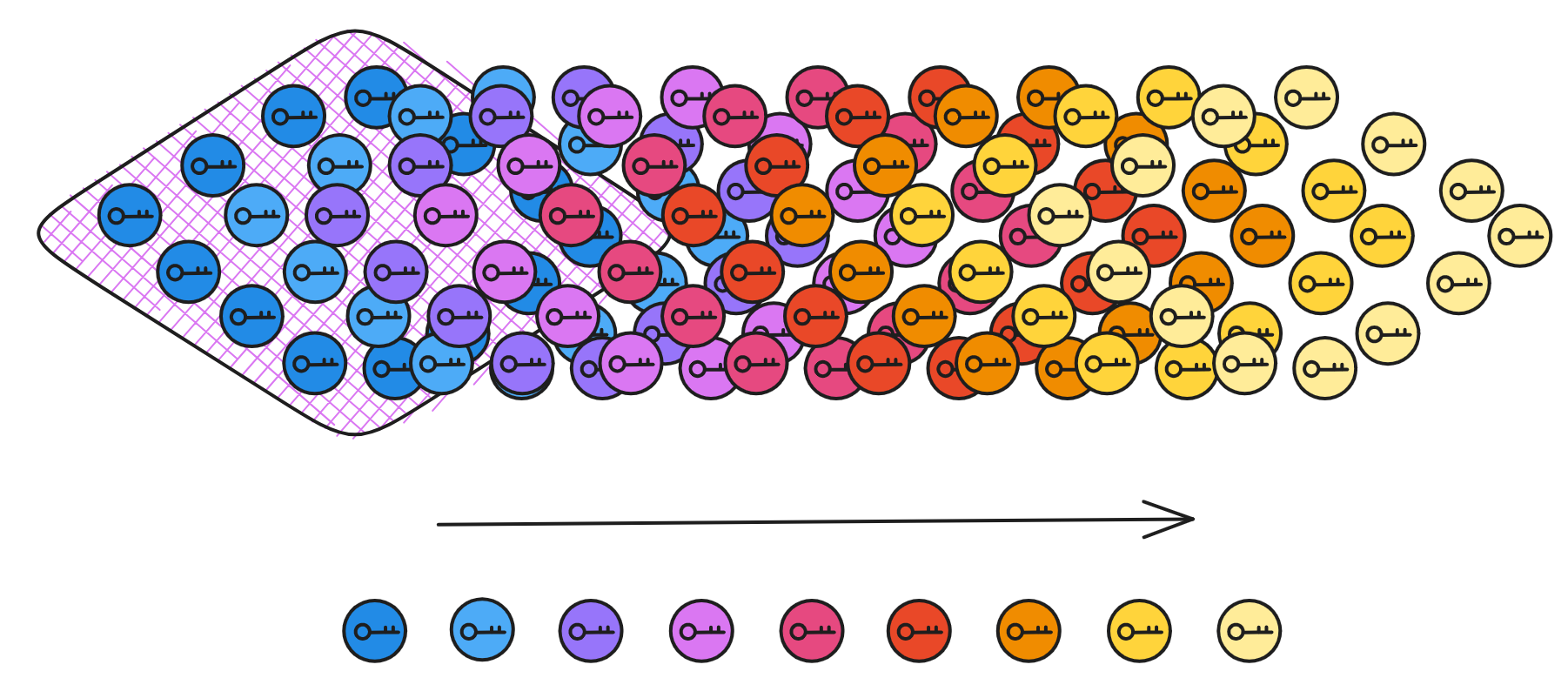

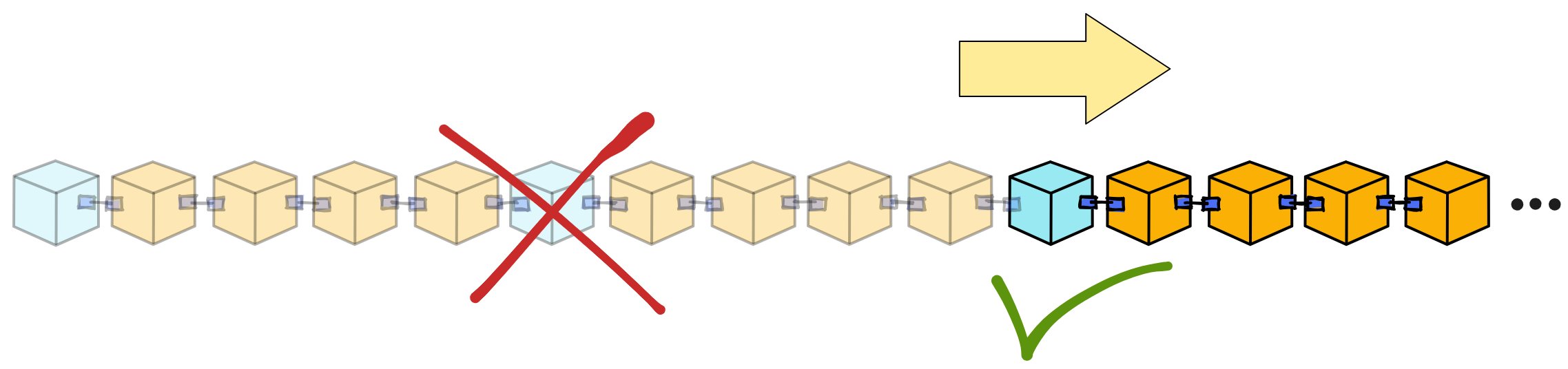

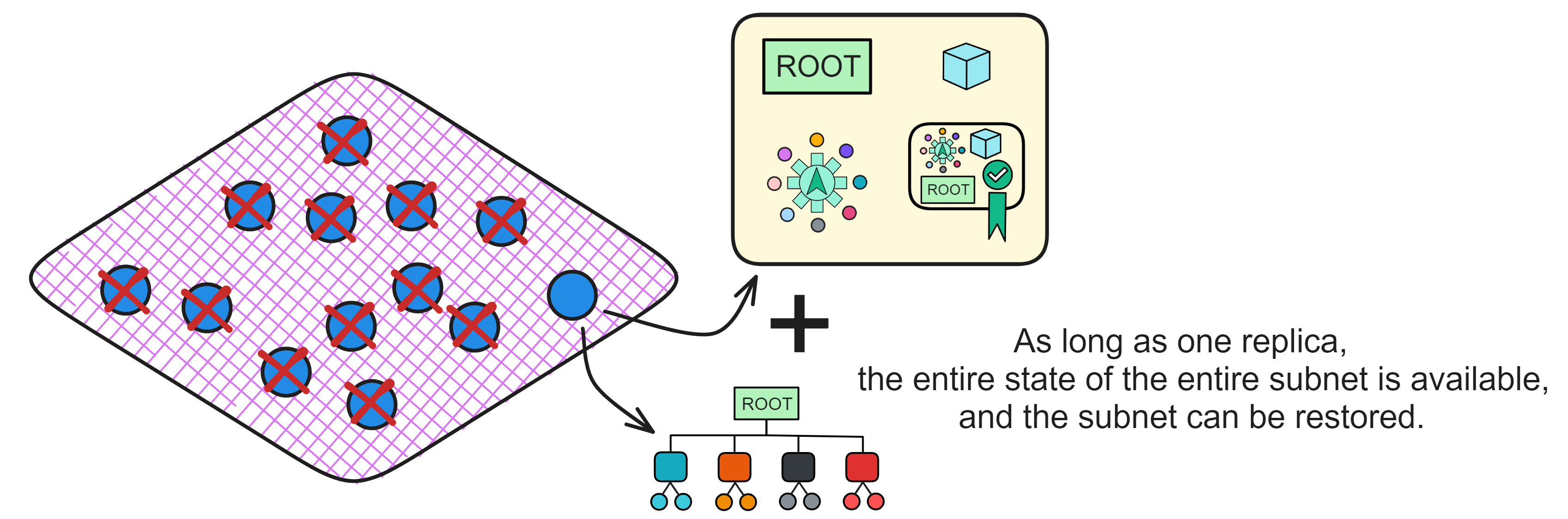

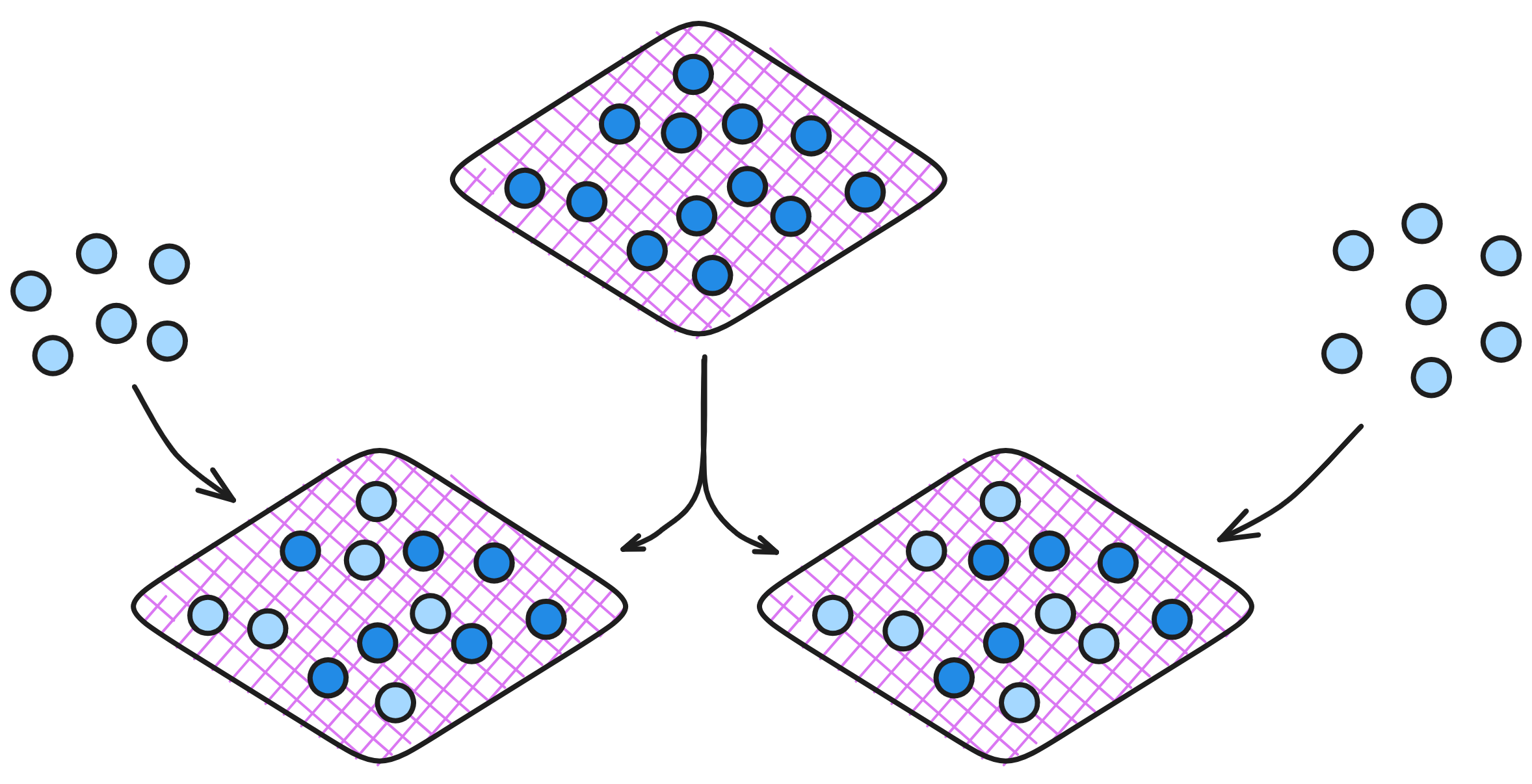

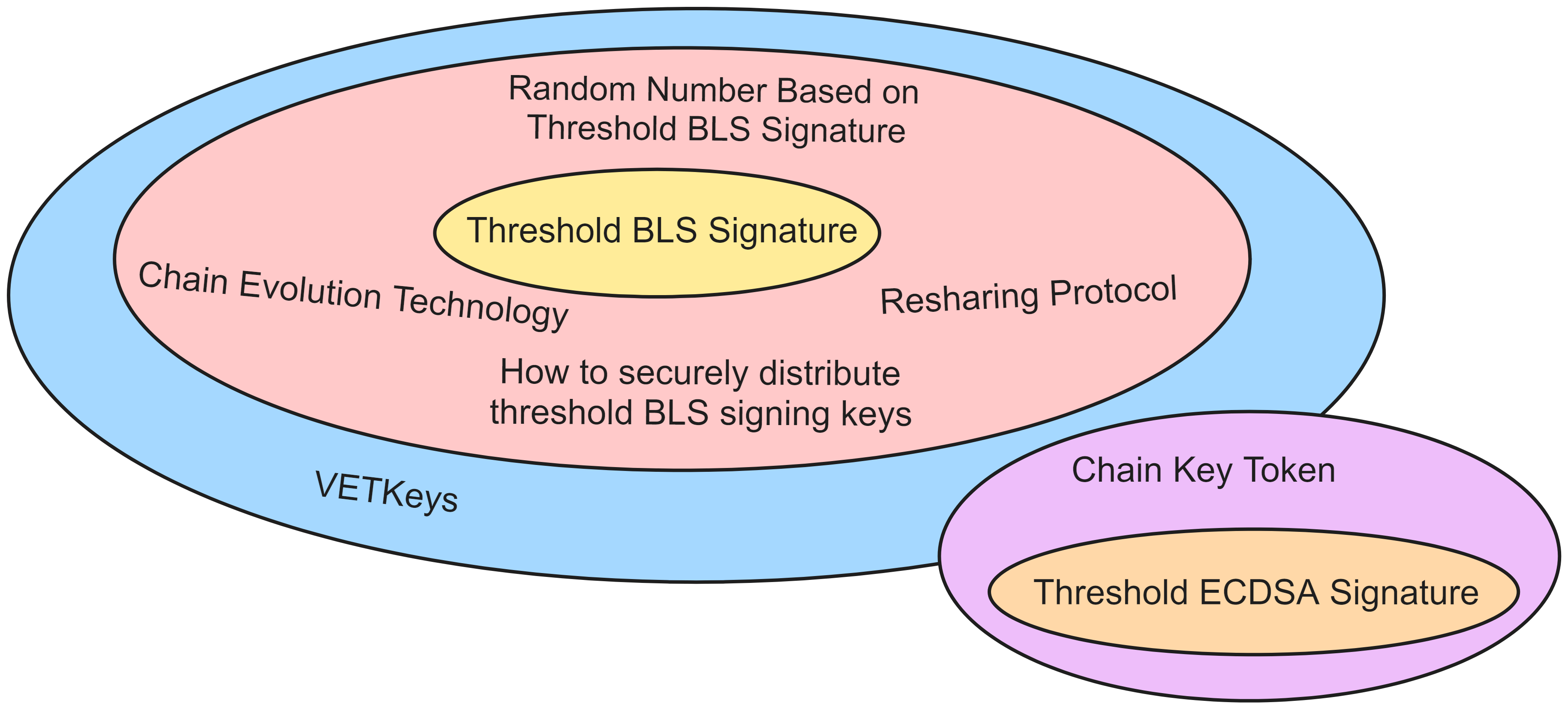

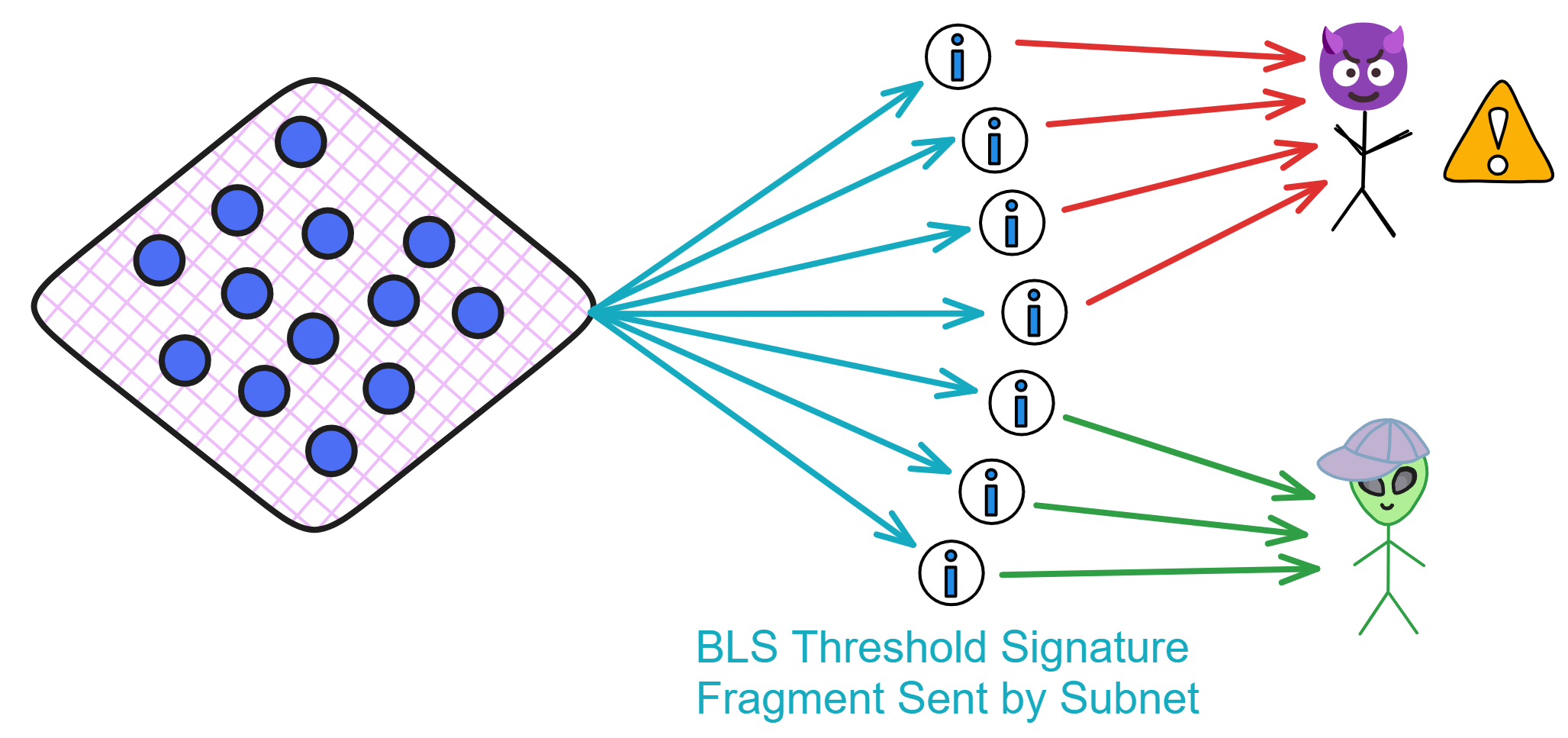

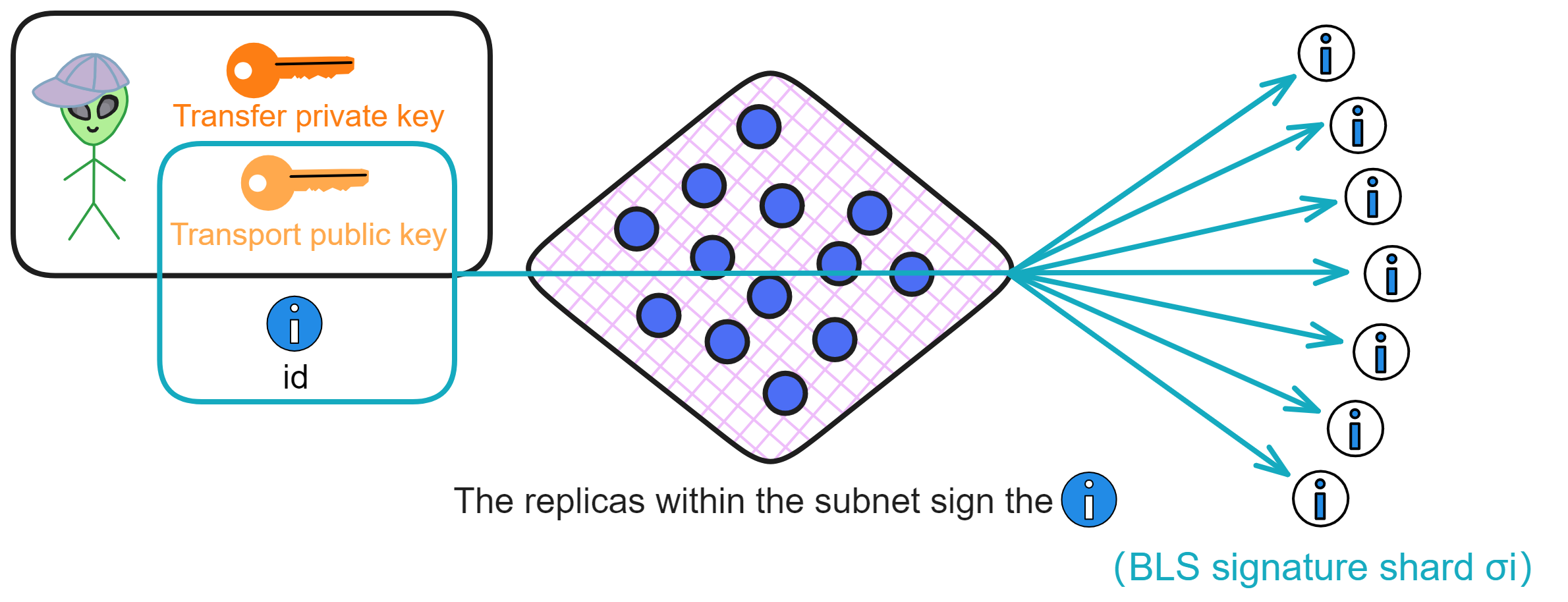

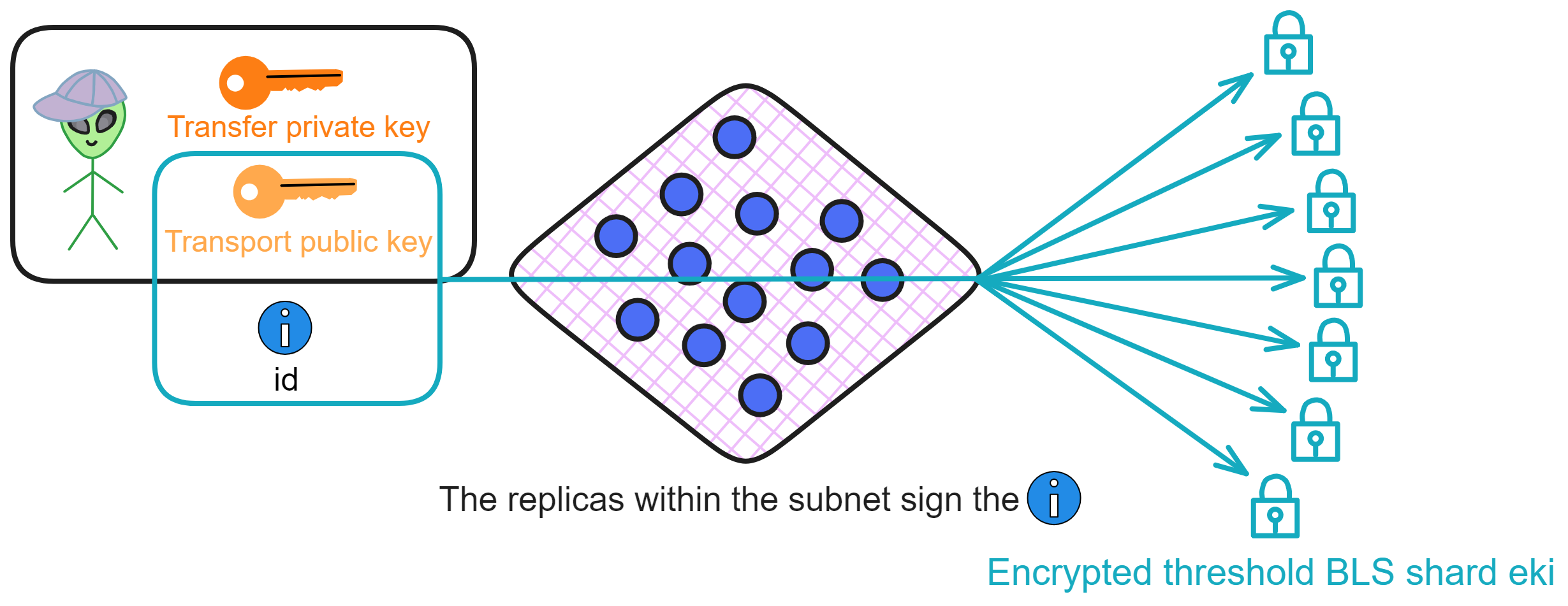

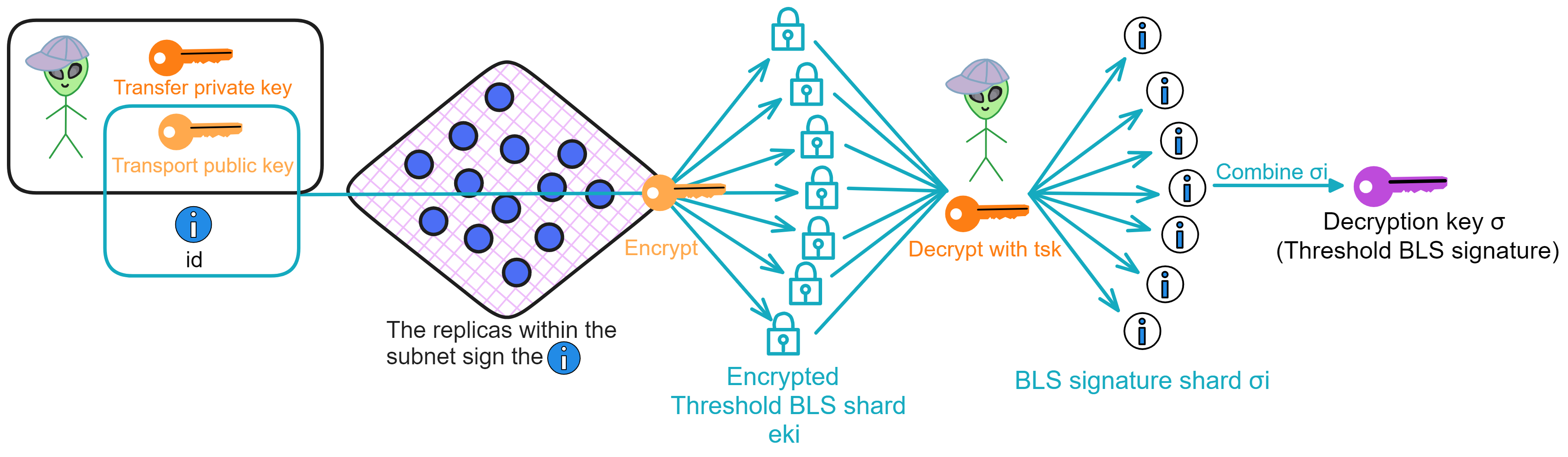

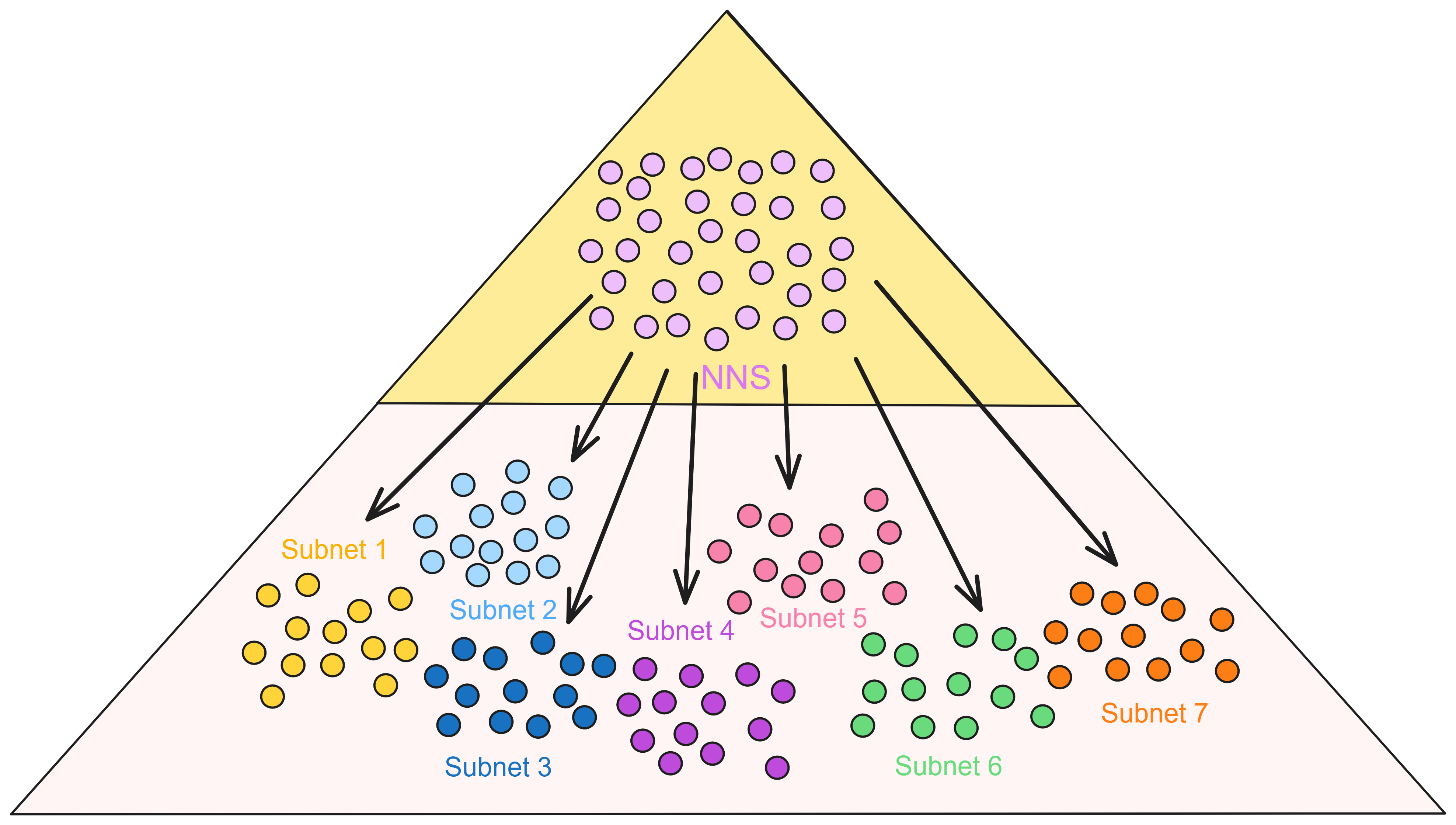

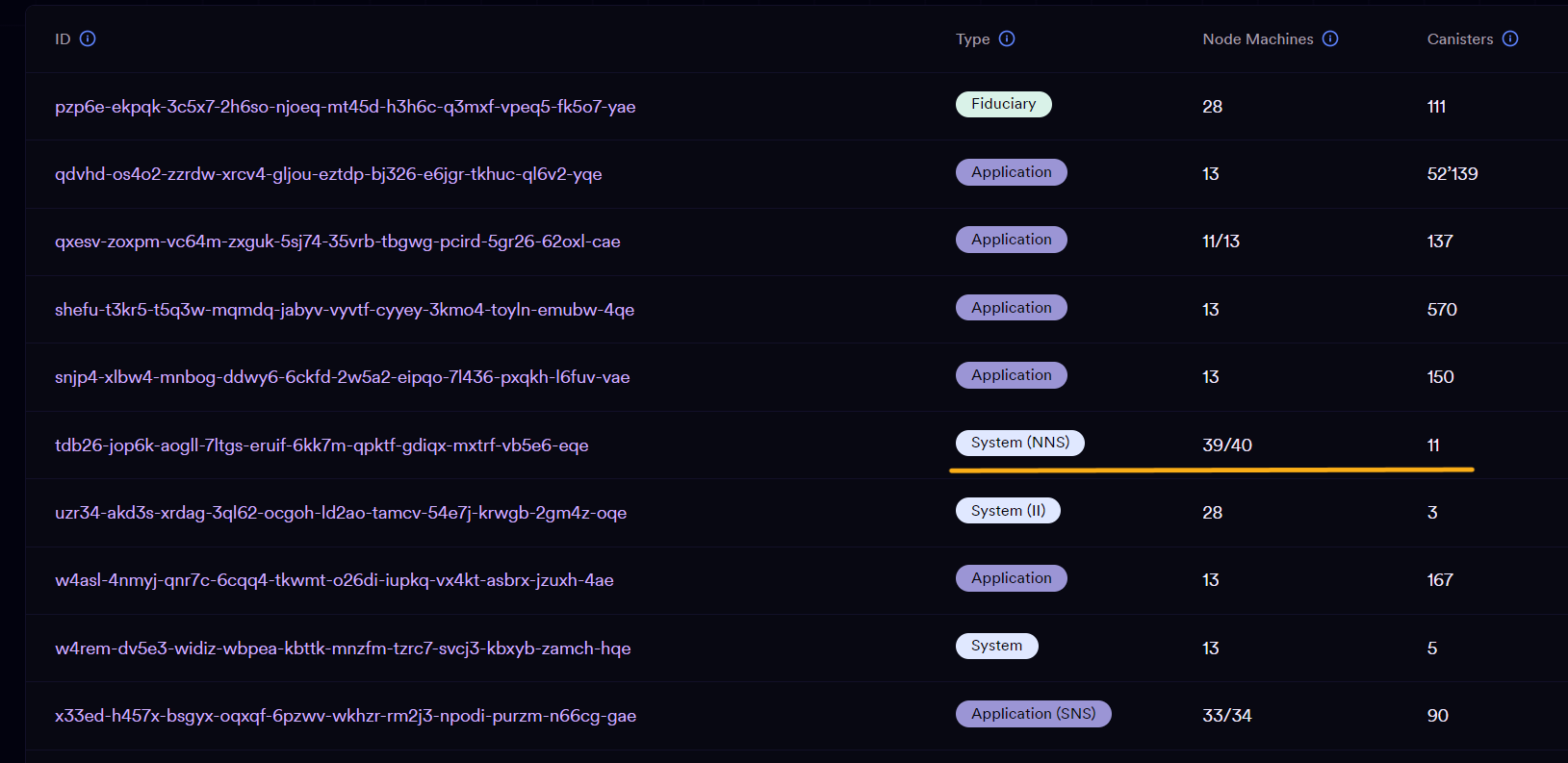

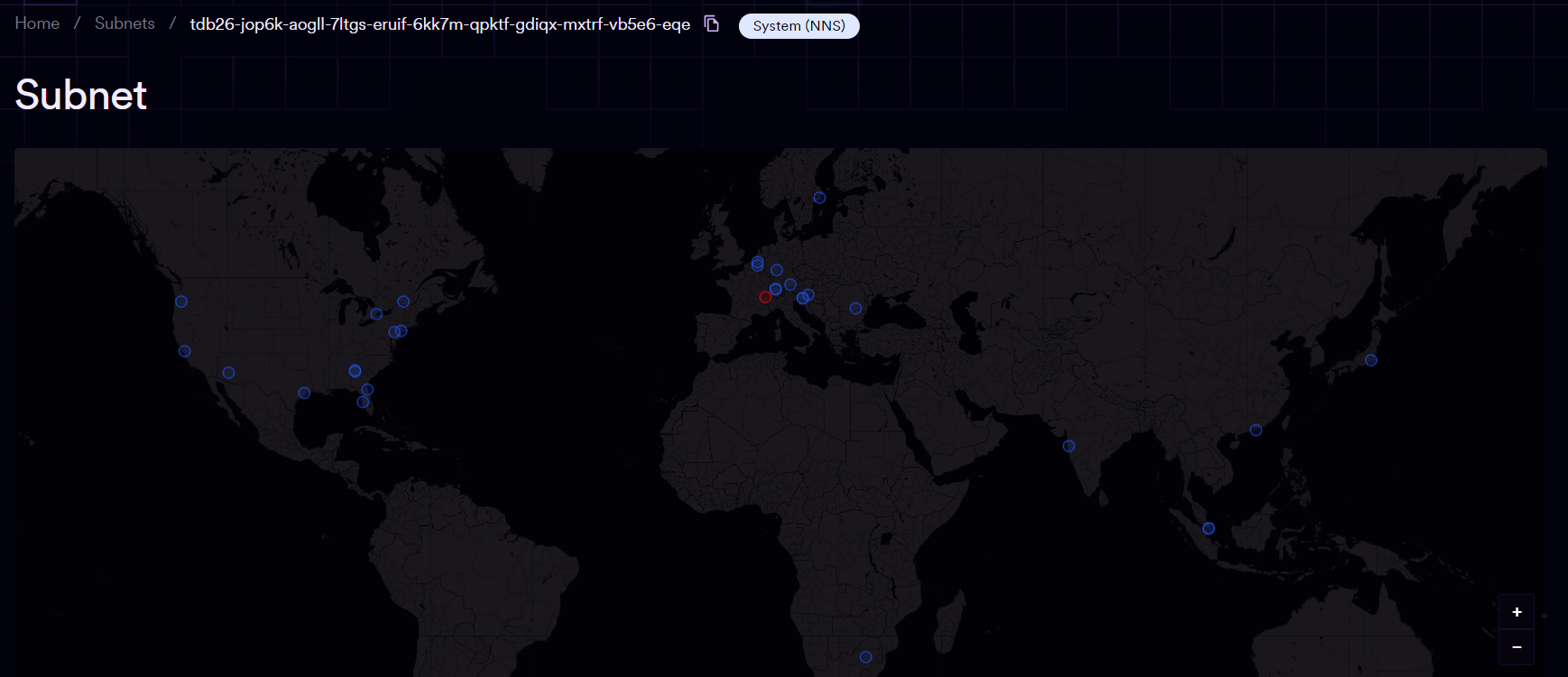

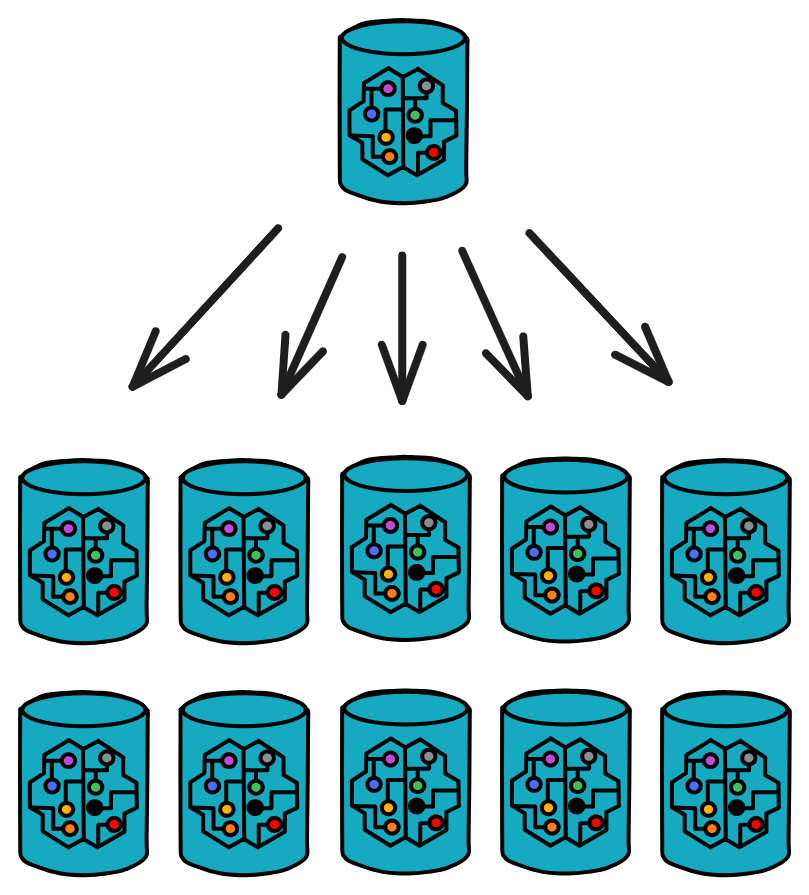

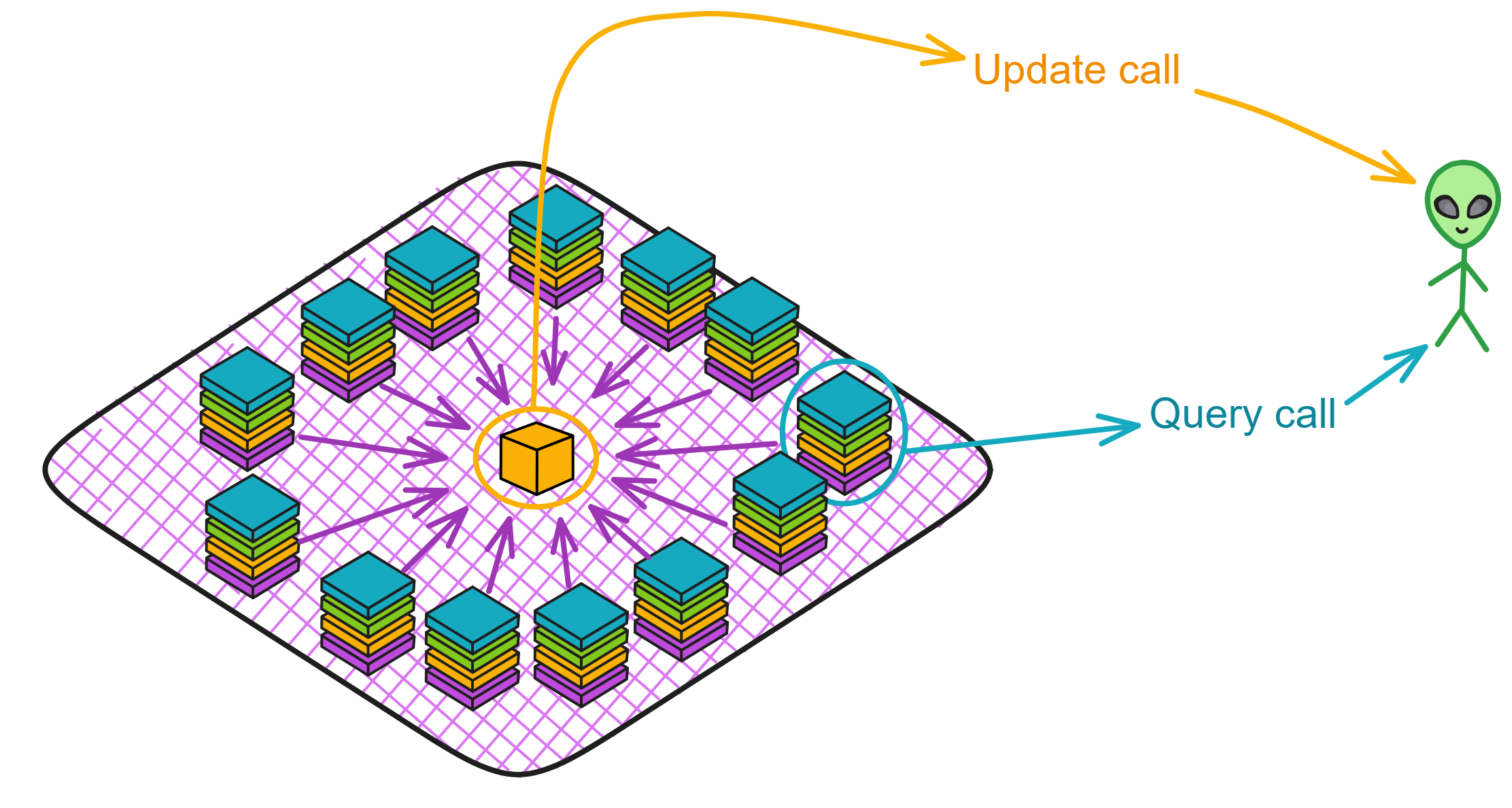

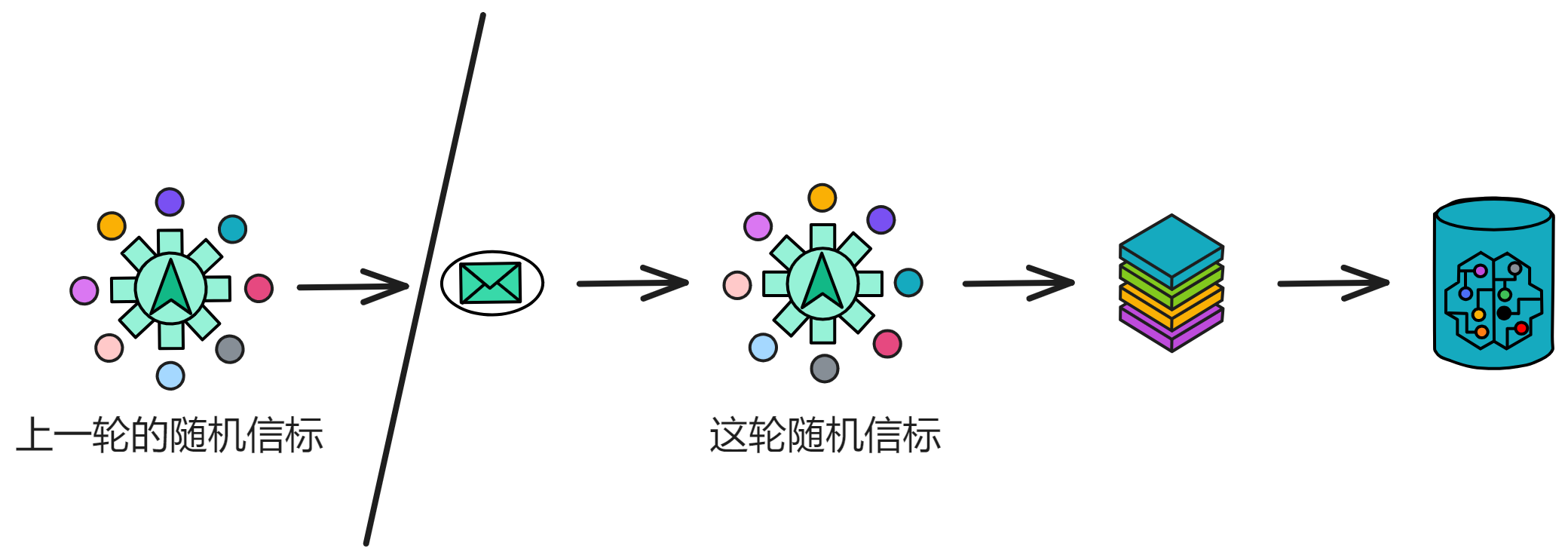

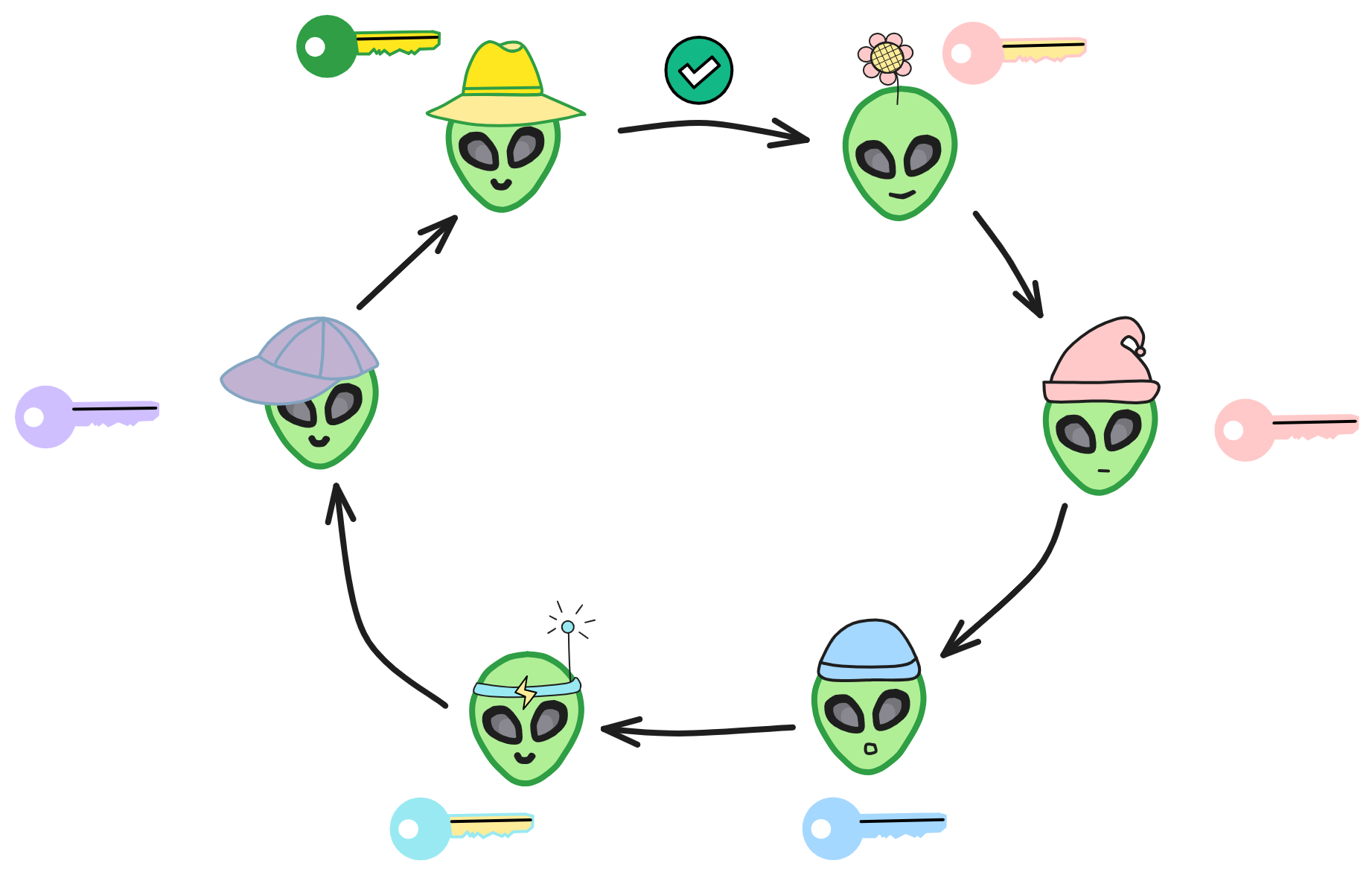

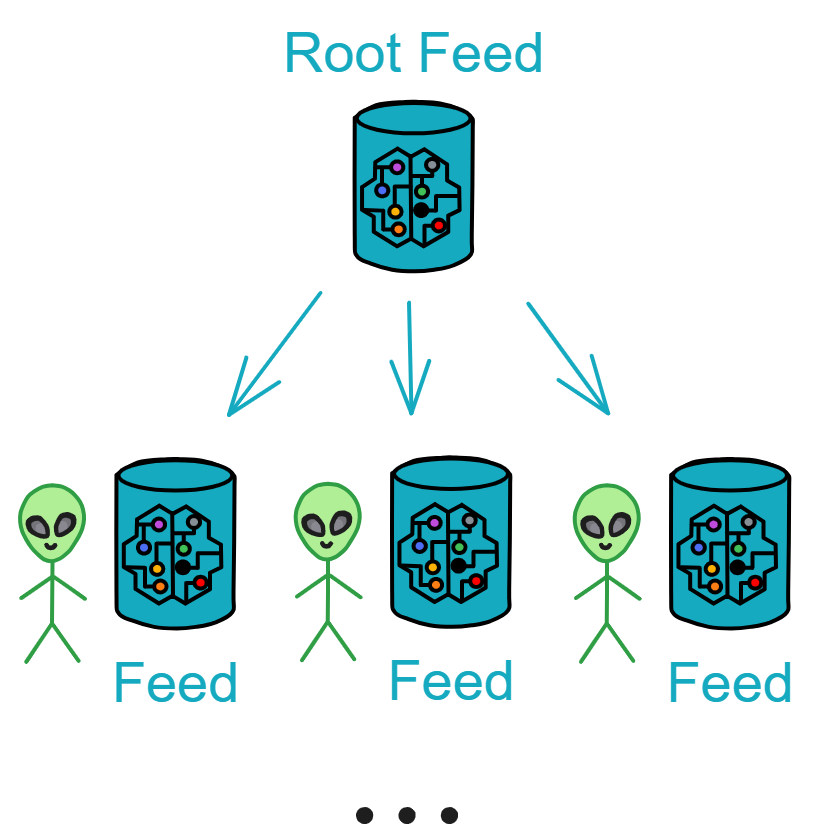

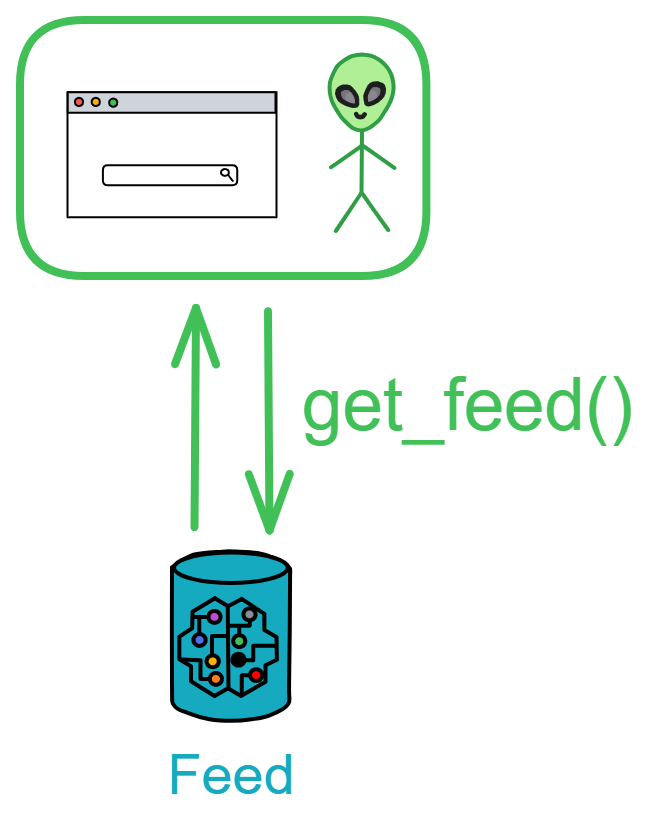

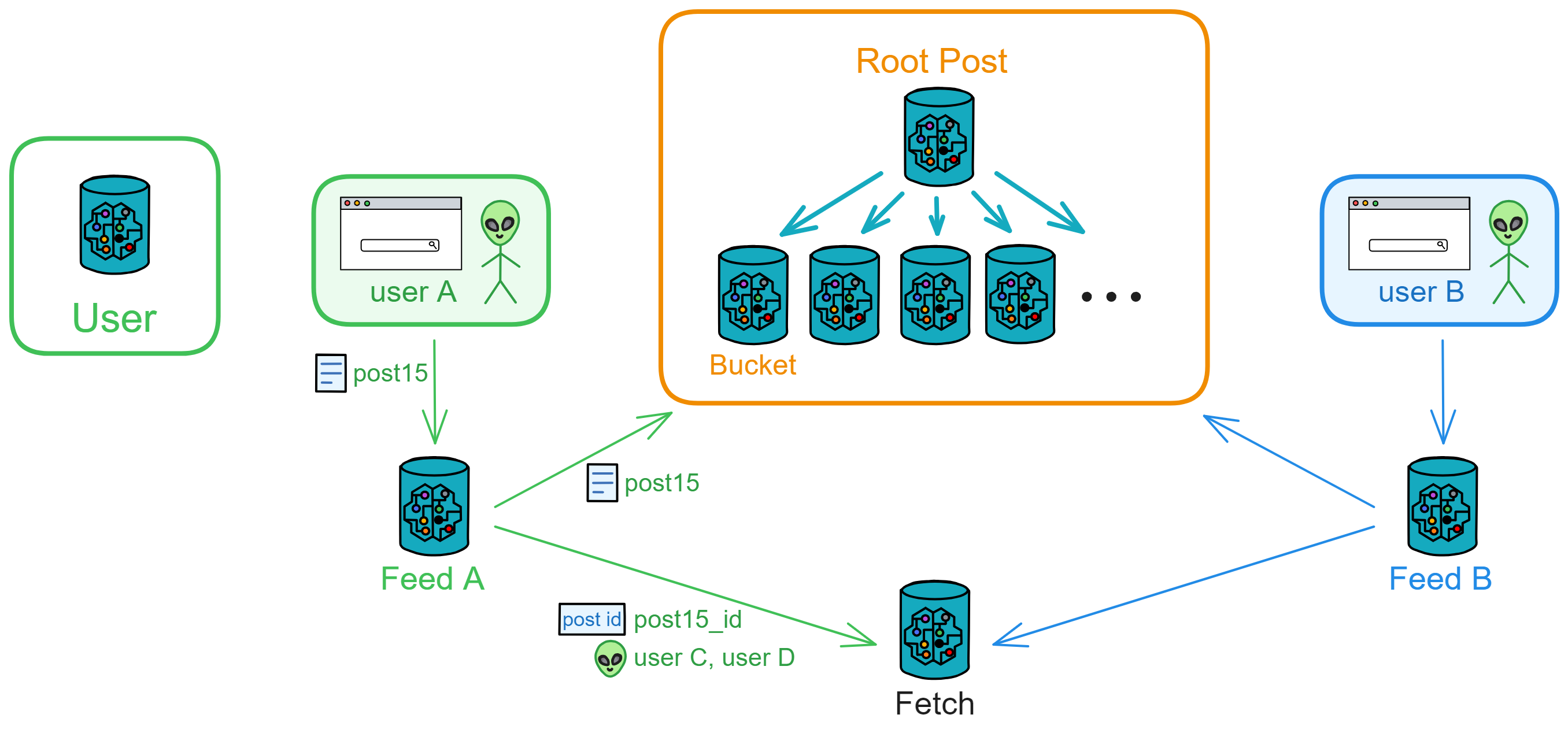

IC has many innovations, such as horizontal scalability that is unlimited in principle. Through Chain Key cryptography, the IC network can keep expanding. The network is managed by a DAO, the Network Nervous System (NNS). All of this requires an unusual consensus algorithm. IC's consensus only orders messages, so that replicas execute them in the same order. It relies on the BLS threshold signature algorithm and non-interactive distributed key generation (DKG) to randomly select who produces blocks, which makes consensus very fast. This gives IC higher TPS, millisecond-level queries and second-level data updates, so the experience of using Dapps is much smoother than on other public chains.
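To see why "only ordering messages" is enough, here is a minimal Python sketch of the idea. It is a toy model, not IC code: the `Replica` class, the message format and the `run` helper are all hypothetical. It assumes execution is deterministic, so replicas that receive the same messages in the same order end in the same state.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """A toy replica holding a key-value state (hypothetical, not IC code)."""
    state: dict = field(default_factory=dict)

    def execute(self, message: tuple) -> None:
        # Each message is (operation, key, amount); execution is deterministic.
        op, key, amount = message
        if op == "set":
            self.state[key] = amount
        elif op == "add":
            self.state[key] = self.state.get(key, 0) + amount
        elif op == "double":
            self.state[key] = self.state.get(key, 0) * 2


def run(messages: list) -> dict:
    """Execute a given message order on a fresh replica and return its state."""
    replica = Replica()
    for message in messages:
        replica.execute(message)
    return replica.state


ordered = [("set", "alice", 10), ("add", "alice", 5), ("double", "alice", 0)]

# Three replicas fed the same order end in the same state.
states = [run(ordered) for _ in range(3)]

# A replica that sees a different order can diverge, which is why
# agreeing on the order is the part that matters.
reordered = [ordered[0], ordered[2], ordered[1]]
diverged = run(reordered)
```

In this sketch the three replicas agree on `{"alice": 30}`, while the reordered run ends at `{"alice": 25}`. Consensus never has to vote on the result itself, only on the order.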

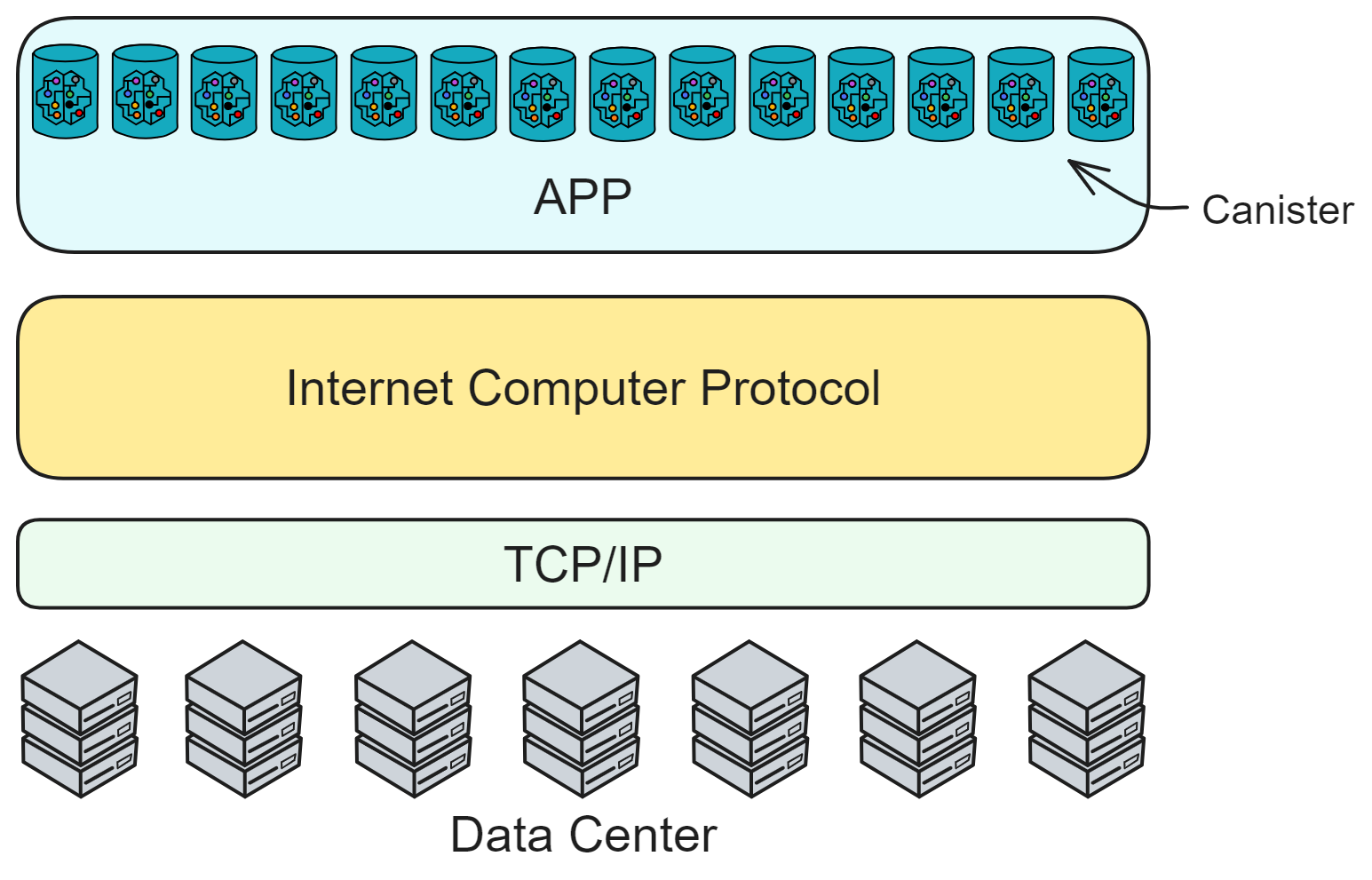

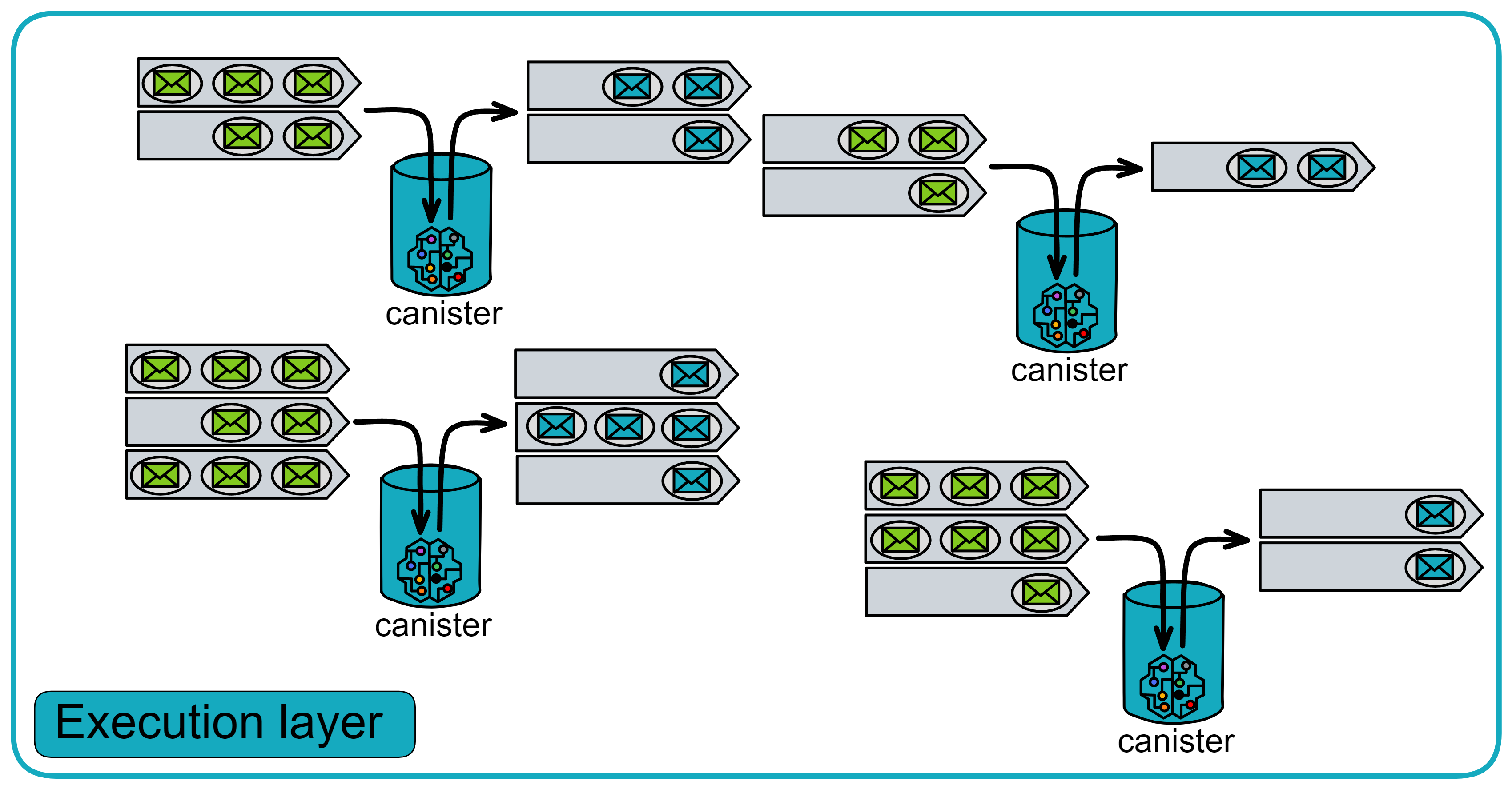

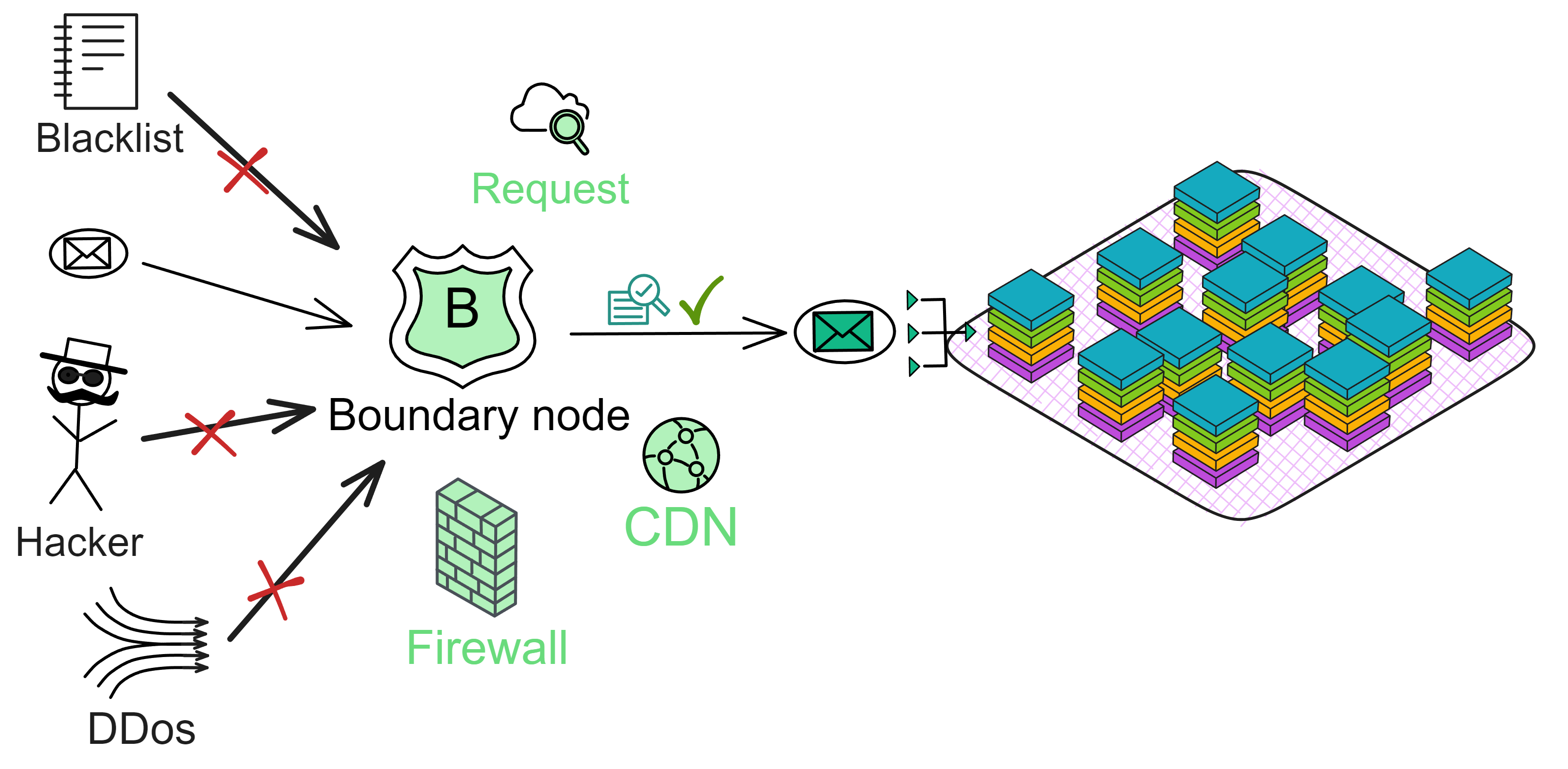

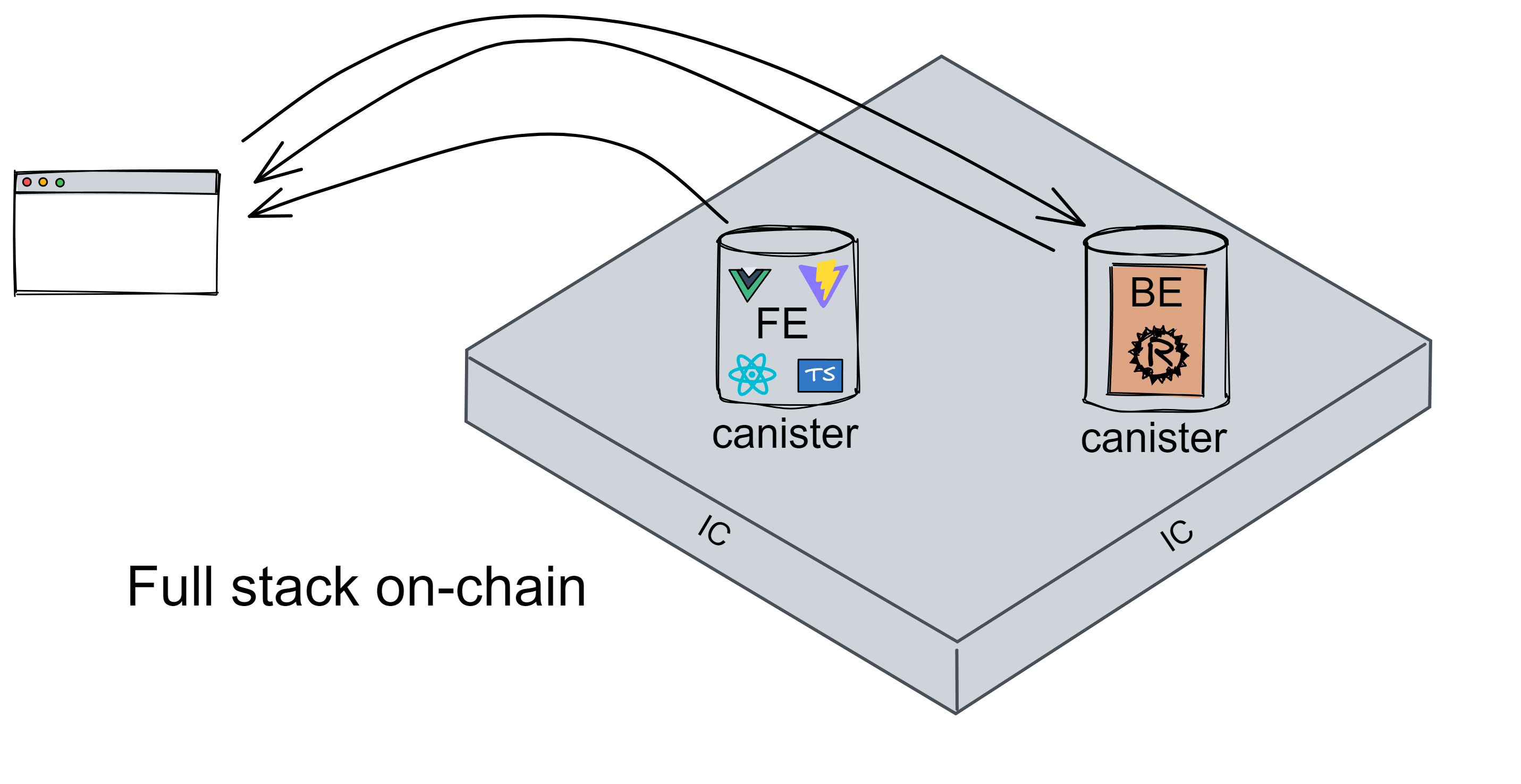

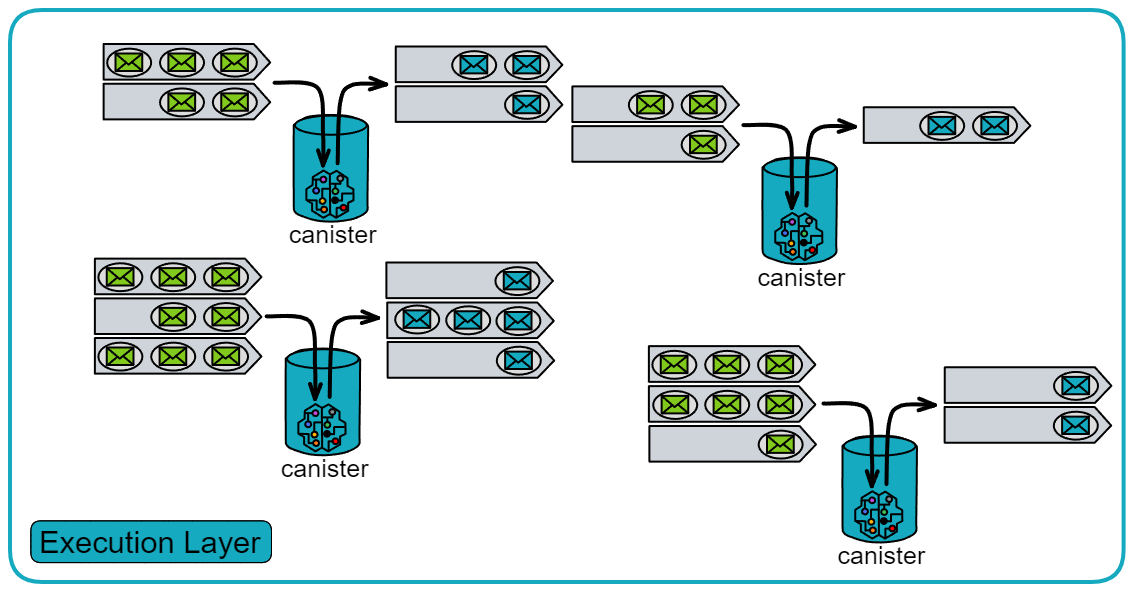

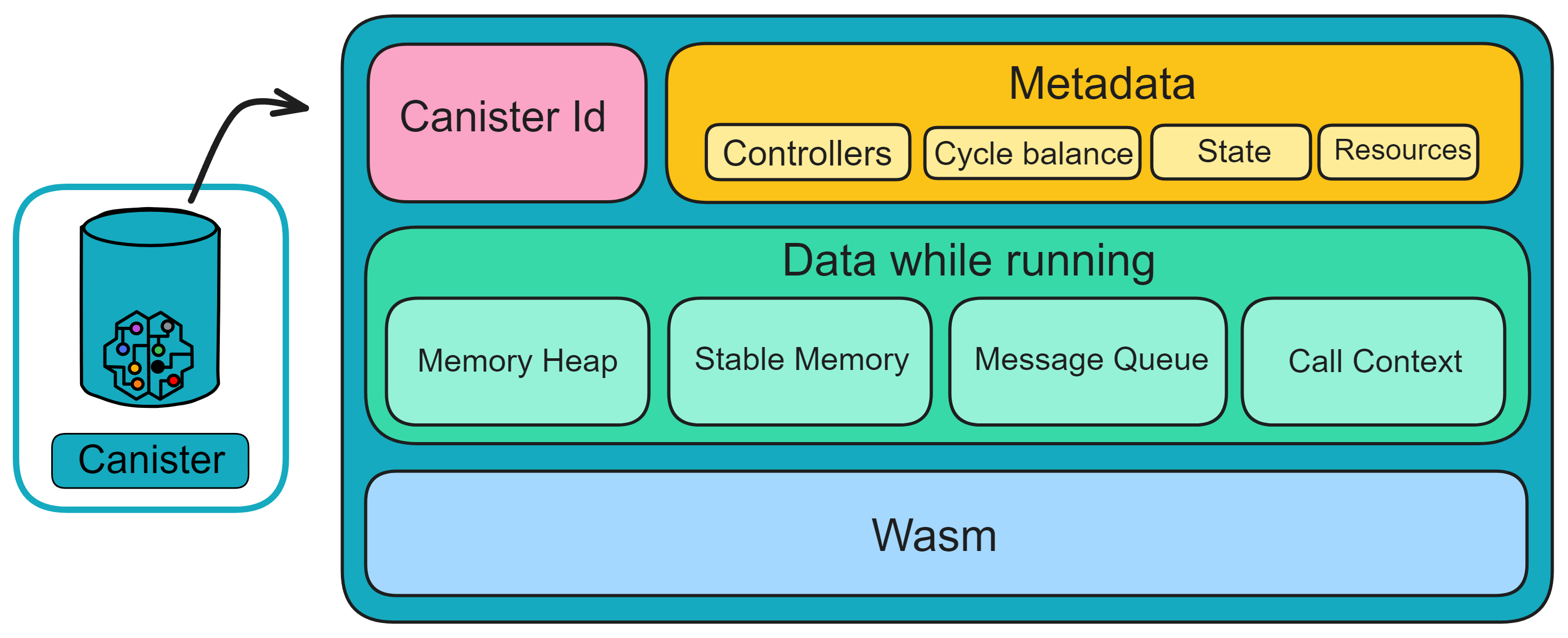

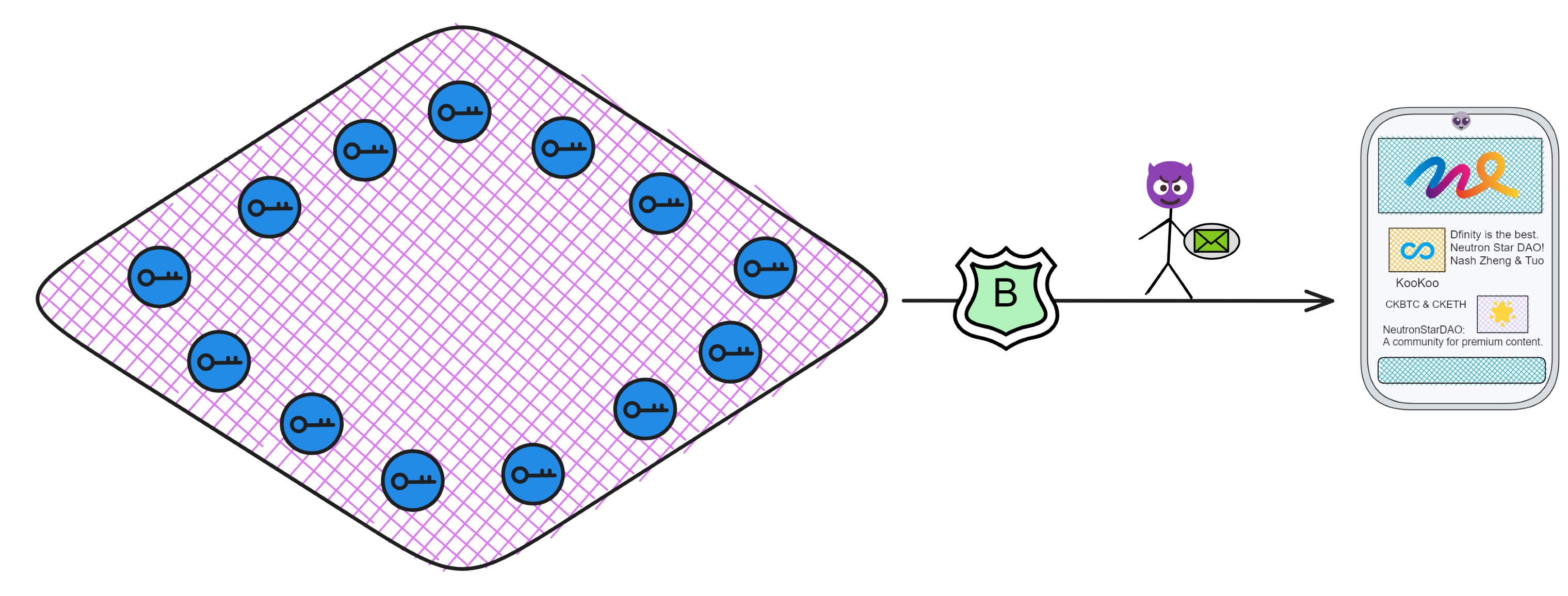

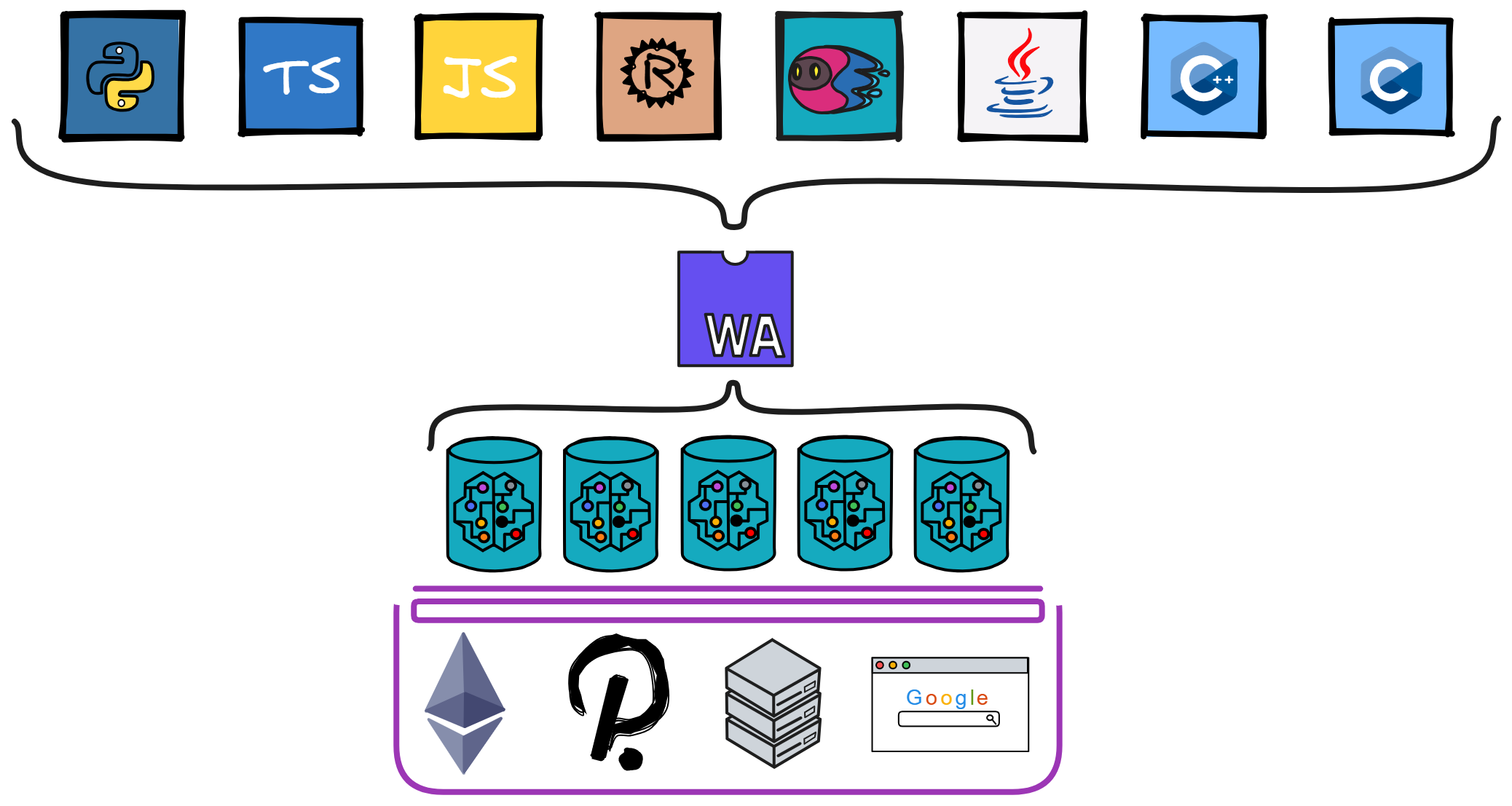

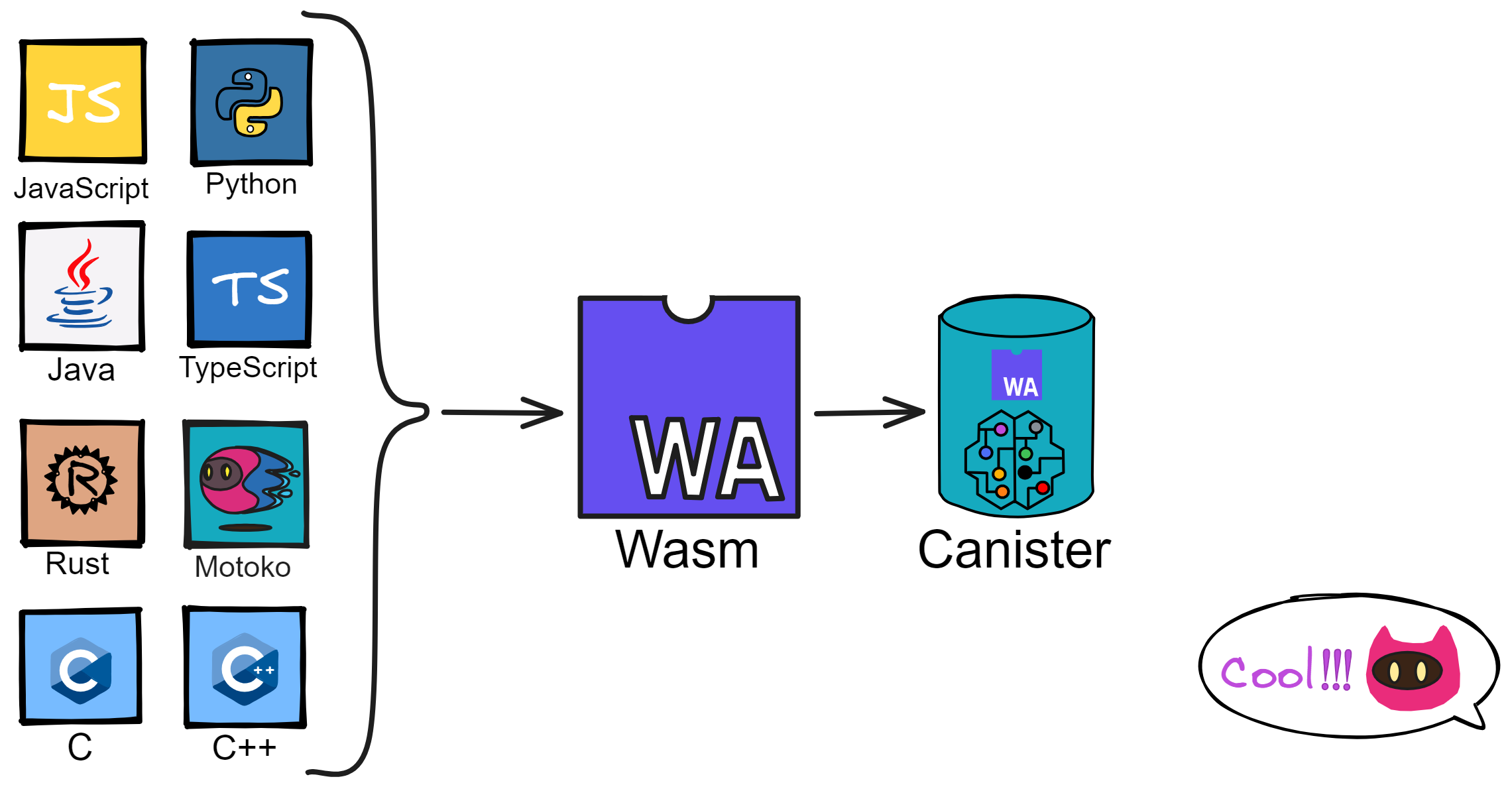

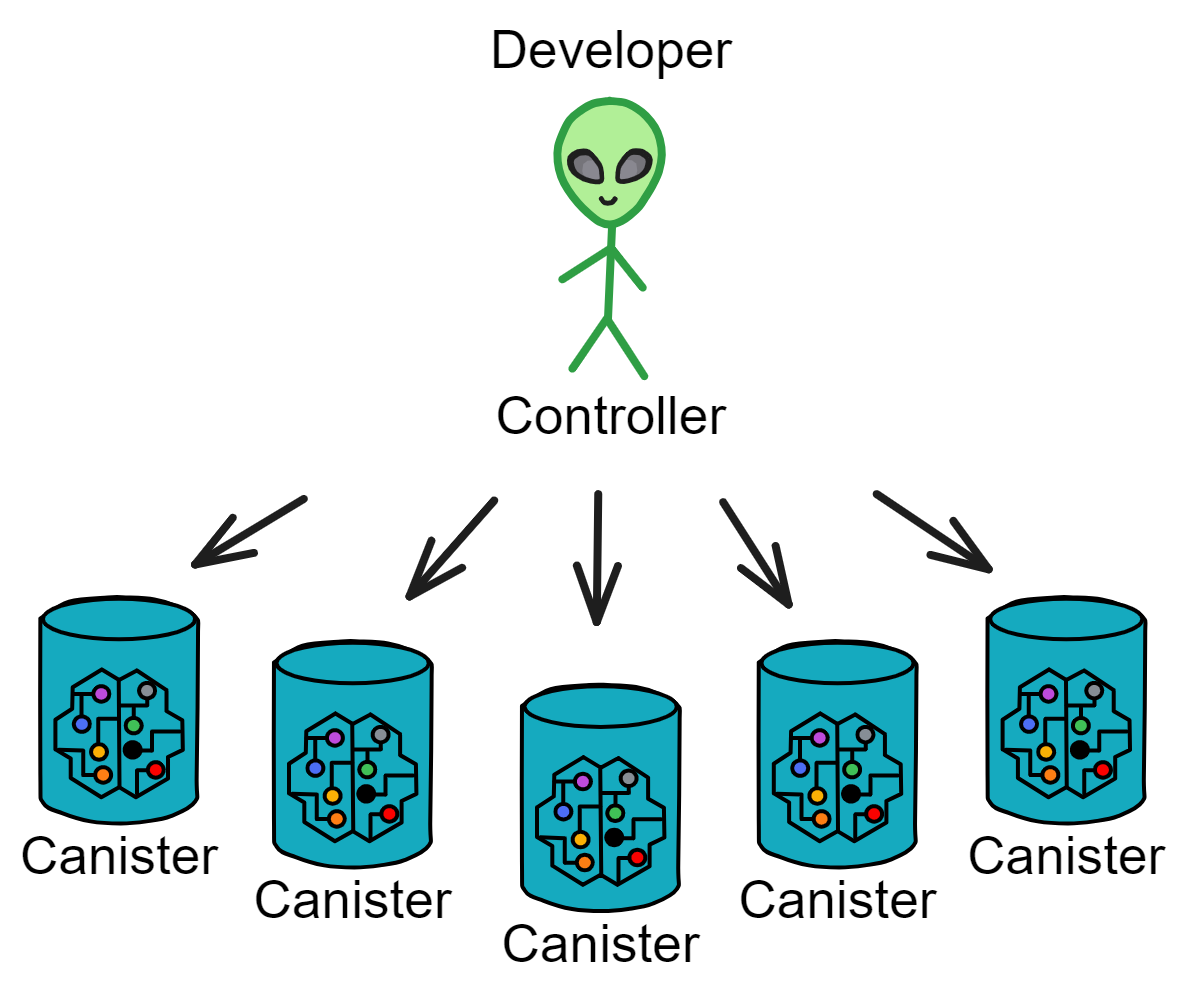

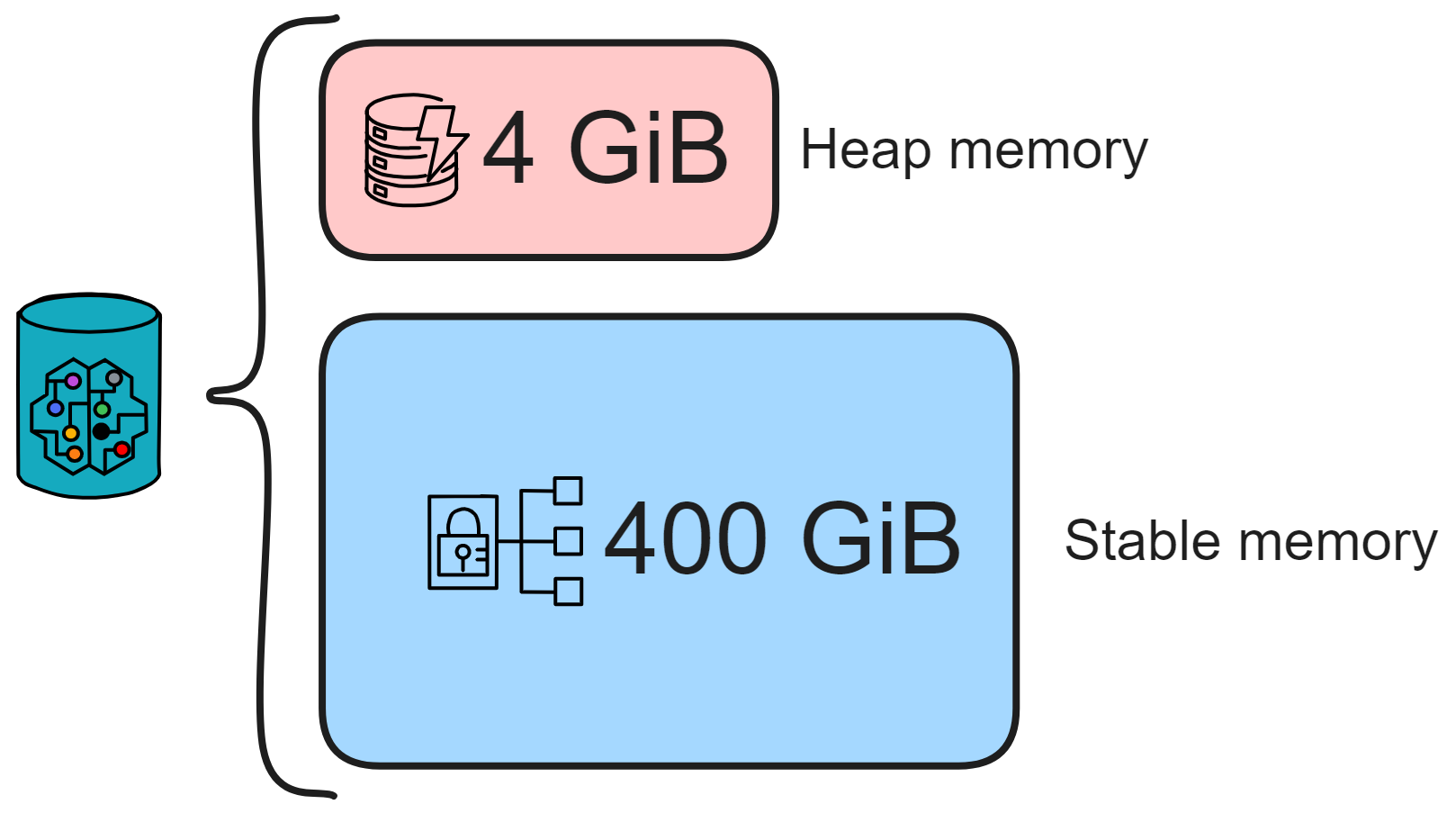

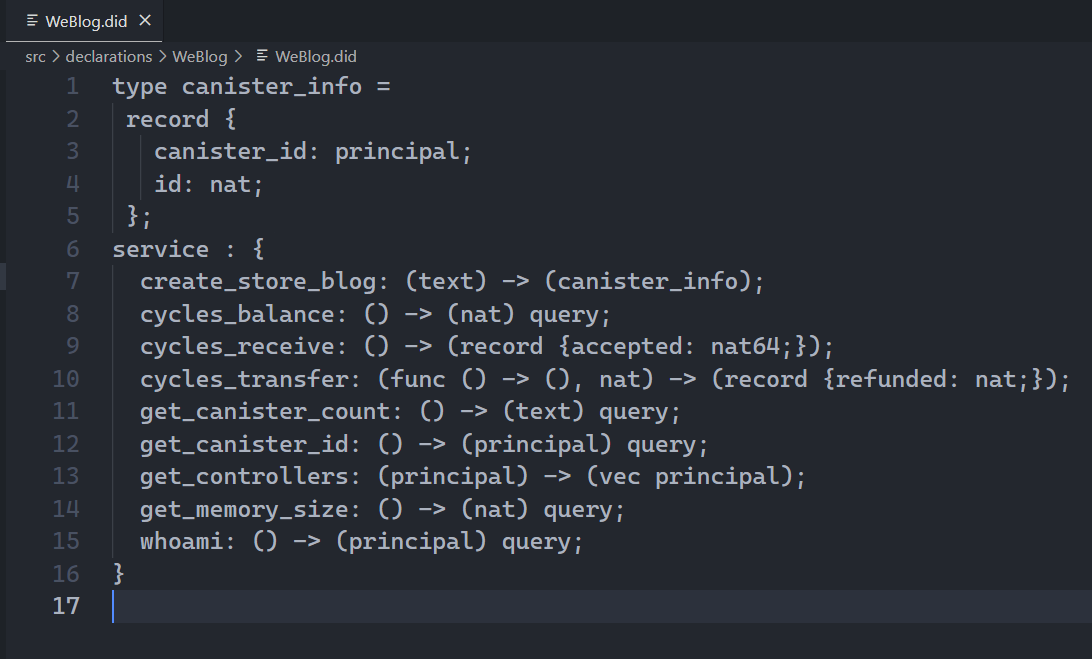

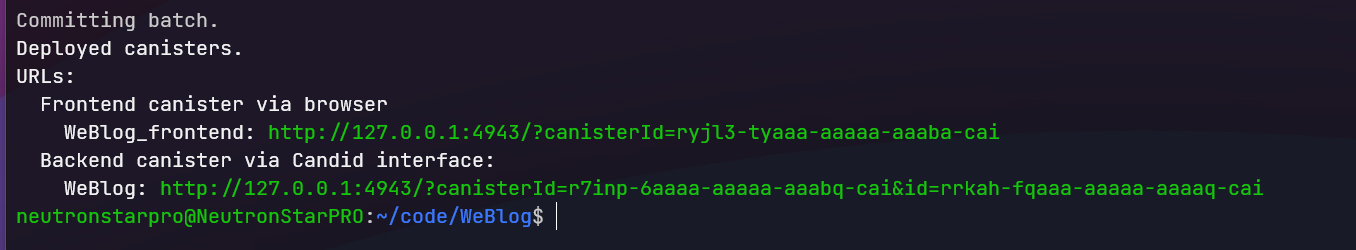

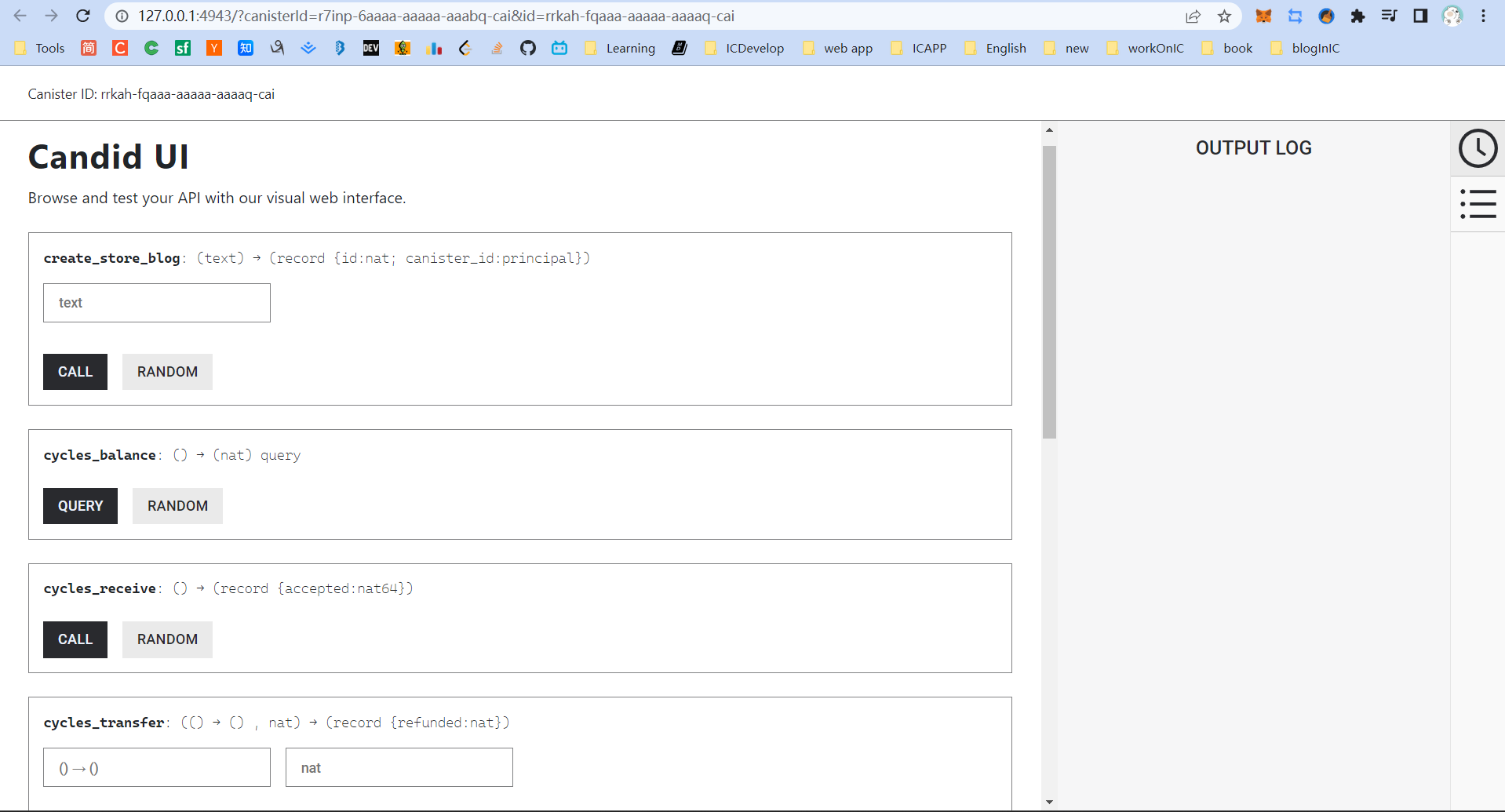

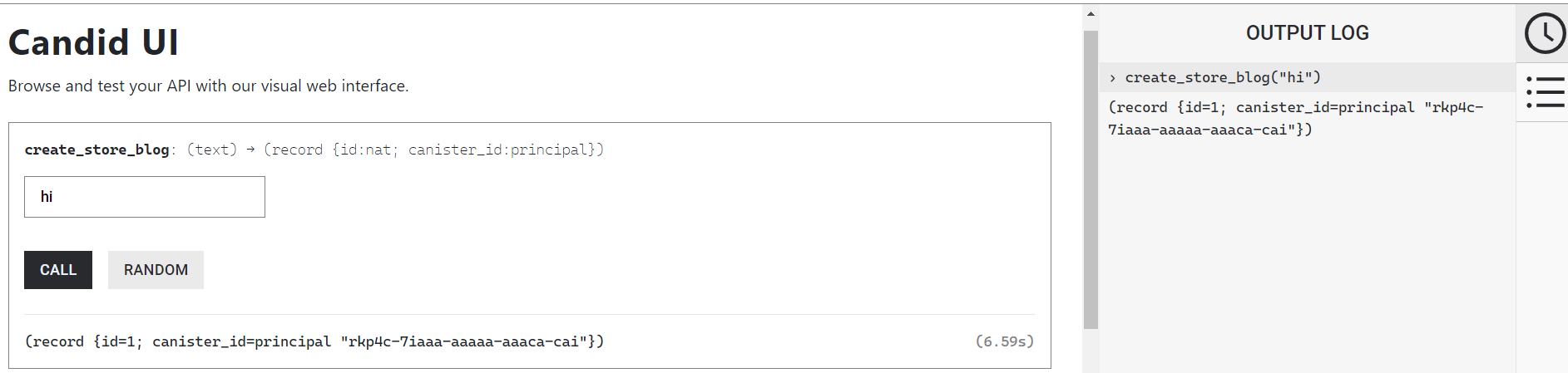

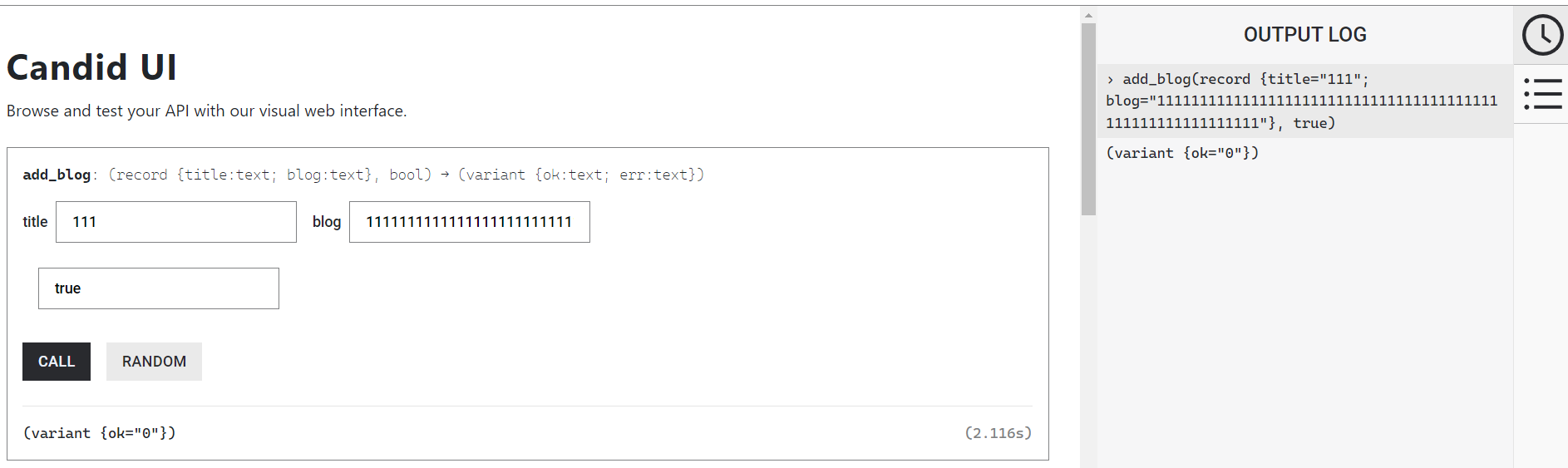

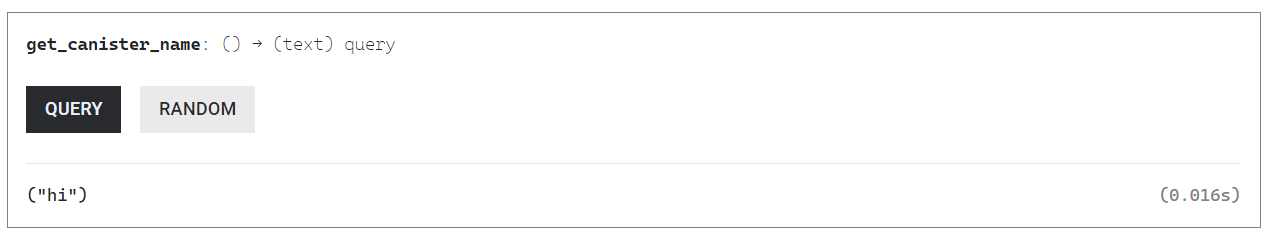

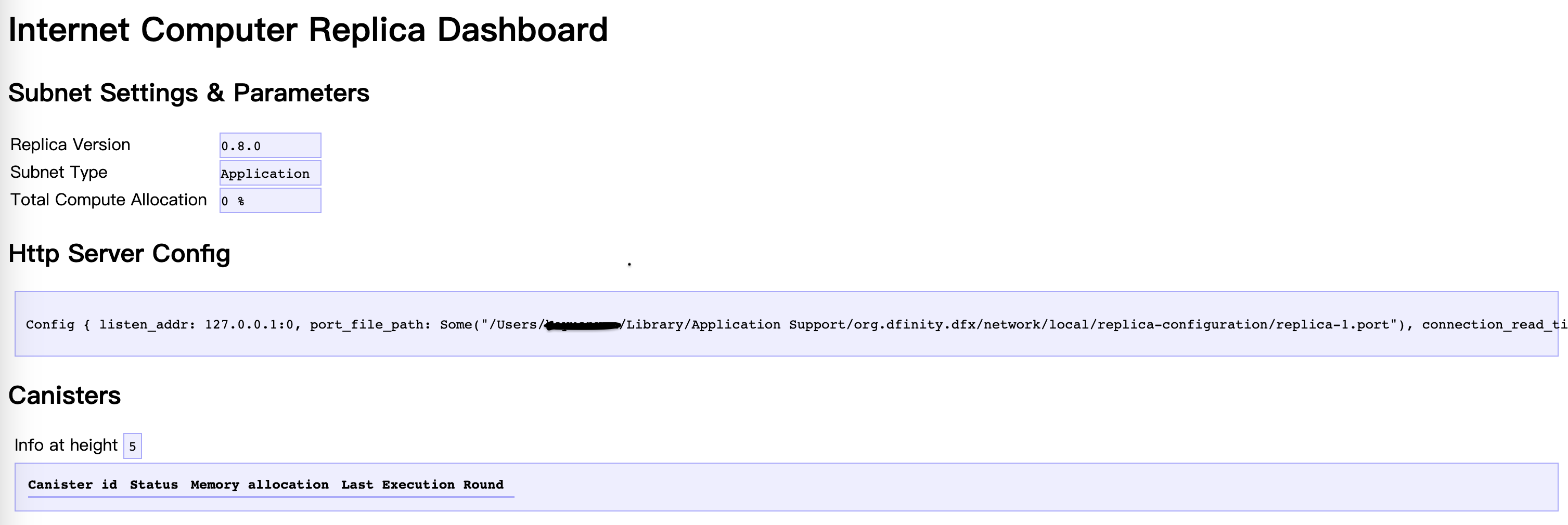

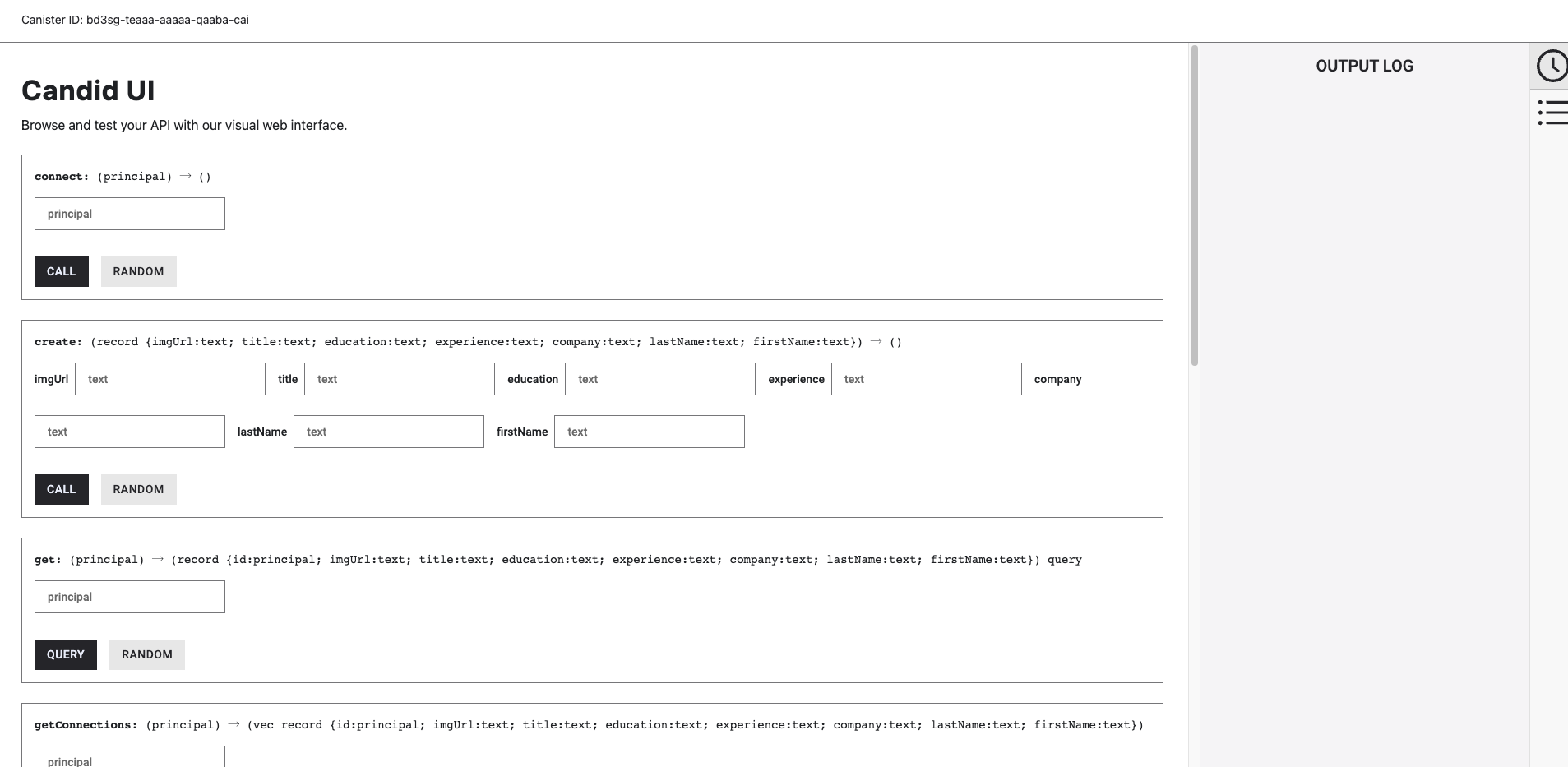

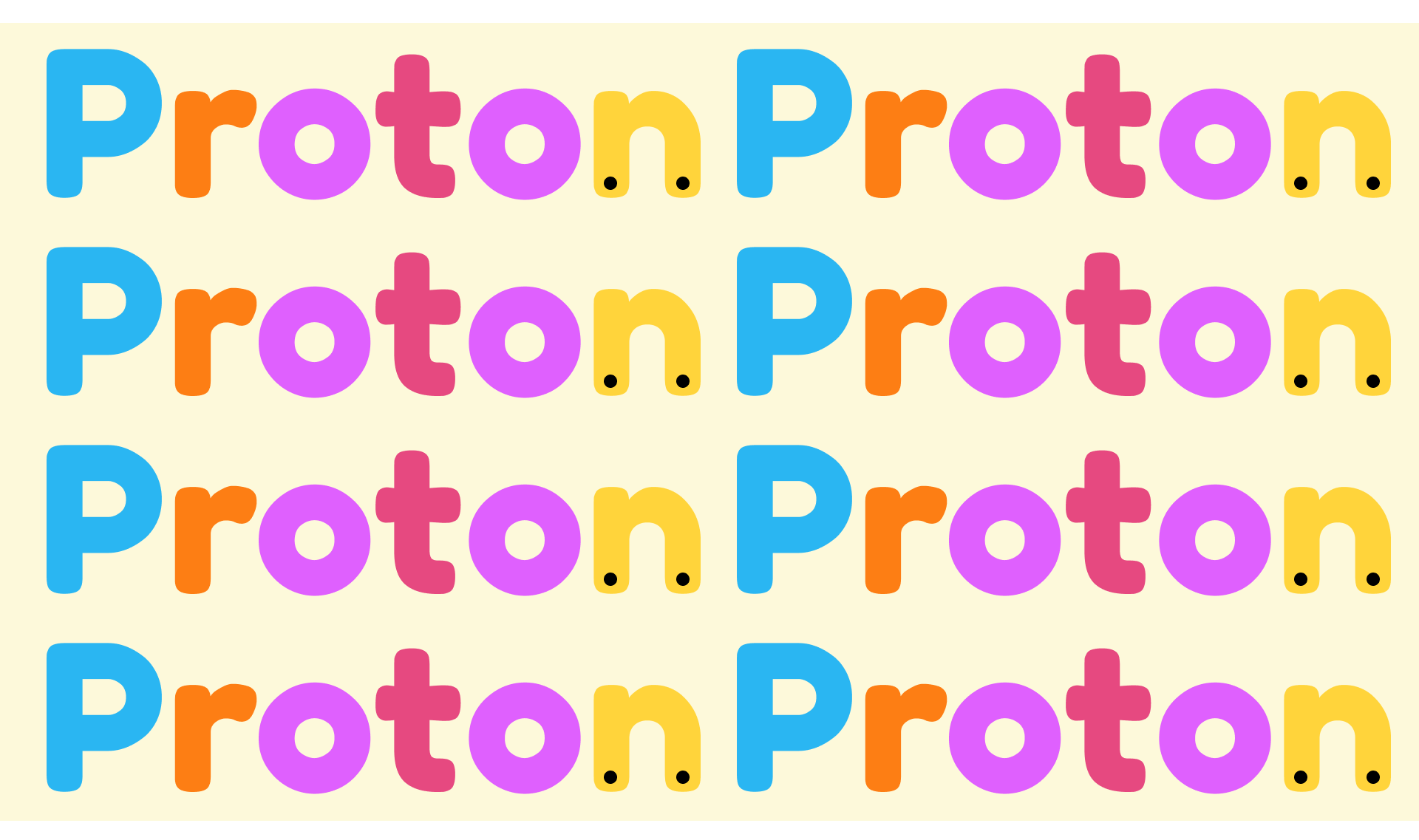

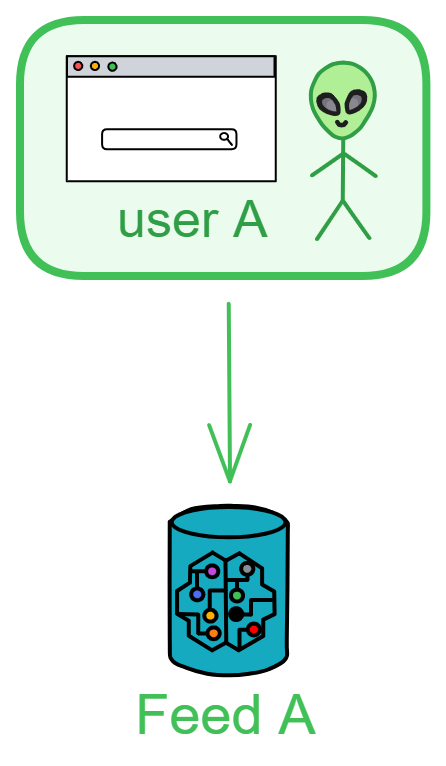

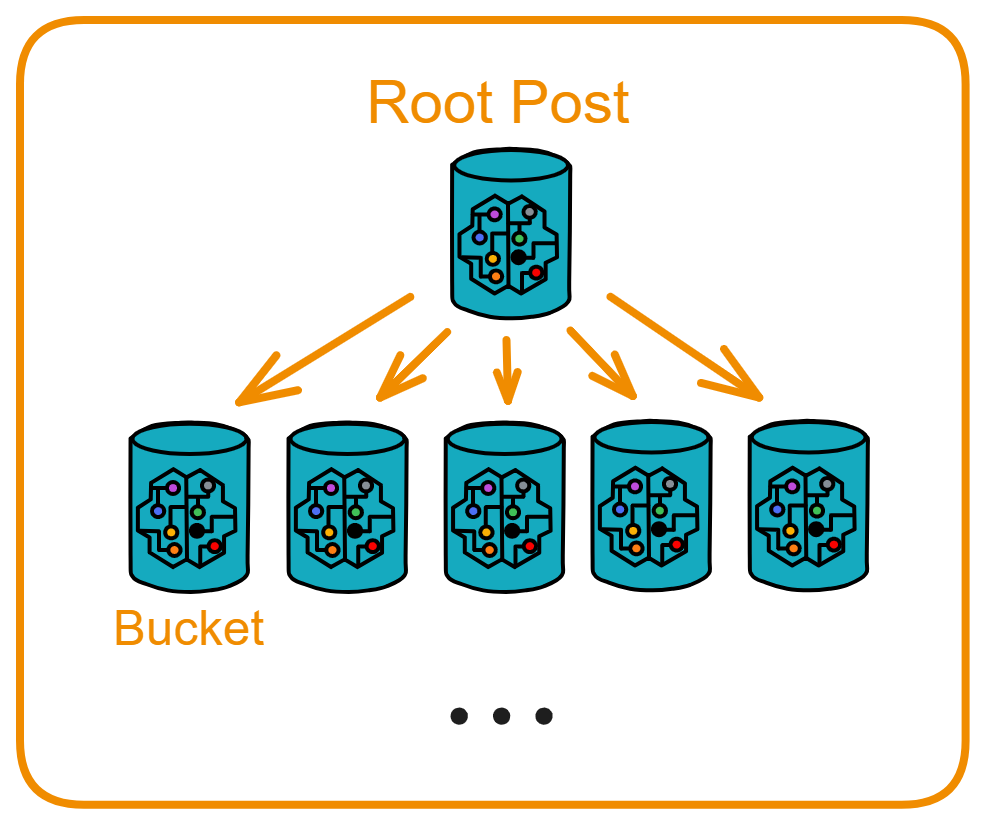

IC's goal is decentralized cloud services. In order to deploy Dapps fully on-chain, every Dapp is installed in a virtualized container. A "canister" on IC is the equivalent of a smart contract on Ethereum. Canisters can store data and deploy code. Developers can also test through the Candid UI, which is generated automatically for the backend container, without writing a line of code. Clients can directly access the frontend pages and smart contracts deployed on IC over HTTPS. The virtual containers are like small servers, providing each Dapp with its own on-chain storage space. They also let smart contracts call external HTTPS servers directly, without an oracle. This is the first time in the history of blockchain that smart contracts can communicate directly with external HTTPS servers. After further processing the messages, the smart contracts can respond. Like Ethereum and Bitcoin, IC also accepts the paradigm of "code is law". This also means that there is no governance to regulate the use of the platform or the underlying network itself. IC's "smart contracts", the canisters, are not immutable: they can store data and have their code updated.

For the first time in history, a blockchain integrates with chains such as Bitcoin and Ethereum at the bottom layer through cryptography, not through cross-chain bridges: IC is directly integrated with Bitcoin at the protocol level. Canisters on IC can directly receive, hold and send Bitcoin on the Bitcoin network. In other words, canisters can hold Bitcoin just like a user's wallet. Canisters can securely hold and use ECDSA keys through the Threshold ECDSA Chain Key signing protocol. It is equivalent to giving Bitcoin smart contract functionality!

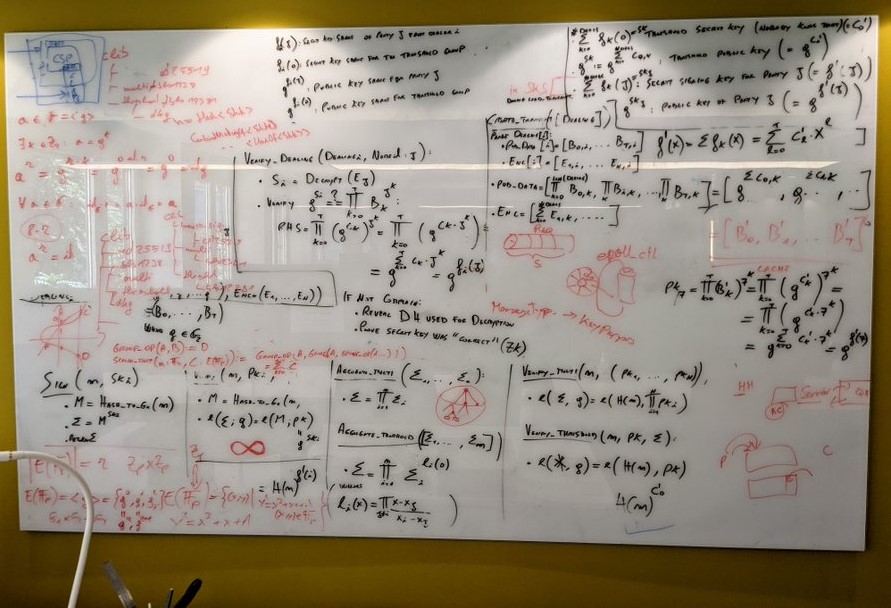

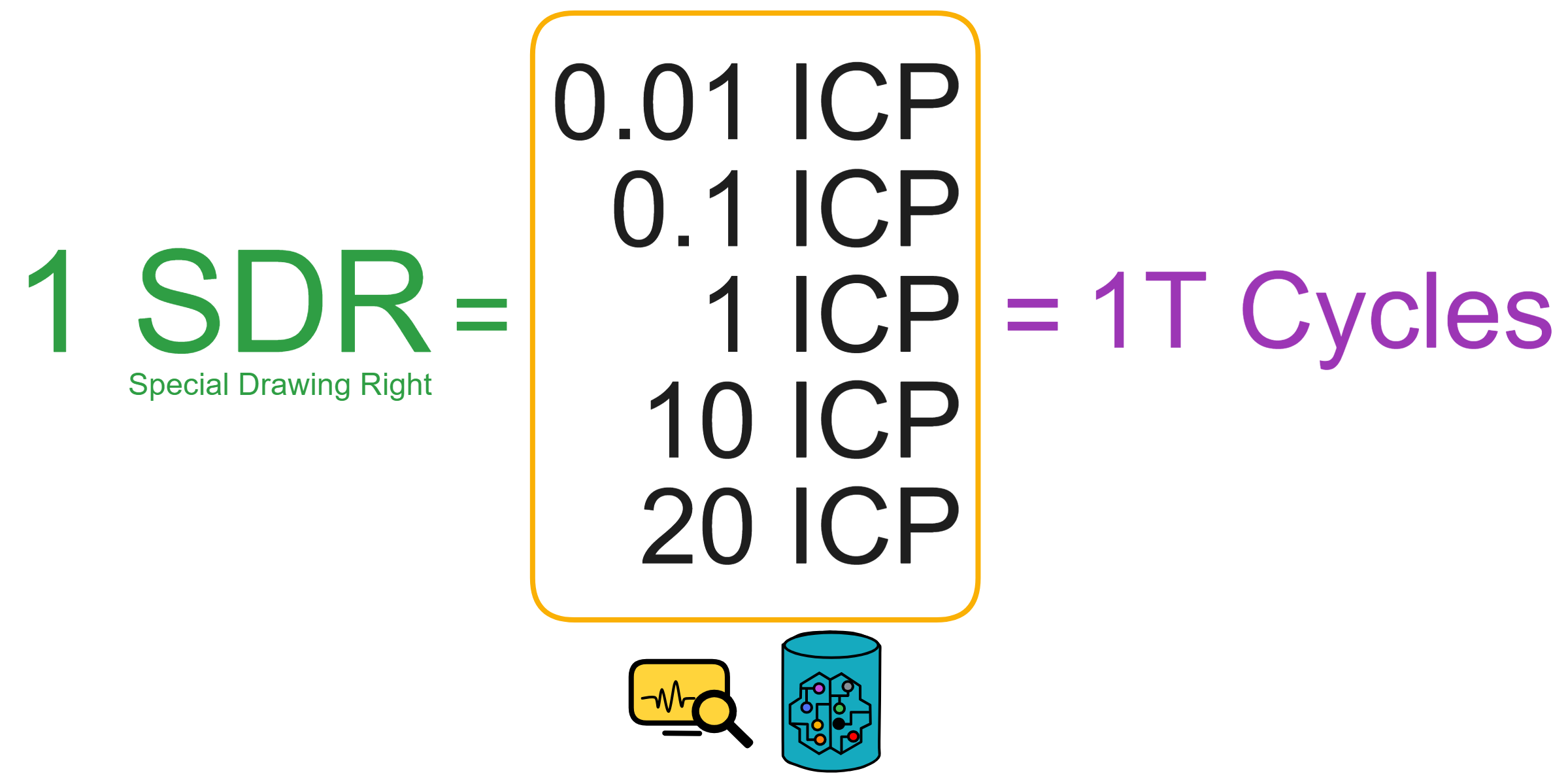

The whiteboard in the Zurich office, used to work out the Bitcoin integration.

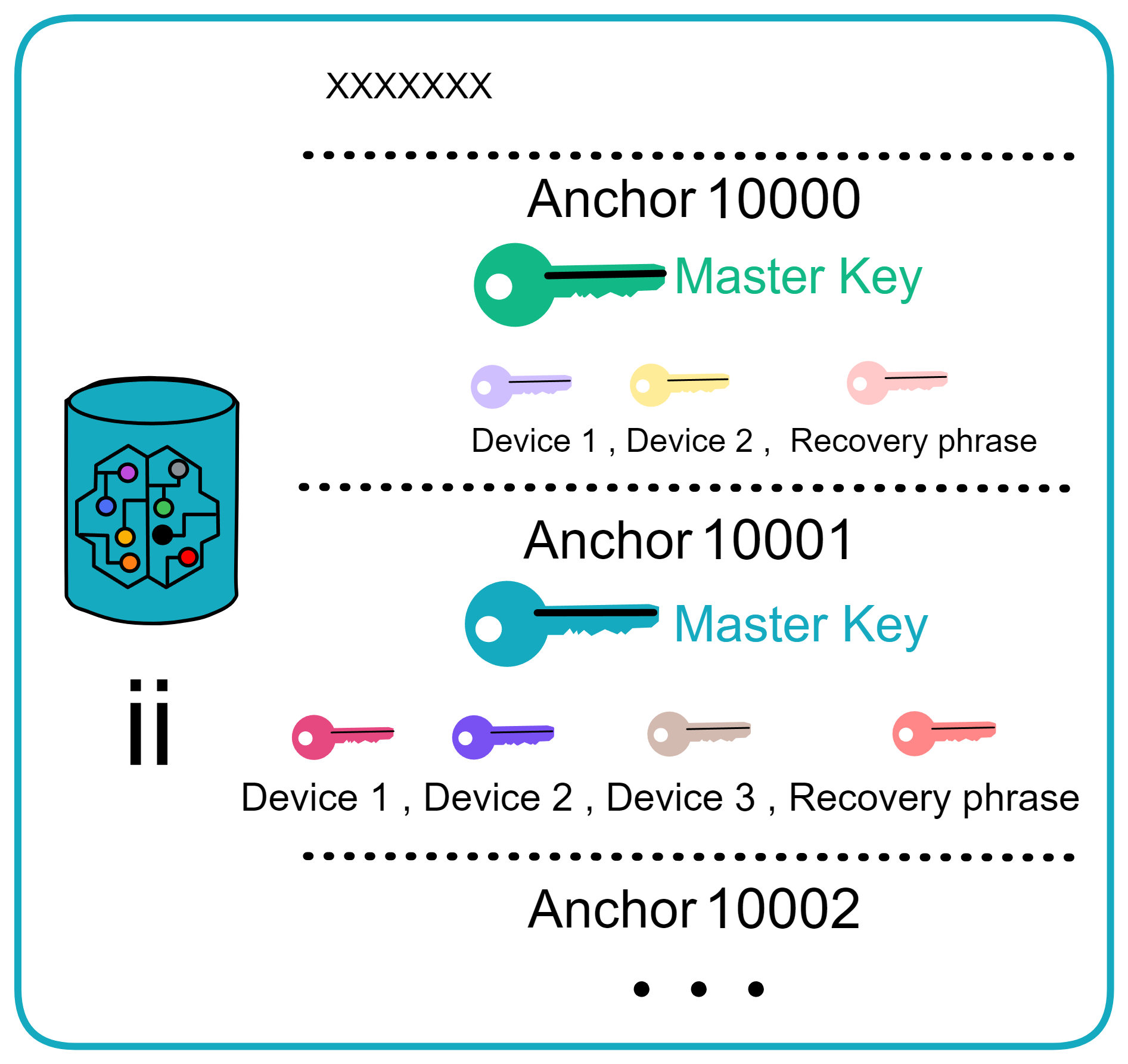

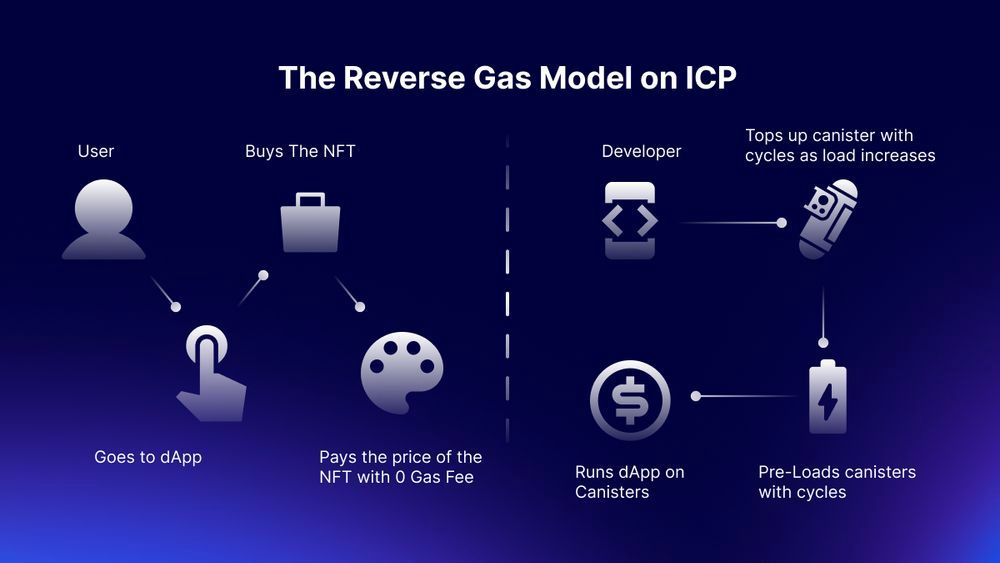

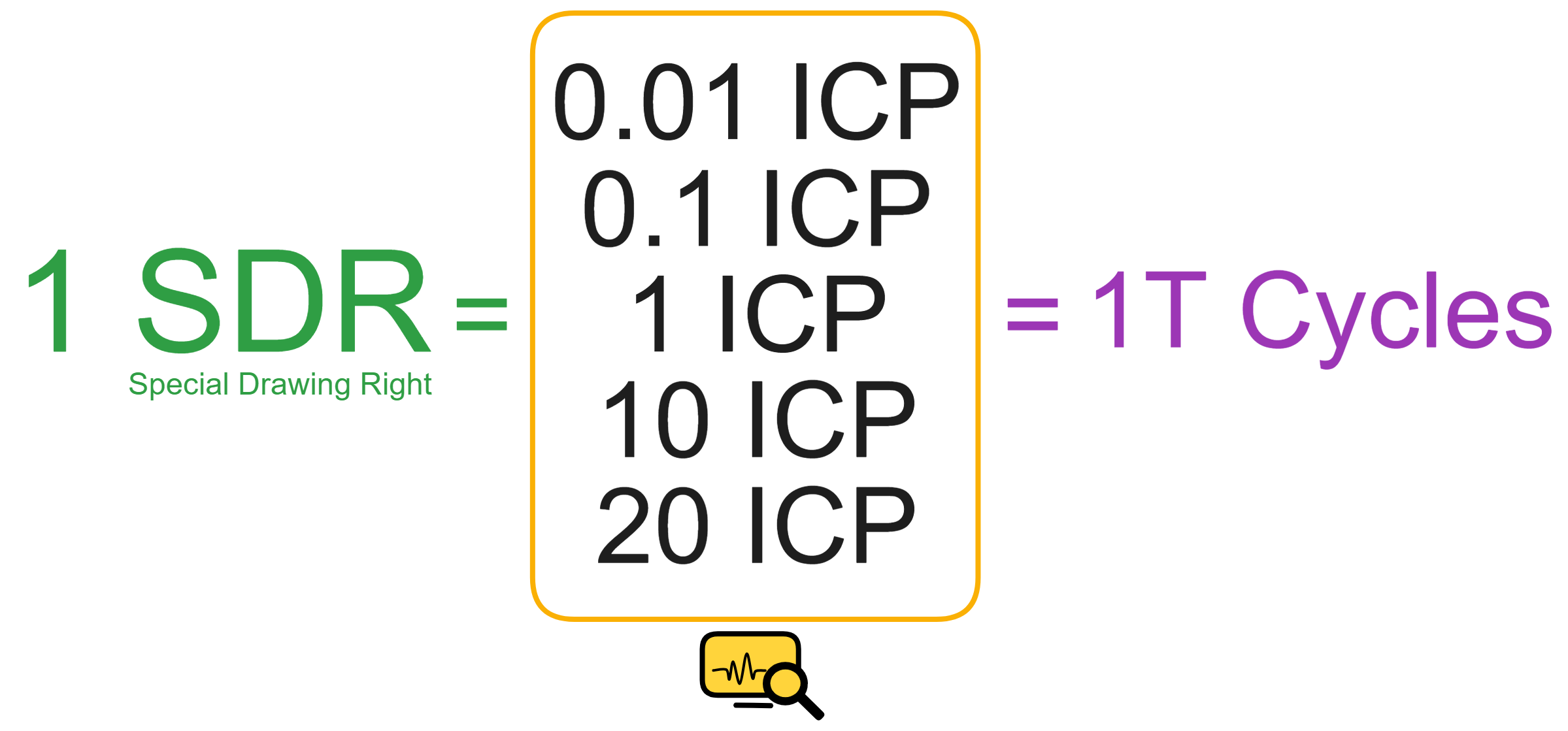

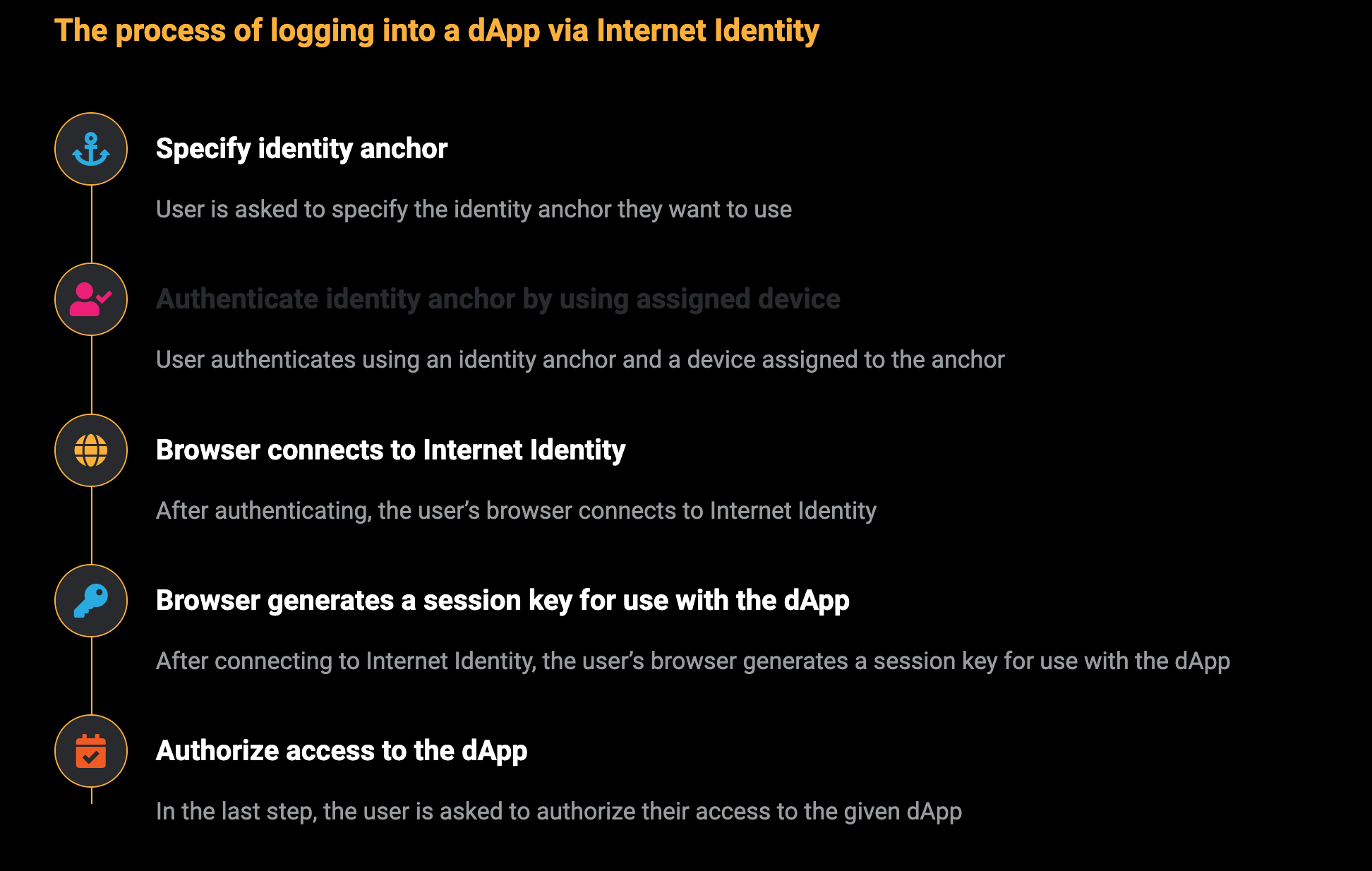

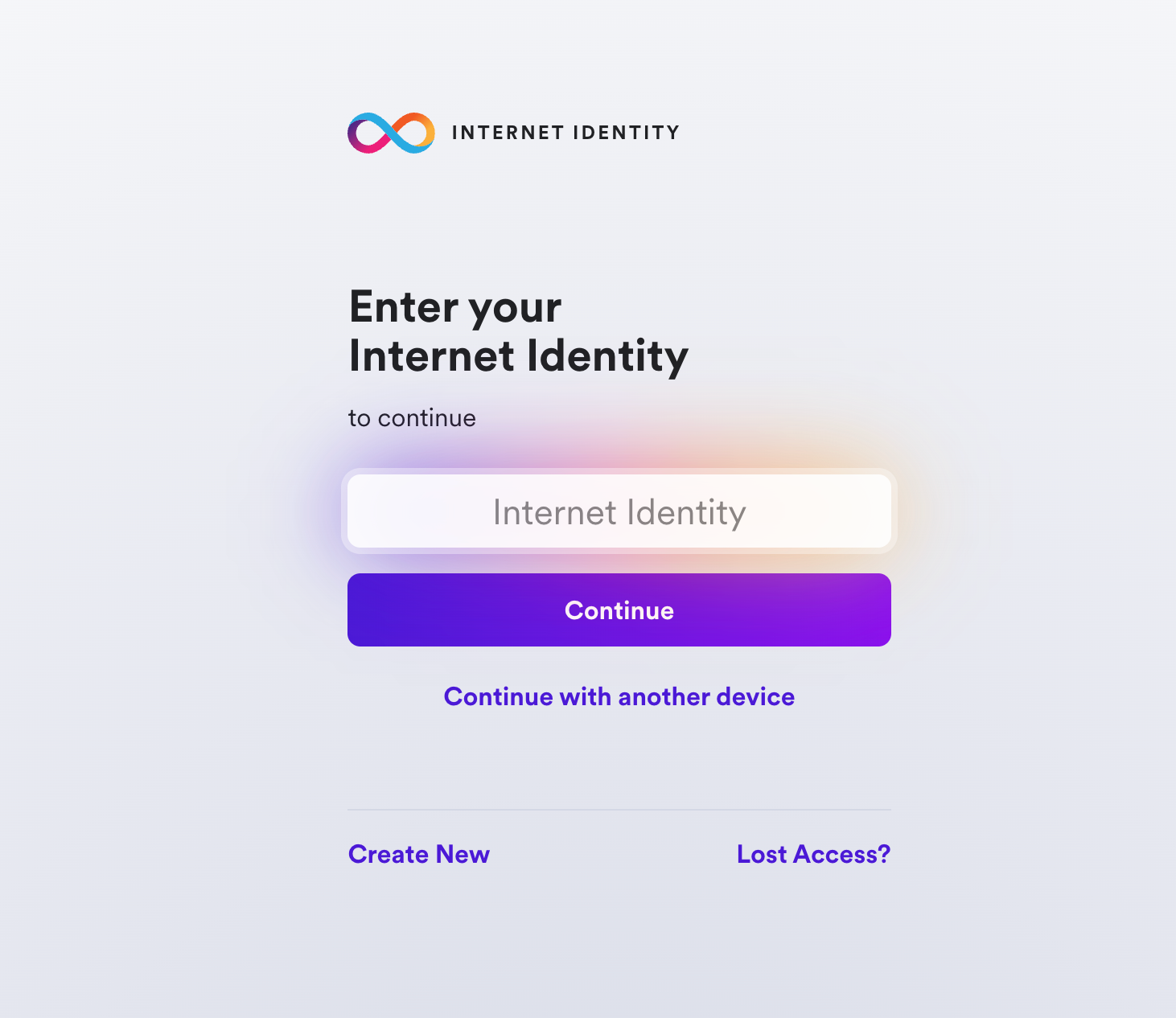

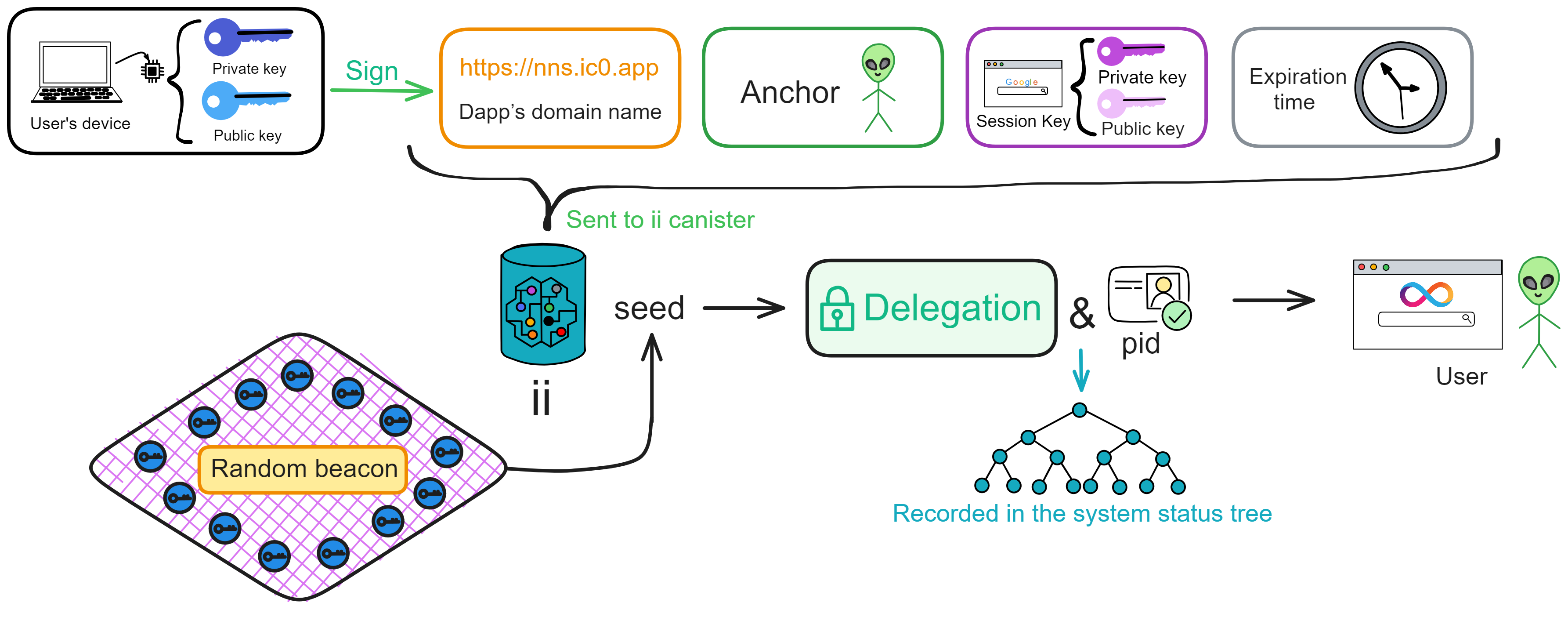

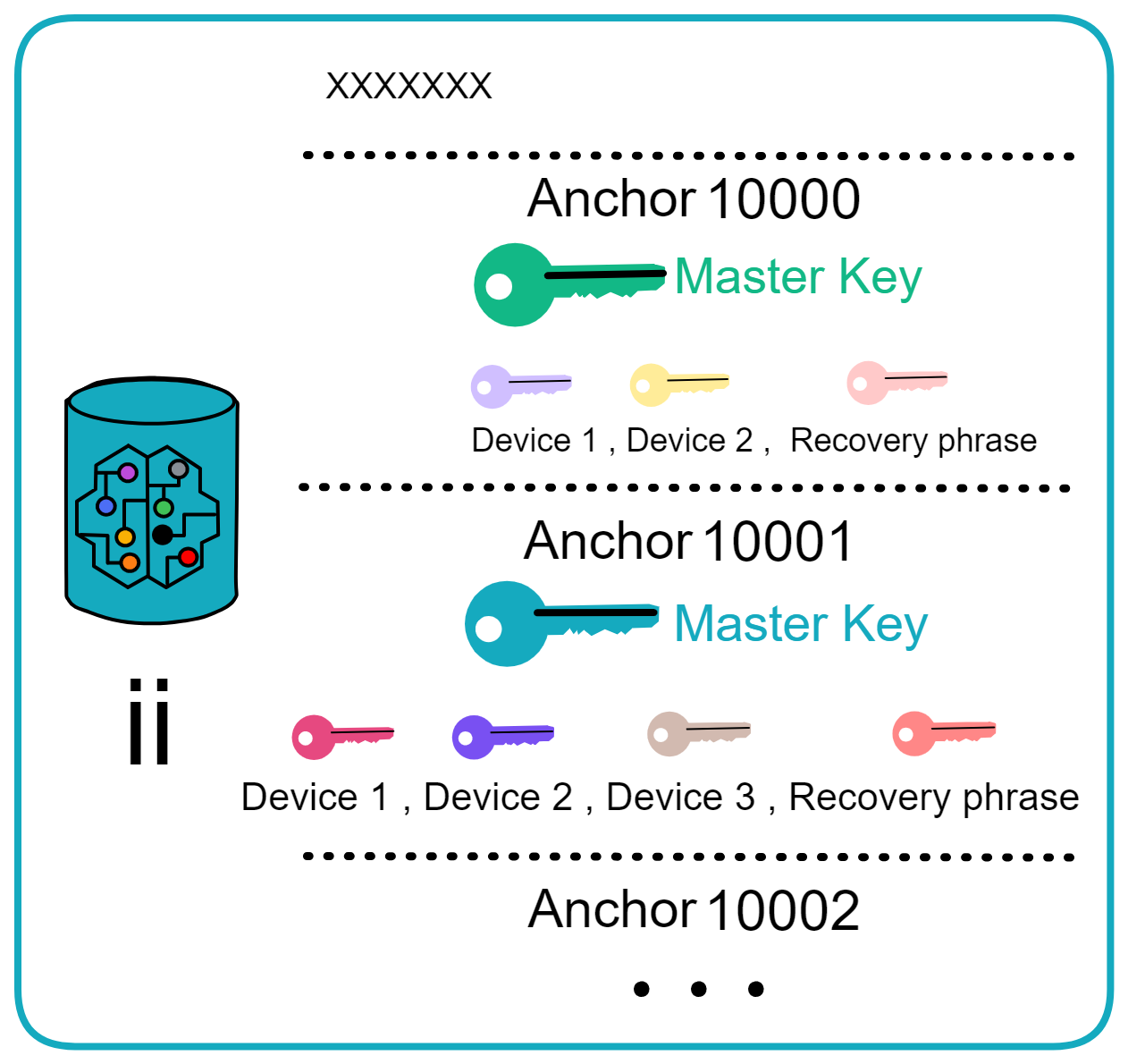

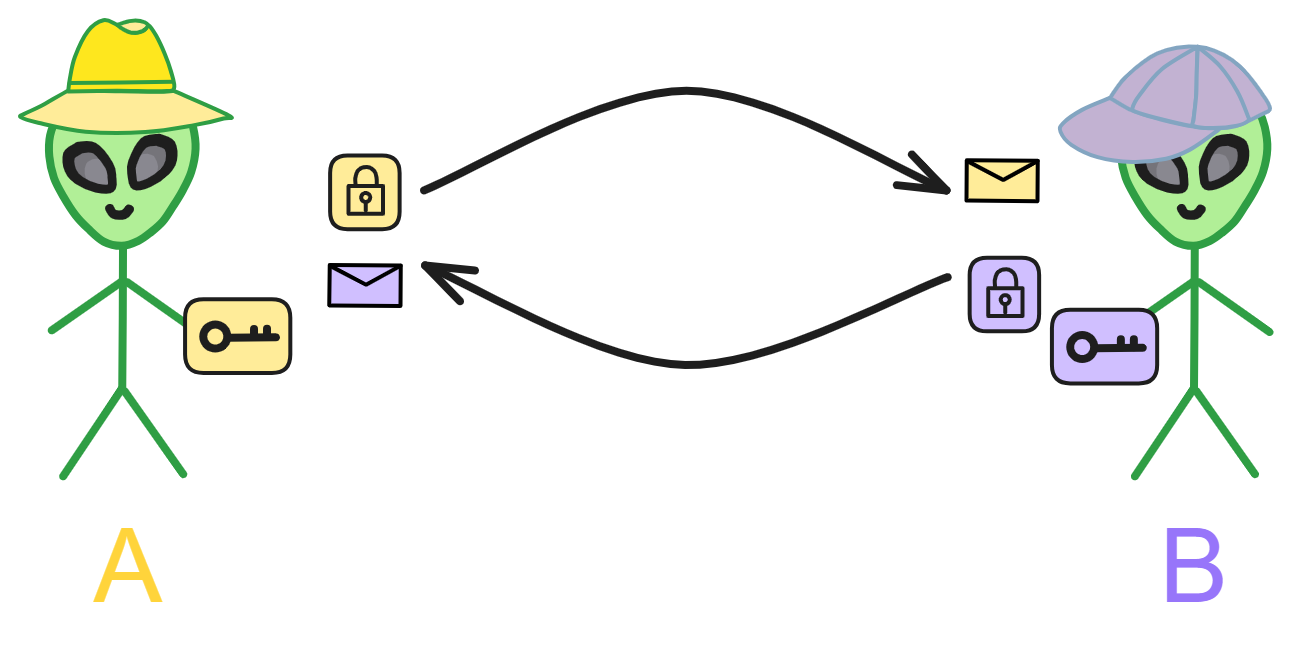

Since the data lives on-chain, the Gas fee has to be very low for people to use it: storing 1 GB for one year costs about $5! Low Gas alone is not enough. So that users can use Dapps without barriers, IC uses a reverse Gas model in which the Gas is paid by the development team. The DFINITY team also pegged Gas to the SDR, turning it into stable Gas that does not fluctuate with the coin price. IC has a unified, decentralized, anonymous identity, Internet Identity (II), which serves as the login for Dapps and for joining the Network Nervous System to participate in governance...
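As a rough illustration of the two ideas above, here is a small Python sketch. The storage price is the $5 per GB per year quoted in the text. Everything else, including the `Canister` class, its `gas_balance` field and the `handle_call` method, is a hypothetical toy model and not the real IC API. The point is only that the canister's prepaid balance is charged, not the user's wallet.

```python
STORAGE_USD_PER_GB_YEAR = 5.0  # figure quoted in the text above


def storage_cost_usd(gigabytes: float, years: float) -> float:
    """Estimated storage cost at a flat price per GB per year."""
    return gigabytes * years * STORAGE_USD_PER_GB_YEAR


class Canister:
    """Toy canister with a prepaid gas balance (hypothetical model)."""

    def __init__(self, gas_balance: int):
        self.gas_balance = gas_balance

    def handle_call(self, gas_cost: int) -> bool:
        # The canister, not the caller, is charged for the call.
        if gas_cost > self.gas_balance:
            return False  # out of gas: the developer must top up
        self.gas_balance -= gas_cost
        return True


app = Canister(gas_balance=1_000)  # topped up by the development team
user_wallet = 0                    # the user holds no tokens at all
served = app.handle_call(gas_cost=300)
```

Here the user's call is served even though `user_wallet` is zero, and the canister's balance drops from 1,000 to 700. Under this model, 2 GB stored for 3 years would cost `storage_cost_usd(2, 3)`, which is 30 dollars.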

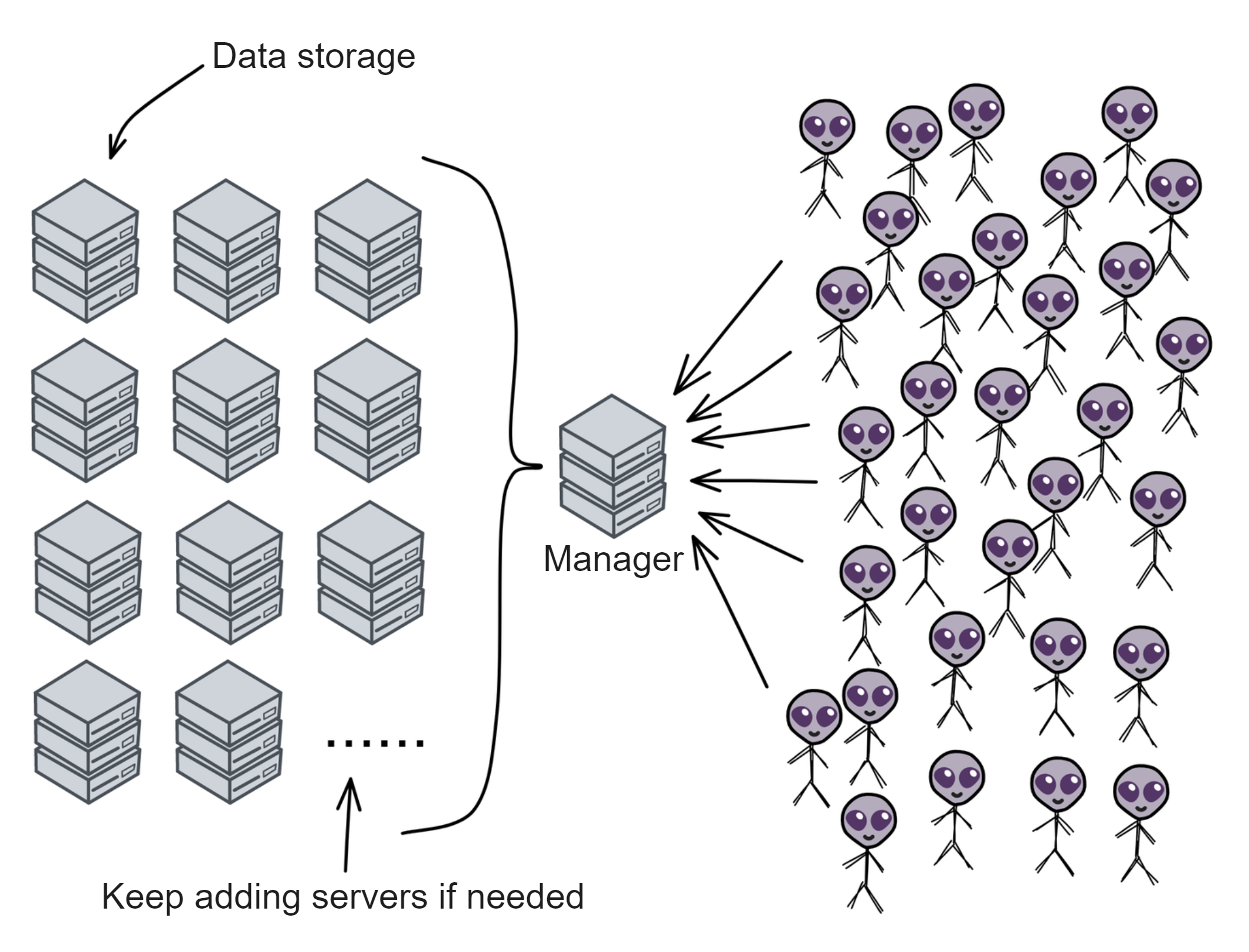

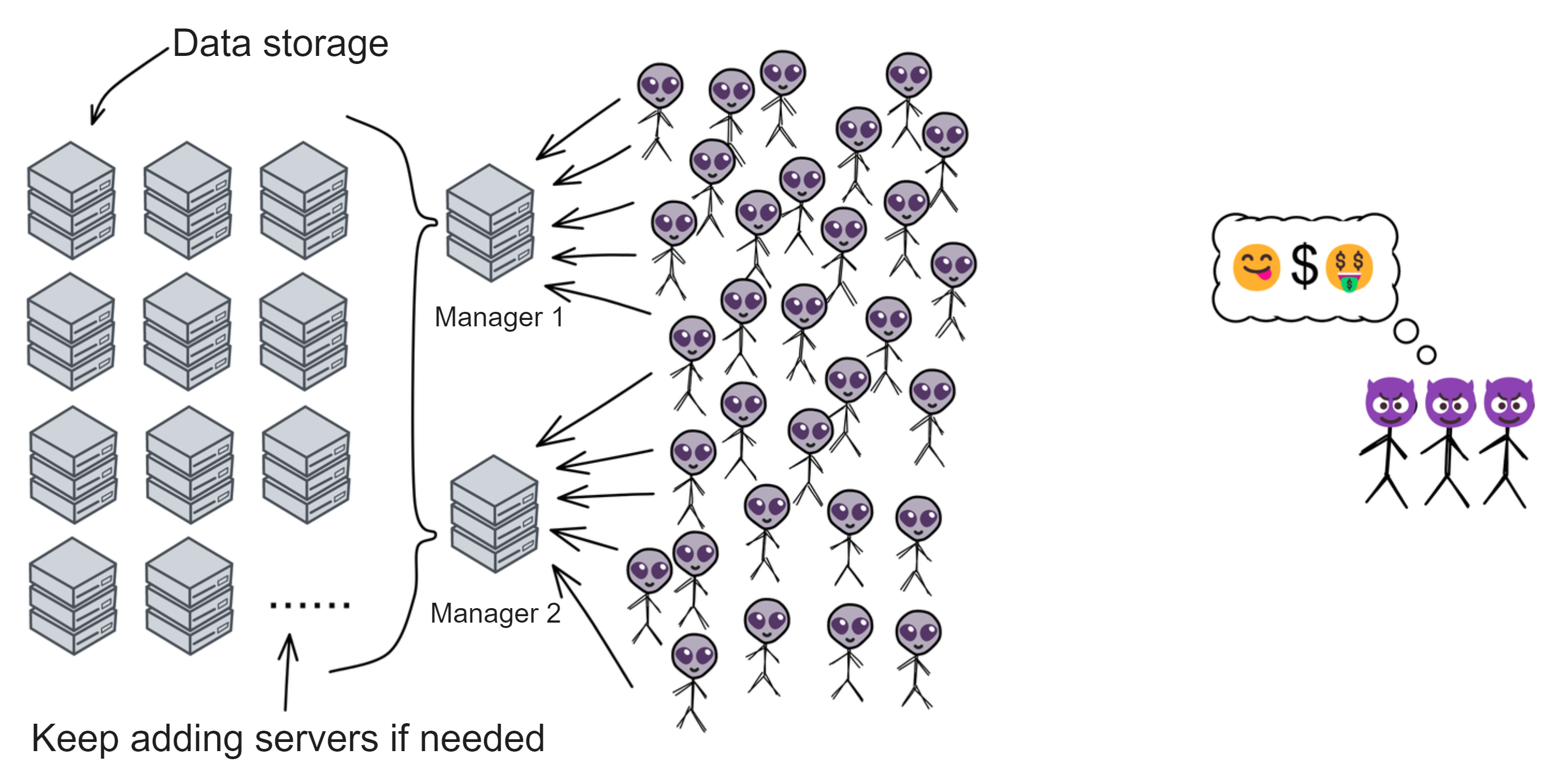

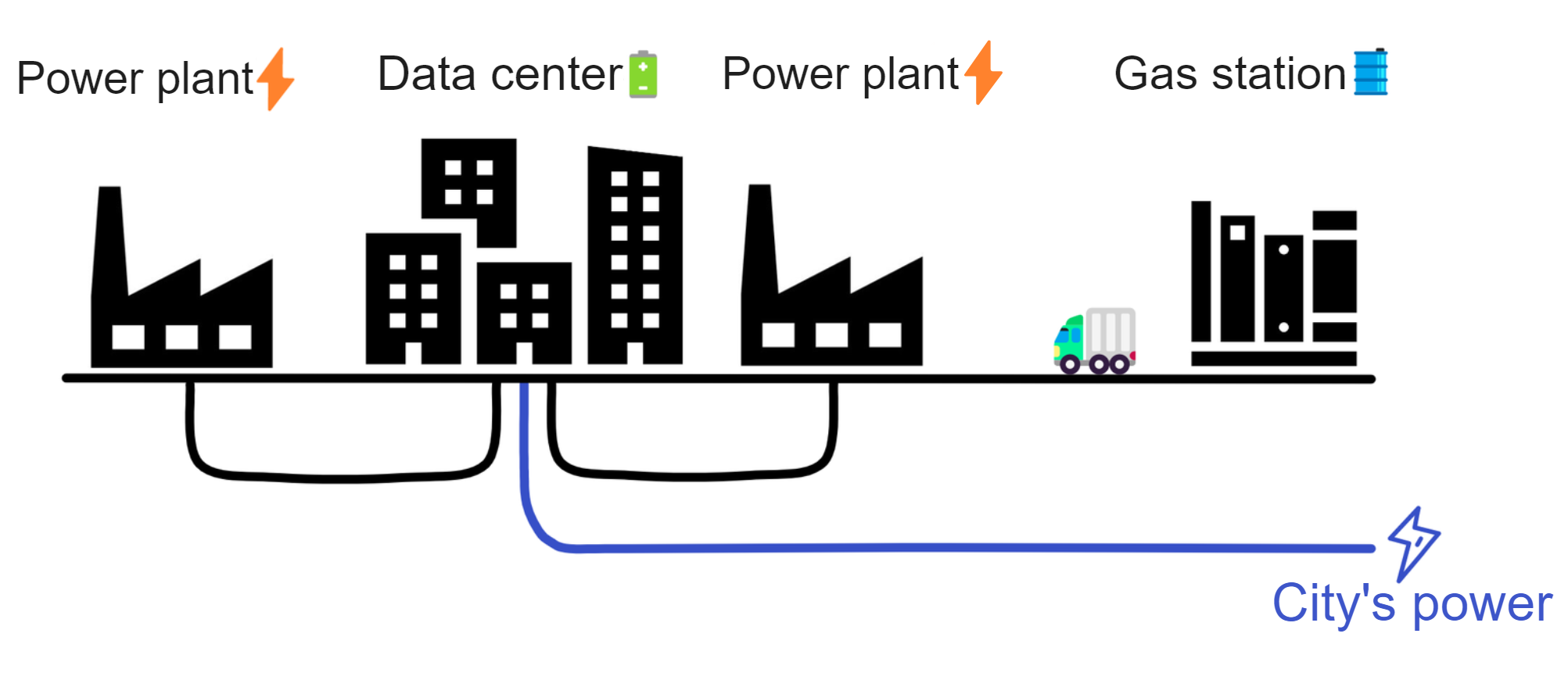

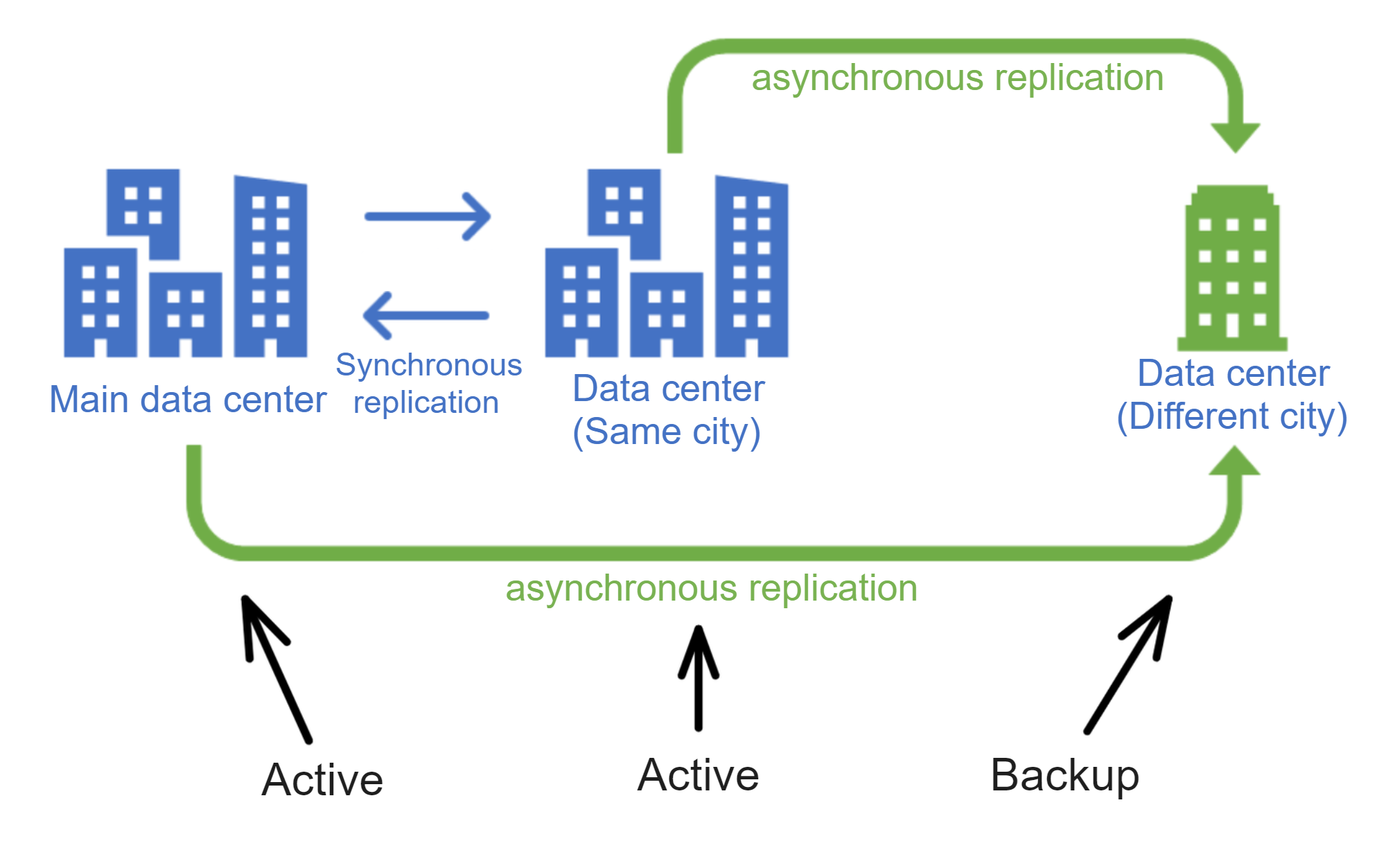

The IC architecture and consensus are also unique. In theory, IC has unlimited computation and storage - just keep adding server nodes. The improved consensus is a bit like practical Byzantine fault tolerance, yet more complex, because it's quite different from existing consensuses. Dominic gave it the name "PoUW" consensus, for Proof of Useful Work. BLS threshold signatures with VRF produce truly unpredictable random numbers, and everyone can verify the randomness is not forged. Sybil attack-resistant edge nodes, hierarchical architecture, randomly assigned block production - no need to elaborate, just one word: exquisite.
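The "threshold" part of threshold signatures can be hard to picture, so here is a small Python sketch of the underlying idea. Note the swap: this is Shamir secret sharing over a prime field, not BLS and not IC's actual protocol. It only shows the property that any `threshold` out of `shares` participants can jointly reconstruct something that fewer participants cannot. The function names and the modulus are choices made for this illustration.

```python
import random

PRIME = 2**127 - 1  # a Mersenne prime, used as the field modulus


def split_secret(secret: int, threshold: int, shares: int, rng: random.Random) -> list:
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 with the secret as constant term.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    points = []
    for x in range(1, shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % PRIME
        points.append((x, y))
    return points


def recover_secret(points: list) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


secret = 123456789
parts = split_secret(secret, threshold=3, shares=5, rng=random.Random(42))
from_first_three = recover_secret(parts[:3])
from_last_three = recover_secret(parts[2:])
from_only_two = recover_secret(parts[:2])
```

Any three of the five parts give back the secret, while two parts interpolate to an unrelated value. In a threshold signature scheme the same kind of sharing is applied to a signing key, so no single node ever holds the whole key.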

According to statistics from GitHub and Electric Capital (2023), IC has the most active developer community and is still growing rapidly.

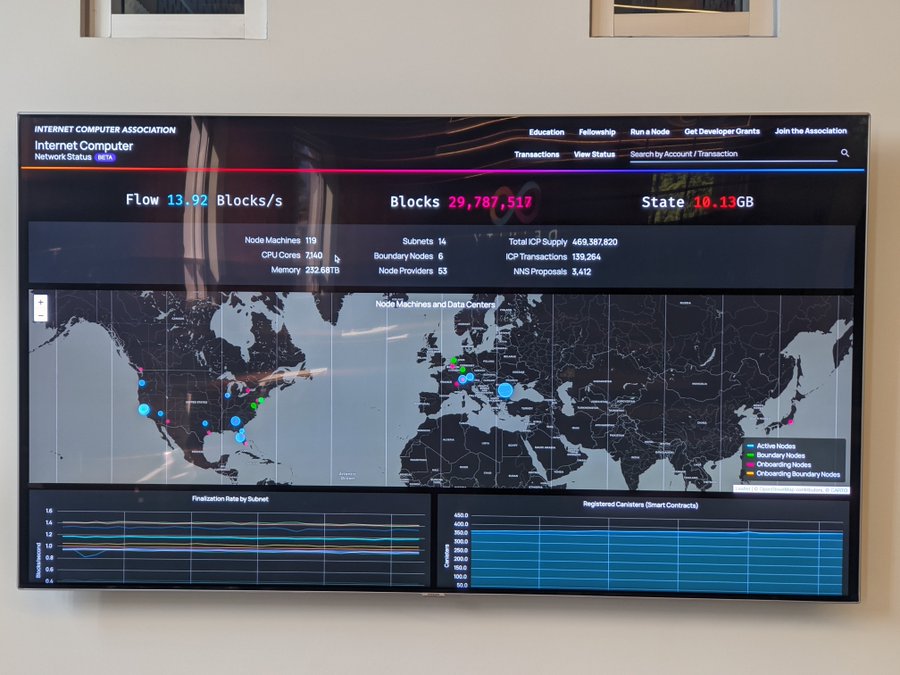

The office wall as IC was about to reach 30 million blocks, three weeks after the mainnet launch.

During the COVID-19 pandemic, many new DFINITY members interviewed and joined the team over video calls without ever meeting in person. On this day in July 2021, a small group came to the Zurich office to meet face-to-face.

Dominic has written two visions for DFINITY in his blog:

On the one hand, many traditional monopolistic technology intermediaries, such as Uber, eBay, social networks, instant messaging, and even search engines, may be redesigned as "open-source enterprises," using autonomous software and their own decentralized governance systems to update themselves.

On the other hand, we hope to see large-scale redesign of enterprise IT systems to take advantage of the special properties offered by blockchain computers and dramatically reduce costs. The last point is not obvious, as computing on blockchain computers is much more expensive than traditional cloud computing such as Amazon Web Services. However, significant cost savings are possible because the vast majority of costs involved in running enterprise IT systems come from supporting human capital, not computing itself, and IC will make it possible to create systems with much less human capital involvement.

Image from Shanghai, October 2021.

On July 14, 2022, at a street café in Zurich, Dominic and his team were waiting for the 1,000,000,000th block of IC to be mined, while checking the real-time statistics of IC data on the dashboard.

DFINITY's new office building is located in Switzerland.

When leaving the office, Dominic took some photos of murals on the cafeteria wall that were created by talented IC NFT artists.

Dominic moved into his new house, and shared the good news on Twitter while enjoying a piece of cake.

After work, one must not neglect their musical pursuits.

After talking for so long, what problems does IC actually solve? In general, it addresses three problems of traditional blockchains: low TPS, poor scalability, and Dapps that still rely on centralized services.

Bitcoin is a decentralized ledger. A chain is a network.

Ethereum created decentralized computing. There are also side chains, cross-chain interactions.

Cosmos, Polkadot achieved composability and scalability of blockchains. In the multi-chain era, many blockchain networks come together, with interactions between chains. And these chains have organizational protocols that can scale indefinitely.

The Internet Computer built a decentralized cloud service that can auto-scale elastically with ultra high TPS. It's a brand new system and architecture, redesigned from bottom to top.

The IC is designed to be decentralized at the base layer, which means deployed websites, smart contracts cannot be forcibly shut down by others.

Applications deployed on top can be controlled by users themselves, storing their private data. They can also choose to be controlled through a DAO, becoming fully decentralized Dapps, with community governance.

IC's smart contracts are Wasm containers, like small servers in the cloud. They are very capable and can directly provide computing, storage, web hosting, HTTP queries (oracles), WebSocket and other services.

The key to scalability is near-zero marginal cost. Polkadot's scalability is built on software engineers' development, while IC's scalability is automatically completed at the lower level. This greatly reduces the development cost of application teams on IC.

To build a highly scalable and high-performance public chain:

- First, scalability and performance must be valued in the planning stage, and the design and layout in all aspects are aimed at achieving scalability and TPS as soon as possible.

- Second, confidence and strength are needed to stick to one's own path until the day the ecosystem explodes. In the short term, we need to endure the suppression of other competitors, withstand the pressure of cash flow for a long time, and ignore the incomprehension of the world.

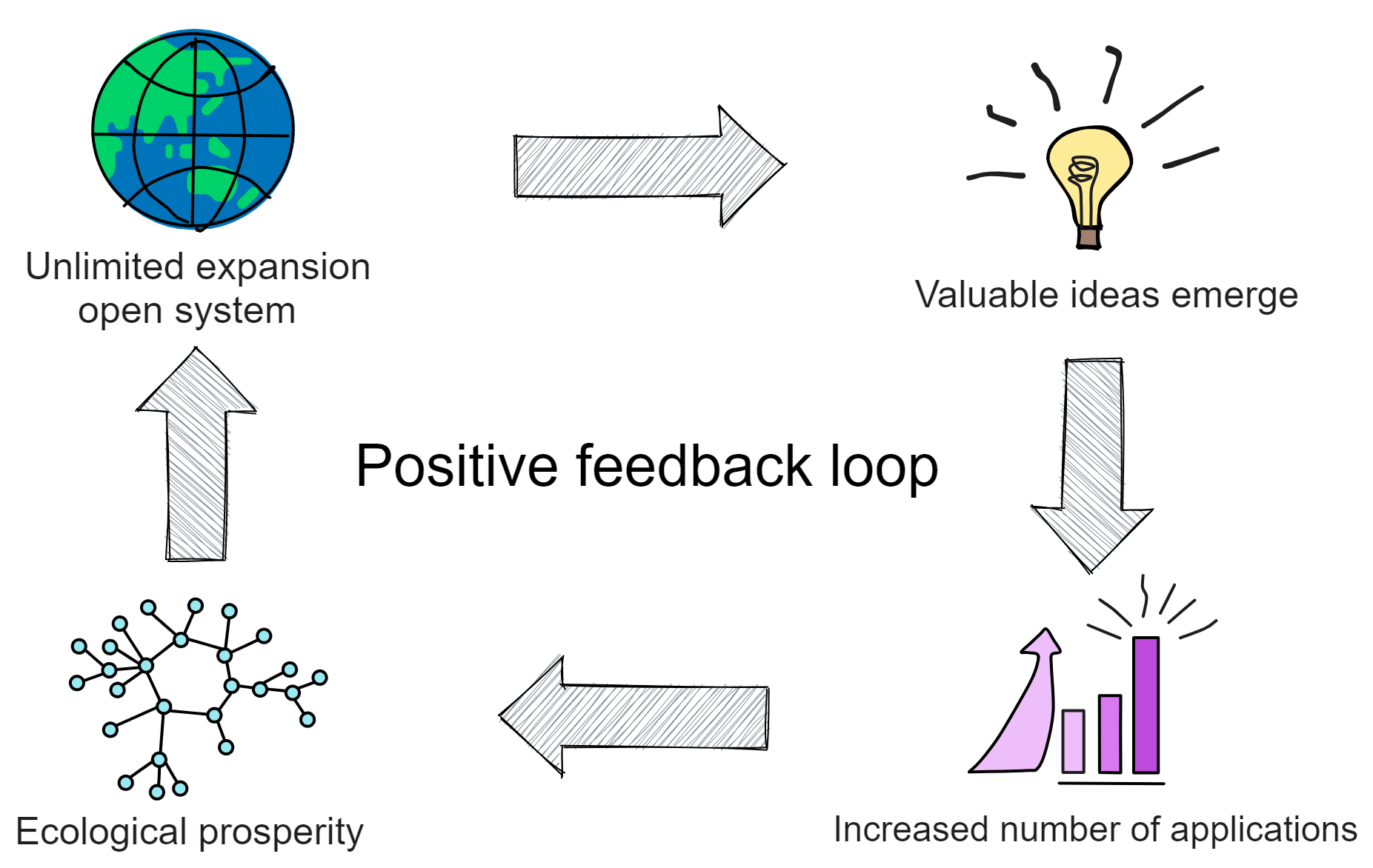

Focus on the research and development of underlying infrastructure until various creative applications appear and increase the number of participants in the ecosystem. The increase in quantity leads to the further emergence of new ideas and applications. This forms a positive feedback loop, making the ecosystem spontaneously more prosperous and more complex:

Scalability / Zero marginal cost / Open system → Increase in number of applications → Exponential increase in various connections → Valuable ideas emerge → Form applications → System complexity → Quantity continues to increase exponentially → Positive feedback loop → Ecosystem prosperity.

All technological development options have advantages and disadvantages. Judging who will eventually win based on the partial and one-sided technological advantages and disadvantages is naive and dangerous. The ultimate winner on the blockchain will be the one with the richest ecosystem, the largest number of developers, software applications, and end users.

The key words for the future of blockchain are: zero latency, zero marginal cost, open ecosystem, huge network effects, extremely low unit cost, and an extremely complex and rich ecosystem.

The huge changes in industries brought about by technological revolutions are sudden for most ordinary people. But behind this suddenness are years, even decades, of gradual evolution.

Once a few critical parameters affecting the industrial pattern cross the critical point, the ecosystem enters a period of great prosperity, and changes are extremely rapid. The profound impact on most people is completely unexpected. After the change ends, the industry enters a new long-term balance. For some time, almost no competitors can catch up with the leaders in the industry.

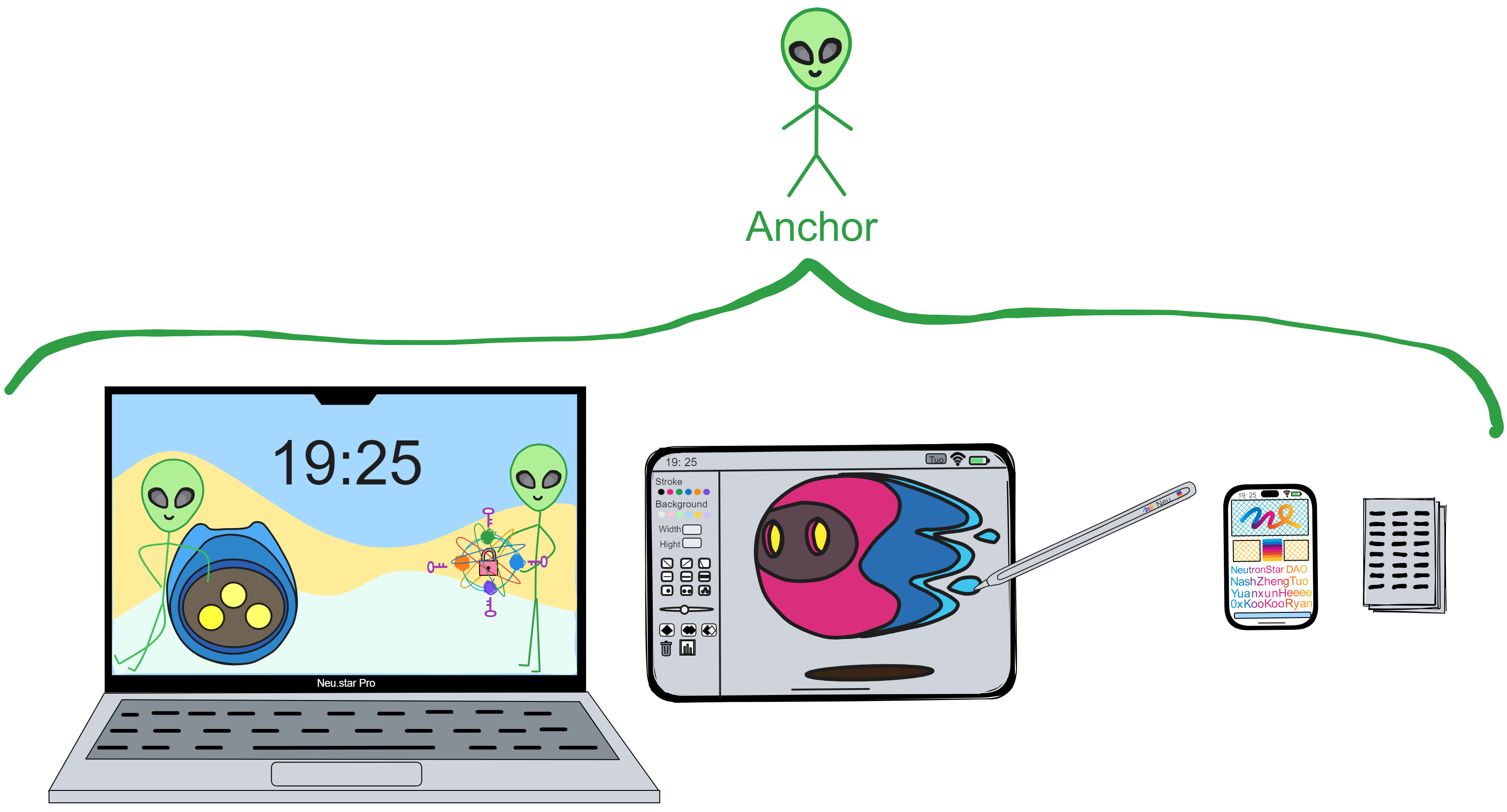

After 2 years of development, many excellent applications have emerged in the IC ecosystem. Their front ends and back ends are all on-chain: these are Dapps that do not rely on centralized services at all.

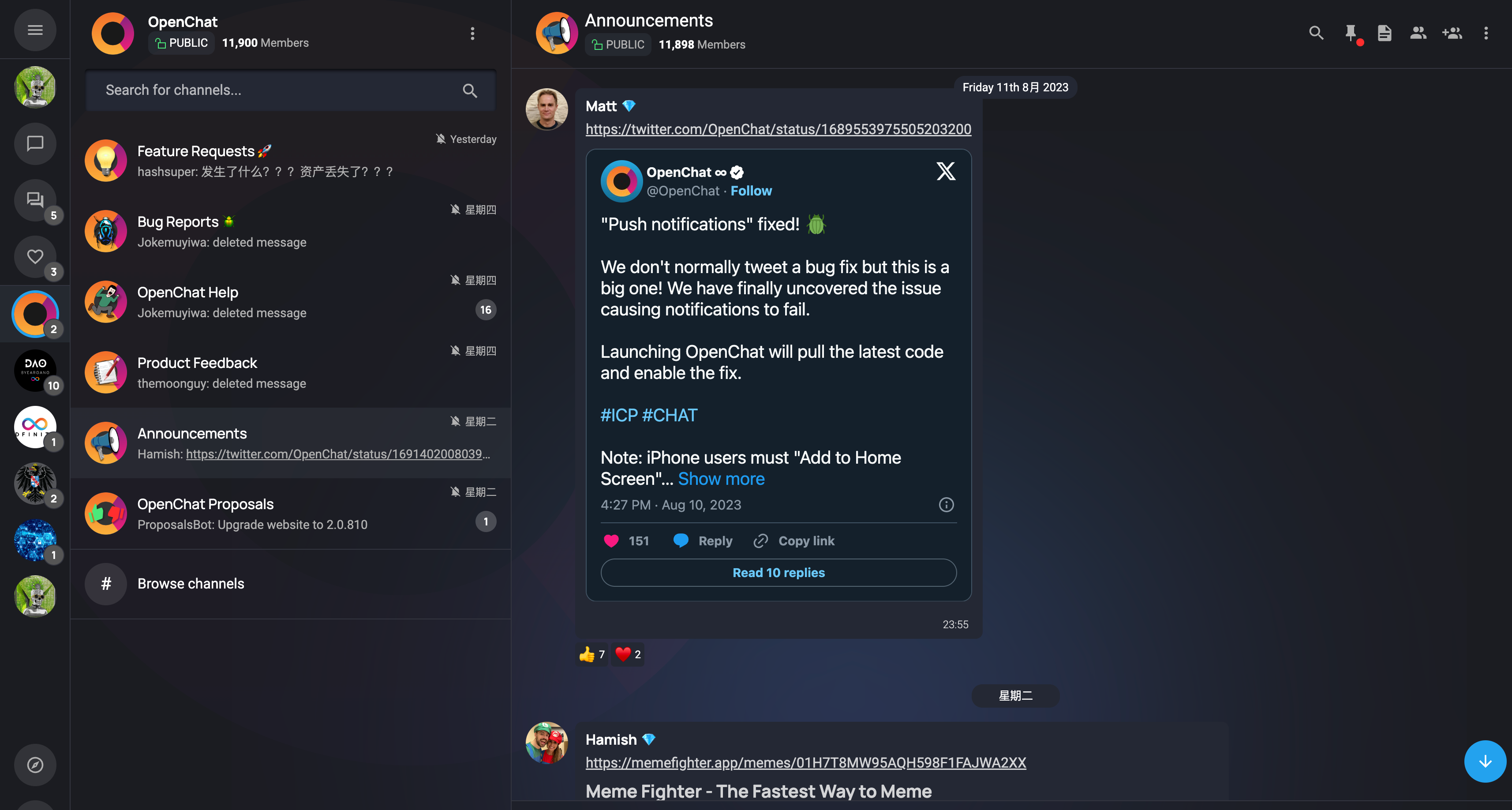

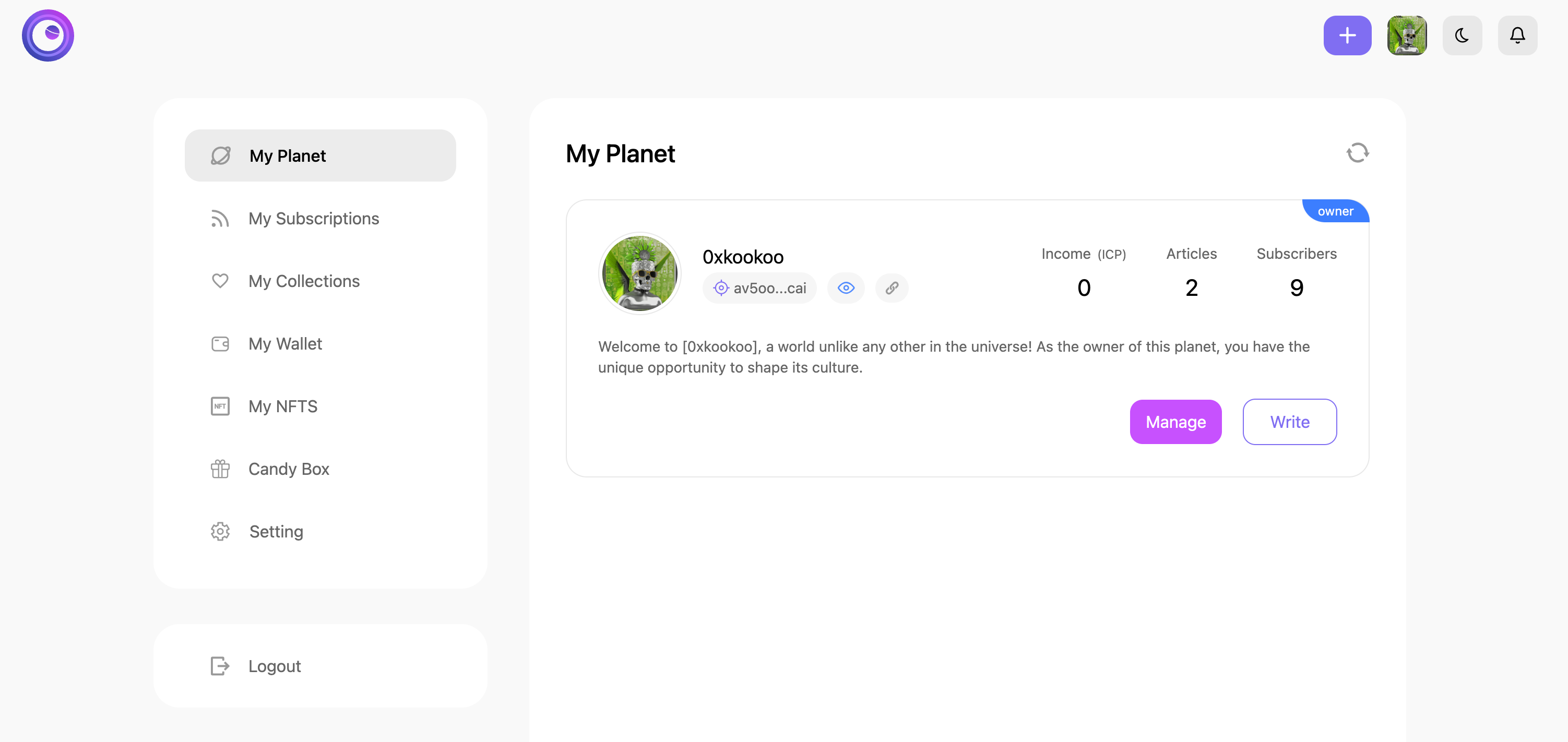

In the social Dapp (SocialFi) field, there are DSCVR, Distrikt, Mora, OpenChat, etc. DSCVR is an end-to-end decentralized Web3 social media platform. Distrikt is a Web3 microblogging platform that allows everyone to share content or participate in discussions in a decentralized network. Mora deploys a smart contract for each user, so users can publish blogs on the blockchain and permanently store their own data. Here is more about Mora. OpenChat is a decentralized chat Dapp that provides instant messaging services.

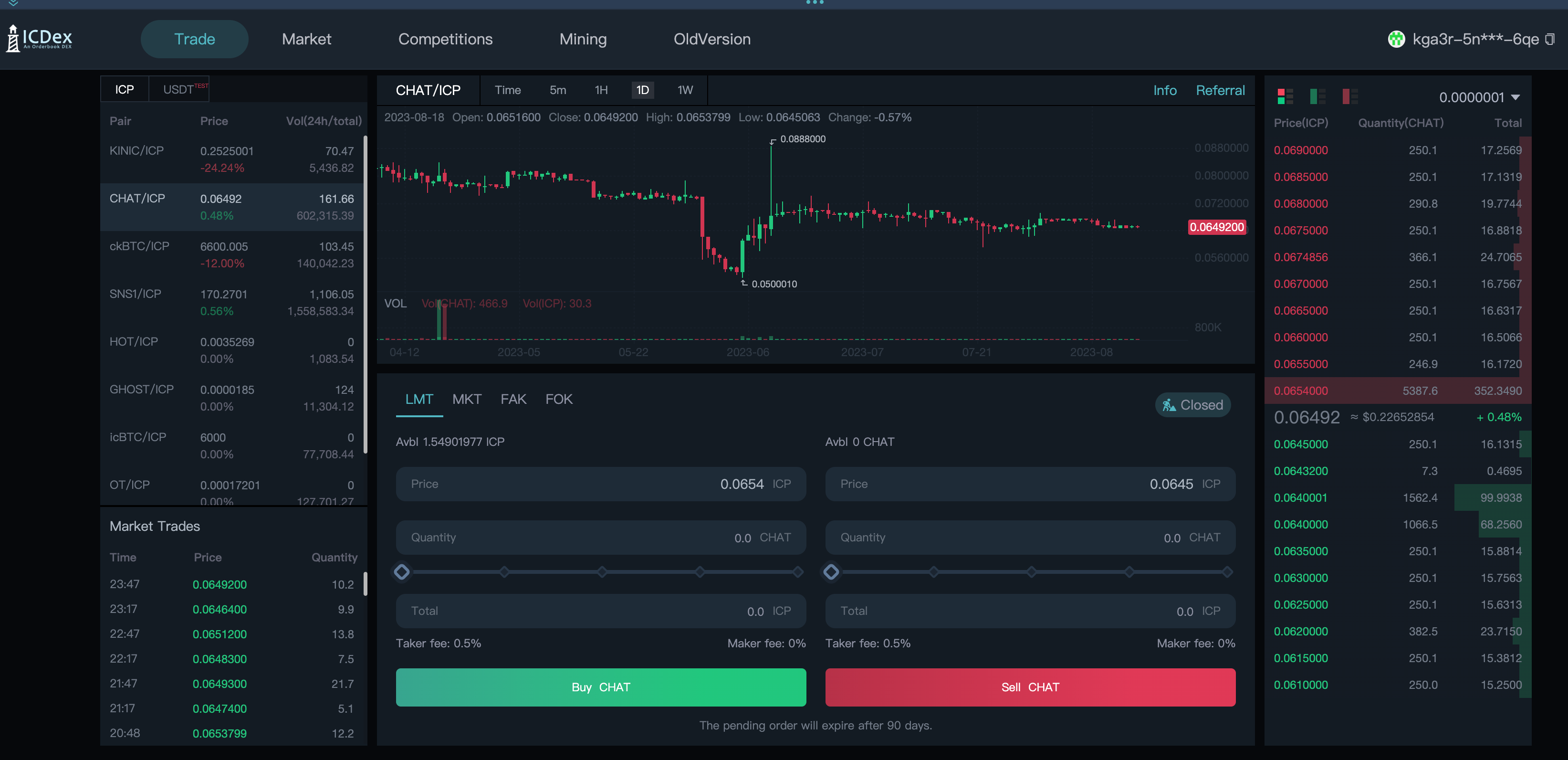

In the decentralized finance (DeFi) field, the IC ecosystem also has some very good Dapps: ICLightHouse, InfinitySwap and ICPSwap. 2022 was a year in which trust in centralized institutions collapsed. 3AC, Celsius, Voyager Digital, BlockFi, Babel Finance, FTX and other leading hedge funds, lending platforms and exchanges failed and went bankrupt that year. Not only that, giants such as DCG's Grayscale, Binance and Huobi also suffered varying degrees of FUD. Centralized institutions cannot achieve complete transparency. Trust in them depends on the reputation of the founders and the public image of the company's brand. Decentralization, by contrast, is based on "code is law" and "Don't trust, verify!". Nothing new can be built without first breaking the old. Under this revolutionary idea, the myth of centralization has been completely shattered, paving the way for a decentralized future. Decentralized financial services allow users to borrow, trade and manage assets without intermediaries, enhancing the transparency and accessibility of the financial system.

AstroX ME wallet is a highly anticipated wallet app. The ME wallet can securely and reliably store and manage digital assets, allowing users to easily manage their IC tokens and various digital assets.

There is also the decentralized NFT market Yumi. Users can create, buy and trade digital artworks, providing artists and collectors with new opportunities and markets.

The IC ecosystem has already emerged with many impressive Dapps, covering social, finance, NFT markets and wallets, providing rich and diverse experiences and services. As the IC ecosystem continues to grow and innovate, we look forward to more excellent applications. There are more interesting projects waiting for you to discover on the official website.

Switzerland is now a prominent "Crypto Valley", where many well-known blockchain projects have been born. DFINITY is the first completely non-profit foundation here.

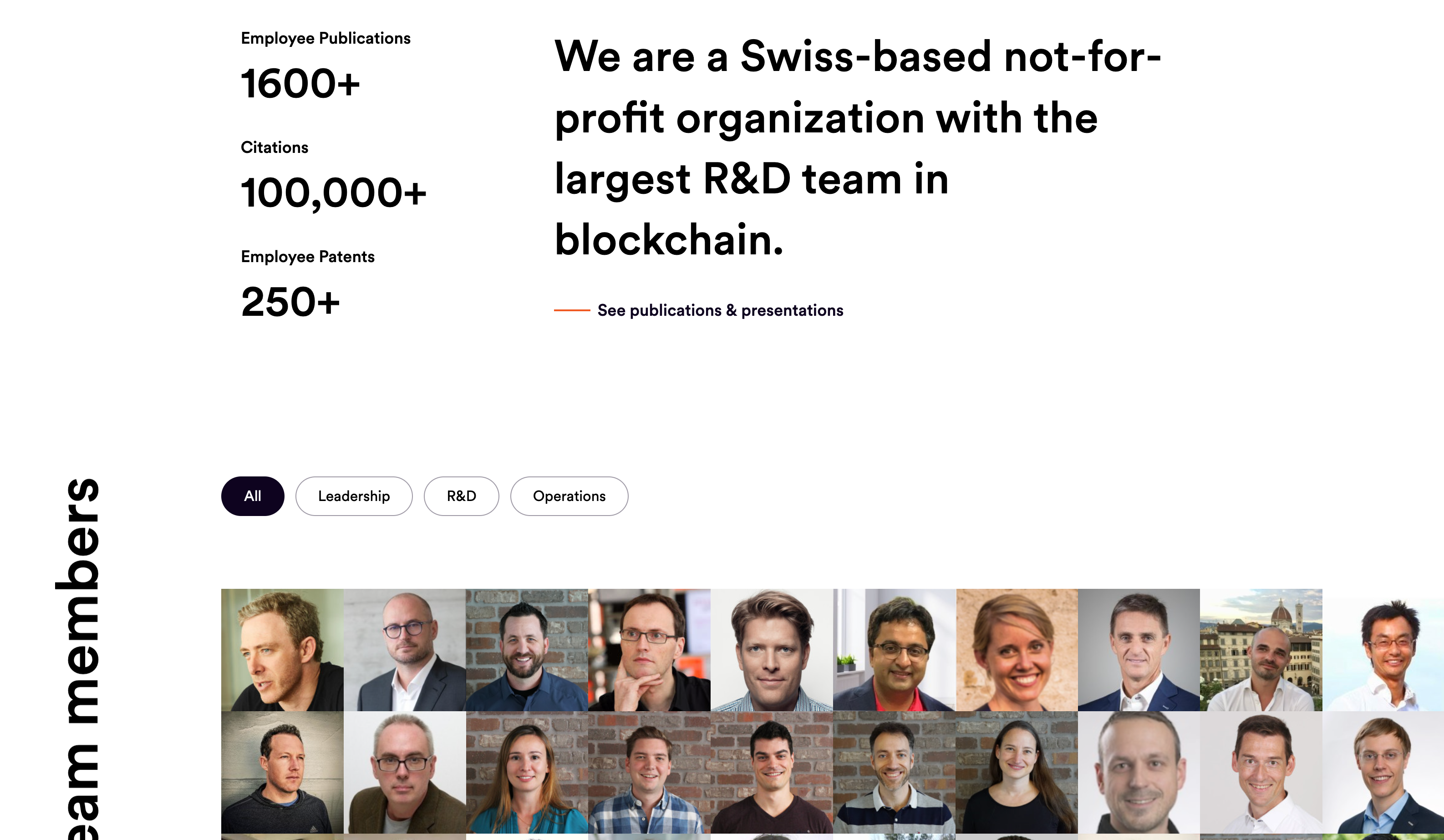

Dominic has assembled a very strong team of blockchain developers, including cryptography, computer science, and mathematics professors, PhDs and postdocs, cryptocurrency experts, senior engineers, and professional managers.

The Internet Computer is the crystallization of 5 years of R&D by top cryptographers, distributed systems experts, and programming language experts. DFINITY currently has close to 100,000 academic citations and over 200 patents.

I believe that blockchain will still be one of the most interesting, influential, and fastest-growing technology fields in the next 10 years. 🚀🚀🚀

Currently, there is no other chain better suited for deploying applications than IC.

This is the story I wanted to tell about Dominic, but his own story is far from over, and has just begun...

As Dominic himself said: "Our mission is to push towards a blockchain singularity, where the majority of the world's systems and services are created using smart contracts, and run entirely on chain, a transformation that will also take years."


Who could have imagined that a blog post from distant 1998 would ignite the "crypto movement" that has been sweeping the world for the past twenty years, fueled by Dominic's passion for unlimited distribution...

That was a new world.

Epilogue

After completing the conclusion, I perceived a faint disturbance. The rustling, rustling sound approached gradually, rendering the entire room utterly silent. The source of the sound remained indistinct and unfathomable - it could have been the hum of the computer's fan, the gentle sway of tree branches outside the window, or simply a figment of my own imagination.

The sound became clearer and clearer. It sounded like it was coming from inside the computer?

I hurriedly pressed my ear against the computer's motherboard, but the sound did not emanate from there. I turned my gaze towards the window - could it be coming from outside? Yet everything outside appeared perfectly normal.

Then the sound came again, louder this time. It was a buzzing sound! That's it!

Suddenly, time came to a standstill. Everything around me froze in place. Neurons expanded and burst, releasing pheromones that catalyzed other neurons. My head began to shake uncontrollably, then suddenly expanded. My eyes bulged like a mouse's, and my ears twisted into pretzels... The sound seemed to be accompanied by the sound of breaking glass, footsteps on the ground, and the sound of birds and dogs barking...

A burst of white light coursed through my mind, quickly swelling into a vast, shimmering expanse of brilliance. All around me, an endless canvas of blue unfurled, adorned with intricate squares and lines of every hue. Then, a radiant spark flickered to life, blossoming into a majestic orb that engulfed all else in its path. As the light dissipated, the blue canvas gave way to an immaculate vista of pristine white.

Now, I don't remember anything.

Maybe it was just a dream.

Or maybe it was something that could change the world.

That's it for now, it's time to sleep. Goodnight.

By the way, the structure of the article is as follows:

If there are any parts you don't understand, feel free to take a break from the main storyline and explore them.

Next, let's delve into the technical architecture of IC.

The Future Has Come

I read through a lot of thinking about blockchain and summarised it in this article, which I hope gives a relatively objective view of blockchain and internet technologies in the future.

Turkeys on the Farm

Blockchain is all the rage these days. It seems like everyone is buying cryptocurrencies.

The quadrennial bull market, digital gold Bitcoin, red-hot IXOs, meme coins with hundredfold increases...

Hold on, let me check the calendar. It's May 2023... Looks like we're almost due for another bull market, if it comes on schedule. Last time was 2020, so maybe this time it'll be 2024.

But don't get too excited, let me tell you a story:

In a farm, there was a group of turkeys. The farmer fed them every day at 11 a.m. One turkey, the scientist of the flock, observed this phenomenon for almost a year without any exception.

So, it proudly announced its great discovery: every day at 11 a.m., food would arrive. The next day at 11 a.m., the farmer came again, and the turkeys were fed once more. Consequently, they all agreed with the scientist's law.

But on Thanksgiving Day, there was no food. Instead, the farmer came in and killed them all.

This story was originally put forth by British philosopher Bertrand Russell to satirize unscientific inductive reasoning and the abuse of induction.

Let's not talk about whether the bull market will come or not.

Instead, let's look at some similar situations from history and see what happened:

During the late '90s Internet bubble, the market experienced several ups and downs. In '96, '97, and '98, there were several fluctuations. The last and largest surge occurred from October '98 to March 2000 when the Nasdaq index rose from just over 2000 points to around 4900 points. This gradual climb instilled a resolute belief in speculators: no matter how badly the market falls, it will always bounce back.

As people went through several bull and bear cycles, this unwavering belief was further reinforced. When the real, prolonged bear market began, they continued to follow their own summarized experience, doubling down and buying at what they thought were the dips...

When the bubble burst, stock prices plummeted more than 50% in just a few days, with most stocks eventually losing 99% of their value and going straight to zero. Many people who had quickly become wealthy by leveraging their investments bet their entire net worth on bottom-picking during the bear market, only to end up losing everything.

The essence of the Internet is to reduce the cost of searching and interacting with information to almost zero, and on this basis, it has given birth to many highly scalable, highly profitable, and monopolistic business models that traditional economists cannot comprehend. However, many projects and ideas from the '90s were launched prematurely, before the necessary hardware and software infrastructure was in place, and before personal computers and broadband internet became widespread. As a result, they ended up failing miserably, like Webvan, founded in 1996 and bankrupt by 2001, with a total funding of around $800 million.

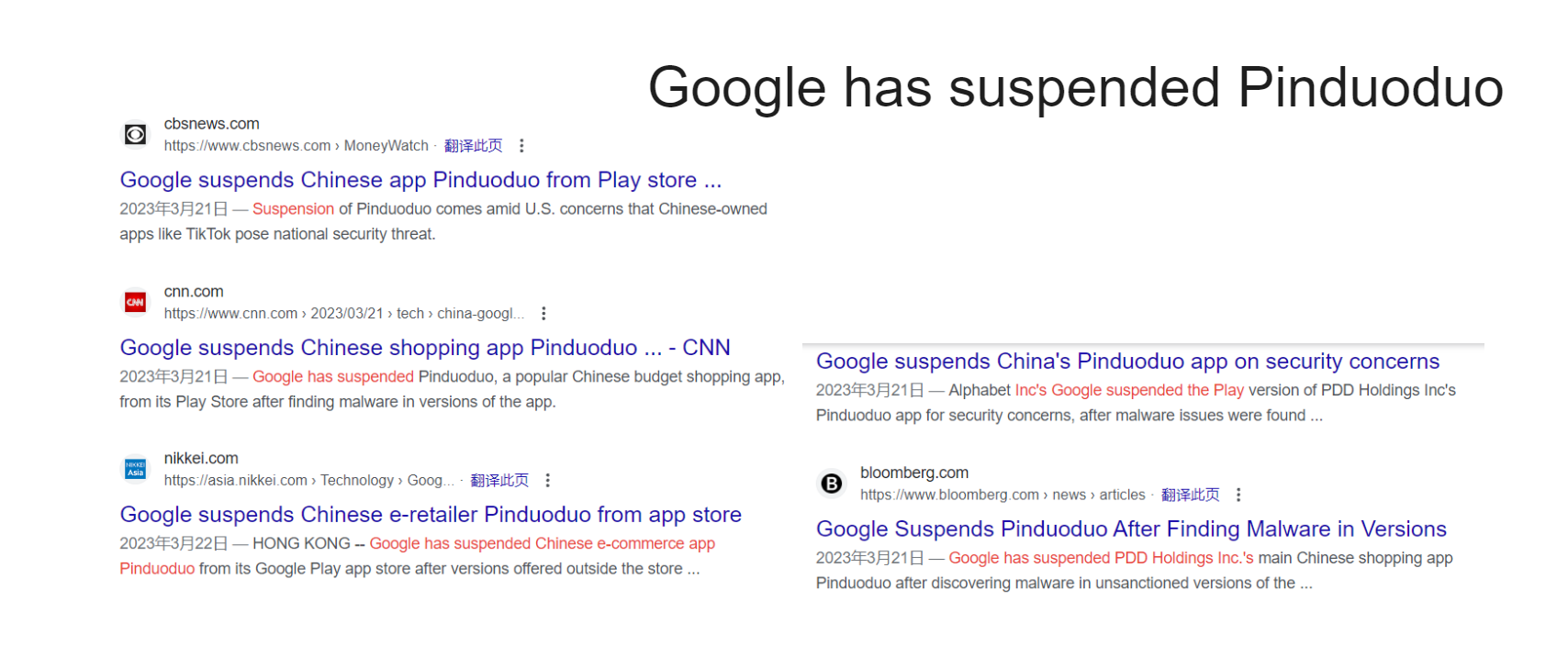

After the dot-com bubble burst in 2001, the maturation of infrastructure and the decrease in various costs led to the emergence of new applications (such as Taobao, YouTube, Netflix, Facebook, Amazon, AWS, iPhone, Uber, TikTok). Their explosive growth and massive scale far surpassed even the most optimistic imaginations.

Similarly, a large number of overly advanced blockchain projects that cannot directly generate value for end users will eventually wither away, giving rise to various pessimistic and negative emotions.

However, once the infrastructure matures, many of the boasts and dreams of the past will ultimately be realized by entrepreneurs who appear at the right time and place.

When the public viewed Bitcoin as an Internet payment system in 2014, its actual throughput could not even support paying for groceries at a supermarket. Ethereum initially called itself "a world computer". Many initially believed that Ethereum could replace Bitcoin because it was programmable. But this is a misconception, and it easily leads to another mistaken view: that some next-generation smart contract platform will be the killer of Ethereum just because it provides more scalability.

Similarly, just as Ethereum cannot replace Bitcoin, the next "cloud service" blockchains are also unlikely to kill Ethereum, but expand adjacent possibilities and carry different applications, utilizing their unique characteristics. This does not mean that Bitcoin and Ethereum have established their position permanently. Bitcoin and Ethereum also have their own existing problems. More advanced technology does not necessarily replace existing technology, but is more likely to create a complex, specialized tech stack.

Today Ethereum's purpose is no longer for general computation on a large scale, but as a battle-tested, slow and secure computer for token-based applications like crowdfunding, lending, digital corporations and voting - a global accounting system for those worlds. Even if the Ethereum network is a bit congested, Gas is super expensive and it takes a few minutes to complete a transaction, these Dapps can still compete with banks, shareholder voting and securities firms.

These smart contracts allow completely free transactions between strangers without going through centralized institutions, and they make the large staffs of centralized institutions redundant. Uniswap, an automated market maker on Ethereum, has only about 20 employees and is currently worth about $20 billion. In comparison, Intercontinental Exchange, the parent company of the New York Stock Exchange, has nearly 9,000 employees and a market value of over $60 billion. Renowned blockchain investor Raoul Pal estimates that the global user base of blockchains is currently growing at over 110% per year, while global Internet users grew only 63% in 1997. Even following the trajectory of Internet development after 1997, the global user base of blockchains will grow from the current approximately 200 million to around 4.3 billion by 2030.

Blockchain technology's essence is to reduce the barriers and costs of value exchange between global individuals and machines to almost zero.

However, Ethereum currently does not achieve this vision and ultimately still requires the maturity and popularity of various infrastructures.

Imagine what it would look like if blockchain technology successfully solves scalability, security and usability issues. Eventually there may be only a few public chains that can represent the future and go worldwide, carrying the decentralized dreams of people around the world.

Blockchains sit at the intersection of three major themes in modern society: technology, finance and democracy. Blockchains are a technology that uses advances in encryption and computing to "democratize" money and many aspects of our daily lives. Their purpose is to improve the way our economy works and make it easier for us to control our own information and data, and ultimately to take control of our lives. In this tech age, this is what democracy should look like. We often hear people complain about tech giants (like Apple, Google and Facebook) snooping on our private data. The best way to solve this problem is to give power back to the people.

The Coachman and the Driver

History repeats itself in a spiral ascent:

Now everyone can drive cars as long as they have money to buy one. 🚗

In the past, everyone could ride horses as long as they had money to buy one. 🐎

So the car is just a means of transportation for this era. In the future, people may rarely drive cars. Similar to how people ride horses now, only at riding clubs and some tourist spots. After self-driving technology matures, people will not need to drive at all, and those who can drive will become fewer and fewer. One has to go to racetracks to experience the joy of driving.

In ancient times there were common horses, thoroughbreds, war horses, and racetracks.

Now there are common cars, supercars, tanks, and racetracks.

Horses did not disappear, they were just replaced by cars.

When cars first appeared, they were looked down upon and hated for a long time due to noise, slow speed, frequent accidents, often breaking down, lack of gas stations, and lack of parking lots. Later, as more roads were built, more gas stations emerged, cars improved in quality, traffic rules were promoted, and horse carriages were completely replaced.

Tesla today is in the same position: it runs out of power quickly; it catches fire; the autopilot drives into the sea or into trees; charging stations are scarce; and brake failures are blown up by the media until the car becomes a laughing stock and a meme. But once battery life extends, self-driving algorithms improve, charging stations become more common, and charging time shortens, what can gasoline cars do? 😄 Moreover, electricity will become cheaper and cheaper with technological progress, through solar, wind, geothermal, and eventually controlled nuclear fusion...

Negative media coverage aimed at attracting eyeballs is also an obstacle to people recognizing new things objectively. To attract attention, the media reports negative news about new inventions far more often than positive news. When the iPhone came out, the media first ridiculed Apple fans as brainwashed, then accused people of selling kidneys to buy phones, and then attacked the poor signal. Every time a Tesla catches fire, experiences brake failure, or gets into an accident, there are always people who are inexplicably happy about it, without objectively comparing the accident rate with other cars. While people curse the various problems of shared bicycles, they fail to notice that shared bicycles are changing the commuting habits of urbanites, reducing gasoline consumption, and even affecting real estate prices.

Constant bombardment of negative news makes it almost impossible for most people to really delve into and study the full logic behind new things. Judging that a technology has no future because of its current limitations is like continuing to burn oil lamps for fear of the danger of electric shock. In fact, oil lamps also have the risk of fire! If we can remain objective and curious, we will have many different views on the world, especially in this era of rapid technological development.

Similarly, many people do not understand the underlying operating principles of IC, do not know the innovations of Chain Key cryptography, do not know that IC solves the scalability problem, do not know BLS threshold signatures, and do not know IC's consensus algorithm. It is difficult to truly understand the concept of IC because it is a completely new and complex system without analogies. Even for those with a computer background, it takes months to fully and deeply understand all the concepts by delving into various forums, collecting materials, and keeping up with new developments every day. If you are just looking for short-term benefits and blindly following the trend, investing in ICP at the price peak and then labeling this thing as "scam" or "garbage" because of huge losses and lack of understanding is very natural. All the losing retail investors become disappointed and gradually join the FUD army on social media, leading more people who do not understand IC to develop prejudices. More importantly, people often do not know the information they missed due to ignorance. Individual prejudices are common. Each person has different learning and life experiences and different thinking models, which will automatically ignore things that they are not interested in or do not understand.

For more related reading: Were attacks on ICP initiated by a master attack — multi-billion dollar price manipulation on FTX? How The New York Times promoted a corrupt attack on ICP by Arkham Intelligence ,Interview with DFINITY: ICP is a victim of SBF's capital operation; much of the future of Web3 is in Asia.

Human society's productivity moves forward in cycles:

A new technology emerges → A few people first come into contact with and try it → More people are hired to develop and maintain this technology → Organizations (companies or DAOs) grow and develop → More and more people start to try it and improve productivity → Until another new technology sprouts, trying to solve problems and facilitate life in a more advanced and cutting-edge way → Old organizations gradually decline and die (those that change faster die faster, those that do not change just wait to die, and a few organizations can successfully reform) → A large number of employees lose their jobs and join new jobs → New organizations continue to grow and develop...until one day! People really don't have to do anything at all, fully automated, abundant materials...life is left with enjoyment only~

The essence of blockchain technology is that innovation can be carried out by everyone without the review and approval of authoritative institutions. Anyone can protect their rights and interests through blockchain technology without infringement by the powerful. Everyone is equal in cryptography. As long as the private key is properly kept, personal assets can be fully controlled by themselves without relying on anyone's custody.

Visa processes about 2,400 transactions per second (TPS); Bitcoin manages about 7. Even at that slow speed, Bitcoin has won the support of enthusiasts, organizations and some governments around the world. If centralized applications such as Telegram and Dropbox could be moved onto a decentralized blockchain, what would that look like? Productivity would definitely improve.

Despite the widespread application and development of blockchain technology in recent years, there are still some obvious drawbacks. One of the main issues is scalability. As blockchain technology is widely applied in areas such as digital currencies, smart contracts, and supply chain tracing, the transaction and data volume in blockchain networks are growing rapidly, presenting significant challenges to the scalability of the blockchain. The current blockchain architecture faces problems such as low throughput and high latency, making it difficult to support large-scale application scenarios. This is because the traditional blockchain technology adopts distributed consensus algorithms, which require all nodes to participate in the process of block verification and generation, thus limiting the network throughput and latency. Additionally, as blockchain data is stored on each node, data synchronization and transmission can also become bottlenecks for scalability.

Therefore, solving the scalability problem of blockchain has become one of the important directions for the development of blockchain technology. Researchers have proposed many solutions to improve the throughput and latency performance of blockchain networks, such as sharding technology, sidechain technology, and Lightning Network. These technologies are designed to decompose the blockchain network into smaller parts, allowing for separate processing of transactions and data, and can be interoperable through cross-chain communication protocols. Through these innovative technologies, blockchain scalability can be improved to better meet the needs of actual application scenarios.

Once blockchain solves the problems of scalability and throughput and achieves breakthroughs in its underlying technology, it may become the new infrastructure of the Internet and reshape the future Internet landscape.

DFINITY chose to reinvent blockchain's underlying technology, developing a superior decentralized network service through innovation and fostering more dapps to cultivate an entirely new decentralized Internet ecosystem.

This realm is so new, and spans so much knowledge, that no one person has mastered it all. Success comes from observing broadly and learning ceaselessly; only then do insights emerge that are inaccessible and incomprehensible to most.

Continue reading Dominic's story.

To talk about this, we have to start before Bitcoin.

If you're not familiar with Bitcoin, you can take a look at this first.

Bitcoin is completely virtual. It has no actual value and cannot create any value; it's just a virtual currency. Why do people go crazy sending money to buy Bitcoin? Let's dig a bit deeper: why does Bitcoin even exist?

Bitcoin was born against the backdrop of the 2008 financial crisis. The financial crisis spread globally, with no country immune. Fiat currencies became less reliable. Imagine the underlying relationship: governments issue legal tender backed by national credit. But the world is not always stable and peaceful, with wars, natural disasters and financial crises impacting society, which in turn affects the economy.

About wars: Society relies on governments, governments control armies, armies maintain society. Nations fund their armies through taxation. Conflicts between nations can arise if the benefits outweigh the costs, leading to war. Taxpayers fund the armies to launch wars. When the cannons boom, gold weighs a thousand taels. Some nations profit, some lose money.

About finance: Most economies in the world, such as those of Europe and North America, go through economic cycles. Small cycles make up large cycles, like a sine wave. Periodic financial crises are like a sword hanging overhead; we can only pray: "Dharma, spare me!"

About natural disasters: They are difficult to predict. Though technological advances have reduced their impact on humans, threats remain for the next few decades. Viruses, floods, volcanoes - the economy suffers in response.

Since the economy will be impacted one way or another, can we find a type of money that is not devalued? It doesn't have to withstand all crises until mankind's destruction; it just needs to survive some natural disasters and human troubles. Money, I beg you, don't let a financial crisis on one end of the Earth affect people's normal lives on the other end.

There really is such a magical thing.

This kind of money originates from an "anarchist" idea. The earlier B-money proposal already reflected this idea.

The basic position of "anarchism" is to oppose all forms of domination and authority, including government, to advocate mutual-aid relations between individuals, and to focus on individual freedom and equality. For anarchists, the word "anarchy" does not mean chaos, emptiness, or moral degeneration, but a harmonious society formed by free individuals who voluntarily come together to build mutual assistance, autonomy and resistance to dictatorship: an autonomous system with authority but without government. They believe the root of the problem lies in "government", in the current top-down pyramid structure. The layer-upon-layer management model not only suffers from inaction, corruption and waste; more importantly, there is always an organization above managing and maintaining order, which can easily lead to turmoil when major conflicts of interest arise.

- Looking further back, primitive society was very peaceful. People lived together spontaneously in large families, hunted together and cooperated. There was no so-called government or nation, only small-scale wealth accumulation and division of labor, and no laws or police. Because primitive society had no private property, everyone was a piece of meat that could run and jump, naked.

- Later, with private property came violence. The reason is simple: you can profit from it. Where wealth can be plundered by force, there will be "brave men" who do it for profit.

- Then, when there are many such "profitable" organizations, someone will stand up to protect everyone. A violent organization emerges to prevent violence: the army. Everyone pays a little money (taxes) in exchange for protection. People form governments to provide protection services more efficiently. People deposit their money in banks because banks can provide protection: vaults, safe deposit boxes, security guards, police, and so on. This system relies on the government to operate; banks and police either accept government regulation or are established by the government. My money is not on me, it's in the bank, haha, you can't rob it.

- In this way, two large organizations (governments) will engage in larger-scale wars over conflicts of collective interest if the benefits outweigh the costs of launching the war. They recruit more armies to protect collective property... The violent conflicts become larger and larger. Where is the peace? Nuclear deterrence.

By the 21st century, people's property, along with their social lives and entertainment, gradually shifted to the Internet. So the organizations that protect people's property also migrated online: online banks, Alipay.

No problem, leave your money with us, haha. Just pay a small fee, haha. The protection organization keeps it for everyone; if you lose money, take it up with the platform! Wars have also become online attacks and defenses: protection organizations and hackers fight back and forth, desperately holding on to everyone's money.

In the past, protection organizations only provided physical protection and would not station troops in your home to guard you. But it's different online: all your data is uploaded to the server in one go. My data is also my asset! The data contains privacy, property, what you bought today, who you like to chat with, what you like to watch, what you want to eat tonight... All of it can be analyzed from the data. This is equivalent to a "network army" stationed in your home, monitoring your every move day and night. And how the data is processed is up to them. Because the protection organization controls the server, if they decide some data is undesirable or disadvantageous to someone, they can delete it directly without your consent.

Is it possible to flatten the "pyramid" and build a system of "personal sovereignty" for each person to represent equal and independent individuals, not dependent on protection organizations?

It is possible. There is a way for you to hold your own private property safely by yourself. You don't need a bank to protect your wealth, you don't need a safe, and you don't need protection organizations. You can keep it yourself.

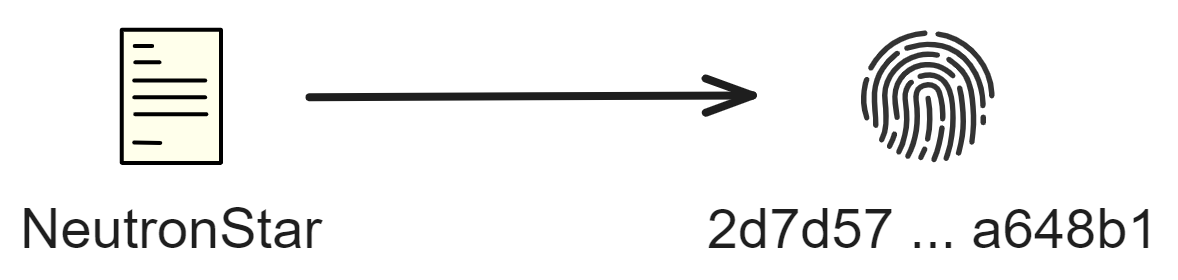

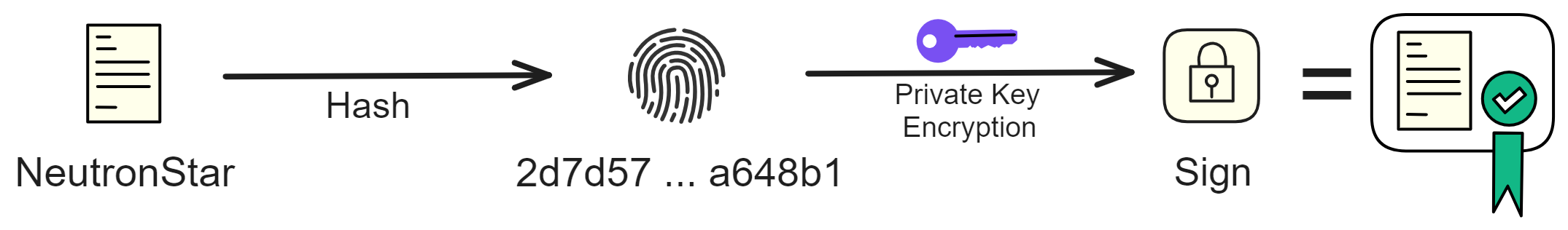

But how can you protect your property yourself? The answer is modern cryptography, built on mathematics!

Bombs can blow open safes, but they cannot blow open cryptography!

Bitcoin is just that kind of money! You generate your own private key, and as long as the private key is not leaked, no one can take your bitcoins from you. Receiving and sending bitcoins are peer-to-peer transfers that bypass third-party centralized platforms (banks). Of course, if you lose the private key, you'll never be able to recover your bitcoins.

Your own private key, control over your own data, no dependence on third parties. Perfect.

Of course, cryptocurrency was not Satoshi Nakamoto's idea alone.

Wei Dai mentioned Tim May, who, together with Eric Hughes and John Gilmore, started the Cypherpunk research group in the San Francisco Bay Area in 1992. At the first meeting, the word "cypherpunk" was coined by combining "cipher" and "cyberpunk."

They found in cryptography and algorithms a potential solution to the excessive centralization of the Internet. Cypherpunks believed that reducing the power of governments and companies required new technologies, better computers and more cryptographic mechanisms. However, their plans faced an insurmountable obstacle: ultimately, all of their projects needed financial support, and governments and banks controlled that money. If they wanted to realize their plans, they needed a form of currency not controlled by the government. So the race for cryptocurrency began. But the early results were the opposite of what they hoped for: all the initial efforts failed, including the legendary cryptographer David Chaum's ECash, as well as other attempts such as Hashcash and Bit Gold.

Wei Dai is a Chinese American computer engineer and an alumnus of the University of Washington. In the late 1990s and early 2000s, he worked in the cryptography research group at Microsoft. During his time at Microsoft, he was involved in researching, designing and implementing cryptographic systems. Previously, he was a programmer at TerraSciences in Massachusetts.

In 1998, he published an informal white paper called "B-money, an anonymous distributed electronic cash system" on his personal website weidai.com. He is known for his contributions to cryptography and cryptocurrencies. He developed the Crypto++ cryptographic library, created the B-Money cryptocurrency system, and co-proposed the VMAC message authentication code algorithm. Wei Dai's pioneering work in the blockchain and digital currency field laid the foundation for later Bitcoin technology and was of milestone significance.

In November 1998, just after graduating from university, he proposed the idea of B-money to the cypherpunk community: "Effective cooperation requires an exchange medium (money) and a way to enforce contracts. In this paper, I describe a protocol whereby untraceable anonymous entities can cooperate more efficiently... I hope this protocol can help move crypto-anarchy forward, both theoretically and practically." The design goal of B-money was an anonymous, distributed electronic cash system.

In the eyes of the cypherpunk community, the problem with the existing monetary system was that the government could control the flow of money through policy, and using institutional services (banks or Alipay) required exposing one's identity. So Dai proposed two alternatives (proof of work and distributed bookkeeping).

- Proof of work creates money. Anyone can work on a computational problem, and whoever finds the answer broadcasts it to the entire network. After verifying it, each network node adjusts that person's balance in its own copy of the account book by an amount of currency equal in value to the work done.

- Distributed bookkeeping tracks transactions. Neither the sender nor the receiver has a real name, only public keys. The sender signs with the private key and then broadcasts the transaction to the entire network. Each time a new transaction is made, everyone updates their own copy of the account book, so that no one can block the transaction, and the privacy and security of all users are preserved.

- Transactions are executed through contracts. Each contract requires the participation of an arbitrator (a third party). Dai designed a complex reward and punishment mechanism to prevent cheating.
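The "proof of work creates money" step can be illustrated with a minimal Python sketch. B-money itself does not prescribe a particular puzzle; the SHA-256 leading-zero-bits puzzle below is a Hashcash-style stand-in chosen for illustration, and the names `solve`, `verify`, `credit_if_valid`, the `"alice-pubkey"` identifier and the one-coin reward are all hypothetical.

```python
import hashlib


def _digest_value(challenge: str, nonce: int) -> int:
    """Hash challenge and nonce together and read the digest as an integer."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    return int.from_bytes(digest, "big")


def verify(challenge: str, nonce: int, difficulty_bits: int) -> bool:
    """Cheap check any node can run: does the digest start with enough zero bits?"""
    return _digest_value(challenge, nonce) < (1 << (256 - difficulty_bits))


def solve(challenge: str, difficulty_bits: int) -> int:
    """Expensive search: try nonces in order until one passes verification."""
    nonce = 0
    while not verify(challenge, nonce, difficulty_bits):
        nonce += 1
    return nonce


def credit_if_valid(ledger: dict, pubkey: str, challenge: str,
                    nonce: int, difficulty_bits: int, reward: int = 1) -> bool:
    """Each node updates its own copy of the account book only after verifying the work."""
    if not verify(challenge, nonce, difficulty_bits):
        return False
    ledger[pubkey] = ledger.get(pubkey, 0) + reward
    return True


if __name__ == "__main__":
    nonce = solve("b-money-demo", 12)      # about 4,096 hashes on average
    my_ledger = {}                         # this node's local account book
    credit_if_valid(my_ledger, "alice-pubkey", "b-money-demo", nonce, 12)
    print(nonce, my_ledger)
```

The asymmetry is the point: finding the nonce takes thousands of hash attempts, while every node can check it with a single hash before crediting the account.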

We can see the connection with Bitcoin. Money is created through proof of work (PoW), and the bookkeeping is distributed across a peer-to-peer network. All transactions must be executed through contracts. However, Dai believed that his first version of the solution could not be truly applied in practice, "because it requires a very large real-time tamper-resistant anonymous broadcast channel." In other words, the first solution could not solve the double spending problem, while Bitcoin solved the Byzantine Generals problem through incentives.

Dai later explained in the cypherpunk community: "B-money is not yet a complete viable solution. I think B-money can at most provide an alternative solution for those who do not want or cannot use government-issued currencies or contract enforcement mechanisms." Many of B-money's problems remained unresolved, or at least were never pointed out. Perhaps most importantly, its consensus model is not very robust. After proposing B-money, Dai did not continue trying to solve these problems. He went to work for TerraSciences and Microsoft.

But his proposal was not forgotten. The first reference in the Bitcoin white paper was B-money. Shortly before the publication of the Bitcoin white paper, Adam Back of Hashcash suggested that Satoshi Nakamoto read B-money. Dai was one of the few people Satoshi Nakamoto contacted personally. However, Dai did not reply to Satoshi Nakamoto's email, and later regretted it.

He wrote on LessWrong, "This may have been partly my fault, because when Satoshi emailed me asking for comments on his draft paper, I didn't reply. Otherwise I might have been able to successfully persuade him not to use a fixed money supply."

B-money was another exploration by the cypherpunk community to develop an independent currency in the digital world. In his honor, two cryptocurrencies were named "Dai" and "Wei" respectively, of which Wei was named by Vitalik in 2013 as the smallest unit of Ethereum.

However, with each new attempt and each new failure, the "cypherpunks" gained a better understanding of the difficulties they faced. Building on these many earlier attempts, Satoshi Nakamoto learned from the problems his predecessors had encountered and published the Bitcoin white paper on October 31, 2008.

As Satoshi Nakamoto said in his first email on this issue, "I've been working on a new electronic cash system that's fully peer-to-peer, with no trusted third party." He saw his core contribution as a virtual currency managed and maintained by its users: governments and businesses have almost no say in how the currency operates, and the system is completely decentralized and run by users.

Satoshi Nakamoto was well aware of the inglorious history of cryptocurrencies. In an article published in February 2009, shortly after the release of Bitcoin, Satoshi Nakamoto mentioned Chaum's work but distinguished Bitcoin from it. He noted that many people mistakenly regarded electronic money as a lost cause because all the companies attempting it had failed since the 1990s. In his view, those digital currencies failed because their systems were not decentralized, and Bitcoin was the first attempt to build a decentralized, trustless virtual currency system.

To ensure trust between participants, Satoshi Nakamoto designed a public chain that anyone can inspect to confirm that their money still exists. To protect privacy, Bitcoin uses a public/private key system that lets users tell others their account without revealing their identity. To incentivize users to maintain the system, Bitcoin introduced the concept of mining, in which users create new transaction blocks and are rewarded with newly minted bitcoins. To prevent tampering, each block is cryptographically linked to the previous block, making the transaction history essentially immutable. Bitcoin's real innovation is that its monetary system is completely decentralized: there is no ultimate decision maker or authority to resolve disputes or determine the direction of the currency's development. Instead, users collectively determine Bitcoin's future.
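The idea that blocks are cryptographically linked to previous blocks can be shown with a small Python sketch. This is a simplified illustration, not Bitcoin's actual block format: the dictionary layout and the names `block_hash`, `append_block` and `is_valid` are assumptions made for the example, and mining, signatures and Merkle trees are left out.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """Hash a block's full contents, including the hash of its predecessor."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_block(chain: list, transactions: list) -> None:
    """Add a block that commits to the hash of the current last block."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({
        "index": len(chain),
        "prev_hash": prev_hash,
        "transactions": transactions,
    })


def is_valid(chain: list) -> bool:
    """Recompute every link; an edit to an earlier block breaks the link after it."""
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


if __name__ == "__main__":
    chain = []
    append_block(chain, ["alice pays bob 1"])
    append_block(chain, ["bob pays carol 1"])
    append_block(chain, ["carol pays dave 1"])
    print(is_valid(chain))   # True

    # Rewriting history in block 1 invalidates the link stored in block 2.
    chain[1]["transactions"] = ["bob pays mallory 100"]
    print(is_valid(chain))   # False
```

To hide the change, an attacker would have to recompute every later block as well, which in Bitcoin means redoing the proof of work for each of them.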

In the eyes of cypherpunks, the main problem of the Internet age is that governments and companies have become too powerful, posing a serious threat to individual privacy. In addition, the US government and companies abuse their power and status, charging consumers too much and imposing heavy taxes. The answer lies in decentralizing power: distributing power and decision making from the few to the many. But before Bitcoin appeared, it was unclear how to achieve this, and Satoshi Nakamoto provided the solution.

Cypherpunks remain vigilant about these threats. They are trying to weaken the surveillance capabilities of governments and companies by creating a set of privacy-enhancing programs and methods, including strong cryptography, secure email, and cryptocurrencies. Their ultimate goal is to decentralize decision making on the Internet. Cypherpunks do not concentrate power in the hands of a few but seek to distribute it to the masses so that everyone can decide together how the entire system should operate.